LUIS.AI: Automated Machine Learning for Custom Language Understanding

This blog post was co-authored by Riham Mansour, Principal Program Manager, Fuse Labs.

Conversational systems are rapidly becoming a key component of solutions such as virtual assistants, customer care, and the Internet of Things. By conversational systems, we mean a computer’s ability to understand human speech and act on what the user meant. What’s more, these systems won’t rely on voice and text alone. They’ll use sight, sound, and feeling to process and understand interactions, further blurring the line between the digital sphere and the reality in which we live.

Chatbots are a popular example of conversational systems: they maintain a conversation with a user in natural language, understand the user’s intent, and send responses based on the organization’s business rules and data. They use Artificial Intelligence to process language, which lets them decipher spoken or written questions and respond with appropriate information or direction. Many customers first experienced chatbots through dialogue boxes on company websites. Voice assistants such as Cortana, Siri, and Amazon’s Alexa interact with consumers verbally. Businesses increasingly use chatbots to augment their customer service.

Language understanding (LU) is a central component of conversational services such as bots, IoT experiences, analytics, and others. In a spoken dialog system, LU converts the words in a sentence into a machine-readable meaning representation, typically indicating the intent of the sentence and any entities present. For example, consider a physical fitness domain, with a dialog system embedded in a wearable device like a watch. This dialog system could recognize intents like StartActivity and StopActivity, and entities like ActivityType. For the user input “begin a jog”, the goal of LU is to identify the intent as StartActivity and the entity as ActivityType = “jog”.
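
To make the idea of a machine-readable meaning representation concrete, here is a minimal Python sketch of the fitness example. The `Interpretation` class and the `dispatch` function are hypothetical names introduced only for illustration, and the interpretation of “begin a jog” is written by hand rather than predicted by a model.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Interpretation:
    """Machine-readable meaning of one utterance (hypothetical structure)."""
    intent: str
    entities: Dict[str, str] = field(default_factory=dict)


# Hand-written result for the utterance "begin a jog"; a real LU model
# would predict this instead of having it hard-coded.
begin_a_jog = Interpretation(intent="StartActivity",
                             entities={"ActivityType": "jog"})


def dispatch(result: Interpretation) -> str:
    """Turn an interpretation into a device action (illustrative only)."""
    if result.intent == "StartActivity":
        activity = result.entities.get("ActivityType", "activity")
        return f"starting {activity}"
    if result.intent == "StopActivity":
        return "stopping current activity"
    return "unrecognized intent"


print(dispatch(begin_a_jog))
```

The point of the representation is that the application code branches on the intent name and entity values, never on the raw wording of the utterance.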

Historically, there have been two options for implementing LU: machine learning (ML) models and handcrafted rules. Handcrafted rules are accessible to general software developers, but they are difficult to scale up and do not benefit from data. ML-based models are trained on real usage data, generalize to new situations, and are more robust. However, they require rare and expensive expertise, access to large sets of data, and complex ML tools. ML-based models are therefore generally employed only by organizations with substantial resources.
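
As a rough illustration of why handcrafted rules are easy to write but hard to scale, consider the sketch below. The regular expressions and the `rule_based_lu` function are hypothetical and written only for this example; they are not part of LUIS.

```python
import re

# Hypothetical handcrafted rules: one regular expression per intent.
RULES = {
    "StartActivity": re.compile(
        r"\b(begin|start)\b.*\b(?P<ActivityType>jog|run|swim)\b"),
    "StopActivity": re.compile(r"\b(stop|end)\b"),
}


def rule_based_lu(utterance):
    """Return (intent, entities) for the first rule that matches."""
    text = utterance.lower()
    for intent, pattern in RULES.items():
        match = pattern.search(text)
        if match:
            # Keep only the named groups that actually captured something.
            entities = {k: v for k, v in match.groupdict().items() if v}
            return intent, entities
    return None, {}


print(rule_based_lu("begin a jog"))
print(rule_based_lu("I feel like going for a jog"))
```

The first utterance matches a rule; the second expresses the same goal in different words and falls through. Every new phrasing needs another hand-written pattern, which is the scaling problem that models trained on real usage data are meant to avoid.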

In an effort to democratize LU, Microsoft’s Language Understanding Intelligent Service (LUIS), shown in Figure 1, aims to enable software developers to create cloud-based machine-learning LU models specific to their application domains, without ML expertise. It is offered as part of the Microsoft Azure Cognitive Services Language offering. LUIS allows developers to build custom LU models iteratively, with the ability to improve models based on real traffic using advanced ML techniques. LUIS technologies capitalize on Microsoft’s continuous innovation in Artificial Intelligence and its applications to natural language understanding, with research, science, and engineering efforts dating back at least 20 years. In this blog, we dive deeper into the LUIS capabilities that enable intelligent conversational systems. We also highlight some customer stories that show how large enterprises use LUIS as an automated AI solution to build their LU models. This blog aligns with the December 2017 announcement of the general availability of our conversational AI and language understanding tools with customers such as Molson Coors, UPS, and Equadex.

Figure 1: Language Understanding Service

Building a Language Understanding Model with LUIS

A LUIS app is a domain-specific language model designed by you and tailored to your needs. LUIS is a cloud-based service that your end users can use from any device. It supports 12 languages and is deployed in 12 regions across the globe, making it an attractive solution for large enterprises that have customers in multiple countries.

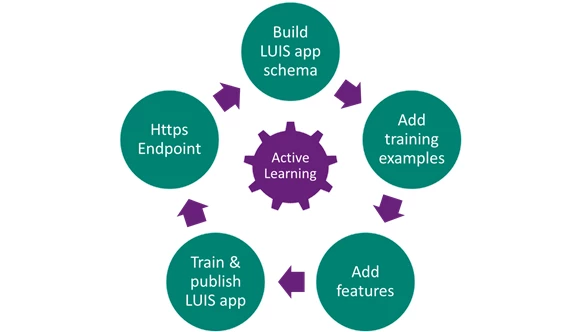

You can start with a prebuilt domain model, build your own, or blend pieces of a prebuilt domain with your own custom information. Through a simple user experience, developers start by providing a few example utterances and labeling them to bootstrap an initial, reasonably accurate application. The developer then trains and publishes the LUIS app to obtain an HTTP endpoint on Azure that can receive real traffic. Once your LUIS application has endpoint queries, LUIS uses active learning to help you improve individual intents and entities that are not performing well on real traffic. In the active learning process, LUIS examines all the endpoint utterances and selects the ones it is unsure of. If you label these utterances, train, and publish, LUIS identifies utterances more accurately. We highly recommend building your LUIS application in multiple short, fast iterations, using active learning to improve individual intents and entities until you obtain satisfactory performance. Figure 2 depicts the LUIS application development lifecycle.

Figure 2: LUIS Application Development Lifecycle

After the LUIS app is designed, trained, and published, it is ready to receive and process utterances. The LUIS app receives the utterance as an HTTP request and responds with extracted user intentions. Your client application sends the utterance and receives LUIS’s evaluation as a JSON object, as shown in Figure 3. Your client app can then take appropriate action.

Figure 3: LUIS Input Utterances and Output JSON
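
The request and response round trip can be sketched in Python as follows. Treat the details as assumptions: the URL shape and the JSON field names (`topScoringIntent`, `entities`, and so on) are modeled on the v2.0 prediction endpoint, the sample response is hand-written rather than captured from the service, and `build_query_url` and `interpret` are hypothetical helper names. Copy the real endpoint URL from your app’s publish page rather than relying on this pattern.

```python
import json
from urllib.parse import urlencode


def build_query_url(region, app_id, key, utterance):
    """Build a GET URL for a published app (assumed v2.0-style shape)."""
    base = (f"https://{region}.api.cognitive.microsoft.com"
            f"/luis/v2.0/apps/{app_id}")
    return base + "?" + urlencode({"subscription-key": key, "q": utterance})


# Hand-written sample of what a response might look like (assumption).
SAMPLE_RESPONSE = """
{
  "query": "Book a ticket to Paris",
  "topScoringIntent": {"intent": "BookFlight", "score": 0.97},
  "entities": [
    {"entity": "paris", "type": "Location",
     "startIndex": 17, "endIndex": 21, "score": 0.94}
  ]
}
"""


def interpret(response_text, min_score=0.5):
    """Extract the top intent and a type-to-value map of entities."""
    data = json.loads(response_text)
    top = data["topScoringIntent"]
    # Fall back to "None" when the model is not confident enough.
    intent = top["intent"] if top["score"] >= min_score else "None"
    entities = {e["type"]: e["entity"] for e in data.get("entities", [])}
    return intent, entities


print(build_query_url("westus", "APP_ID", "KEY", "Book a ticket to Paris"))
print(interpret(SAMPLE_RESPONSE))
```

In a real client you would send the URL with an HTTP library and pass the response body to `interpret`. The `min_score` threshold is a design choice for this sketch, not a LUIS setting.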

There are three key concepts in LUIS:

- Intents: An intent represents an action the user wants to perform. It is the purpose or goal expressed in a user’s input, such as booking a flight, paying a bill, or finding a news article. You define and name intents that correspond to these actions. A travel app may define an intent named “BookFlight.”

- Utterances: An utterance is text input from the user that your app needs to understand. It may be a sentence, like “Book a ticket to Paris”, or a fragment of a sentence, like “Booking” or “Paris flight.” Utterances aren’t always well-formed, and there can be many utterance variations for a particular intent.

- Entities: An entity represents detailed information that is relevant in the utterance. For example, in the utterance “Book a ticket to Paris”, “Paris” is a location. By recognizing and labeling the entities that are mentioned in the user’s utterance, LUIS helps you choose the specific action to take to answer a user’s request.
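
The three concepts come together in a labeled example utterance, which is what you provide when bootstrapping an app. The sketch below shows one way to represent such an example in Python. The field names (`intentName`, `entityLabels`, `startCharIndex`, `endCharIndex`) are assumptions loosely modeled on authoring payloads, and `label_entity` is a hypothetical helper; check the Authoring API reference for the exact schema.

```python
def label_entity(text, intent_name, entity_name, entity_text):
    """Build one labeled example: an utterance, its intent, one entity span."""
    start = text.index(entity_text)  # raises ValueError if the span is absent
    return {
        "text": text,
        "intentName": intent_name,
        "entityLabels": [
            {
                "entityName": entity_name,
                "startCharIndex": start,
                # Inclusive end index (an assumption of this sketch).
                "endCharIndex": start + len(entity_text) - 1,
            }
        ],
    }


example = label_entity("Book a ticket to Paris", "BookFlight",
                       "Location", "Paris")
print(example)
```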

LUIS supports a powerful set of entity extractors that enable developers to build apps that can understand sophisticated utterances. LUIS offers pre-built entities for common types that developers often need in their apps, such as dates and times, money, and numbers. Developers can build custom entities based on machine learning algorithms, lexicon-based entities, or a blend of both. Entities created through machine learning can be simple (for example, “organization name”), hierarchical, or composite. Additionally, LUIS lets developers quickly build lexicon-based list entities using recommended entries drawn from large dictionaries mined from the web.

Hierarchical entities span more than one level to model an “Is-A” relation between entities. For instance, to analyze an utterance like “I want to book a flight from London to Seattle”, you need a model that can differentiate between the origin “London” and the destination “Seattle”, given that both are cities. In that case, you build a hierarchical entity “Location” that has two children, “origin” and “destination”.

Composite entities model “Has-A” relation among entities. For instance, to analyze an utterance like “I want to order two fries and three burgers”, you want to make sure that the utterance analysis binds “two” with “fries” and “three” with “burgers”. In this case, you build a composite entity in LUIS called “food order” that is composed of “number of items” and “food type”.
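
The difference between the two relations can be sketched with plain Python data classes. This is only an illustration of the concepts: the class names, the `(kind, value)` tuple format, and the pairing logic in `group_food_orders` are assumptions made for the example and do not describe how LUIS extracts or groups entities internally.

```python
from dataclasses import dataclass


@dataclass
class Location:
    """Hierarchical ("Is-A"): each child is a Location with a specific role."""
    city: str
    role: str  # "origin" or "destination"


@dataclass
class FoodOrder:
    """Composite ("Has-A"): an order has a number of items and a food type."""
    number_of_items: int
    food_type: str


# "I want to book a flight from London to Seattle"
flight = [Location("London", "origin"), Location("Seattle", "destination")]


def group_food_orders(entities):
    """Pair each "number of items" with the "food type" that follows it."""
    orders, pending = [], None
    for kind, value in entities:
        if kind == "number of items":
            pending = value
        elif kind == "food type" and pending is not None:
            orders.append(FoodOrder(pending, value))
            pending = None
    return orders


# "I want to order two fries and three burgers", as flat entities in order.
flat = [("number of items", 2), ("food type", "fries"),
        ("number of items", 3), ("food type", "burgers")]
print(group_food_orders(flat))
```

Without the composite grouping, the application would receive two numbers and two food types with nothing tying “two” to “fries”.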

LUIS provides a set of powerful tools to help developers get started quickly on building custom language understanding applications. These tools are combined with customizable pre-built apps and entity dictionaries, such as calendar, music, and devices, so you can build and deploy a solution more quickly. Dictionaries are mined from the collective knowledge of the web and supply billions of entries, helping your model to correctly identify valuable information from user conversations.

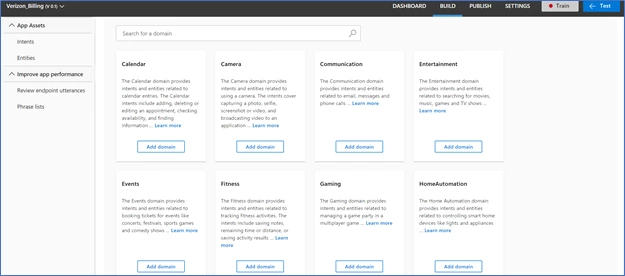

Prebuilt domains, shown in Figure 4, are sets of intents and entities that work together for common categories of client applications. The prebuilt domains have been pre-trained and are ready for you to add to your LUIS app. The intents and entities in a prebuilt domain are fully customizable once you’ve added them to your app. You can train them with utterances from your system so they work for your users. You can use an entire prebuilt domain as a starting point for customization, or just borrow a few intents or entities from a prebuilt domain.

Figure 4: LUIS pre-built domains

LUIS gives developers the ability to actively learn in production, along with guidance on how to make improvements. Once the model starts processing input at the endpoint, developers can go to the Improve app performance tab to continually update and improve the model. LUIS examines all the endpoint utterances, selects the ones it is unsure of, and surfaces them to the developer. If you label these utterances, train, and publish, LUIS processes them more accurately.
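
The post does not describe how LUIS decides which utterances it is unsure of, so the Python sketch below uses a stand-in: simple uncertainty sampling over the top-intent score. The function name `select_for_review`, the score band, and the sample scores are all assumptions made for illustration.

```python
def select_for_review(scored_utterances, low=0.3, high=0.7, limit=10):
    """Pick utterances whose top-intent score is in an uncertain band.

    scored_utterances: iterable of (text, top_intent_score) pairs.
    Returns up to `limit` texts, most uncertain (closest to 0.5) first.
    """
    uncertain = [
        (abs(score - 0.5), text)
        for text, score in scored_utterances
        if low <= score <= high
    ]
    uncertain.sort()
    return [text for _, text in uncertain[:limit]]


# Made-up endpoint traffic with made-up scores.
traffic = [
    ("begin a jog", 0.97),
    ("time for my laps", 0.52),
    ("pause it maybe", 0.35),
    ("stop", 0.99),
]
print(select_for_review(traffic))
```

Confidently scored utterances are skipped, so labeling effort goes to the examples most likely to change the model after the next train and publish cycle.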

LUIS offers two ways to build a model: the Authoring APIs and the LUIS.ai web app. Both give you control of your LUIS model definition, and you can use either one or a combination of both. The management capabilities we provide include models, versions, collaborators, external APIs, testing, and training.

Customer Stories

LUIS enables multiple conversational AI scenarios that were much harder to implement in the past. The possibilities are now vast, including productivity bots like meeting assistants and HR bots, digital assistants that present better service to customers and IoT applications. Our value proposition is strongly evidenced through our customers who use LUIS as an automated AI solution to enable their digital transformation.

UPS recently completed a transformative project that improves service levels via a chatbot called UPS Bot, which runs on the Microsoft Bot Framework and LUIS. Customers can engage UPS Bot in text-based and voice-based conversations to get the information they need about shipments, rates, and UPS locations. According to Katie Duffy, Application Architect at UPS, “Conversation as a platform is the future, so it’s great that we’re already offering it to our customers using the Bot Framework and LUIS.”

Working with Microsoft Services, Dixons Carphone has developed a Chatbot called Cami that is designed to help customers navigate the world of technology. Cami currently accepts text-based input in the form of questions, and she also accepts pictures of products’ in-store shelf labels to check stock status. The bot uses the automated AI capabilities in LUIS for conversational abilities, and the Computer Vision API to process images. Dixons Carphone programmed Cami with information from its online buying guide and store colleague training materials to help guide customers to the right product.

Rockwell Automation has customers in more than 80 countries, 22,000 employees, and reported annual revenue of US $5.9 billion in 2016. To give customers real-time operational insight, the company decided to integrate the Windows 10 IoT Enterprise operating system with existing manufacturing equipment and software, and connect the on-premises infrastructure to the Microsoft Azure IoT Suite. Instead of connecting an automation controller in a piece of equipment to a separate standalone computer, the company designed a hybrid automation controller with the Windows 10 IoT Enterprise operating system embedded next to their industry-leading Logix 5000™ controller engine. The solution eliminates the need for a separate standalone computer and easily connects to the customer’s IT environment and Azure IoT Suite and Cognitive Services including LUIS for advanced analytics.

LUIS is part of a much larger portfolio of capabilities now available on Azure to build AI applications. I invite you to learn more about how AI can augment and empower every developer as shown in Figure 5. We’ve also launched the AI School to help developers get up to speed with all of the AI technologies shown in Figure 5.

Figure 5: Resources for developers to get started with AI technologies.

Dive in and learn how to infuse conversational AI into your applications today.