Who spends their summer at the Microsoft Garage New England Research & Development Center (or “NERD”)? The Microsoft Garage internship seeks out students who are hungry to learn, not afraid to try new things, and able to step out of their comfort zones when faced with ambiguous situations. The program brought together Grace Hsu from Massachusetts Institute of Technology, Christopher Bunn from Northeastern University, Joseph Lai from Boston University, and Ashley Hong from Carnegie Mellon University. They chose the Garage internship because of the product focus—getting to see the whole development cycle from ideation to shipping—and learning how to be customer obsessed.

Microsoft Garage interns take on experimental projects in order to build their creativity and product development skills through hacking new technology. Typically, these projects are proposals that come from our internal product groups at Microsoft, but when Stanley Black & Decker asked if Microsoft could apply image recognition for asset management on construction sites, this team of four interns accepted the challenge of creating a working prototype in twelve weeks.

Starting with a simple request for leveraging image recognition, the team conducted market analysis and user research to ensure the product would stand out and prove useful. They spent the summer gaining experience in mobile app development and AI to create an app that recognizes tools at least as accurately as humans can.

The problem

In the construction industry, it’s not unusual for contractors to spend over 50 hours every month tracking inventory, which can lead to unnecessary delays, overstocking, and missing tools. All together, large construction sites could lose more than $200,000 worth of equipment over the course of a long project. Addressing this problem is an unstandardized mix that typically involves barcodes, Bluetooth, RFID tags, and QR codes. The team at Stanley Black & Decker asked, “wouldn’t it be easier to just take a photo and have the tool automatically recognized?”

Because there are many tool models with minute differences, recognizing a specific drill, for example, requires you to read a model number like DCD996. Tools can also be assembled with multiple configurations, such as with or without a bit or battery pack attached, and can be viewed from different angles. You also need to take into consideration the number of lighting conditions and possible backgrounds you’d come across on a typical construction site. It quickly becomes a very interesting problem to solve using computer vision.

How they hacked it

Classification algorithms can be easily trained to reach strong accuracy when identifying distinct objects, like differentiating between a drill, a saw, and a tape measure. Instead, they wanted to know if a classifier could accurately distinguish between very similar tools like the four drills shown above. In the first iteration of the project, the team explored PyTorch and Microsoft’s Custom Vision service. Custom Vision appeals to users by not requiring a high level of data science knowledge to get a working model off the ground, and with enough images (roughly 400 for each tool), Custom Vision proved to be an adequate solution. However, it immediately became apparent that manually gathering this many images would be challenging to scale for a product line with thousands of tools. The focus quickly shifted to find ways of synthetically generating the training images.

For their initial approach, the team did both three-dimensional scans and green screen renderings of the tools. These images were then overlaid with random backgrounds to mimic a real photograph. While this approach seemed promising, the quality of the images produced proved challenging.

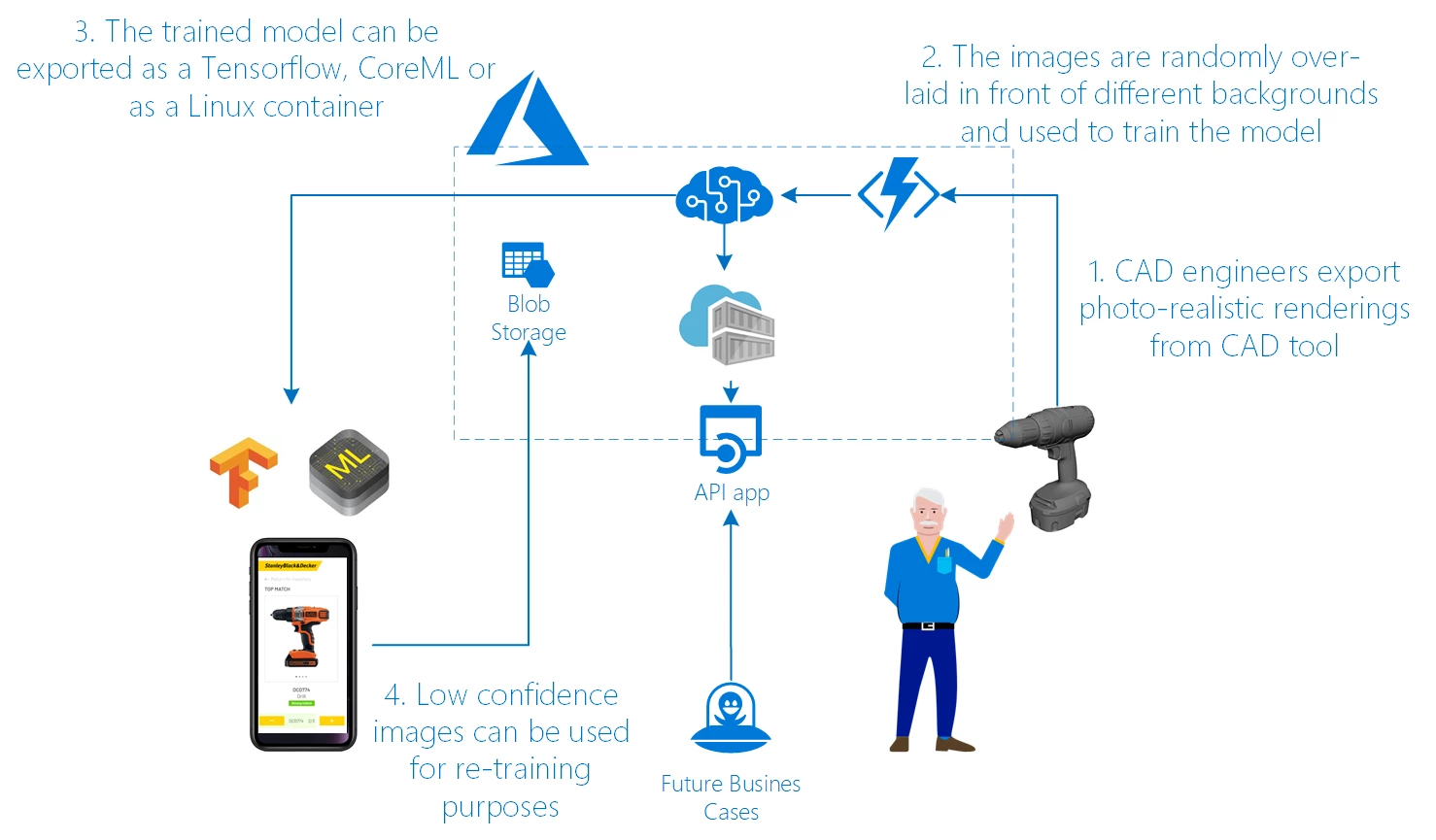

In the next iteration, in collaboration with Stanley Black & Decker’s engineering team, the team explored a new approach using photo-realistic renders from computer-aided design (CAD) models. They were able to use relatively simple Python scripts to resize, rotate, and randomly overlay these images on a large set of backgrounds. With this technique, the team could generate thousands of training images within minutes.

On the left is an image generated in front of a green screen versus an extract from CAD on the right.

Benchmarking the iterations

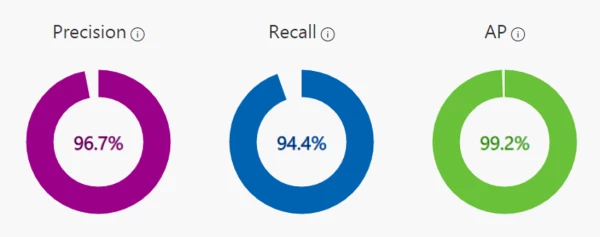

The Custom Vision service offers reports on the accuracy of the model as shown below.

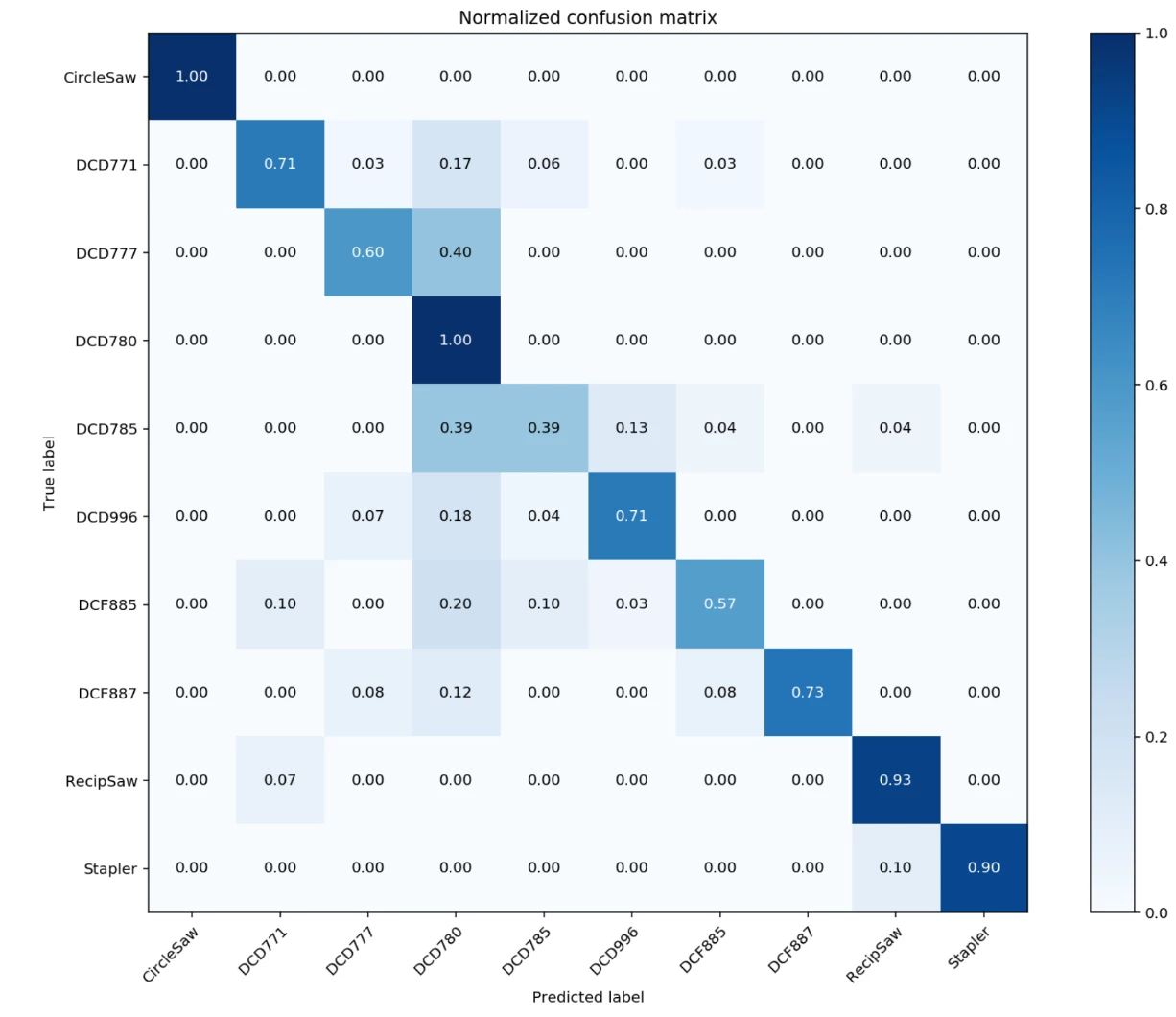

For a classification model that targets visually similar products, a confusion matrix like the one below is very helpful. A confusion matrix visualizes the performance of a prediction model by comparing the true label of a class in the rows with the label outputted by the model in the columns. The higher the scores on the diagonal, the more accurate the model is. When high values are off the diagonal it helps the data scientists understand which two classes are being confused with each other by the trained model.

Existing Python libraries can be used to quickly generate a confusion matrix with a set of test images.

The result

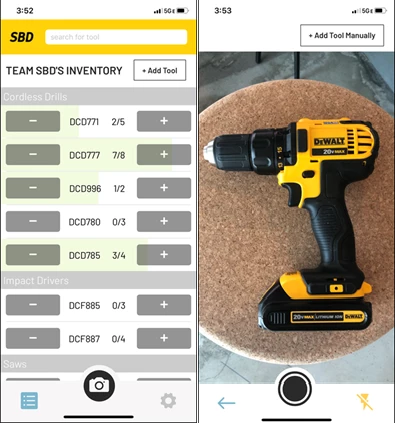

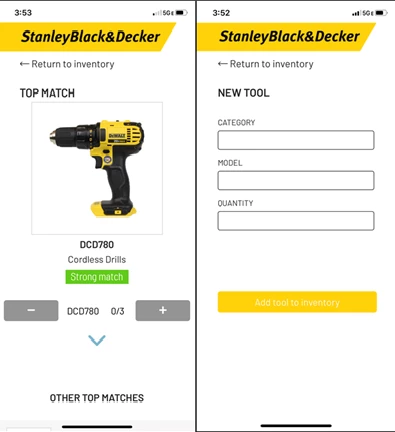

The team developed a React Native application that runs on both iOS and Android and serves as a lightweight asset management tool with a clean and intuitive UI. The app adapts to various degrees of Wi-Fi availability and when a reliable connection is present, the images taken are sent to the APIs of the trained Custom Vision model on Azure Cloud. In the absence of an internet connection, the images are sent to a local computer vision model.

These local models can be obtained using Custom Vision, which exports models to Core ML for iOS, TensorFlow for Android, or as a Docker container that can run on a Linux App Service in Azure. An easy framework for the addition of new products to the machine learning model can be implemented by exporting rendered images from CAD and generating synthetic images.

Images in order from left to right: inventory checklist screen, camera functionality to send a picture to Custom Vision service, display of machine learning model results, and a manual form to add a tool to the checklist.

What’s next

Looking for an opportunity for your team to hack on a computer vision project? Search for an OpenHack near you.

Microsoft OpenHack is a developer focused event where a wide variety of participants (Open) learn through hands-on experimentation (Hack) using challenges based on real world customer engagements designed to mimic the developer journey. OpenHack is a premium Microsoft event that provides a unique upskilling experience for customers and partners. Rather than traditional presentation-based conferences, OpenHack offers a unique hands-on coding experience for developers.

The learning paths can also help you get hands on with the cognitive services.