The progress of AI has been astounding, with solutions that push the envelope by augmenting human understanding of preferences, intent, and even spoken language. AI is expanding our knowledge by helping us deliver faster, more insightful solutions that fuel transformation beyond our imagination. However, with this rapid growth, AI's demand for compute power has grown by leaps and bounds, outpacing what Moore's Law alone can deliver. With AI powering a wide array of important applications, including natural language processing, robotic process automation, machine learning, and deep learning, AI silicon manufacturers are finding new, innovative ways to get more out of each piece of silicon, such as integrating advanced mixed-precision capabilities, so that AI innovators can do more with less. At Microsoft, our mission is to empower every person and every organization on the planet to achieve more, and with Azure's purpose-built AI infrastructure we intend to deliver on that promise.

Azure high-performance computing provides scalable solutions

The need for purpose-built AI infrastructure is evident: infrastructure that can not only scale up to take advantage of multiple accelerators within a single server but also scale out to combine many multi-accelerator servers across a high-performance network. High-performance computing (HPC) technologies have significantly advanced multidisciplinary science and engineering simulations, and the same innovations in hardware, software, application modernization and acceleration (by exposing parallelism), and communications are now advancing AI infrastructure. Scale-up AI computing infrastructure combines the memory of individual graphics processing units (GPUs) into a large, shared pool to tackle larger and more complex models. Combined with the massive vector-processing capabilities of GPUs, these high-speed memory pools have proven extremely effective at processing large multidimensional arrays of data to enhance insights and accelerate innovation.
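
As a rough illustration of why pooling GPU memory matters, the sketch below estimates how many GPUs a model's weights alone would span. The model size (175 billion parameters), the 2-byte FP16 and 4-byte FP32 storage, the 80 GB per-GPU capacity, and the function name `min_gpus_for_weights` are illustrative assumptions, not figures from this article. The estimate deliberately ignores activations, optimizer state, and framework overhead, which in practice add substantially to the footprint.

```python
# Back-of-the-envelope sizing for a pooled (scale-up) GPU memory budget.
# Assumption: only model weights are counted; activations, optimizer state,
# and framework overhead are ignored, so real requirements are higher.

BYTES_PER_GB = 10**9  # decimal gigabytes, as GPU capacities are usually quoted


def min_gpus_for_weights(num_params: int, bytes_per_param: int, gpu_memory_gb: int) -> int:
    """Return the smallest number of GPUs whose combined memory holds the weights."""
    weight_bytes = num_params * bytes_per_param
    per_gpu_bytes = gpu_memory_gb * BYTES_PER_GB
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-weight_bytes // per_gpu_bytes)


if __name__ == "__main__":
    params = 175_000_000_000  # hypothetical model size
    print(min_gpus_for_weights(params, 2, 80))  # FP16 weights on 80 GB GPUs -> 5
    print(min_gpus_for_weights(params, 4, 80))  # FP32 weights on 80 GB GPUs -> 9
```

Storing weights in a lower-precision format nearly halves the GPU count in this example, which is one reason the mixed-precision capabilities mentioned earlier matter; a model that needs more GPUs than a single server holds must also scale out.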

With the added capability of a high-bandwidth, low-latency interconnect fabric, scale-out AI-first infrastructure can significantly accelerate time to solution through advanced parallel communication methods that interleave computation and communication across a vast number of compute nodes. Azure's scale-up-and-scale-out AI-first infrastructure combines the attributes of both vertical and horizontal system scaling to address the most demanding AI workloads. Azure's AI-first infrastructure delivers leadership-class price-performance, compute performance, and energy efficiency today.
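
This article does not name a specific communication algorithm, but ring all-reduce is one well-known example of the parallel communication methods used in scale-out training. Each node splits its gradient buffer into chunks and passes them around a logical ring, first summing them (reduce-scatter) and then distributing the results (all-gather), so the data each node sends stays bounded as nodes are added. The pure-Python simulation below is a minimal sketch under those assumptions: `ring_allreduce` is a hypothetical name, the "network" is just list copies, and production libraries such as NVIDIA NCCL implement ring-based collectives over fast interconnects like InfiniBand.

```python
from typing import List


def ring_allreduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce: every node ends with the element-wise sum.

    buffers[i] is node i's local vector (e.g., its gradients). All vectors must
    have the same length, divisible by the number of nodes.
    """
    n = len(buffers)
    length = len(buffers[0])
    if any(len(b) != length for b in buffers) or length % n != 0:
        raise ValueError("buffers must share a length divisible by the node count")
    size = length // n
    data = [list(b) for b in buffers]  # copy so the caller's lists are untouched

    def idx(c: int) -> range:
        # Element indices belonging to chunk c.
        return range(c * size, (c + 1) * size)

    # Phase 1, reduce-scatter: in step s, node i sends chunk (i - s) % n to its
    # right neighbour, which adds it to its own copy. After n - 1 steps,
    # node i holds the fully summed chunk (i + 1) % n.
    for step in range(n - 1):
        msgs = []
        for i in range(n):
            c = (i - step) % n
            msgs.append((i, c, [data[i][k] for k in idx(c)]))
        for i, c, payload in msgs:  # deliver all messages "simultaneously"
            dst = (i + 1) % n
            for k, v in zip(idx(c), payload):
                data[dst][k] += v

    # Phase 2, all-gather: each node forwards its most recently completed chunk,
    # overwriting the neighbour's partial copy, until every node has every chunk.
    for step in range(n - 1):
        msgs = []
        for i in range(n):
            c = (i + 1 - step) % n
            msgs.append((i, c, [data[i][k] for k in idx(c)]))
        for i, c, payload in msgs:
            dst = (i + 1) % n
            for k, v in zip(idx(c), payload):
                data[dst][k] = v

    return data


if __name__ == "__main__":
    result = ring_allreduce([[1, 2, 3, 4], [10, 20, 30, 40]])
    print(result)  # [[11, 22, 33, 44], [11, 22, 33, 44]]
```

Each node sends 2(n - 1) chunks of length/n elements in total, which is why ring-style collectives scale well as servers are added; real implementations also pipeline these steps so communication overlaps with computation, as the paragraph above describes.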

Cloud infrastructure purpose-built for AI

Microsoft Azure, in partnership with NVIDIA, delivers purpose-built AI supercomputers in the cloud to meet the most demanding real-world workloads at scale while meeting price/performance and time-to-solution requirements. And with advanced machine learning tools available, you can incorporate AI into your workloads faster to drive smarter simulations and accelerate intelligent decision-making.

Microsoft Azure is the only global public cloud service provider that offers purpose-built AI supercomputers with massively scalable scale-up-and-scale-out IT infrastructure composed of NVIDIA InfiniBand-interconnected NVIDIA Ampere A100 Tensor Core GPUs. Optional Azure Machine Learning tools facilitate the uptake of Azure's AI-first infrastructure, from early development stages through enterprise-grade production deployments.

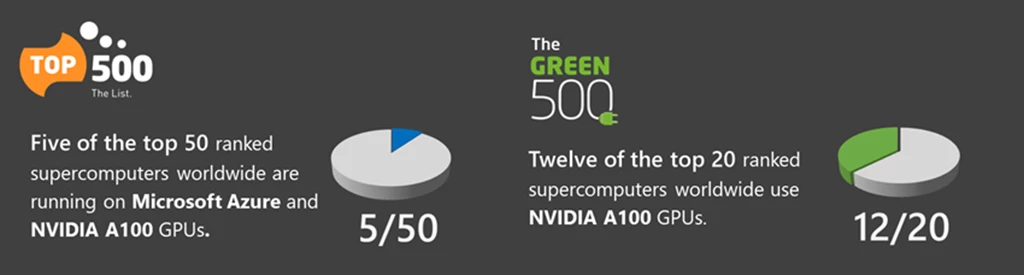

Scale-up-and-scale-out infrastructures powered by NVIDIA GPUs and NVIDIA Quantum InfiniBand networking rank among the most powerful supercomputers on the planet. Microsoft Azure placed in the top 15 of the Top500 list of supercomputers worldwide, and currently five systems in the top 50 use Azure infrastructure with NVIDIA A100 Tensor Core GPUs. Twelve of the top 20 supercomputers on the Green500 list use NVIDIA A100 Tensor Core GPUs.

Source: Top500 list, November 2022; Green500 list, November 2022.

With a total-solution approach that combines the latest GPU architectures, designed for the most compute-intensive AI training and inference workloads, with optimized software that harnesses the power of those GPUs, Azure is paving the way to AI supercomputing beyond exascale. This supercomputer-class AI infrastructure is broadly accessible to researchers and developers in organizations of any size around the world, in support of Microsoft's stated mission. Organizations that need to augment their existing on-premises HPC or AI infrastructure can take advantage of Azure's dynamically scalable cloud infrastructure.

Microsoft Azure works closely with customers across industry segments, and Azure's AI-first infrastructure meets, augments, or accelerates their growing need for AI technology, research, and applications. Some of these collaborations and applications are described below:

Retail and AI

Microsoft Azure's AI-first cloud infrastructure and toolchain, featuring NVIDIA technology, are having a significant impact in retail. With a GPU-accelerated computing platform, customers can iterate through models quickly and determine the best-performing one. Benefits include:

- Deliver 50x performance improvements for classical data analytics and machine learning (ML) processes at scale with AI-first cloud infrastructure.

- Accelerate the training of machine learning algorithms by up to 20x by using RAPIDS with NVIDIA GPUs. Retailers can use larger data sets and process them faster and more accurately, allowing them to react in real time to shopping trends and realize inventory cost savings at scale.

- Reduce the total cost of ownership (TCO) for large data science operations.

- Increase ROI for forecasting, resulting in cost savings from reduced out-of-stock and poorly placed inventory.

With autonomous checkout, retailers can provide customers with frictionless and faster shopping experiences while increasing revenue and margins. Benefits include:

- Deliver a better, faster checkout experience and reduce queue wait times.

- Increase revenue and margins.

- Reduce shrinkage (the loss of inventory to theft, such as shoplifting or ticket switching at self-checkout lanes), which costs retailers $62 billion annually, according to the National Retail Federation.

In both cases, these data-driven solutions require deep learning models that are far more sophisticated than classical machine learning models. In turn, this level of sophistication requires AI-first infrastructure and an optimized AI toolchain.

Customer story (video): Everseen and NVIDIA create a seamless shopping experience that benefits the bottom line.

Manufacturing

In manufacturing, predictive maintenance, unlike routine or time-based preventive maintenance, can get ahead of problems before they happen and save businesses from costly downtime. Benefits of Azure and NVIDIA cloud infrastructure purpose-built for AI include:

- GPU-accelerated compute enables AI at an industrial scale, taking advantage of unprecedented amounts of sensor and operational data to optimize operations, improve time-to-insight, and reduce costs.

- Process more data faster and with higher accuracy, allowing faster reaction to potential equipment failures before they happen.

- Achieve a 50 percent reduction in false positives and a threefold reduction in false negatives.

Traditional computer vision methods that are typically used in automated optical inspection (AOI) machines in production environments require intensive human and capital investment. Benefits of GPU-accelerated infrastructure include:

- Consistent performance with guaranteed quality of service, whether on-premises or in the cloud.

- GPU-accelerated compute enables AI at an industrial scale, taking advantage of unprecedented amounts of sensor and operational data to optimize operations, improve quality and time to insight, and reduce costs.

- Leveraging RAPIDS with NVIDIA GPUs, manufacturers can accelerate the training of their machine learning algorithms by up to 20x.

Each of these examples requires an AI-first infrastructure and toolchain to significantly reduce false positives and negatives in predictive maintenance and to account for subtle nuances in ensuring overall product quality.

Customer story (video): Microsoft Azure and NVIDIA give BMW the computing power for automated quality control.

As we have seen, AI is everywhere, and its application is growing rapidly. The reason is simple. AI enables organizations of any size to gain greater insights and apply those insights to accelerating innovations and business results. Optimized AI-first infrastructure is critical in the development and deployment of AI applications.

Azure is the only cloud service provider with purpose-built, AI-optimized infrastructure composed of NVIDIA (formerly Mellanox) InfiniBand-interconnected NVIDIA Ampere A100 Tensor Core GPUs, serving AI applications of any scale for organizations of any size. At Azure, our purpose-built AI-first infrastructure empowers every person and every organization on the planet to achieve more. Come and do more with Azure!

Learn more about purpose-built infrastructure for AI

- Watch the Understanding AI and AI Infrastructure webcast.

- Read the An AI-First Infrastructure and Toolchain for Any Scale whitepaper.

- Read the Accelerating AI and HPC in the Cloud whitepaper.

- Learn more about Azure HPC + AI.

- Keep up to date on the Azure + NVIDIA partnership and offerings.