Conversational language understanding

A feature of AI Language that uses natural language understanding (NLU) so people can interact with your apps, bots, and IoT devices.

Get 10,000 text request transactions on the S0 tier free every month for 12 months.

Add custom NLU to your apps

Build applications with conversational language understanding, an AI Language feature that understands natural language to interpret user goals and extract key information from conversational phrases. Create multilingual, customizable intent classification and entity extraction models for your domain-specific keywords or phrases across 96 languages. Train a model in one natural language and use it in multiple languages without retraining.
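As a rough illustration of intent classification and entity extraction, the sketch below builds a request body for a single utterance and reads the top intent and entities out of a response. The field names (`analysisInput`, `conversationItem`, `topIntent`, and so on) are assumptions modeled on the publicly documented conversation analysis format, and the project name, deployment name, and sample response are hypothetical. Check the official reference before relying on any of them.

```python
def build_clu_request(query, project_name, deployment_name, language="en"):
    """Build a request body for one utterance (assumed field names)."""
    return {
        "kind": "Conversation",
        "analysisInput": {
            "conversationItem": {
                "id": "1",
                "participantId": "user",
                "text": query,
                # The query language can differ from the training language.
                "language": language,
            }
        },
        "parameters": {
            "projectName": project_name,        # hypothetical project
            "deploymentName": deployment_name,  # hypothetical deployment
        },
    }


def summarize_prediction(response):
    """Return the top intent and a list of (category, text) entity pairs."""
    prediction = response["result"]["prediction"]
    entities = [(e["category"], e["text"]) for e in prediction.get("entities", [])]
    return prediction["topIntent"], entities


# Hand-written sample in the assumed response shape, not real service output.
sample_response = {
    "kind": "ConversationResult",
    "result": {
        "query": "Book a flight to Paris",
        "prediction": {
            "topIntent": "BookFlight",
            "intents": [{"category": "BookFlight", "confidenceScore": 0.97}],
            "entities": [
                {"category": "Destination", "text": "Paris", "confidenceScore": 0.99}
            ],
        },
    },
}

request_body = build_clu_request("Book a flight to Paris", "travel-demo", "production")
top_intent, entities = summarize_prediction(sample_response)
```

Sending `request_body` to a deployed project needs an endpoint and key, which are omitted here. Setting `language` to another supported language would exercise the train-once, query-in-many behavior described above.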

Language Studio simplifies creation, labeling, and deployment for your custom models.

No machine-learning experience required.

Configurable to return the best response from multiple language applications.

Enterprise-grade security and privacy applied to both your data and trained models.

Quickly build a custom, multilingual solution

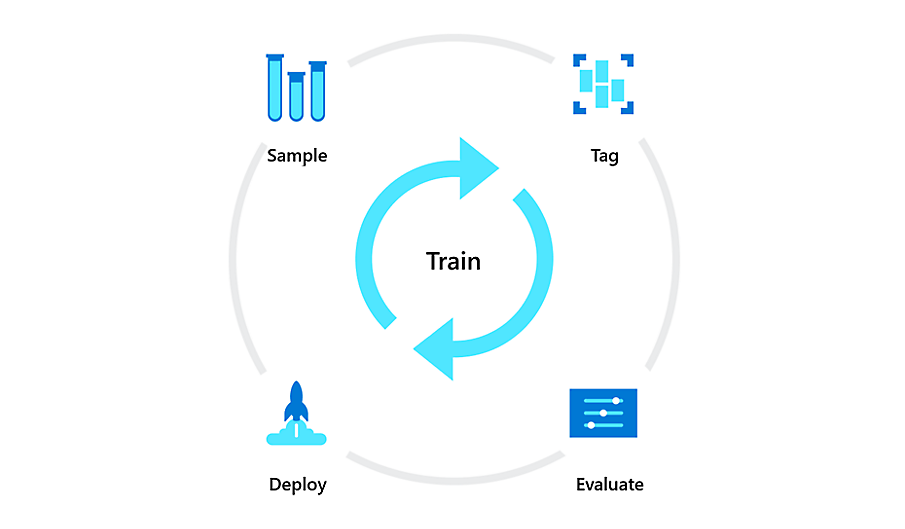

Quickly create intents and entities and label your own utterances. Add prebuilt components from a wide variety of commonly available types. Evaluate with built-in quantitative measurements like precision and recall. Use the simple dashboard in Language Studio to manage model deployments.
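To make the evaluation measurements concrete, here is a minimal sketch of per-intent precision and recall computed from labeled test utterances. It uses the standard definitions (precision = true positives / predicted positives, recall = true positives / actual positives). The intent names and data are hypothetical, and this is not a description of how the service computes its own scores.

```python
from collections import Counter


def precision_recall(true_labels, predicted_labels):
    """Return {intent: (precision, recall)} for single-label predictions."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, guess in zip(true_labels, predicted_labels):
        if truth == guess:
            tp[truth] += 1
        else:
            fp[guess] += 1  # predicted this intent, but it was wrong
            fn[truth] += 1  # missed the intent that was actually labeled
    scores = {}
    for intent in set(true_labels) | set(predicted_labels):
        predicted = tp[intent] + fp[intent]
        actual = tp[intent] + fn[intent]
        precision = tp[intent] / predicted if predicted else 0.0
        recall = tp[intent] / actual if actual else 0.0
        scores[intent] = (precision, recall)
    return scores


# Hypothetical test set: one BookFlight utterance is misclassified as Cancel.
true_labels = ["BookFlight", "BookFlight", "Cancel", "Cancel"]
predicted_labels = ["BookFlight", "Cancel", "Cancel", "Cancel"]
scores = precision_recall(true_labels, predicted_labels)
```

In this toy data, `BookFlight` has perfect precision but misses half of its utterances, while `Cancel` catches all of its utterances at the cost of one false positive.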

Build a natural language processing solution

Use it seamlessly with other features within Azure AI Language, as well as with Azure Bot Service, for an end-to-end conversational solution.

Improve your apps with the next generation of LUIS

Conversational language understanding is the next generation of Language Understanding (LUIS). It comes with state-of-the-art language models that understand the utterance's meaning and capture word variations, synonyms, and misspellings while being multilingual. It also automatically orchestrates bots powered by conversational language understanding, question answering, and classic LUIS.

Explore conversational language understanding scenarios

Build an enterprise-grade conversational bot

This reference architecture describes how to build an enterprise-grade conversational bot (chatbot) using the Azure Bot Service framework.

Commerce chatbot

Together, Azure Bot Service and conversational language understanding enable developers to create conversational interfaces for various scenarios, such as banking, travel, and entertainment.

Controlling IoT devices using a voice assistant

Create seamless conversational interfaces that understand natural language with all your internet-accessible devices—from your connected television or fridge to devices in a connected power plant.

Conversational language understanding is the next generation of Language Understanding (LUIS)

| Feature | Conversational language understanding | Language Understanding (LUIS) |
|---|---|---|
| Transformer-based, state-of-the-art models | | |
| Train in one natural language and use the model in multiple languages without retraining | | |
| Orchestrate between multiple language applications | | |
| Interoperable with Bot Framework SDK | | |
| Interoperable with Bot Framework Composer | | |
| Run on premises or at the edge with containers | | |
| Annotate, train, evaluate, and deploy models with a web portal | Language Studio | LUIS.ai |

Comprehensive security and compliance, built in

- Microsoft invests more than $1 billion annually in cybersecurity research and development.

- We employ more than 3,500 security experts who are dedicated to data security and privacy.

Get the power, control, and customization you need with flexible pricing

Pay only for what you use, with no upfront costs. With Azure AI Language, pay as you go based on the number of transactions.

Get started with an Azure free account


After your credit, move to pay as you go to keep building with the same free services. Pay only if you use more than your free monthly amounts.


Documentation and resources

Code samples

Customization resources

Frequently asked questions about conversational language understanding

- Conversational language understanding is the next generation of LUIS. It comes with state-of-the-art language models and technology that understand an utterance's meaning and easily capture word variations, synonyms, and misspellings, while being multilingual out of the box. It also comes with orchestration, so you can connect directly to conversational language understanding projects, custom question answering (formerly QnA Maker) knowledge bases, and even classic LUIS applications.

- Please refer to its pricing page.

- It uses native multilingual technology to train your intent classes and entity extractors. For example, train a project in English, query it in French, German, or Italian, and still get the expected results for intents and entities. If results for a particular language fall short, add training data in that language.

- Complex conversational services like chatbots require more than one language project to serve their scenarios. Create orchestration projects and connect them to conversational language understanding projects, custom question answering knowledge bases, and classic LUIS apps. Each connection is mapped to an intent in the orchestration project. A query to the orchestration project predicts which intent best suits the query, routes the query to the connected project, and returns the connected project's response.

- Conversational language understanding supports multiple languages.

- Follow the documentation for orchestration.

- LUIS will continue to be supported and maintained as a GA service.
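The orchestration flow described in the answers above, where each connection maps to an intent and a query is routed to the best-suited connected project, can be sketched roughly as follows. This is a toy model under stated assumptions: `toy_classifier` is a hypothetical keyword rule standing in for the trained orchestration model, and the two handlers are placeholders for a connected conversational language understanding project and a question answering knowledge base.

```python
def route_query(query, classify, connections):
    """Pick an intent for the query and forward it to the connected handler."""
    intent = classify(query)
    handler = connections[intent]  # one connection per orchestration intent
    return intent, handler(query)


def toy_classifier(query):
    """Hypothetical stand-in for the orchestration model's intent prediction."""
    text = query.lower()
    if "book" in text or "flight" in text:
        return "FlightBooking"
    return "FAQ"


def flight_project(query):
    """Placeholder for a connected language understanding project."""
    return f"flight project handled: {query}"


def faq_knowledge_base(query):
    """Placeholder for a connected question answering knowledge base."""
    return f"knowledge base answered: {query}"


connections = {
    "FlightBooking": flight_project,
    "FAQ": faq_knowledge_base,
}

booking_result = route_query("Book a flight to Paris", toy_classifier, connections)
faq_result = route_query("What is your refund policy?", toy_classifier, connections)
```

Keeping the intent-to-connection mapping in a plain dictionary mirrors the one-intent-per-connection idea: adding a connected project means adding one intent and one entry.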