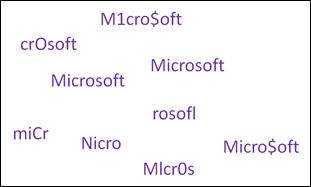

Video Indexer can recognize displayed text in videos. This post explains some of the techniques we use to extract the best-quality data. To start, take a look at the sequence of frames below.

Source: Keynote-Delivering mission critical intelligence with SQL Server 2016

Did you manage to recognize the text in the images? You most likely did, without even noticing. However, running even the best Optical Character Recognition (OCR) service on these images yields broken words such as “icrosof”, “Mi”, “osoft” and “Micros”, simply because the text is partially hidden in each image.

There is a misconception that AI for video simply means extracting frames from a video and running computer vision algorithms on each one. Video processing is much more than applying an image processing algorithm to individual frames. For example, at 30 frames per second, a minute-long video has 1,800 frames. That is a lot of data but, as we see above, not many meaningful words. A separate blog post covers how AI for video differs from AI for images.

While humans have cognitive abilities that allow them to complete hidden parts of the text and disambiguate local deficiencies resulting from bad video quality, direct application of OCR is not sufficient for automatic text extraction from videos.

In Video Indexer, we developed and implemented a dedicated approach to tackle this challenge. In this post, we will introduce the approach and see a couple of results that demonstrate its power.

Machine Learning in Action

Examining OCR results from various videos reveals an interesting pattern: many extracted text fragments suffer from errors or deficiencies, caused either by text being blocked or by characters that are hard to recognize algorithmically. The figure below demonstrates this using OCR detections from individual frames in a real video.

Video Indexer uses advanced Machine Learning techniques to address the challenge. First, we learn which strings are likely to come from the same visual source. Since objects may appear in different parts of the frame, we use a specialized algorithm to infer that multiple strings need to be consolidated into one output string. Then, we use a second algorithm to infer the correct string to be presented, by correcting the mistakes introduced by individual OCR detections.
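The two steps can be illustrated with a deliberately simple sketch. This is not the Video Indexer implementation, which is not described in detail here. It assumes each OCR detection comes with a bounding box, groups detections whose boxes overlap, and then picks the string in each group that contains the most of the other fragments. The `Detection` type, the function names, the overlap threshold and the box coordinates are all hypothetical; only the strings come from the Lionhead example later in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    text: str
    box: tuple  # hypothetical (x, y, width, height) in pixels


def overlap_ratio(a, b):
    """Intersection area divided by the area of the smaller box."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = min(ax + aw, bx + bw) - max(ax, bx)
    ih = min(ay + ah, by + bh) - max(ay, by)
    if iw <= 0 or ih <= 0:
        return 0.0
    return (iw * ih) / min(aw * ah, bw * bh)


def group_by_region(detections, threshold=0.5):
    """Step 1 (simplified): greedily group detections that overlap on screen."""
    groups = []
    for det in detections:
        for group in groups:
            if any(overlap_ratio(det.box, other.box) >= threshold for other in group):
                group.append(det)
                break
        else:
            groups.append([det])
    return groups


def consolidate(group):
    """Step 2 (simplified): pick the string that contains the most fragments."""
    texts = [d.text for d in group]

    def support(candidate):
        return sum(1 for t in texts if t in candidate)

    # Ties are broken in favor of the longer string.
    return max(texts, key=lambda c: (support(c), len(c)))


# Made-up coordinates; strings taken from the Lionhead Studios example.
detections = [
    Detection("LIONHEAD", (100, 400, 80, 20)),
    Detection("ONHEAD", (120, 400, 60, 20)),
    Detection("D", (170, 400, 10, 20)),
    Detection("Q", (100, 400, 10, 20)),
    Detection("STUDIOS", (100, 425, 70, 20)),
    Detection("'STUDIO", (98, 425, 62, 20)),
    Detection("STUD", (100, 425, 40, 20)),
    Detection("TUDIOS", (110, 425, 60, 20)),
    Detection("DIOS", (130, 425, 40, 20)),
]

consolidated = [consolidate(g) for g in group_by_region(detections)]
print(consolidated)
```

A substring vote like this can only return a string that some frame actually produced. It cannot assemble “Microsoft” from “Micros” and “osoft”, which is one reason a learned correction step is needed in practice.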

As in many artificial intelligence examples, tasks that are easy and natural for us humans require heavy algorithms to mimic.

To see the algorithm in action, let us look at a short demo video by DirectX.

During the video, the company logo of “Lionhead Studios” appears at the bottom right corner of the screen. Since the scenes and background change during the video, the logo cannot always be seen clearly.

This results in noisy and inconsistent text extraction when using OCR as-is, since OCR is sensitive to noise in the background image. In contrast, with the inference algorithm, the overall consolidation is robust to these fluctuations, yielding more consistent and reliable text extraction. The table below, which shows the strings extracted by each method, highlights these differences.

| OCR | Consolidated OCR |
| --- | --- |
| LIONHEAD | LIONHEAD |
| ONHEAD | STUDIOS |
| D | |
| Q | |
| STUDIOS | |
| ‘STUDIO | |
| STUD | |
| TUDIOS | |
| DIOS | |

Combining evidence from visual and audio channels

Composed of audio and graphics, videos are complex, multi-dimensional objects whose components complement each other. Using information from other video elements can boost or reduce the confidence in text fragments identified by the OCR model. Going back to our previous example, knowing that the word “Microsoft” is spoken multiple times during the keynote talk increases our confidence that it is a valid word. This helps us decide when the visual evidence needs strengthening. It is also what we as humans do.

We incorporate this information by giving extra weight, in the algorithms above, to words that appear in the transcription.
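As a rough illustration of the idea, the sketch below raises the confidence of any OCR candidate that also appears as a spoken word in the transcript. It is a minimal example under stated assumptions, not the weighting Video Indexer actually uses: the function names, the additive `boost` value, the candidate confidences and the sample transcript are all invented for illustration.

```python
import re


def transcript_vocabulary(transcript):
    """Lower-cased set of the words spoken in the transcript."""
    return {word.lower() for word in re.findall(r"[^\W\d_]+", transcript)}


def reweight(candidates, transcript, boost=0.25):
    """Add a fixed boost to candidates that were also spoken, capped at 1.0.

    `candidates` maps an OCR string to a confidence in [0, 1].
    The additive boost is an assumption; any monotone reweighting would do.
    """
    vocabulary = transcript_vocabulary(transcript)
    reweighted = {}
    for text, confidence in candidates.items():
        if text.lower() in vocabulary:
            confidence = min(1.0, confidence + boost)
        reweighted[text] = confidence
    return reweighted


# Invented sample data for illustration only.
candidates = {"Microsoft": 0.5, "Micros": 0.6}
transcript = "Welcome to the keynote. Microsoft is investing in SQL Server. Thank you, Microsoft."

scores = reweight(candidates, transcript)
best = max(scores, key=scores.get)
print(best, scores)
```

Before reweighting, the truncated fragment “Micros” has the higher visual confidence. After the spoken evidence is factored in, the complete word wins.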

Reducing errors with Language Detection

Video Indexer supports transcription in 10 widely spoken languages. Our core OCR technology supports a large set of characters: Latin, Arabic, Chinese, Japanese and Cyrillic. One of the challenges in video OCR is false detections, where objects that merely resemble characters are read as text. The larger the supported character set, the more of this noise can be introduced.

A new patent-pending method implemented in Video Indexer enables us to make an intelligent decision about which OCR detections are likely to be other objects rather than characters. Automatic language detection creates a distribution of detected characters, which is combined with OCR detection confidence levels to make the decision.
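The details of that method are not given here, so the following is only a sketch of the general idea of combining a character distribution with OCR confidence. It assumes a crude script label taken from the Unicode character name, builds a confidence-weighted distribution of scripts over all detections in a video, and drops detections whose confidence multiplied by their script's share falls below a threshold. The function names, the threshold and the sample detections are hypothetical.

```python
import unicodedata
from collections import Counter


def script_of(char):
    """Crude script label: the first word of the Unicode character name."""
    try:
        return unicodedata.name(char).split()[0]
    except ValueError:
        return "UNKNOWN"


def dominant_script(text):
    """Most frequent script among the letters of `text`."""
    counts = Counter(script_of(c) for c in text if c.isalpha())
    return counts.most_common(1)[0][0] if counts else "UNKNOWN"


def filter_detections(detections, threshold=0.2):
    """Keep detections whose confidence * script share reaches the threshold.

    `detections` is a list of (text, confidence) pairs for one video.
    Strings without letters (for example digits only) are dropped by this
    simplified rule.
    """
    weights = Counter()
    for text, confidence in detections:
        for char in text:
            if char.isalpha():
                weights[script_of(char)] += confidence
    total = sum(weights.values())
    if total == 0:
        return []
    kept = []
    for text, confidence in detections:
        share = weights[dominant_script(text)] / total
        if confidence * share >= threshold:
            kept.append(text)
    return kept


# Invented sample: two Latin words plus two stray single-character detections.
detections = [
    ("LIONHEAD", 0.9),
    ("STUDIOS", 0.85),
    ("\u0416", 0.4),  # Cyrillic letter Zhe, e.g. a misread graphic element
    ("\u53e3", 0.3),  # CJK ideograph, e.g. a misread square shape
]

kept = filter_detections(detections)
print(kept)
```

In a video whose text is overwhelmingly Latin, an isolated Cyrillic or CJK character with modest confidence is more likely a misread object than real text, so it is filtered out.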

More examples

You are invited to take a look at more examples in this gallery video. The words Adobe and Azure are blocked in many ways, but identified correctly by Video Indexer.

Let us see two more challenging examples, and how Video Indexer extracts the correct text.

Conclusions

Text recognition in video is a challenging task with a significant impact on various multimedia applications. Video Indexer uses advanced machine learning to consolidate OCR results, extracting meaningful and searchable text. Because the whole is greater than the sum of its parts, Video Indexer leverages other video components as an additional confidence layer on top of the OCR model.

You are welcome to try this new feature, on the gallery examples as well as on your own videos, at videoindexer.ai.