A technical overview of Azure Cosmos DB

Microsoft’s globally distributed, multi-model database service – A technical overview

Azure Cosmos DB is Microsoft’s globally distributed, horizontally partitioned, multi-model database service. The service is designed to let customers elastically (and independently) scale throughput and storage across any number of geographical regions. Azure Cosmos DB offers guaranteed low latency at the 99th percentile, 99.99% high availability, predictable throughput, and multiple well-defined consistency models. Azure Cosmos DB is the first and only globally distributed database service in the industry today to offer comprehensive Service Level Agreements (SLAs) covering all four dimensions of global distribution that our customers care about most: throughput, latency at the 99th percentile, availability, and consistency. Because Azure Cosmos DB is a cloud service, we carefully designed and engineered it with multi-tenancy and global distribution in mind. In this blog post, we provide an overview of the notable capabilities and architectural choices of Azure Cosmos DB.

Foundations of Azure Cosmos DB

The work of Dr. Leslie Lamport, Turing Award winner and world-renowned computer scientist, has profoundly influenced many large-scale distributed systems. Azure Cosmos DB is no exception. Over the seven years we spent building Azure Cosmos DB, Leslie’s work has been a constant source of inspiration for us.

In this new interview, Leslie shares his thoughts on the foundations of Azure Cosmos DB and his influence on its design.

Design Goals of Azure Cosmos DB

Azure Cosmos DB had its beginnings in 2010 as “Project Florence”. The goal was to address the fundamental pain-points faced by developers building internet-scale applications inside Microsoft. We set the following design goals for Azure Cosmos DB.

- Enable customers to elastically scale throughput and storage based on demand, globally. The system should deliver the configured throughput within 5 seconds at the 99th percentile, from the time of the request to scale.

- Enable customers to build highly responsive, mission-critical applications. The system must deliver predictable and guaranteed end-to-end low read and write latencies at the 99th percentile.

- Ensure that the system is “always on”. The system must provide 99.99% availability regardless of the number of regions associated with a customer’s database. To let customers validate the end-to-end availability properties of their applications, the service must also allow them, in steady state, to simulate regional failures or mark regions associated with their database offline.

- Enable developers to write correct globally distributed applications. The system must offer an intuitive and predictable programming model around data consistency. While strong consistency comes at a price, writing large globally distributed applications against an “eventually consistent” database results in application code that is hard to reason about, brittle, and rife with correctness bugs.

- Offer stringent, financially backed, comprehensive SLAs for the first four goals above: elastic throughput, latency, availability, and consistency.

- Relieve developers of the burden of database schema/index management and versioning. Keeping database schema and indexes in sync with an application’s schema is especially painful for globally distributed applications.

- Natively support multiple data models and popular APIs for accessing data. The translation between the externally exposed APIs and the internal data representation must be efficient.

- Operate at a very low cost to pass on the savings to customers.

Noteworthy Aspects of Azure Cosmos DB’s Design

Individually as well as collectively, the above goals required novel solutions and careful navigation of complex engineering tradeoffs. What makes Azure Cosmos DB’s design unique is the specific approach we took to navigate these constraints and the engineering tradeoffs we made.

The following are the noteworthy aspects of Azure Cosmos DB’s system design. We will describe them in detail in future posts.

- Azure Cosmos DB’s design for dynamically configuring the proximity of the database engine and the underlying storage to support multiple service tiers with different performance SLAs. Depending on the service tier, compute and storage can be (a) co-located within the same process space, (b) disaggregated across machines within the same cluster, or (c) disaggregated across different clusters/datacenters within the same region.

- Azure Cosmos DB’s implementation of comprehensive SLAs for throughput, latency, consistency and availability. These SLAs clearly specify the tradeoffs between latency, consistency, availability and throughput in a globally distributed setup.

- Azure Cosmos DB’s unique design of resource governance at the core of the system, which provides a consistent programming model to provision throughput across a heterogeneous set of database operations.

- Azure Cosmos DB’s highly modular and fully resource-governed approach to solving a variety of coordination problems, including cross-region replication and transparent partition management.

- Azure Cosmos DB’s design to elastically scale throughput across multiple geographical regions while maintaining the SLAs. The system is designed to scale throughput across regions and ensures that changes to throughput take effect instantaneously.

- Azure Cosmos DB’s design and implementation for precisely specifying a set of relaxed yet well-defined consistency models with TLA+. This enables practical consistency models for real-world scenarios; provides provable consistency guarantees; is commercially viable in a multi-tenant and globally distributed setup; and offers an intuitive programming model which enables developers to write correct distributed applications. As far as we know, Azure Cosmos DB is the only globally distributed database system which has operationalized the bounded staleness, session and consistent prefix consistency models and exposed them to developers with clear semantics, performance/availability tradeoffs and backed by SLAs.

- Azure Cosmos DB’s write-optimized, resource-governed and schema-agnostic database engine (NOTE: it has significantly evolved since the paper was published), which is capable of ingesting a sustained volume of updates; it automatically indexes everything it ingests and synchronously makes the index durable and highly available before acknowledging the client’s updates, while maintaining its low-latency guarantees.

- Azure Cosmos DB’s design for its core data model and type system, as well as its extensible database engine design which allows for multiple data models and APIs and programming language type systems to be efficiently added, translated and projected onto its core data model.

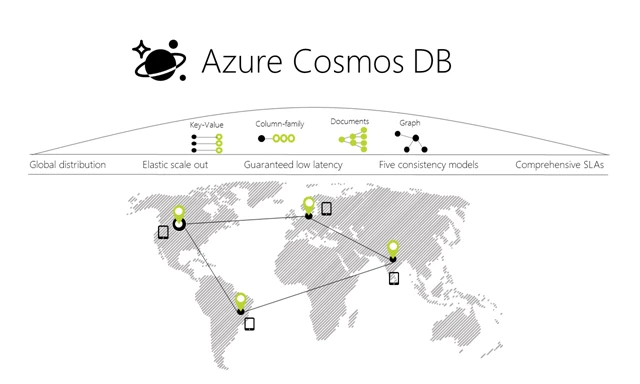

A Multi-Model, Multi-API Database Service

Figure 1. Azure Cosmos DB as a multi-model, multi-API globally distributed database platform

As illustrated in Figure 1, Azure Cosmos DB natively supports multiple data models. The core type system of Azure Cosmos DB’s database engine is based on atom-record-sequence (ARS). Atoms are drawn from a small set of primitive types (e.g., string, bool, number), records are structs, and sequences are arrays consisting of atoms, records, or sequences. The database engine can efficiently translate and project multiple data models onto the ARS-based data model. The core data model is natively accessible from dynamically typed programming languages and can be exposed as-is using JSON or similar representations. The design also makes it possible to natively support popular database APIs for data access and query. Azure Cosmos DB’s database engine currently supports the DocumentDB SQL, MongoDB, Azure Table Storage, and Gremlin graph query APIs, and we intend to extend it to support other popular database APIs as well. The key benefit is that developers can continue to build their applications using popular OSS APIs while getting all the benefits of a battle-tested, fully managed, globally distributed database system.
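To make the atom-record-sequence idea concrete, the following minimal Python sketch projects a parsed JSON value onto an ARS tree and back. The class names `Atom`, `Record` and `Sequence` and the functions `to_ars`/`from_ars` are hypothetical; they illustrate the type system described above and are not the engine’s internal representation. Treating JSON `null` as an atom is also an assumption.

```python
import json
from dataclasses import dataclass
from typing import Union


# Hypothetical node types for illustration; not the engine's internal representation.
@dataclass
class Atom:
    value: Union[str, bool, int, float, None]  # a primitive: string, bool, number (or null)


@dataclass
class Record:
    fields: dict  # field name -> ARS node (a struct)


@dataclass
class Sequence:
    items: list  # ordered ARS nodes (an array)


ARS = Union[Atom, Record, Sequence]


def to_ars(value) -> ARS:
    """Recursively project a parsed JSON value onto the ARS model."""
    if isinstance(value, dict):
        return Record({key: to_ars(child) for key, child in value.items()})
    if isinstance(value, list):
        return Sequence([to_ars(child) for child in value])
    if value is None or isinstance(value, (str, bool, int, float)):
        return Atom(value)
    raise TypeError(f"not a JSON value: {value!r}")


def from_ars(node: ARS):
    """Expose an ARS tree as-is as plain JSON-compatible Python values."""
    if isinstance(node, Atom):
        return node.value
    if isinstance(node, Record):
        return {key: from_ars(child) for key, child in node.fields.items()}
    return [from_ars(child) for child in node.items]


doc = json.loads('{"id": "a1", "tags": ["x", {"n": 1}], "active": true}')
tree = to_ars(doc)
```

Because every JSON value maps onto exactly one of the three node kinds, the round trip through `from_ars` is lossless, which is what lets the core data model be exposed as-is as JSON.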

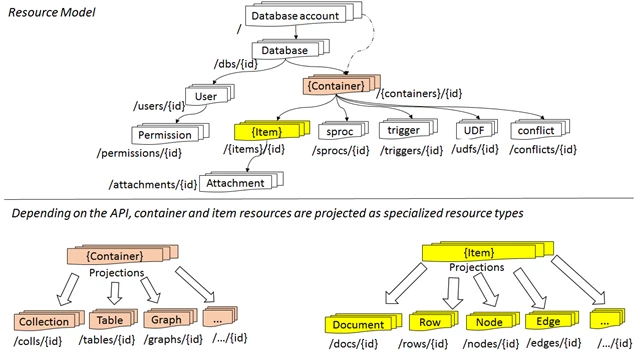

Resource Model and API Projections

Developers can start using Azure Cosmos DB by provisioning a database account using their Azure subscription. A database account manages one or more databases. An Azure Cosmos DB database in-turn manages users, permissions and containers. An Azure Cosmos DB container is a schema-agnostic container of arbitrary user-generated entities and stored procedures, triggers and user-defined-functions (UDFs). Entities under the customer’s database account – databases, users, permissions, containers etc., are referred to as resources as illustrated in Figure 2.

Figure 2. Resource Model and API projection

Each resource is uniquely identified by a stable, logical URI and represented as a JSON document. The overall resource model of an application using Azure Cosmos DB is a hierarchical overlay of resources rooted under the database account, which can be navigated using hyperlinks. With the exception of the item resource, which represents arbitrary user-defined content, all resources have a system-defined schema. The content model of the item resource is based on the atom-record-sequence (ARS) model described earlier. Both container and item resources are further projected as reified resource types for a specific type of API interface, as depicted in Table 1. For example, with document-oriented APIs, container and item resources are projected as collection (container) and document (item) resources, respectively; for graph-oriented API access, they are projected as graph (container), node (item), and edge (item) resources; and with a key-value API, they are projected as table (container) and item/row (item).

| API | Container is projected as … | Item is projected as … |
|---|---|---|
| DocumentDB SQL | Collection | Document |
| MongoDB | Collection | Document |
| Azure Table Storage | Table | Item |
| Gremlin | Graph | Node and Edge |

Table 1. Projections of containers and items based on the data model of the specific API.
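Table 1 and the URI-based resource hierarchy can be expressed as a small lookup table plus path helpers. This is a sketch under assumptions: the `dbs/{db}/colls/{container}/docs/{item}` path layout follows DocumentDB-style addressing but should be treated as illustrative, and `project`, `item_uri` and `parent_uri` are hypothetical helper names.

```python
# Table 1 as data: API -> (container projection, item projection(s)).
PROJECTIONS = {
    "DocumentDB SQL": ("Collection", ("Document",)),
    "MongoDB": ("Collection", ("Document",)),
    "Azure Table Storage": ("Table", ("Item",)),
    "Gremlin": ("Graph", ("Node", "Edge")),
}


def project(api: str) -> tuple:
    """Return how the container and item resources appear through a given API."""
    return PROJECTIONS[api]


def item_uri(database: str, container: str, item: str) -> str:
    """Build a stable logical URI for an item (the path layout is an assumption)."""
    return f"dbs/{database}/colls/{container}/docs/{item}"


def parent_uri(uri: str) -> str:
    """Navigate one level up the resource hierarchy by dropping the last type/id pair."""
    segments = uri.split("/")
    if len(segments) < 4:
        raise ValueError("a database has no parent resource in this sketch")
    return "/".join(segments[:-2])
```

Whatever API a client speaks, it addresses the same underlying container and item resources; only the names they are projected under change.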

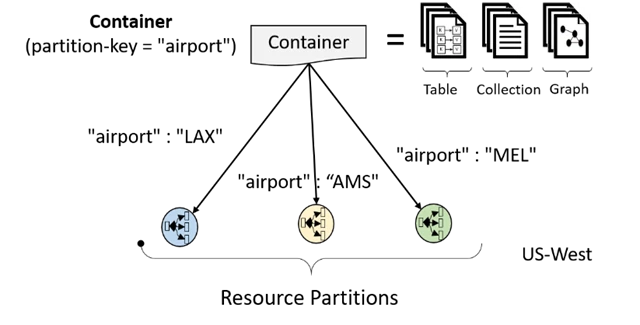

Horizontal Partitioning

All the data within an Azure Cosmos DB container (e.g. collection, table, graph etc.) is horizontally partitioned and transparently managed by resource partitions as illustrated in Figure 3. A resource partition is a consistent and highly available container of data partitioned by a customer specified partition-key; it provides a single system image for a set of resources it manages and is a fundamental unit of scalability and distribution. Azure Cosmos DB is designed for customer to elastically scale throughput based on the application traffic patterns across different geographical regions to support fluctuating workloads varying both by geography and time. The system manages the partitions transparently without compromising the availability, consistency, latency or throughput of an Azure Cosmos DB container.

Figure 3. Elastic scalability using horizontal partitioning
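Routing items to resource partitions by partition key can be sketched as follows. The sketch assumes a simplified scheme in which the partition-key value is hashed into a 32-bit space that is divided into contiguous ranges, one per resource partition; the choice of MD5, the range layout and the `PartitionMap` class are illustrative assumptions, not Azure Cosmos DB’s actual routing algorithm.

```python
import bisect
import hashlib

HASH_SPACE = 2**32  # assumed size of the hash space


def key_hash(partition_key: str) -> int:
    """Map a partition-key value deterministically to a point in [0, HASH_SPACE)."""
    digest = hashlib.md5(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


class PartitionMap:
    """Contiguous hash ranges, each owned by one resource partition."""

    def __init__(self, num_partitions: int):
        step = HASH_SPACE // num_partitions
        # Exclusive upper bound of each range; the last range absorbs any remainder.
        self.upper_bounds = [step * (i + 1) for i in range(num_partitions - 1)]
        self.upper_bounds.append(HASH_SPACE)

    def partition_for(self, partition_key: str) -> int:
        """Index of the partition whose range contains the key's hash."""
        return bisect.bisect_right(self.upper_bounds, key_hash(partition_key))

    def split(self, index: int) -> None:
        """Split one partition's range in half, e.g. to spread its load over two partitions."""
        lower = self.upper_bounds[index - 1] if index > 0 else 0
        midpoint = (lower + self.upper_bounds[index]) // 2
        self.upper_bounds.insert(index, midpoint)
```

All items that share a partition-key value hash to the same point and therefore live in the same resource partition. Splitting a range only changes ownership of the keys inside that range, which is what allows partitions to be added without disturbing the rest of the container.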

Customers can elastically scale the throughput of a container by programmatically provisioning throughput on it. Internally, the system transparently manages resource partitions to deliver the throughput on a given container. Elastically scaling throughput through horizontal partitioning requires that each resource partition be capable of delivering its portion of the overall throughput for a given budget of system resources. Because an Azure Cosmos DB container is globally distributed, Azure Cosmos DB ensures that the container’s throughput becomes available in all the regions where the container is distributed within a few seconds of a change in its value. Throughput is measured in a currency unit called the Request Unit (RU), and customers can provision it on an Azure Cosmos DB container at both per-second and per-minute granularity.
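The requirement that each resource partition deliver its share of the container’s throughput is simple arithmetic once a per-partition ceiling is fixed. The 10,000 RU/s ceiling below, the `plan_partitions` name and the rule that partitions are never merged are hypothetical choices for illustration; the real per-partition limit is an internal detail that this post does not state.

```python
import math

MAX_RU_PER_PARTITION = 10_000  # hypothetical per-partition ceiling, in RU/s


def plan_partitions(provisioned_ru_s: float, current_partitions: int = 1) -> tuple[int, float]:
    """Return (partition count, RU/s budget each partition must deliver)."""
    needed = math.ceil(provisioned_ru_s / MAX_RU_PER_PARTITION)
    # In this sketch, scaling down never merges partitions; it only lowers each budget.
    count = max(current_partitions, needed)
    return count, provisioned_ru_s / count
```

For example, provisioning 25,000 RU/s under this assumed ceiling yields three partitions with a budget of roughly 8,333 RU/s each. Since each resource partition is replicated to every associated region, in this model the same per-partition budget would apply to each regional replica.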

Global Distribution from the Ground-Up

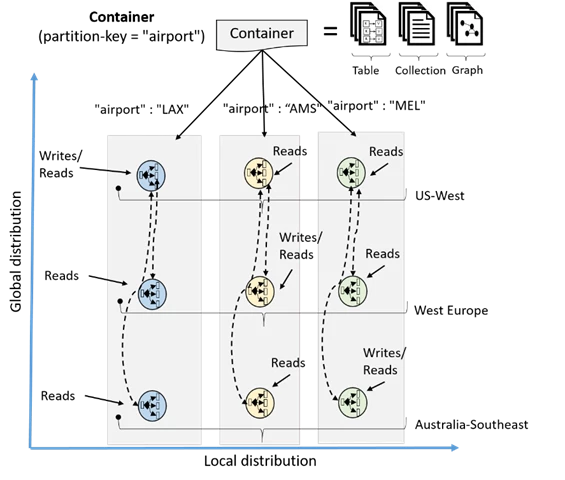

As illustrated in Figure 5, a customer’s resources are distributed along two dimensions: within a given region, all resources are horizontally partitioned using resource partitions (local distribution). Each resource partition is also replicated across geographical regions (global distribution).

Figure 5. A container can be both locally and globally distributed.

When customers elastically scale throughput or storage, Azure Cosmos DB transparently performs partition management operations across all regions. Independent of scale, distribution, or failures, Azure Cosmos DB continues to provide a single system image of the globally distributed resources. Global distribution of resources in Azure Cosmos DB is turnkey: at any time, with a few button clicks (or programmatically with a single API call), customers can associate any number of geographical regions with their database account. Regardless of the amount of data or the number of regions, Azure Cosmos DB guarantees that each newly associated region starts processing client requests in under an hour at the 99th percentile. This is achieved by seeding the new region in parallel, copying data from all the source resource partitions at once. Customers can also remove an existing region or take a region that was previously associated with their database account “offline”.
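Parallel seeding of a newly associated region can be modeled with Python’s `concurrent.futures`. This toy model assumes each source resource partition is an in-memory dict and uses a hypothetical `copy_partition` function in place of the real replication path; the region is treated as ready only once every partition copy has completed.

```python
from concurrent.futures import ThreadPoolExecutor


def copy_partition(partition_id: int, items: dict) -> tuple[int, dict]:
    """Hypothetical stand-in for streaming one partition's data to the new region."""
    return partition_id, dict(items)


def seed_region(source_partitions: dict[int, dict], max_workers: int = 8) -> dict[int, dict]:
    """Copy all source partitions concurrently and return the new region's replicas."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(copy_partition, pid, items)
            for pid, items in source_partitions.items()
        ]
        # result() re-raises any copy failure, so a partially seeded region is never returned.
        return dict(future.result() for future in futures)
```

With enough workers, wall-clock seeding time in this model is governed by the slowest partition copy rather than by the total amount of data, which is the intuition behind a bound that holds regardless of data size.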

Transparent Multi-Homing and 99.99% High Availability

Customers can also dynamically assign “priorities” to the regions associated with their database account. Priorities are used to direct requests to specific regions in the event of regional failures. In the unlikely event of a regional disaster, Azure Cosmos DB automatically fails over in order of priority. To test the end-to-end availability of their applications, customers can manually trigger failover (rate-limited to two operations per hour). Azure Cosmos DB guarantees zero data loss for a customer-triggered regional failover and guarantees an upper bound on data loss for a system-triggered automatic failover during a regional disaster. The application does not need to be redeployed upon regional failover, and the availability SLAs are maintained. To make this possible, Azure Cosmos DB allows developers to interact with their resources using either logical (region-agnostic) or physical (region-specific) endpoints. The former ensures that the application can be transparently multi-homed in case of failover; the latter gives the application fine-grained control to redirect reads and writes to specific regions. Azure Cosmos DB guarantees a 99.99% availability SLA for every database account. The availability guarantees are independent of scale (the throughput and storage associated with a customer’s database), the number of regions, and the geographical distance between the regions associated with a given database.
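Priority-ordered failover reduces to picking the highest-priority healthy region. In the sketch below, the `Region` type, the convention that a lower number means a higher priority, and the health flag are assumptions for illustration; a logical (region-agnostic) endpoint would resolve to whatever region `write_region` returns.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    priority: int  # assumed convention: lower number = higher failover priority
    healthy: bool = True


def write_region(regions: list[Region]) -> str:
    """Return the highest-priority healthy region, where a logical endpoint would route writes."""
    for region in sorted(regions, key=lambda r: r.priority):
        if region.healthy:
            return region.name
    raise RuntimeError("no healthy region is available")


regions = [Region("West US", 0), Region("East US", 1), Region("North Europe", 2)]
print(write_region(regions))  # West US
regions[0].healthy = False    # simulate a regional outage
print(write_region(regions))  # East US
```

Because the application only ever talks to the logical endpoint, the switch from West US to East US requires no redeployment.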

Low Latency Guarantees at the 99th percentile

As part of its SLAs, Azure Cosmos DB guarantees its customers end-to-end low latency at the 99th percentile. For a typical 1KB item, Azure Cosmos DB guarantees end-to-end read latency under 10ms and indexed write latency under 15ms at the 99th percentile within the same Azure region. Average latencies are significantly lower (under 5ms). Because every database transaction has an upper bound on request processing time, clients can clearly distinguish between a transaction with high latency and a database that is unavailable.
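Checking a latency SLA of this kind means computing the 99th percentile of observed end-to-end latencies and comparing it with the bound. This sketch uses the nearest-rank definition of a percentile (one of several common definitions, chosen here as an assumption) and the 10ms read and 15ms write bounds stated above; the latency samples in the test are synthetic.

```python
import math

# Same-region p99 bounds for a typical 1 KB item, as stated above (milliseconds).
SLA_P99_MS = {"read": 10.0, "write": 15.0}


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of samples <= it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def meets_sla(op: str, latencies_ms: list[float]) -> bool:
    """True if the observed p99 latency is under the bound for this operation type."""
    return percentile(latencies_ms, 99) < SLA_P99_MS[op]
```

With 100 synthetic samples, a single 50ms outlier still leaves the p99 at 2ms, but two such outliers push it to 50ms and break the read bound.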

Multiple, Well-Defined Consistency Models Backed By SLAs

Currently available commercial distributed databases fall into two categories: (1) databases that do not offer well-defined, provable consistency choices, or (2) databases that offer two extreme consistency choices: strong vs. eventual consistency. The former burden application developers with the minutiae of their replication protocols and expect them to make difficult tradeoffs between consistency, availability, latency, and throughput. The latter force application developers to choose between the two extremes. Despite the abundance of research and proposals for numerous consistency models, commercial distributed database services have not been able to operationalize consistency levels beyond strong and eventual consistency. Azure Cosmos DB allows developers to choose among five well-defined consistency models along the consistency spectrum (Figure 6): strong, bounded staleness, session, consistent prefix, and eventual.

Figure 6. Multiple well-defined consistency choices along the spectrum.
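Session consistency is commonly implemented with a session token: the client remembers the highest log sequence number (LSN) it has written or observed, and a replica may serve that client’s read only if it has applied at least that LSN. The sketch below shows this general technique under simplifying assumptions: the `Replica` and `Session` classes are hypothetical, and a single integer per session stands in for whatever richer token a production system would track.

```python
from dataclasses import dataclass, field


@dataclass
class Replica:
    applied_lsn: int = 0  # highest log sequence number this replica has applied
    data: dict = field(default_factory=dict)

    def apply(self, lsn: int, key: str, value) -> None:
        self.data[key] = value
        self.applied_lsn = lsn


@dataclass
class Session:
    token: int = 0  # highest LSN this session has written or observed

    def after_write(self, lsn: int) -> None:
        self.token = max(self.token, lsn)

    def read(self, replicas: list[Replica], key: str):
        """Serve the read from the first replica that has caught up with the session token."""
        for replica in replicas:
            if replica.applied_lsn >= self.token:
                # Advancing the token on reads also yields monotonic reads within the session.
                self.token = max(self.token, replica.applied_lsn)
                return replica.data.get(key)
        raise LookupError("no replica has caught up with this session yet")
```

The guarantee is scoped to the session: a session that has not written anything may still be served by a lagging replica, which is what keeps session consistency cheaper than strong consistency.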

Developers using Azure Cosmos DB can configure the default consistency level on their database account (and later override it on a specific read request). Internally, the default consistency level applies to data within partition-sets, which may span regions. About 73% of our customers use session consistency and 20% prefer bounded staleness. We observe that approximately 3% of customers experiment with various consistency levels initially before settling on a specific consistency choice for their application. We also observe that, on average, only 2% of our customers override consistency levels on a per-request basis. To report any violations of the consistency SLAs to customers, we employ a linearizability checker, which continuously operates over our service telemetry. For bounded staleness, we monitor and report any violations of the k (versions) and t (time) bounds. For all four relaxed consistency levels, among other metrics, we also track and report the probabilistic bounded staleness (PBS) metric.
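Bounded staleness promises that reads lag behind writes by at most k versions or t time. A per-read violation check over telemetry could look like the following; the `ReadObservation` fields and the helper names are hypothetical, and this simple check is neither the linearizability checker nor the PBS computation mentioned above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReadObservation:
    """One read as it might appear in (hypothetical) telemetry."""
    latest_version: int             # newest committed version of the item at read time
    returned_version: int           # version the read actually returned
    read_ts: float                  # when the read was served, in seconds
    superseded_ts: Optional[float]  # when the returned version was first overwritten; None if still latest


def violates_bounded_staleness(obs: ReadObservation, k: int, t: float) -> bool:
    """True if the read lagged by more than k versions or more than t seconds."""
    version_lag = obs.latest_version - obs.returned_version
    time_lag = 0.0 if obs.superseded_ts is None else obs.read_ts - obs.superseded_ts
    return version_lag > k or time_lag > t


def violation_rate(observations: list[ReadObservation], k: int, t: float) -> float:
    """Fraction of observed reads that broke the (k, t) bound."""
    if not observations:
        return 0.0
    return sum(violates_bounded_staleness(o, k, t) for o in observations) / len(observations)
```

Measuring the time lag from the moment the returned version was superseded (rather than from when it was written) captures how long the reader was actually shown out-of-date data.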

Fully Resource Governed Stack

Azure Cosmos DB is designed to allow customers to elastically scale throughput based on the application traffic patterns across different regions to support fluctuating workloads varying both by geography and time. Operating hundreds of thousands of globally distributed and diverse workloads cost-effectively requires fine-grained multi-tenancy, where hundreds of customers share the same machine and yet thousands share the same cluster. To provide performance isolation to each customer while operating cost-effectively, we’ve engineered the entire system from the ground up with resource governance in mind. As a resource governed system, Azure Cosmos DB is a massively distributed queuing system with cascaded stages of components, each carefully calibrated to deliver predictable throughput while operating within the allotted budget of system resources. In order to optimally utilize the system resources (CPU, memory, disk, and network) available within a given cluster, every machine in the cluster is capable of dynamically hosting from 10s to 100s of customers. Rate-limiting and back-pressure are plumbed across the entire stack from the admission control to all I/O paths. Our database engine is designed to exploit fine-grained concurrency and to deliver high throughput while operating within frugal amounts of system resources.

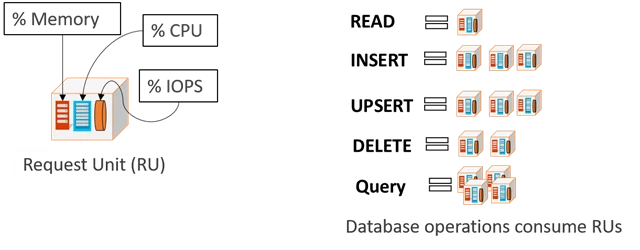

The number of database operations issued within a unit of time (i.e., throughput) is the fundamental unit of reservation and consumption of system resources. Customers can perform a wide range of database operations against their data, and depending on the operation type and the size of the request and response payloads, an operation may consume different amounts of system resources. We needed a normalized model to account for the resources consumed by a request, to budget the system resources corresponding to the throughput a given resource partition must deliver, and to charge customers for throughput consistently across database operations and in a hardware-agnostic manner. To that end, we defined an abstract rate-based currency for throughput called the Request Unit, or RU (plural RUs; see Figure 7), which is available in two denominations based on time granularity: request units per second (RU/s) and request units per minute (RU/m). Customers can elastically scale the throughput of a container by programmatically provisioning RU/s (and/or RU/m) on it. Internally, the system manages resource partitions to deliver the throughput on a given container. Elastically scaling throughput through horizontal partitioning requires that each resource partition be capable of delivering its portion of the overall throughput for a given budget of system resources.

Figure 7. RU/s (and RU/m) is the normalized currency of throughput for various database operations.
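The RU abstraction can be pictured as a cost function that maps an operation type and payload size to a normalized charge, which is then checked against the provisioned RU/s. The weights in `BASE_RU` and the linear per-KB scaling are hypothetical; only the normalization of a 1KB read to 1 RU reflects the commonly cited baseline. In the real service, charges are derived from the work a request actually performs.

```python
import math

# Hypothetical base charges (RUs) for a payload of up to 1 KB; a 1 KB read is normalized to 1 RU.
BASE_RU = {"read": 1.0, "insert": 5.0, "replace": 10.0, "delete": 5.0}


def request_charge(op: str, payload_bytes: int) -> float:
    """Normalized, hardware-agnostic charge that grows with payload size in whole KB."""
    kilobytes = max(1, math.ceil(payload_bytes / 1024))
    return BASE_RU[op] * kilobytes


def fits_in_one_second(charges: list[float], provisioned_ru_s: float) -> bool:
    """Can this mix of requests be served within one second of provisioned throughput?"""
    return sum(charges) <= provisioned_ru_s
```

Expressing every operation in the same currency is what lets a single RU/s number describe a workload that mixes cheap reads with more expensive writes.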

As part of the admission control, each resource partition employs adaptive rate limiting. If the resource partition receives more requests within a second than it was calibrated against, the client will receive “request rate too large” with a back-off interval after which the client can retry. Within each second, a resource partition performs (rate limited) background chores (e.g., background GC of the log structured database engine, taking periodic snapshot backups, deleting expired items etc.) within the spare capacity of RUs (if any). Once a request is admitted, we account for the RUs consumed by each micro-operation (e.g., analyzing an item, reading/writing a page, or executing a query operator).
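The admission-control behavior can be sketched as a per-second RU budget per resource partition: requests are admitted while budget remains, rejected with a retry-after hint otherwise (the REST API surfaces “request rate too large” as HTTP status 429), and any leftover capacity is what background chores may use. This is a simplified fixed-window model, not the adaptive limiter described above; it also charges a request’s full RU cost at admission instead of accounting per micro-operation. `PartitionAdmission` and `Decision` are hypothetical names.

```python
import math
from dataclasses import dataclass


@dataclass
class Decision:
    admitted: bool
    retry_after_ms: int = 0  # back-off hint sent with "request rate too large"


class PartitionAdmission:
    """Fixed one-second RU budget for a single resource partition (simplified, not adaptive)."""

    def __init__(self, ru_per_second: float):
        self.capacity = ru_per_second
        self.window = None  # integer second the current budget belongs to
        self.used = 0.0

    def _roll(self, now: float) -> None:
        # Reset the budget whenever a new one-second window begins.
        window = math.floor(now)
        if window != self.window:
            self.window = window
            self.used = 0.0

    def admit(self, charge: float, now: float) -> Decision:
        self._roll(now)
        if self.used + charge <= self.capacity:
            self.used += charge
            return Decision(admitted=True)
        wait_s = self.window + 1 - now
        return Decision(admitted=False, retry_after_ms=max(1, math.ceil(wait_s * 1000)))

    def spare_for_background(self, now: float) -> float:
        """RUs left in this window that background chores (GC, backups, expiry) could use."""
        self._roll(now)
        return self.capacity - self.used
```

A client receiving a rejected `Decision` would wait `retry_after_ms` milliseconds before retrying, which is the back-off behavior described above.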

Conclusion

Global distribution, elastic horizontal scalability, and multi-model and schema-agnostic database engine are all central to Azure Cosmos DB’s design. As a cloud-born multi-tenant database system, Azure Cosmos DB’s design interleaves resource governance across its entire stack. The system is designed from the ground up to offer global distribution of data, multiple well-defined consistency levels, ability to elastically scale throughput across geographical regions, and comprehensive SLAs encompassing throughput, consistency, latency, and availability to all its customers.

Acknowledgements

Azure Cosmos DB started as “Project Florence” in late 2010, which eventually grew into Azure DocumentDB before expanding and blossoming into its current form. We are very thankful to Dave Campbell, Mark Russinovich, Scott Guthrie, and Gopal Kakivaya for their support. Our thanks go to all the teams inside Microsoft who have made Azure Cosmos DB robust through their extensive use of the service over the years. We stand on the shoulders of giants: Azure Cosmos DB is built upon many component technologies, and special thanks go to the Service Fabric team for providing us with a great distributed systems infrastructure and for their support and partnership. We are grateful to Dr. Leslie Lamport for deeply inspiring us and influencing our approach to designing distributed systems. Last but not least, we are grateful to the team of Azure Cosmos DB engineers for their deep commitment and care.