
Microsoft and NVIDIA partnership continues to deliver on the promise of AI


At NVIDIA GTC, Microsoft and NVIDIA are announcing new offerings across a breadth of solution areas from leading AI infrastructure to new platform integrations, and industry breakthroughs. Today’s news expands our long-standing collaboration, which has paved the way for revolutionary AI innovations that customers are now bringing to fruition.

Microsoft and NVIDIA collaborate on Grace Blackwell 200 Superchip for next-generation AI models

Microsoft and NVIDIA are bringing the power of the NVIDIA Grace Blackwell 200 (GB200) Superchip to Microsoft Azure. The GB200 is a new processor designed specifically for large-scale generative AI workloads, data processing, and high-performance workloads, featuring up to 16 TB/s of memory bandwidth and up to an estimated 30 times the inference performance on trillion-parameter models relative to the previous Hopper generation of servers.

Microsoft has worked closely with NVIDIA to ensure their GPUs, including the GB200, can handle the latest large language models (LLMs) trained on Azure AI infrastructure. These models require enormous amounts of data and compute to train and run, and the GB200 will enable Microsoft to help customers scale these resources to new levels of performance and accuracy.

Microsoft will also deploy an end-to-end AI compute fabric with the recently announced NVIDIA Quantum-X800 InfiniBand networking platform. By taking advantage of its in-network computing capabilities with SHARPv4, and its added support for FP8 for leading-edge AI techniques, NVIDIA Quantum-X800 extends the GB200’s parallel computing tasks into massive GPU scale.

Azure will be one of the first cloud platforms to deliver on GB200-based instances

Microsoft has committed to bringing GB200-based instances to Azure to support customers and Microsoft’s AI services. The new Azure instances, based on the latest GB200 and NVIDIA Quantum-X800 InfiniBand networking, will help accelerate the generation of frontier and foundational models for natural language processing, computer vision, speech recognition, and more. Azure customers will be able to use the GB200 Superchip to create and deploy state-of-the-art AI solutions that can handle massive amounts of data and complexity, while accelerating time to market.

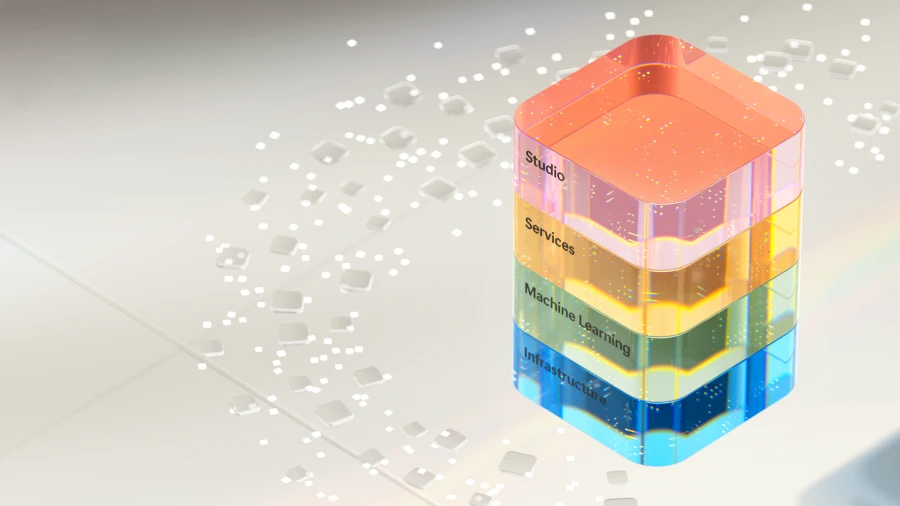

Azure also offers a range of services to help customers optimize their AI workloads, such as Microsoft Azure CycleCloud, Azure Machine Learning, Microsoft Azure AI Studio, Microsoft Azure Synapse Analytics, and Microsoft Azure Arc. These services provide customers with an end-to-end AI platform that can handle data ingestion, processing, training, inference, and deployment across hybrid and multi-cloud environments.

Delivering on the promise of AI to customers worldwide

With a powerful foundation of Azure AI infrastructure that uses the latest NVIDIA GPUs, Microsoft is infusing AI across every layer of the technology stack, helping customers drive new benefits and productivity gains. Now, with more than 53,000 Azure AI customers, Microsoft provides access to the best selection of foundation and open-source models, including both LLMs and small language models (SLMs), all integrated deeply with infrastructure data and tools on Azure.

The recently announced partnership with Mistral AI is also a great example of how Microsoft is giving leading AI innovators access to Azure’s cutting-edge AI infrastructure to accelerate the development and deployment of next-generation LLMs. Azure’s growing AI model catalogue offers more than 1,600 models, letting customers choose the best model for their use case from the latest LLMs and SLMs by providers including OpenAI, Mistral AI, Meta, Hugging Face, Deci AI, NVIDIA, and Microsoft Research.

“We are thrilled to embark on this partnership with Microsoft. With Azure’s cutting-edge AI infrastructure, we are reaching a new milestone in our expansion propelling our innovative research and practical applications to new customers everywhere. Together, we are committed to driving impactful progress in the AI industry and delivering unparalleled value to our customers and partners globally.”

Arthur Mensch, Chief Executive Officer, Mistral AI

General availability of Azure NC H100 v5 VM series, optimized for generative inferencing and high-performance computing

Microsoft also announced the general availability of Azure NC H100 v5 VM series, designed for mid-range training, inferencing, and high performance compute (HPC) simulations; it offers high performance and efficiency.

As generative AI applications expand at incredible speed, the fundamental language models that empower them will also expand to include both SLMs and LLMs. In addition, artificial narrow intelligence (ANI) models will continue to evolve, focusing on more precise predictions rather than the creation of novel data, which will further enhance their use cases. Their applications include tasks such as image classification, object detection, and broader natural language processing.

Using the robust capabilities and scalability of Azure, we offer computational tools that empower organizations of all sizes, regardless of their resources. The Azure NC H100 v5 VM series is yet another computational tool, made generally available today, that will do just that.

The Azure NC H100 v5 VM series is based on the NVIDIA H100 NVL platform, which offers two classes of VMs, ranging from one to two NVIDIA H100 94GB PCIe Tensor Core GPUs connected by NVLink with 600 GB/s of bandwidth. This VM series supports PCIe Gen5, which provides the highest communication speeds (128GB/s bi-directional) between the host processor and the GPU. This reduces the latency and overhead of data transfer and enables faster and more scalable AI and HPC applications.
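The 128 GB/s bi-directional figure can be checked with back-of-envelope arithmetic. The sketch below is illustrative only: it assumes a x16 link (the post does not state the lane count), uses the PCIe 5.0 per-lane rate of 32 GT/s, and ignores protocol overhead, so measured throughput on a real VM would be lower. The function and constant names are hypothetical.

```python
# Back-of-envelope PCIe bandwidth arithmetic (illustrative, not a benchmark).

PCIE_GEN5_GT_PER_S = 32.0        # PCIe 5.0 per-lane signaling rate, in GT/s
ASSUMED_LANES = 16               # assumption: x16 link, not stated in the post
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used by PCIe 3.0+
GPU_MEMORY_GB = 94               # H100 NVL memory per GPU, from the post


def one_way_gb_per_s(gt_per_s, lanes, efficiency=1.0):
    """Bandwidth in one direction, in GB/s (one transfer carries one bit per lane)."""
    return gt_per_s * lanes * efficiency / 8


def seconds_to_transfer(size_gb, gb_per_s):
    """Idealized transfer time, ignoring latency and protocol overhead."""
    return size_gb / gb_per_s


raw_one_way = one_way_gb_per_s(PCIE_GEN5_GT_PER_S, ASSUMED_LANES)
raw_bidirectional = 2 * raw_one_way
encoded_one_way = one_way_gb_per_s(
    PCIE_GEN5_GT_PER_S, ASSUMED_LANES, ENCODING_EFFICIENCY
)
fill_time = seconds_to_transfer(GPU_MEMORY_GB, raw_one_way)

print(f"raw one-way:        {raw_one_way:.1f} GB/s")
print(f"raw bi-directional: {raw_bidirectional:.1f} GB/s")
print(f"after encoding:     {encoded_one_way:.2f} GB/s one-way")
print(f"time to fill {GPU_MEMORY_GB} GB of GPU memory: {fill_time:.2f} s (idealized)")
```

Under these assumptions the raw bi-directional rate matches the quoted 128 GB/s, and copying a full 94 GB of model state from host to GPU takes on the order of a second and a half at best.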

The VM series also supports NVIDIA multi-instance GPU (MIG) technology, enabling customers to partition each GPU into up to seven instances, providing flexibility and scalability for diverse AI workloads. This VM series offers up to 80 Gbps network bandwidth and up to 8 TB of local NVMe storage on full node VM sizes.
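For capacity planning, the MIG and network figures above translate into simple upper bounds. The following is a minimal sketch, assuming every GPU is split into the maximum seven instances and that each workload fits in one such instance; real MIG profiles come in fixed sizes, so the usable count may be lower. The helper names are hypothetical.

```python
import math

MAX_MIG_INSTANCES_PER_GPU = 7   # "up to seven instances" per GPU, from the post
MAX_NETWORK_GBPS = 80           # "up to 80 Gbps", from the post


def max_mig_instances(gpus_per_vm):
    """Best-case number of MIG instances on one VM."""
    return gpus_per_vm * MAX_MIG_INSTANCES_PER_GPU


def vms_needed(workloads, gpus_per_vm):
    """Minimum VMs to give each workload its own MIG instance (best case)."""
    return math.ceil(workloads / max_mig_instances(gpus_per_vm))


def gbps_to_gb_per_s(gbps):
    """Convert gigabits per second to gigabytes per second."""
    return gbps / 8


for gpus in (1, 2):  # the series ranges from one to two GPUs per VM
    print(f"{gpus}-GPU VM: up to {max_mig_instances(gpus)} MIG instances, "
          f"{vms_needed(20, gpus)} VMs for 20 isolated workloads")

print(f"network ceiling: {gbps_to_gb_per_s(MAX_NETWORK_GBPS):.0f} GB/s")
```

These numbers are ceilings rather than guarantees; a workload that needs more memory than the smallest MIG slice provides would reduce the instance count per GPU.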

These VMs are ideal for training models, running inferencing tasks, and developing cutting-edge applications. Learn more about the Azure NC H100 v5-series.

“Snorkel AI is proud to partner with Microsoft to help organizations rapidly and cost-effectively harness the power of data and AI. Azure AI infrastructure delivers the performance our most demanding ML workloads require plus simplified deployment and streamlined management features our researchers love. With the new Azure NC H100 v5 VM series powered by NVIDIA H100 NVL GPUs, we are excited to continue to accelerate iterative data development for enterprises and OSS users alike.”

Paroma Varma, Co-Founder and Head of Research, Snorkel AI

Microsoft and NVIDIA deliver breakthroughs for healthcare and life sciences

Microsoft is expanding its collaboration with NVIDIA to help transform the healthcare and life sciences industry through the integration of cloud, AI, and supercomputing.

By using the global scale, security, and advanced computing capabilities of Azure and Azure AI, along with NVIDIA’s DGX Cloud and NVIDIA Clara suite, healthcare providers, pharmaceutical and biotechnology companies, and medical device developers can now rapidly accelerate innovation across the entire value chain, from clinical research to care delivery, for the benefit of patients worldwide.

New Omniverse APIs enable customers across industries to embed massive graphics and visualization capabilities

Today, NVIDIA’s Omniverse platform for developing 3D applications will now be available as a set of APIs running on Microsoft Azure, enabling customers to embed advanced graphics and visualization capabilities into existing software applications from Microsoft and partner ISVs.

Built on OpenUSD, a universal data interchange, NVIDIA Omniverse Cloud APIs on Azure do the integration work for customers, giving them seamless physically based rendering capabilities on the front end. Demonstrating the value of these APIs, Microsoft and NVIDIA have been working with Rockwell Automation and Hexagon to show how the physical and digital worlds can be combined for increased productivity and efficiency.

Microsoft and NVIDIA envision deeper integration of NVIDIA DGX Cloud with Microsoft Fabric

The two companies are also collaborating to bring NVIDIA DGX Cloud compute and Microsoft Fabric together to power customers’ most demanding data workloads. This means that NVIDIA’s workload-specific optimized runtimes, LLMs, and machine learning will work seamlessly with Fabric.

The NVIDIA DGX Cloud and Fabric integration includes extending the capabilities of Fabric by bringing in NVIDIA DGX Cloud’s large language model customization to address data-intensive use cases like digital twins and weather forecasting, with Fabric OneLake as the underlying data storage. The integration will also provide DGX Cloud as an option for customers to accelerate their Fabric data science and data engineering workloads.

Accelerating innovation in the era of AI

For years, Microsoft and NVIDIA have collaborated from hardware to systems to VMs, to build new and innovative AI-enabled solutions to address complex challenges in the cloud. Microsoft will continue to expand and enhance its global infrastructure with the most cutting-edge technology in every layer of the stack, delivering improved performance and scalability for cloud and AI workloads and empowering customers to achieve more across industries and domains.

Join Microsoft at the NVIDIA GTC AI Conference, March 18 through 21, at booth #1108 and attend a session to learn more about solutions on Azure and NVIDIA.