Ever since its inception and public preview almost 7 years ago at PDC 2008, Microsoft Azure has provided a means to detect the health of virtual machines (VMs) running on the platform and to automatically recover those virtual machines should they fail. This auto-recovery process is referred to as “Service Healing”, as it is a means of “healing” your service instances. We believe service healing, a.k.a. auto-recovery, is an integral part of running business-critical workloads in the cloud, and hence this mechanism is enabled on virtual machines of every size and offering, across all Azure regions and datacenters. In this blog post we will look at how service healing/auto-recovery works in Azure when a failure does occur.

A key aspect of recovering from any failure is first detecting that a failure has occurred. The quicker we detect failures, the less time a virtual machine stays down when one occurs. The average time it takes to notice a failure is referred to as Mean Time to Detect (MTTD). The Fabric Controller, the kernel of the Microsoft Azure cloud operating system, uses a number of health protocols to keep track of the state of all the virtual machines as well as the code that runs within them (in the case of Web or Worker Roles).
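MTTD is simply the average delay between a failure happening and the platform noticing it. The minimal sketch below computes it from a list of incidents; the `Incident` record and its timestamps are hypothetical illustrations, not Azure telemetry.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    """One failure, with illustrative timestamps in seconds."""
    failed_at: float
    detected_at: float


def mean_time_to_detect(incidents: list[Incident]) -> float:
    """Average delay between a failure occurring and it being detected."""
    if not incidents:
        raise ValueError("need at least one incident")
    total_delay = sum(i.detected_at - i.failed_at for i in incidents)
    return total_delay / len(incidents)


incidents = [Incident(0.0, 30.0), Incident(100.0, 145.0), Incident(200.0, 215.0)]
print(mean_time_to_detect(incidents))  # 30.0
```

Shortening the interval between health checks shrinks every `detected_at - failed_at` gap, which is why the checks described below run so frequently.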

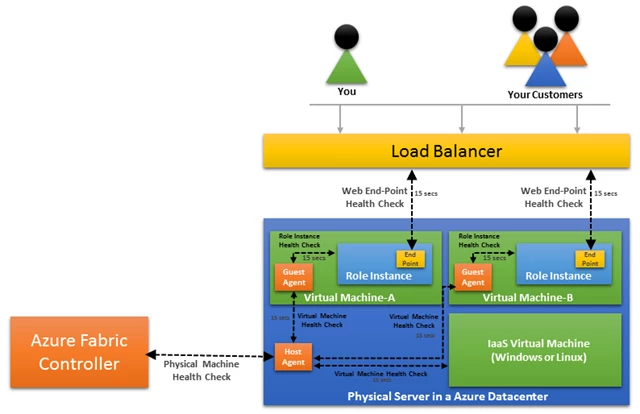

The diagram above provides an overview of the different layers of the system where faults can occur and the health checks that Azure performs to detect them:

Cloud Services: In the case of Web and Worker Roles, your code runs in its web or worker role instance. For a web role, the load balancer pings the instance's web endpoint every 15 seconds to determine whether it is healthy. If a predefined number of consecutive pings fail, the endpoint is deemed unhealthy and a recovery action is taken: the role instance is removed from load-balancer rotation and marked unhealthy.

Note: This applies only to the default guest agent (GA)-based load balancer rotation, not to a custom load balancer probe.
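The consecutive-failure rule for the load balancer probe can be sketched as a small counter. This is an illustration only: `ProbedEndpoint` is a hypothetical name, and `FAILURE_THRESHOLD = 3` is an assumed value, since the post only says "a predefined number".

```python
PROBE_INTERVAL_SECONDS = 15  # cadence at which record_probe would be called (from the post)
FAILURE_THRESHOLD = 3        # hypothetical; the post only says "a predefined number"


class ProbedEndpoint:
    """Tracks consecutive probe failures for one web role instance."""

    def __init__(self, threshold: int = FAILURE_THRESHOLD) -> None:
        self.threshold = threshold
        self.consecutive_failures = 0
        self.in_rotation = True
        self.healthy = True

    def record_probe(self, succeeded: bool) -> None:
        if succeeded:
            # Any success resets the streak; only *consecutive* failures count.
            self.consecutive_failures = 0
            return
        self.consecutive_failures += 1
        if self.consecutive_failures >= self.threshold and self.in_rotation:
            # Recovery action described in the post: take the instance out of
            # load-balancer rotation and mark it unhealthy.
            self.in_rotation = False
            self.healthy = False


endpoint = ProbedEndpoint()
for result in [False, True, False, False, False]:
    endpoint.record_probe(result)
print(endpoint.in_rotation, endpoint.healthy)  # False False
```

With these assumed values, an endpoint that stops responding right after a successful probe would be pulled from rotation after roughly 3 × 15 = 45 seconds, while a single transient failure followed by a success is ignored.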

For both web and worker roles, Azure injects a guest agent into the VM to monitor your role. This guest agent also checks the health of the role instance every 15 seconds. Again, either a predefined number of consecutive failed health checks or a signal from the load balancer causes the role to be marked unhealthy and triggers a recovery action, which is to restart the role instance. You'll notice that health checks across Azure are performed at 15-second intervals; this keeps MTTD low. Detecting failures quickly and early means we can fix them as soon as they occur and keep dependent systems from failing in turn and the failure from cascading through the system.
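The guest agent's decision combines two independent triggers: its own run of failed checks, or a signal from the load balancer. The sketch below is a hypothetical illustration of that "either/or" rule; the function name, the `Action` enum and the default threshold of 3 are assumptions, not Azure's actual code.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    RESTART_ROLE_INSTANCE = "restart_role_instance"


def guest_agent_decision(recent_checks: list[bool], lb_marked_unhealthy: bool,
                         threshold: int = 3) -> Action:
    """Decide whether to restart the role instance.

    recent_checks holds the agent's own health-check results, oldest first.
    threshold is a hypothetical stand-in for the post's "predefined number".
    """
    # Count the trailing run of failures (the most recent consecutive ones).
    trailing_failures = 0
    for ok in reversed(recent_checks):
        if ok:
            break
        trailing_failures += 1
    # Either trigger on its own is enough to start recovery.
    if lb_marked_unhealthy or trailing_failures >= threshold:
        return Action.RESTART_ROLE_INSTANCE
    return Action.NONE


print(guest_agent_decision([True, False, False, False], False))  # Action.RESTART_ROLE_INSTANCE
print(guest_agent_decision([False, False, False, True], False))  # Action.NONE
```

Only the trailing streak matters: three failures followed by a success do not trigger a restart, because the instance has already recovered.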

The next layer in the system is the health of the virtual machine itself, within which the role instance runs. The virtual machine is hosted on a physical server inside an Azure datacenter. The physical server runs another agent, called the Host Agent, which monitors the health of web and worker role VMs by checking for a heartbeat from the guest agent every 15 seconds. A virtual machine may simply be under stress from its workload, for example with its CPU at 100% utilization, so missing just a couple of health checks doesn't warrant a recovery action. We allow up to 10 minutes for the virtual machine to respond to a health check before we initiate a recovery action. For Web and Worker Roles, the recovery action is to recycle the virtual machine with a clean OS disk.
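Unlike the count-based probes above, the host-side check is easiest to picture as a staleness window: the VM is recycled only when its last heartbeat is older than the grace period. The 15-second cadence and 10-minute window come from the post; the `HeartbeatMonitor` class itself is a hypothetical sketch.

```python
HEARTBEAT_INTERVAL_SECONDS = 15   # expected guest-agent heartbeat cadence (from the post)
RECOVERY_GRACE_SECONDS = 10 * 60  # up to 10 minutes before recovery (from the post)


class HeartbeatMonitor:
    """Host-side view of one web/worker role VM's guest-agent heartbeats."""

    def __init__(self, now: float) -> None:
        self.last_heartbeat = now

    def on_heartbeat(self, now: float) -> None:
        self.last_heartbeat = now

    def missed_heartbeats(self, now: float) -> int:
        # Number of whole heartbeat intervals elapsed since the last beat.
        return int((now - self.last_heartbeat) // HEARTBEAT_INTERVAL_SECONDS)

    def should_recycle(self, now: float) -> bool:
        # A few missed beats (e.g. CPU pegged at 100%) are tolerated; only a
        # silence longer than the grace window triggers recycling the VM
        # with a clean OS disk.
        return now - self.last_heartbeat > RECOVERY_GRACE_SECONDS


monitor = HeartbeatMonitor(now=0)
print(monitor.missed_heartbeats(45), monitor.should_recycle(45))  # 3 False
print(monitor.should_recycle(601))  # True
```

The design trades detection speed for fewer false positives: a busy VM can miss dozens of heartbeats without being recycled.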

Azure Virtual Machines: In the case of Azure Virtual Machines, there is no role instance and no guest agent is injected by default. Hence the health check is performed on the state of the virtual machine as reported by the hypervisor. If there is any issue with the VM's power state, we reboot the VM, leaving its disk state intact. For Azure Virtual Machines, we don't take any action for issues happening within the VM, including sustained high CPU utilization, a halted guest OS, and application faults inside the guest OS. For such internal VM OS issues, you will need to set up monitoring within your application.
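The key point for IaaS VMs is what the platform deliberately ignores. In this hypothetical sketch, the `VmObservation` fields and the action strings are illustrative names; only the hypervisor-reported power state influences the decision, and in-guest symptoms are carried along purely to show they have no effect.

```python
from dataclasses import dataclass


@dataclass
class VmObservation:
    # What the hypervisor can see from outside the guest.
    power_state_ok: bool
    # In-guest symptoms; included only to show that the platform ignores them.
    cpu_percent: float = 0.0
    guest_os_halted: bool = False
    app_faulted: bool = False


def platform_action(obs: VmObservation) -> str:
    """Illustrative platform-side healing decision for an Azure Virtual Machine.

    Only the power state is considered. High CPU, a halted guest OS, or
    application faults are left to monitoring inside the customer's VM.
    """
    if not obs.power_state_ok:
        return "reboot_preserving_disks"
    return "none"


print(platform_action(VmObservation(power_state_ok=False)))  # reboot_preserving_disks
print(platform_action(VmObservation(True, 100.0, True, True)))  # none
```

This is why in-application monitoring is needed for IaaS VMs: a VM whose guest OS has hung can still look healthy from the hypervisor's point of view.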

The next layer in the system is the health of the physical server on which the virtual machines run. The Fabric Controller periodically performs health checks on the physical server through the Host Agent. If a predefined number of consecutive health checks fail, the physical server is marked unhealthy, and a recovery action is initiated if it doesn't return to a healthy state. We begin by rebooting the physical server.

If the physical server doesn't return to a healthy state within a predefined period, we recover the virtual machines running on it to a different, healthy server within the same partition of the datacenter.

The failed physical server is then taken out of rotation, isolated, and put through extensive diagnosis to determine the cause of its failure; if need be, it is sent to the manufacturer for repair.
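The physical-server steps form an escalation ladder: consecutive failures lead to a reboot, and a reboot that doesn't recover in time leads to moving the VMs elsewhere and isolating the host. The state machine below is a hypothetical sketch of that ladder. The class, state names, `FAILURE_THRESHOLD = 3` and `REBOOT_TIMEOUT_SECONDS = 900` are assumptions standing in for the post's "predefined" values, and choosing a target server is not modeled.

```python
from enum import Enum, auto
from typing import Optional


class ServerState(Enum):
    HEALTHY = auto()
    REBOOTING = auto()
    VMS_MIGRATED_AND_ISOLATED = auto()


class PhysicalServer:
    FAILURE_THRESHOLD = 3          # hypothetical "predefined number"
    REBOOT_TIMEOUT_SECONDS = 900   # hypothetical "predefined period"

    def __init__(self, vms: list[str]) -> None:
        self.vms = list(vms)
        self.migrated_vms: list[str] = []
        self.state = ServerState.HEALTHY
        self.consecutive_failures = 0
        self.reboot_started_at: Optional[float] = None

    def on_health_check(self, ok: bool, now: float) -> None:
        if self.state is ServerState.VMS_MIGRATED_AND_ISOLATED:
            return  # out of rotation; diagnosis happens elsewhere
        if ok:
            self.consecutive_failures = 0
            self.state = ServerState.HEALTHY
            self.reboot_started_at = None
            return
        self.consecutive_failures += 1
        if (self.state is ServerState.HEALTHY
                and self.consecutive_failures >= self.FAILURE_THRESHOLD):
            # Step 1: reboot the physical server.
            self.state = ServerState.REBOOTING
            self.reboot_started_at = now
        elif (self.state is ServerState.REBOOTING
              and now - self.reboot_started_at >= self.REBOOT_TIMEOUT_SECONDS):
            # Step 2: the host did not recover in time, so its VMs are
            # recovered onto another healthy server in the same partition.
            self.migrated_vms, self.vms = self.vms, []
            # Step 3: take the host out of rotation for diagnosis.
            self.state = ServerState.VMS_MIGRATED_AND_ISOLATED


server = PhysicalServer(["vm1", "vm2"])
for t in (0, 15, 30, 500, 930):
    server.on_health_check(False, now=t)
print(server.state.name, server.migrated_vms)  # VMS_MIGRATED_AND_ISOLATED ['vm1', 'vm2']
```

A successful health check at any point before isolation resets the ladder, so a host that comes back after its reboot keeps its VMs.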

Predicting Failures and Taking Preventive Action Before They Occur

What we’ve discussed so far are cases where a failure has occurred and how Azure goes about detecting and recovering from them. Azure not only reacts to failures but also uses heuristics to predict failures that are imminent and takes preventive action before the failure even occurs, thus improving Mean Time to Recovery (MTTR).

The Host Agent we discussed earlier, which runs on the physical server, periodically collects health information about the server and the devices attached to it, such as disks, memory and other hardware. This information includes not only performance counters such as CPU utilization and disk I/O but also other metrics. For example, bad sectors on a disk drive can help predict when a disk failure is imminent. When such signals appear, we recover the virtual machines on that server as quickly as possible, before the failure occurs, and pass the physical server on for extensive diagnosis.
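As a toy illustration of predictive healing, the sketch below flags a drive whose bad-sector count is either high or rising quickly, and then plans a proactive move. The post does not publish Azure's heuristics: `DiskSample`, both thresholds and the plan step names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class DiskSample:
    timestamp: float  # seconds
    bad_sectors: int  # cumulative count reported for the drive


def disk_failure_imminent(samples: list[DiskSample],
                          max_bad_sectors: int = 100,
                          max_growth_per_hour: float = 10.0) -> bool:
    """Toy heuristic: flag a drive whose bad-sector count is high or rising fast.

    Both thresholds are hypothetical, chosen only for illustration.
    """
    if not samples:
        return False
    latest = samples[-1]
    if latest.bad_sectors >= max_bad_sectors:
        return True
    if len(samples) < 2:
        return False
    first = samples[0]
    hours = (latest.timestamp - first.timestamp) / 3600
    if hours <= 0:
        return False
    growth = (latest.bad_sectors - first.bad_sectors) / hours
    return growth > max_growth_per_hour


def plan_for_host(samples: list[DiskSample]) -> list[str]:
    # Act before the failure: move VMs off, then send the host for diagnosis.
    if disk_failure_imminent(samples):
        return ["migrate_vms_proactively", "send_host_to_diagnosis"]
    return []


print(plan_for_host([DiskSample(0, 5), DiskSample(3600, 40)]))
```

Looking at the growth rate, not just the absolute count, lets a heuristic like this catch a degrading drive while it still reports relatively few bad sectors.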

Analyzing Failures using Big Data Analytics

Microsoft Azure produces multiple terabytes of diagnostic logs, health check information and crash dumps every day from all of the virtual machines running on the platform. We run automated big data analytics over these logs and crash dumps on our map-reduce implementation, called “Cosmos”, to identify patterns in the failures, which in turn help us identify bugs in the system. In the past, these analyses have also often pointed us to new features or to improvements in existing ones. This data, coupled with invaluable feedback from customers like you, helps move the platform forward.
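The map-reduce idea behind this kind of analysis can be shown at toy scale in plain Python. This is not Cosmos: the log format `"<timestamp> CRASH <component> <reason>"` and all function names are hypothetical. The map step emits a count per failure signature, and the reduce step sums the counts so recurring patterns rise to the top.

```python
from collections import Counter
from functools import reduce


def map_phase(log_line: str) -> Counter:
    """Emit a count of 1 for each crash record's (component, reason) signature.

    Assumes a hypothetical log format: "<timestamp> CRASH <component> <reason>".
    Non-crash lines contribute nothing.
    """
    parts = log_line.split()
    if len(parts) >= 4 and parts[1] == "CRASH":
        return Counter({(parts[2], parts[3]): 1})
    return Counter()


def reduce_phase(a: Counter, b: Counter) -> Counter:
    # Summing partial counts is associative, so it can be split across workers.
    return a + b


def top_failure_patterns(log_lines: list[str], n: int = 3) -> list:
    counts = reduce(reduce_phase, map(map_phase, log_lines), Counter())
    return counts.most_common(n)


logs = [
    "t1 CRASH hostagent timeout",
    "t2 INFO hostagent ok",
    "t3 CRASH diskdriver badsector",
    "t4 CRASH hostagent timeout",
]
print(top_failure_patterns(logs, 1))  # [(('hostagent', 'timeout'), 2)]
```

Because the reduce step is associative, the same logic scales out by counting shards of the logs independently and merging the partial counters.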

Also note that we may adjust the health check values mentioned in this blog post at any time to further improve MTTD and MTTR; they are provided here only to illustrate how the Azure Service Healing mechanism works.