Exchange-traded financial products—like stocks, treasuries, and currencies—have had the benefit of a tremendous wave of technological innovation in the past 20 years, resulting in more efficient markets, lower transaction costs, and greater transparency to investors.

However, large parts of the capital markets have been left behind. Valuation of the instruments that make up the massive $500 trillion market in over-the-counter (OTC) derivatives, such as interest rate swaps, credit default swaps, and structured products, lacks the immediate clarity enjoyed by their more straightforward siblings.

In times of increased volatility, traders and their managers need to know how market conditions are affecting a given instrument as the day unfolds so that they can take appropriate action. Reports reflecting conditions at the previous close of business are only valuable in calm markets, and even then, firms with access to fast valuation and risk sensitivity calculations have a substantial edge in the marketplace.

Unlike exchange-traded instruments, where values can be observed each time the instrument trades, values for OTC derivatives need to be computed using complex financial models. The conventional means of accomplishing this is either traditional Monte Carlo simulation (a simple but computationally expensive probabilistic sweep through a range of scenarios and resultant outcomes) or finite-difference analysis.
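To make the cost of the Monte Carlo approach concrete, here is a minimal sketch of pricing an up-and-out FX barrier call by simulation. It is an illustration only, not the model used in the study: it assumes geometric Brownian motion with constant domestic and foreign rates, monitors the barrier only at discrete time steps, and every function and parameter name is hypothetical.

```python
import math
import random


def mc_up_and_out_call(spot, strike, barrier, r_dom, r_for, vol, maturity,
                       n_paths=2000, n_steps=50, seed=0):
    """Monte Carlo estimate of an up-and-out FX call (illustrative assumptions only)."""
    rng = random.Random(seed)
    dt = maturity / n_steps
    # Risk-neutral log-drift for an FX rate: domestic minus foreign rate.
    drift = (r_dom - r_for - 0.5 * vol * vol) * dt
    shock = vol * math.sqrt(dt)
    payoff_sum = 0.0
    for _ in range(n_paths):
        s = spot
        alive = s < barrier  # already knocked out if spot starts at or above the barrier
        for _ in range(n_steps):
            # Draw every step, even after a knock-out, so that a given seed
            # produces the same paths regardless of the barrier level.
            s *= math.exp(drift + shock * rng.gauss(0.0, 1.0))
            if s >= barrier:
                alive = False
        if alive:
            payoff_sum += max(s - strike, 0.0)
    # Discount the average payoff at the domestic rate.
    return math.exp(-r_dom * maturity) * payoff_sum / n_paths


price = mc_up_and_out_call(spot=1.10, strike=1.10, barrier=1.25,
                           r_dom=0.02, r_for=0.01, vol=0.10, maturity=1.0)
print(f"estimated price: {price:.5f}")
```

Each valuation here costs `n_paths * n_steps` random draws, and a production model would typically need far more paths and finer time steps than this sketch, which is why a single valuation can take seconds.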

Banks spend tens of millions of dollars annually to calculate the values of their OTC derivatives portfolios in large, nightly batches. These embarrassingly parallel workloads have evolved directly from the mainframe days to run on on-premises clusters of conventional, CPU-bound workers, delivering a set of results good for a given day.

Using conventional algorithms, real-time pricing and risk management are out of reach. But as the influence of machine learning extends into production workloads, a compelling pattern is emerging across scenarios and industries reliant on traditional simulation. Once computed, the output of traditional simulation can be used to train deep neural network (DNN) models that can then be evaluated in near real-time with the introduction of GPU acceleration.
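The pattern can be sketched in a few lines: label a set of inputs with the slow model once, fit a network to those labels, and from then on evaluate only the network. The example below is a toy under loud assumptions. `slow_model` is a hypothetical stand-in for an expensive pricer, and a tiny one-hidden-layer network trained by plain gradient descent on the CPU stands in for a real DNN trained on GPUs. It says nothing about Riskfuel's actual architecture or training procedure.

```python
import math
import random


def slow_model(x):
    """Hypothetical stand-in for an expensive pricer: a smooth, option-like curve."""
    return math.log1p(math.exp(4.0 * x)) / 4.0


def init_params(hidden, seed):
    rng = random.Random(seed)
    return {
        "w1": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "b1": [0.0] * hidden,
        "w2": [rng.uniform(-1.0, 1.0) for _ in range(hidden)],
        "b2": 0.0,
    }


def predict(p, x):
    hidden = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
    return sum(w * h for w, h in zip(p["w2"], hidden)) + p["b2"]


def mse(p, xs, ys):
    return sum((predict(p, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(p, xs, ys, epochs=800, lr=0.02):
    """Full-batch gradient descent on mean squared error; updates p in place."""
    n = len(xs)
    size = len(p["w1"])
    for _ in range(epochs):
        gw1, gb1, gw2, gb2 = [0.0] * size, [0.0] * size, [0.0] * size, 0.0
        for x, y in zip(xs, ys):
            hidden = [math.tanh(w * x + b) for w, b in zip(p["w1"], p["b1"])]
            out = sum(w * h for w, h in zip(p["w2"], hidden)) + p["b2"]
            g = 2.0 * (out - y) / n  # gradient of the MSE w.r.t. this output
            gb2 += g
            for j in range(size):
                gw2[j] += g * hidden[j]
                gh = g * p["w2"][j] * (1.0 - hidden[j] ** 2)  # back through tanh
                gw1[j] += gh * x
                gb1[j] += gh
        for j in range(size):
            p["w1"][j] -= lr * gw1[j]
            p["b1"][j] -= lr * gb1[j]
            p["w2"][j] -= lr * gw2[j]
        p["b2"] -= lr * gb2
    return p


# Step 1: run the slow model once over the input domain to build training data.
xs = [-1.0 + 2.0 * i / 40 for i in range(41)]
ys = [slow_model(x) for x in xs]

# Step 2: fit the surrogate; afterwards only predict() is needed for valuation.
params = init_params(hidden=6, seed=0)
loss_before = mse(params, xs, ys)
train(params, xs, ys)
loss_after = mse(params, xs, ys)
print(f"MSE before training: {loss_before:.5f}, after: {loss_after:.5f}")
```

The expensive work moves into the one-off data generation and training steps; a valuation afterwards is a fixed handful of multiply-adds, which is the kind of workload GPUs batch well.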

We recently collaborated with Riskfuel, a startup developing fast derivatives models based on artificial intelligence (AI), to measure the performance gained by running a Riskfuel-accelerated model on the now generally available Azure ND40rs_v2 (NDv2-Series) Virtual Machine instance powered by NVIDIA GPUs against traditional CPU-driven methods.

Riskfuel is pioneering the use of deep neural networks to learn the complex pricing functions used to value OTC derivatives. The financial instrument chosen for our study was the foreign exchange barrier option.

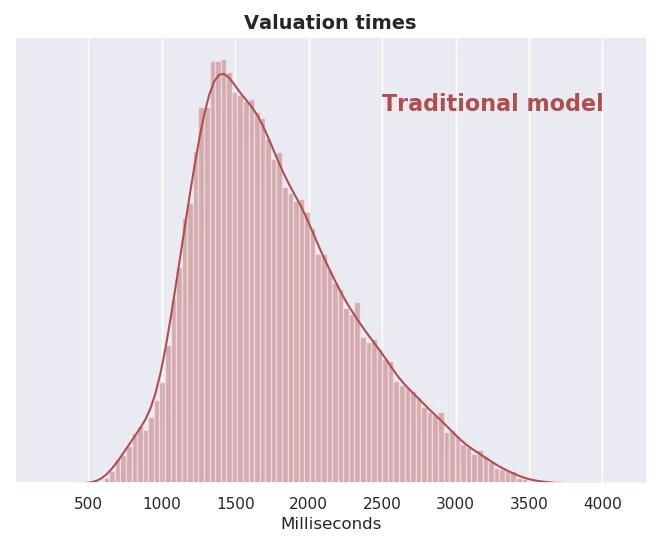

The first stage of this trial consisted of generating a large pool of samples to be used for training data. In this instance, we used conventional CPU-based workers to generate 100,000,000 training samples by repeatedly running the traditional model with inputs covering the entire domain to be approximated by the Riskfuel model. The traditional model took an average of 2250 milliseconds (ms) to generate each valuation. With the traditional model, the valuation time is dependent on the maturity of the trade.
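The sample-generation stage amounts to drawing inputs across the domain and recording the traditional model's output for each draw. The sketch below assumes hypothetical input ranges (the actual domain is not stated here) and uses the closed-form Garman-Kohlhagen vanilla FX call as a fast placeholder for the slow barrier model. The last helper estimates the compute budget implied by the figures above, assuming each valuation occupies one worker for the quoted average time.

```python
import math
import random

# Hypothetical input ranges for illustration only.
DOMAIN = {
    "spot": (0.8, 1.6),
    "strike": (0.8, 1.6),
    "vol": (0.05, 0.40),
    "r_dom": (0.0, 0.05),
    "r_for": (0.0, 0.05),
    "maturity": (0.05, 2.0),
}


def norm_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def reference_price(p):
    """Placeholder for the slow model: Garman-Kohlhagen vanilla FX call."""
    t = p["maturity"]
    sd = p["vol"] * math.sqrt(t)
    d1 = (math.log(p["spot"] / p["strike"])
          + (p["r_dom"] - p["r_for"] + 0.5 * p["vol"] ** 2) * t) / sd
    d2 = d1 - sd
    return (p["spot"] * math.exp(-p["r_for"] * t) * norm_cdf(d1)
            - p["strike"] * math.exp(-p["r_dom"] * t) * norm_cdf(d2))


def generate_samples(n, seed=0):
    """Draw n input points uniformly over DOMAIN and label each with a price."""
    rng = random.Random(seed)
    samples = []
    for _ in range(n):
        inputs = {name: rng.uniform(lo, hi) for name, (lo, hi) in DOMAIN.items()}
        samples.append((inputs, reference_price(inputs)))
    return samples


def cpu_hours(n_samples, ms_per_valuation):
    """Total worker time in hours if valuations run one after another."""
    return n_samples * ms_per_valuation / 1000.0 / 3600.0


samples = generate_samples(1000)
print(f"generated {len(samples)} labelled samples")
print(f"estimated budget: {cpu_hours(100_000_000, 2250):,.0f} CPU-hours")
```

Under that assumption, 100,000,000 samples at 2250 ms each come to 62,500 CPU-hours of work, which is only practical because the samples are independent and can be spread across many workers.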

The histogram in Figure 1 shows the distribution of valuation times for a traditional model:

Figure 1: Distribution of valuation times for traditional models.

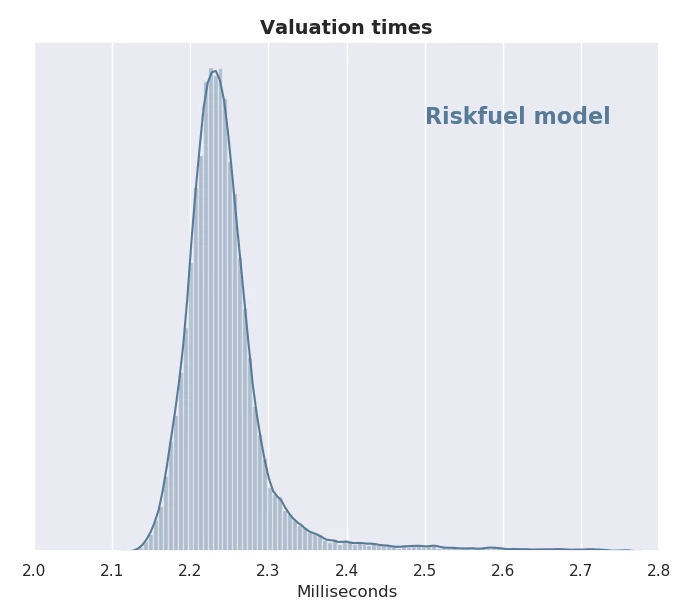

Once the Riskfuel model is trained, valuing individual trades is much faster with a mean under 3 ms, and is no longer dependent on maturity of the trade:

Figure 2: Riskfuel model demonstrating valuation times with a mean under 3 ms.

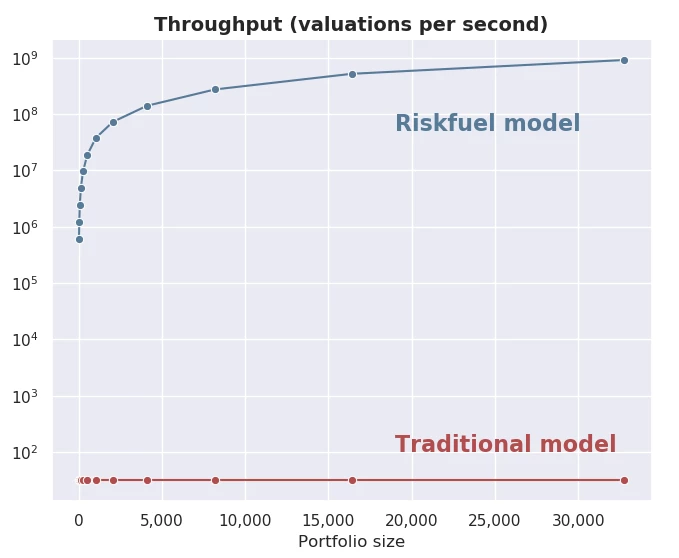

These results are for individual valuations and don’t use the massive parallelism that the Azure ND40rs_v2 Virtual Machine can deliver when saturated in a batch inferencing scenario. When called upon to value portfolios of trades, like those found in a typical trading book, the benefits are even greater. In our study, the combination of a Riskfuel-accelerated version of the foreign exchange barrier option model with an Azure ND40rs_v2 Virtual Machine showed a performance improvement of more than 20 million times over the traditional model.

Figure 3 shows the throughput, as measured in valuations per second, of the traditional model running on a non-accelerated Azure Virtual Machine versus the Riskfuel model running on an Azure ND40rs_v2 Virtual Machine (in blue):

Figure 3: Model comparison of traditional model running versus the Riskfuel model.

For portfolios with 32,768 trades, the throughput on an Azure ND40rs_v2 Virtual Machine is 915,000,000 valuations per second, whereas the traditional model running on CPU-based VMs has a throughput of just 32 valuations per second. This is a demonstrated improvement of more than 28,000,000x.
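The arithmetic behind that figure, and what it means for a single pass over the portfolio, can be checked directly. The sketch assumes one valuation per trade and that each quoted throughput is sustained for the whole pass; the variable names are illustrative.

```python
gpu_throughput = 915_000_000  # valuations per second on the ND40rs_v2 (quoted above)
cpu_throughput = 32           # valuations per second on CPU-based VMs (quoted above)
portfolio_size = 32_768       # trades in the portfolio

speedup = gpu_throughput / cpu_throughput      # 28,593,750
cpu_seconds = portfolio_size / cpu_throughput  # 1024 s, about 17 minutes
gpu_seconds = portfolio_size / gpu_throughput  # about 36 microseconds

print(f"speedup: {speedup:,.0f}x")
print(f"one pass on CPU: {cpu_seconds:,.0f} s")
print(f"one pass on GPU: {gpu_seconds * 1e6:.1f} microseconds")
```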

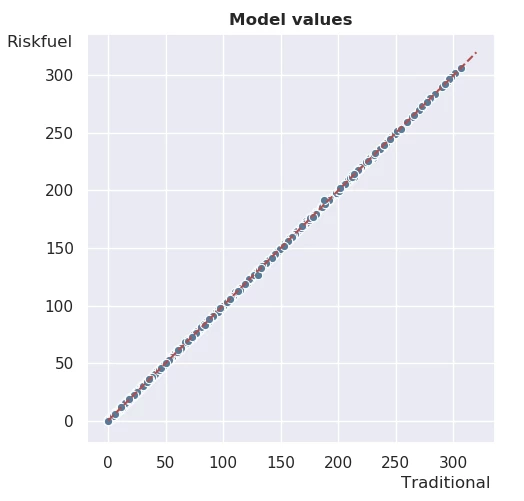

It is critical to point out here that the speedup resulting from the Riskfuel model does not sacrifice accuracy. In addition to being extremely fast, the Riskfuel model effectively matches the results generated by the traditional model, as shown in Figure 4:

Figure 4: Accuracy of Riskfuel model.

These results clearly demonstrate the potential of supplanting traditional on-premises high-performance computing (HPC) simulation workloads with a hybrid approach: run the traditional methods in the cloud to produce datasets, then use those datasets to train DNNs that can evaluate the same set of functions in near real-time.

The Azure ND40rs_v2 Virtual Machine is a new addition to the NVIDIA GPU-based family of Azure Virtual Machines. These instances are designed to meet the needs of the most demanding GPU-accelerated AI, machine learning, simulation, and HPC workloads. We chose the Azure ND40rs_v2 Virtual Machine to take full advantage of its massive floating-point performance, both for the highest batch-oriented performance in inference steps and for the greatest possible throughput in model training.

The Azure ND40rs_v2 Virtual Machine is powered by eight NVIDIA V100 Tensor Core GPUs, each with 32 GB of GPU memory, and with NVLink high-speed interconnects. When combined, these GPUs deliver one petaFLOPS of FP16 compute.

Riskfuel’s Founder and CEO, Ryan Ferguson, predicts the combination of Riskfuel-accelerated valuation models and NVIDIA GPU-powered VM instances on Azure will transform the OTC market:

“The current market volatility demonstrates the need for real-time valuation and risk management for OTC derivatives. The era of the nightly batch is ending. And it’s not just the blazing fast inferencing of the Azure ND40rs_v2 Virtual Machine that we value so much, but also the model training tasks as well. On this fast GPU instance, we have reduced our training time from 48 hours to under four! The reduced time to train the model coupled with on-demand availability maximizes the productivity of our AI engineering team.”

Scotiabank recently implemented Riskfuel models in its leading-edge derivatives platform, which is already live on Azure using NVIDIA GPU-powered Azure Virtual Machine instances. Karin Bergeron, Managing Director and Head of XVA Trading at Scotiabank, sees the benefits of Scotia’s new platform:

“By migrating to the cloud, we are able to spin up extra VMs if something requires some additional scenario analysis. Previously we didn’t have access to this sort of compute on demand. And obviously the performance improvements are very welcome. This access to compute on demand helps my team deliver better pricing to our customers.”

Additional resources

- Learn more about Azure NDv2-Series Virtual Machines.

- Explore Azure HPC.

- Learn more about Riskfuel solutions.