Azure Machine Learning service is the first major cloud ML service to support NVIDIA’s RAPIDS, a suite of software libraries for accelerating traditional machine learning pipelines with NVIDIA GPUs.

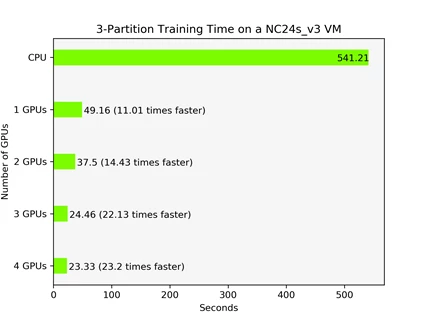

Just as GPUs revolutionized deep learning through unprecedented training and inferencing performance, RAPIDS enables traditional machine learning practitioners to unlock game-changing performance with GPUs. With RAPIDS on Azure Machine Learning service, users can accelerate the entire machine learning pipeline, including data processing, training and inferencing, with GPUs from the NC_v3, NC_v2, ND or ND_v2 families. Users can unlock performance gains of more than 20X (with 4 GPUs), slashing training times from hours to minutes and dramatically reducing time-to-insight.

The following figure compares training times on CPU and GPUs (Azure NC24s_v3) for a gradient boosted decision tree model using XGBoost. As shown below, performance gains increase with the number of GPUs. In the Jupyter notebook linked below, we’ll walk through how to reproduce these results step by step using RAPIDS on Azure Machine Learning service.
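As a rough illustration of what GPU training of a gradient boosted decision tree model with XGBoost can look like, here is a minimal sketch. It is not taken from the notebook: the helper names, the objective, and the hyperparameter values are assumptions chosen for illustration. The `device` and `tree_method` settings follow XGBoost 2.x; older releases selected the GPU with `tree_method="gpu_hist"` instead.

```python
def gpu_xgboost_params(max_depth=8, learning_rate=0.1):
    """Build XGBoost parameters that request GPU histogram training."""
    return {
        "objective": "binary:logistic",  # assumed task; the post does not name one
        "tree_method": "hist",           # histogram-based tree construction
        "device": "cuda",                # XGBoost 2.x; older releases used tree_method="gpu_hist"
        "max_depth": max_depth,          # illustrative value, not from the benchmark
        "learning_rate": learning_rate,  # illustrative value, not from the benchmark
    }


def train_on_gpu(features, labels, num_boost_round=100):
    """Train a booster on a single GPU; requires xgboost and a CUDA device."""
    import xgboost as xgb  # imported lazily so the helper above works without it

    dtrain = xgb.DMatrix(features, label=labels)
    return xgb.train(gpu_xgboost_params(), dtrain, num_boost_round=num_boost_round)
```

This sketch covers a single GPU only. Multi-GPU runs such as the 4-GPU case would typically go through XGBoost's Dask interface, which is left out here.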

How to use RAPIDS on Azure Machine Learning service

Everything you need to use RAPIDS on Azure Machine Learning service can be found on GitHub.

The above repository contains a master Jupyter notebook that uses the Azure Machine Learning service SDK to automatically create a resource group, workspace, compute cluster, and preconfigured environment for using RAPIDS. The notebook also demonstrates a typical ETL and machine learning workflow to train a gradient boosted decision tree model. Users are free to experiment with different data sizes and numbers of GPUs to verify RAPIDS multi-GPU support.
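The provisioning steps described above might look roughly like the following sketch, which uses the `azureml-core` Python SDK. This is not the notebook's code: `ClusterSpec`, `provision`, the cluster name, and the node counts are hypothetical, and the subscription, resource group, workspace name, and region must be supplied by the caller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSpec:
    """Hypothetical settings for a GPU compute cluster."""
    name: str = "rapids-cluster"        # assumed name, not from the notebook
    vm_size: str = "Standard_NC24s_v3"  # the VM size used in the post's comparison
    min_nodes: int = 0                  # scale to zero when idle
    max_nodes: int = 1                  # assumed upper bound


def provision(subscription_id, resource_group, workspace_name, location, spec):
    """Create (or reuse) a workspace, then create a GPU cluster in it."""
    # Imported lazily so ClusterSpec can be used without the SDK installed.
    from azureml.core import Workspace
    from azureml.core.compute import AmlCompute, ComputeTarget

    ws = Workspace.create(
        name=workspace_name,
        subscription_id=subscription_id,
        resource_group=resource_group,
        create_resource_group=True,  # also creates the resource group if missing
        location=location,
        exist_ok=True,               # reuse the workspace if it already exists
    )
    config = AmlCompute.provisioning_configuration(
        vm_size=spec.vm_size,
        min_nodes=spec.min_nodes,
        max_nodes=spec.max_nodes,
    )
    target = ComputeTarget.create(ws, spec.name, config)
    target.wait_for_completion(show_output=True)
    return ws, target
```

Calling `provision(...)` requires an Azure subscription with quota for the chosen GPU VM family. Setting `min_nodes=0` lets the cluster release its nodes when no job is running.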

About RAPIDS

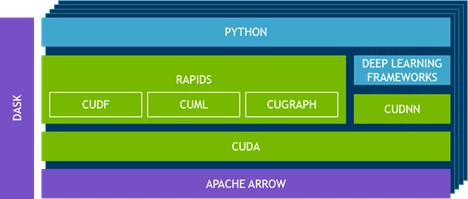

RAPIDS uses NVIDIA CUDA for high-performance GPU execution, exposing GPU parallelism and high memory bandwidth through a user-friendly Python interface. It includes a dataframe library called cuDF, which will be familiar to Pandas users, as well as an ML library called cuML that provides GPU versions of many of the machine learning algorithms available in Scikit-learn. And with Dask, RAPIDS can take advantage of multi-node, multi-GPU configurations on Azure.
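To give a sense of how closely cuDF follows the Pandas interface, the sketch below runs the same group-by aggregation against whichever of the two libraries is installed. The helper names and the `passengers` and `fare` columns are made up for illustration, and only a small part of the API is exercised, so this should not be read as a claim that every Pandas call carries over unchanged.

```python
import importlib
import importlib.util


def load_dataframe_module():
    """Return cudf when it is installed, otherwise fall back to pandas."""
    for name in ("cudf", "pandas"):
        if importlib.util.find_spec(name) is not None:
            return importlib.import_module(name)
    raise ImportError("neither cudf nor pandas is installed")


def mean_fare_by_passengers(xdf, rows):
    """Group rows by passenger count and average the fare.

    `xdf` is the dataframe module (cudf or pandas); the calls used
    here are spelled the same way in both libraries.
    """
    df = xdf.DataFrame(rows)
    return df.groupby("passengers")["fare"].mean()


if __name__ == "__main__":
    rows = {"passengers": [1, 1, 2], "fare": [8.0, 12.0, 20.0]}  # toy data
    print(mean_fare_by_passengers(load_dataframe_module(), rows))
```

On a machine with a supported NVIDIA GPU and cuDF installed, the aggregation runs on the GPU. Elsewhere it falls back to Pandas on the CPU.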

Accelerating machine learning for all

With the support for RAPIDS on Azure Machine Learning service, we are continuing our commitment to an open and interoperable ecosystem where developers and data scientists can use the tools and frameworks of their choice. Azure Machine Learning service users will be able to use RAPIDS in the same way they currently use other machine learning frameworks, and they will be able to use RAPIDS in conjunction with Pandas, Scikit-learn, PyTorch, TensorFlow, etc. We strongly encourage the community to try it out and look forward to your feedback!