A few months ago, I had the opportunity to speak to some of our partners about what we’re bringing to market with Azure AI. It was a fast-paced hour, and the session was nearly done when someone raised their hand. They acknowledged that there was no question about the business value and opportunities ahead, but what they really wanted to hear more about was Responsible AI and AI safety.

This stays with me because it shows how top of mind this topic is as we move further into the era of AI, with the launch of powerful tools that advance humankind’s critical thinking and creative expression. That partner’s question reminded me how important it is for AI systems to be responsible by design. This means the development and deployment of AI must be guided by a responsible framework from the very beginning. It can’t be an afterthought.

Our investment in responsible AI innovation goes beyond principles, beliefs, and best practices. We also invest heavily in purpose-built tools that support responsible AI across our products. Through Azure AI tooling, we can help scientists and developers alike build, evaluate, deploy, and monitor their applications for responsible AI outcomes like fairness, privacy, and explainability. We know what a privilege it is for customers to place their trust in Microsoft.

Brad Smith authored a blog about this transformative moment we find ourselves in as AI models continue to advance. In it, he described Microsoft’s investments and journey as a company to build a responsible AI foundation. This began in earnest in 2018 with the creation of our AI principles, grounded in transparency and accountability. We quickly realized that principles are essential, but they aren’t self-executing. A colleague described it best by saying “principles don’t write code.” This is why we operationalize those principles with tools and best practices and help our customers do the same. In 2022, we shared our internal playbook for responsible AI development, our Responsible AI Standard, to invite public feedback and provide a framework that could help others get started.

I’m proud of the work Microsoft has done in this space. As the landscape of AI evolves rapidly and new technologies emerge, safe and responsible AI will continue to be a top priority. To echo Brad, we’ll approach whatever comes next with humility, listening, and sharing our learning along the way.

In fact, our recent commitment to customers who use our first-party copilots is a direct reflection of that. Microsoft will stand behind our customers legally if they face copyright infringement lawsuits arising from their use of our Copilots, putting our commitment to responsible AI innovation into action.

In this month’s blog, I’ll focus on a few things. First, I’ll cover how we’re helping customers operationalize responsible AI with purpose-built tools, including a few products and updates designed to give customers the confidence to innovate safely on our trusted platform. I’ll also share the latest on what we’re delivering to help organizations prepare their data estates to succeed in the era of AI. Finally, I’ll highlight some fresh stories of organizations putting Azure to work. Let’s dive in!

Availability of Azure AI Content Safety delivers better online experiences

One of my favorite innovations that reflects the constant collaboration between research, policy, and engineering teams at Microsoft is Azure AI Content Safety. This month we announced the availability of Azure AI Content Safety, a state-of-the-art AI system to help keep user-generated and AI-generated content safe, ultimately creating better online experiences for everyone.

The blog shares the story of how South Australia’s Department of Education is using this solution to protect students from harmful or inappropriate content in EdChat, its new AI-powered chatbot. The chatbot has built-in safety features that block inappropriate queries and harmful responses, allowing teachers to focus on its educational benefits rather than on policing its use. It’s fantastic to see this solution at work helping create safer online environments!
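EdChat’s actual implementation isn’t described here. The pattern in the paragraph above screens both the incoming query and the generated response, and the Python below sketches it under stated assumptions. It does not call the Azure AI Content Safety SDK. Instead, `classify` is a hypothetical function that returns a severity score per harm category, and `generate` is a hypothetical stand-in for the language model. The category names, the 0-to-6 scale, and the threshold of 4 are illustrative assumptions.

```python
from typing import Callable, Dict

# Hypothetical shape of a classifier result: harm category -> severity score.
Scores = Dict[str, int]


def is_allowed(scores: Scores, threshold: int = 4) -> bool:
    """Allow text only if every category's severity is below the threshold."""
    return all(level < threshold for level in scores.values())


def guarded_chat(
    query: str,
    classify: Callable[[str], Scores],
    generate: Callable[[str], str],
    threshold: int = 4,
) -> str:
    """Screen the user's query, generate a reply, then screen the reply."""
    # Step 1: block inappropriate queries before they reach the model.
    if not is_allowed(classify(query), threshold):
        return "Sorry, I can't help with that request."
    # Step 2: block harmful responses before they reach the user.
    response = generate(query)
    if not is_allowed(classify(response), threshold):
        return "Sorry, I can't share that response."
    return response


# Hypothetical stand-ins for a real safety classifier and language model.
def stub_classify(text: str) -> Scores:
    return {"Hate": 0, "Violence": 6 if "fight" in text.lower() else 0}


def stub_generate(query: str) -> str:
    return f"Here is some help with: {query}"


print(guarded_chat("photosynthesis", stub_classify, stub_generate))
```

The two checks are deliberately independent. A harmless query can still produce a harmful answer, so screening only the input would not be enough.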

As organizations look to deepen their generative AI investments, many are concerned about trust, data privacy, and the safety and security of AI models and systems. That’s where Azure AI can help. With Azure AI, organizations can build the next generation of AI applications safely by seamlessly integrating responsible AI tools and practices developed through years of AI research, policy, and engineering.

All of this is built on Azure’s enterprise-grade foundation for data privacy, security, and compliance, so organizations can confidently scale AI while managing risk and reinforcing transparency. Microsoft even relies on Azure AI Content Safety to help protect users of our own AI-powered products. It’s the same technology helping us responsibly release experiences based on large language models in products like GitHub Copilot, Microsoft Copilot, and Azure OpenAI Service, all of which have safety systems built in.

New model availability and fine-tuning for Azure OpenAI Service models

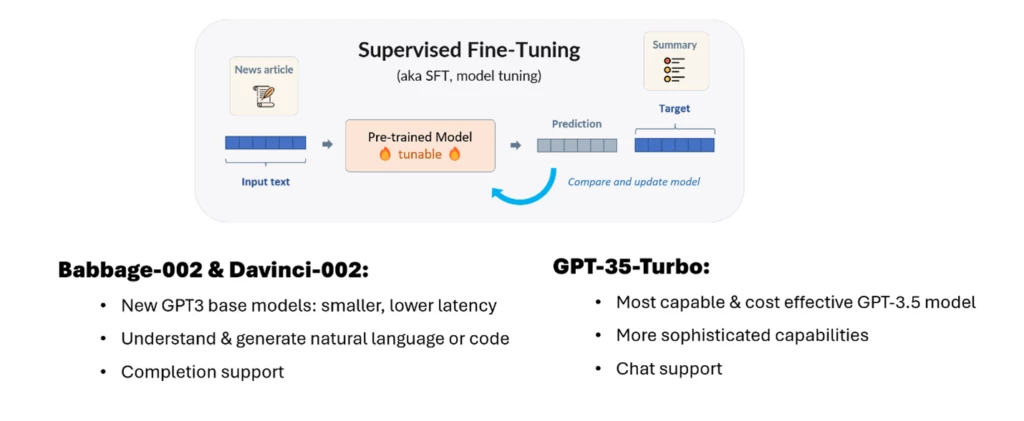

This month, we announced the general availability of two new base inference models, Babbage-002 and Davinci-002, along with a public preview of fine-tuning for three models: Babbage-002, Davinci-002, and GPT-3.5-Turbo. Fine-tuning is one of the methods available to developers and data scientists who want to customize large language models for specific tasks.

Since we launched Azure OpenAI Service, it’s been amazing to see generative AI put to work in so many new applications! Now you can customize your favorite OpenAI models for completion use cases using the latest base inference models, tailor them to your specific challenges, and deploy the resulting custom models easily and securely on Azure.

Unlike Retrieval Augmented Generation (RAG) and prompt engineering, which work by adding information and instructions to the prompt, fine-tuning works by modifying the large language model itself.
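The following sketch makes the contrast concrete from the RAG side. Retrieval changes only the text sent to the model, and the model’s weights stay untouched. The word-overlap retriever below is a deliberately naive, hypothetical stand-in. Production RAG systems typically use a search index or vector embeddings instead. The documents are made up for illustration.

```python
import re
from typing import List, Set


def tokenize(text: str) -> Set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: List[str], k: int = 1) -> List[str]:
    """Return the k documents sharing the most words with the question."""
    q_words = tokenize(question)
    ranked = sorted(
        documents, key=lambda doc: len(q_words & tokenize(doc)), reverse=True
    )
    return ranked[:k]


def build_rag_prompt(question: str, documents: List[str], k: int = 1) -> str:
    """Augment the prompt with retrieved context; the model itself is unchanged."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}\n"
        "Answer:"
    )


docs = [
    "Returns are accepted within 30 days of purchase.",
    "Our store opens at 9 a.m. on weekdays.",
]
print(build_rag_prompt("When does the store open?", docs))
```

Fine-tuning would instead bake knowledge or behavior into the model through additional training. That is why it takes more effort to set up than editing a prompt.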

With Azure OpenAI Service and Azure Machine Learning, you can use supervised fine-tuning, which lets you provide custom data (prompt/completion pairs or conversational chat examples, depending on the model) to teach the base model new skills.
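The two data shapes mentioned above are usually supplied as JSON Lines (JSONL) files, with one training example per line. The sketch below builds records in a prompt/completion shape for base models such as Babbage-002 and Davinci-002, and in a chat `messages` shape for GPT-3.5-Turbo. It assumes the field names documented for Azure OpenAI fine-tuning at the time of writing, so verify them against the current documentation before uploading. The support-ticket examples are invented for illustration.

```python
import json
from typing import List


def completion_record(prompt: str, completion: str) -> str:
    """One JSONL line in prompt/completion format (e.g. babbage-002, davinci-002)."""
    return json.dumps({"prompt": prompt, "completion": completion})


def chat_record(system: str, user: str, assistant: str) -> str:
    """One JSONL line in conversational chat format (e.g. GPT-3.5-Turbo)."""
    return json.dumps(
        {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": user},
                {"role": "assistant", "content": assistant},
            ]
        }
    )


def to_jsonl(records: List[str]) -> str:
    """Join records into JSONL text: one JSON object per line."""
    return "\n".join(records) + "\n"


# Made-up training examples for a hypothetical support-ticket classifier.
completion_data = to_jsonl([
    completion_record("Ticket: My invoice total is wrong.\nCategory:", "billing"),
    completion_record("Ticket: The app crashes on launch.\nCategory:", "technical"),
])
chat_data = to_jsonl([
    chat_record(
        "You classify support tickets as billing or technical.",
        "My invoice total is wrong.",
        "billing",
    ),
])
print(completion_data)
```

`json.dumps` escapes embedded newlines, so each example stays on a single line, as the JSONL format requires.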

We suggest that companies begin with prompt engineering or RAG before they embark on fine-tuning. It’s the quickest way to get started, and we make it simple with tools like Prompt Flow and On Your Data. Starting this way also gives developers a baseline to compare a fine-tuned model against, so their effort is not wasted.
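Comparing against a baseline can be as simple as scoring both approaches on the same held-out examples. The sketch below uses exact-match accuracy as a stand-in metric, which is an assumption on our part; real evaluations often need task-specific metrics. The `baseline` and `candidate` functions are hypothetical placeholders for a prompt-engineered setup and a fine-tuned model.

```python
from typing import Callable, List, Tuple

# An evaluation example: (model input, expected output).
Example = Tuple[str, str]
Model = Callable[[str], str]


def exact_match_accuracy(model: Model, eval_set: List[Example]) -> float:
    """Fraction of examples where the output matches the expected answer exactly."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    hits = sum(1 for x, expected in eval_set if model(x).strip() == expected.strip())
    return hits / len(eval_set)


def gain_over_baseline(baseline: Model, candidate: Model, eval_set: List[Example]) -> float:
    """Positive if the candidate (e.g. a fine-tuned model) beats the baseline."""
    return exact_match_accuracy(candidate, eval_set) - exact_match_accuracy(baseline, eval_set)


# Hypothetical stand-ins for a prompt-engineered baseline and a fine-tuned model.
def baseline(ticket: str) -> str:
    return "billing"


def candidate(ticket: str) -> str:
    return "billing" if "invoice" in ticket else "technical"


eval_set = [
    ("My invoice total is wrong.", "billing"),
    ("The app crashes on launch.", "technical"),
]
print(gain_over_baseline(baseline, candidate, eval_set))
```

If the gain is small or negative, the extra cost of fine-tuning may not be justified for that task.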

Recent news from Azure Data and AI

We’re constantly rolling out new solutions to help customers maximize their data and successfully put AI to work for their businesses. Here are some product announcements from the past month:

- Public Preview of the new Synapse Data Science experience in Microsoft Fabric. We plan to release even more new experiences going forward to help you build data science solutions as part of your analytics workflows.

- Azure Cache for Redis recently introduced Vector Similarity Search, which enables developers to build generative AI-based applications using Azure Cache for Redis Enterprise as a robust, high-performance vector database. You can then use Azure AI Content Safety to screen the results and filter out harmful content, helping ensure safer experiences for users.

- Data Activator is now in public preview for all Microsoft Fabric users. This Fabric experience lets you drive automatic alerts and actions from your Fabric data, eliminating the need for constant manual monitoring of dashboards.
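The Vector Similarity Search item above depends on a simple idea. Items are stored as numeric vectors (embeddings), and a query returns the stored vectors closest to the query vector. The pure-Python sketch below illustrates that idea with cosine similarity. It is not the Azure Cache for Redis API, which performs this ranking server-side over indexed embeddings. The `keep` predicate is a hypothetical hook standing in for a post-retrieval safety filter, and the 2-D vectors are toy data.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(
    query: Sequence[float],
    index: Dict[str, Sequence[float]],
    k: int = 2,
    keep: Callable[[str], bool] = lambda doc_id: True,
) -> List[Tuple[str, float]]:
    """Rank indexed vectors by similarity to the query, dropping any that fail `keep`."""
    scored = [
        (doc_id, cosine_similarity(query, vec))
        for doc_id, vec in index.items()
        if keep(doc_id)
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 2-D "embeddings"; real embeddings have hundreds or thousands of dimensions.
index = {"cat": [1.0, 0.0], "kitten": [0.9, 0.1], "car": [0.0, 1.0]}
print(top_k([1.0, 0.0], index))
```

This brute-force scan compares the query with every stored vector. Dedicated vector databases use indexes to avoid that cost at scale.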

Limitless innovation with Azure Data and AI

Customers and partners are experiencing the transformative power of AI

One of my favorite parts of this job is getting to see how businesses are using our data and AI solutions to solve business challenges and real-world problems. Seeing an idea go from pure possibility to a real-world solution never gets old.

With the AI safety tools and principles we’ve infused into Azure AI, businesses can move forward with confidence that whatever they build is done safely and responsibly. This means the innovation potential for any organization is truly limitless. Here are a few recent stories showing what’s possible.

For any pet parents out there, the MetLife Pet app offers a one-stop shop for your pet’s medical care needs, including records and a digital library full of care information. The app uses Azure AI services to apply advanced machine learning that automatically extracts key text from documents, making it easier than ever to access your pet’s health information.

HEINEKEN has begun using Azure OpenAI Service, with its built-in ChatGPT capabilities, and other Azure AI services to build chatbots for employees and improve existing business processes. Employees are excited about the technology’s potential and are even suggesting new use cases that the company plans to roll out over time.

Consultants at Arthur D. Little turned to Azure AI to build an internal, generative AI-powered solution for searching across vast amounts of content in complex document formats. Using natural language processing from Azure AI Language and Azure OpenAI Service, along with the advanced information retrieval technology in Azure Cognitive Search, the firm can now transform difficult formats into content that is immediately human-readable and searchable. For example, PowerPoint decks of 100-plus slides with fragmented text and images can now be searched and read like any other document.

SWM is the municipal utility company serving Munich, Germany—and it relies on Azure IoT, AI, and big data analysis to drive every aspect of the city’s energy, heating, and mobility transition forward more sustainably. The scalability of the Azure cloud platform removes all limits when it comes to using big data.

Generative AI has quickly become a powerful tool for businesses to streamline tasks and enhance productivity. Check out this recent story of five Microsoft partners (Commerce.AI, Datadog, Modern Requirements, Atera, and SymphonyAI) that are powering their own customers’ transformations with generative AI. Microsoft’s layered approach to these generative models is guided by our AI Principles to help ensure that organizations build responsibly and comply with the Azure OpenAI Code of Conduct.

Opportunities to enhance your AI skills and expertise

New learning paths for business decision makers are available from the Azure Skilling team for you to hone your skills with Microsoft’s AI technologies and ensure you’re ahead of the curve as AI reshapes the business landscape. We’re also helping leaders from the Healthcare and Financial Services industries learn more about how to apply AI in their everyday work. Check out the new learning material on knowledge mining and developing generative AI solutions with Azure OpenAI Service.

The Azure AI and Azure Cosmos DB University Hackathon kicked off this month. The hackathon is a call to students worldwide to reimagine the future of education using Azure AI and Azure Databases. Read the blog post to learn more and register.

If you’re already using generative AI and want to learn more, or if you haven’t started yet and aren’t sure where to begin, I have a new resource for you: 25 tips to help you unlock the potential of generative AI through better prompts. These tips help you make your input more specific so that language models like ChatGPT return more accurate and relevant results.

Just like in real life, HOW you ask for something can limit what you get in response, so it’s important to get the inputs right. Whether you’re conducting market research, sourcing ideas for your child’s Halloween costume, or (my personal favorite) creating marketing narratives, these prompt tips will help you get better results.
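The 25 tips themselves aren’t reproduced here. The sketch below shows the general principle of being specific. A vague request like "Write a Halloween costume idea." leaves the model guessing about audience, format, and limits, while a structured prompt states them explicitly. The `build_prompt` helper and its field names are a hypothetical structure chosen for illustration. They are not taken from the tips or from any Microsoft tool.

```python
from typing import List, Optional


def build_prompt(
    task: str,
    audience: str,
    output_format: str,
    constraints: Optional[List[str]] = None,
    context: str = "",
) -> str:
    """Assemble a prompt that states the task, audience, format, and limits explicitly."""
    lines = [f"Task: {task}", f"Audience: {audience}", f"Output format: {output_format}"]
    if context:
        lines.append(f"Context: {context}")
    for constraint in constraints or []:
        lines.append(f"Constraint: {constraint}")
    return "\n".join(lines)


vague = "Write a Halloween costume idea."
specific = build_prompt(
    task="Suggest Halloween costume ideas",
    audience="a seven-year-old who loves dinosaurs",
    output_format="a numbered list of three ideas, one sentence each",
    constraints=["Use items most households already have"],
)
print(specific)
```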

Embrace the future of data and AI with upcoming events

PASS Data Community Summit 2023 is just around the corner, from November 14, 2023, through November 17, 2023. This is an opportunity to connect, share, and learn with your peers and industry thought leaders—and to celebrate all things data! The full schedule is now live so you can register and start planning your Summit week.

I hope you’ll join us for Microsoft Ignite 2023, which is also next month, November 14, 2023, through November 17, 2023. If you’re not headed to Seattle, be sure to register for the virtual experience. There’s so much in store for you to experience AI transformation in action!