Happy Birthday Kubernetes! In the short three years that Kubernetes has been around, it has become the industry standard for orchestration of containerized workloads. In Azure, we have spent the last three years helping customers run Kubernetes in the cloud. As much as Kubernetes simplifies the task of orchestration, there’s plenty of setup and management that needs to take place for you to take full advantage of Kubernetes. This is where Azure Kubernetes Service (AKS) comes in. With Microsoft’s unique knowledge of the requirements of an enterprise, and our heritage of empowering developers, this managed service takes care of many of the complexities and delivers the best Kubernetes experience in the cloud.

In this blog post, I will dig into the top scenarios that Azure customers are building on Azure Kubernetes Service. After that, we will blow out the candles and have some cake.

If you are new to AKS, check out the Azure Kubernetes Service page and this video to learn more.

Lift and shift to containers

Organizations typically want to move to the cloud quickly, and it is often not possible to rewrite applications to take full advantage of cloud-native features right from the beginning. Containerizing applications makes it much simpler to modernize your apps and move to the cloud with little friction while adding benefits such as CI/CD automation, autoscaling, security, monitoring, and much more. It also simplifies management at the data layer by taking advantage of Azure’s PaaS-based databases such as Azure Cosmos DB or Azure’s managed PostgreSQL service.

For example, I worked with a customer in the manufacturing industry who had many legacy Java applications sprawled throughout a high-cost datacenter. They were often unable to scale these applications to meet customer demand, and updates were cumbersome and unreliable. With Azure Kubernetes Service and containers, they were able to host many of these applications in a single managed service. This led to much higher reliability and the ability to ship new capabilities much more frequently.

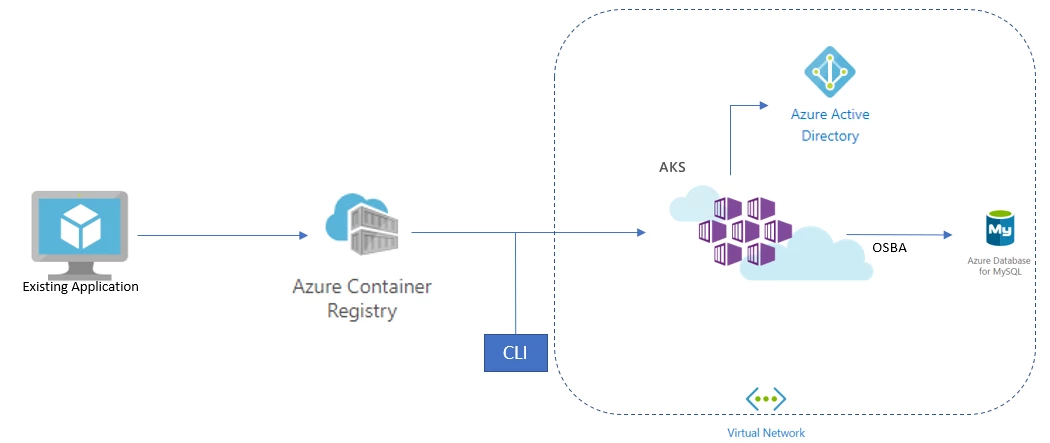

The diagram above illustrates a typical lift and shift approach involving AKS. To get started with AKS, check out the following tutorial.
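To make the lift and shift idea concrete, here is a minimal sketch of how one containerized legacy application might be described to Kubernetes. It is an illustration only: the application name, the registry and image, and port 8080 are hypothetical placeholders, not details from the customer example above.

```python
import json


def java_app_deployment(name: str, image: str, replicas: int = 3) -> dict:
    """Build a Kubernetes Deployment manifest for one containerized app."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # Scaling the app is a matter of changing this number.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            # Assumed port for the Java app; adjust as needed.
                            "ports": [{"containerPort": 8080}],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image, for illustration only.
    manifest = java_app_deployment(
        "orders-api", "myregistry.azurecr.io/orders-api:1.0.0"
    )
    print(json.dumps(manifest, indent=2))
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied to a cluster as-is.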

Microservices-based cloud-native applications

Microservices bring superpowers to many applications, with benefits such as:

- Independent deployments

- Improved scale and resource utilization per service

- Smaller, focused developer teams

- Code focused on business capabilities

Containers are the ideal technology to deliver microservices-based applications. Kubernetes provides the much-needed orchestration layer to help organizations manage these distributed microservices apps at scale.

What Azure uniquely brings to the table is its native integration with developer tools, and its flexibility to plug into the best tools and services coming out of the Kubernetes ecosystem. It offers a comprehensive, yet simple, end-to-end experience for seamless Kubernetes lifecycle management on Azure. Since microservices are polyglot in nature for language, processes, and tooling, Azure’s focus on developer and ops productivity attracts companies like Siemens, Varian, and Tryg to run microservices at scale with AKS. My customers running AKS also found the capabilities below helpful for development, deployment, and management of their microservices-based applications:

- Azure Dev Spaces support to iteratively develop, test, and debug microservices targeting AKS clusters.

- Automating external access to services with HTTP application routing.

- Using ACI Connector, a Virtual Kubelet implementation, to allow for fast scaling of services with Azure Container Instances.

- Simplifying CI/CD with Azure DevOps Projects and open source tools such as Helm, Draft, and Brigade, all backed by a secure, private Docker repository in Azure Container Registry.

- Supporting Service Mesh solutions such as Istio or Linkerd to easily add complex network routing, logging, TLS security, and distributed tracing to distributed apps.

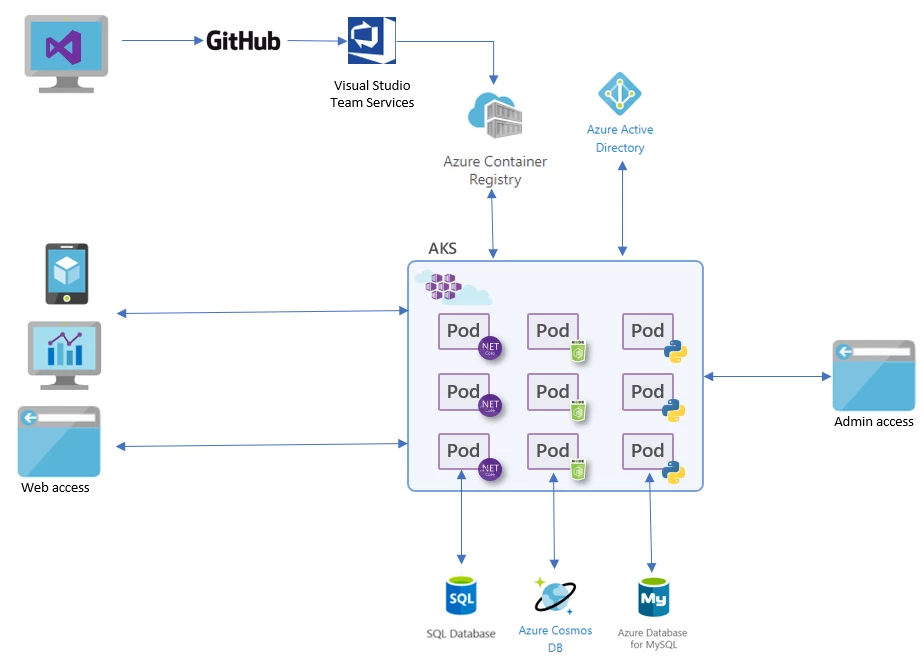

The image above shows how some of these elements fit into the overall scenario.

This blog has a good rundown of developing microservices with AKS. If you are looking to get more hands-on and build microservices with AKS, the following four-part blog tutorial provides good end-to-end coverage.

IoT Edge deployments

IoT solutions such as SmartCity, ConnectedCar, and ConnectedHealth have enabled many diverse applications connecting billions of devices to the cloud. With advances in computing power, these IoT devices are becoming more and more powerful. Of course, IoT application development poses some challenges as well. For instance, crafting, maintaining, and updating a robust IoT solution is time-consuming. Such solutions also face a higher degree of difficulty when it comes to maintaining cohesive security in a distributed environment. Device incompatibility with existing infrastructure and challenges in scaling further compound IoT solution development.

Azure provides a robust set of capabilities to address these IoT challenges. More specifically, Azure IoT Edge was created to help customers run custom business logic and cloud analytics on edge devices so that the focus of the devices can be on business insights instead of data management.

At Azure, we are seeing customers utilize the power of AKS to bring containers and orchestration to help manage this IoT Edge layer. Customers can combine AKS with the IoT Edge connector, a Virtual Kubelet implementation, to help provide:

- Consistency between cloud and edge software configuration.

- Identical deployments across multiple IoT hubs.

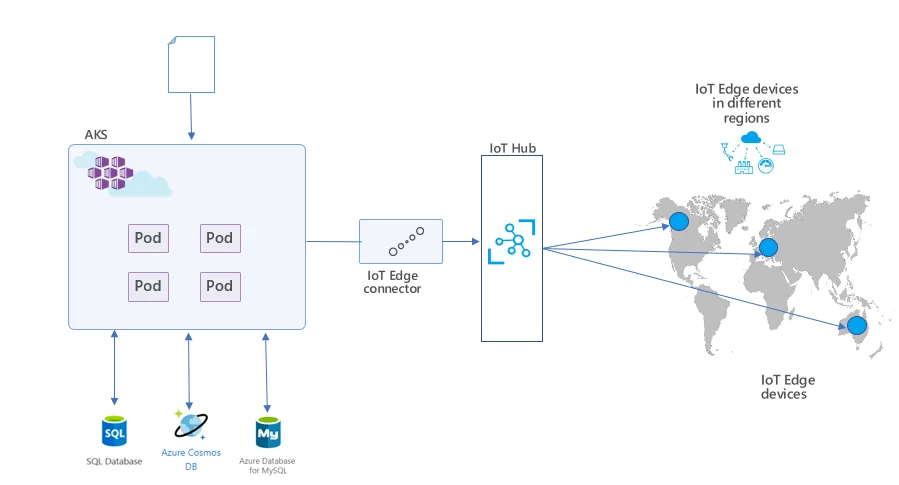

With AKS and the IoT Edge connector, the configuration can be defined in a Kubernetes manifest and then simply and reliably deployed to all IoT devices at the edge with a single command. The simplicity of a single manifest to manage all IoT Hubs helps customers deliver and manage IoT applications at scale. For example, consider the challenges involved in deploying and managing devices across different regions. AKS, along with the IoT Hub and the IoT Edge connector, makes these deployments simple. The graphic above illustrates this IoT scenario involving AKS and the IoT Edge connector.

Check out this blog for more information on managing IoT Edge deployments with Kubernetes.

Machine learning at scale

Though machine learning is immensely powerful, using it in practice is by no means easy. Machine learning in practice often involves training and hosting models, which tends to require the data scientist to rework the code so that it runs in different environments and can be deployed at different scales. Additionally, once the model is running in production on large-scale clusters, lifecycle management becomes increasingly difficult. Configuration and deployment are often left to data scientists, which results in their time being consumed by infrastructure setup instead of data science itself.

AKS can help address these challenges in training and hosting ML models and in managing their lifecycle.

- For training, AKS can help ensure GPU resources, designed for compute-intensive, high-scale workloads, are available on demand and scaled down when not needed. This becomes critical when a group of data scientists are all working on various projects and require resources on very diverse schedules. This also allows for faster training cycles by enabling strategies such as distributed training and hyperparameter optimization.

- For hosting, AKS brings DevOps capabilities to machine learning models. These models can be upgraded more easily using the rolling upgrades capability, and strategies such as blue/green or canary deployments can be easily applied.

- Using containers also brings much higher consistency across test, dev, and production environments. Also, self-healing capabilities dramatically improve reliability of the execution.
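As a rough illustration of what a rolling upgrade means for a hosted model, the sketch below works out how many pods can exist and how many must stay available while a Kubernetes Deployment rolls from one version to the next. The function names are hypothetical helpers written for this post. The 25% defaults and the rounding rules (surge rounds up, unavailable rounds down) follow the documented behavior of a Deployment's `maxSurge` and `maxUnavailable` settings.

```python
def _resolve(value, replicas: int, round_up: bool) -> int:
    """Turn an absolute count or a percentage string into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        scaled = replicas * int(value[:-1])
        # Integer arithmetic avoids floating point rounding surprises.
        return -(-scaled // 100) if round_up else scaled // 100
    return int(value)


def rolling_update_bounds(replicas: int, max_surge="25%", max_unavailable="25%") -> dict:
    """Return the pod-count envelope Kubernetes keeps during a rolling update."""
    surge = _resolve(max_surge, replicas, round_up=True)
    unavailable = _resolve(max_unavailable, replicas, round_up=False)
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


if __name__ == "__main__":
    # A model served by 10 replicas with the default settings.
    print(rolling_update_bounds(10))
    # -> {'max_total_pods': 13, 'min_available_pods': 8}
```

With 10 replicas, at most 13 pods run at once and at least 8 keep serving traffic, which is why the upgrade can proceed without taking the model offline.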

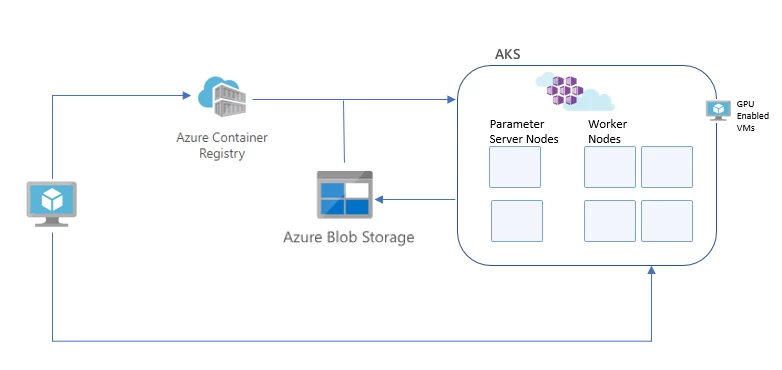

A possible scenario involving AKS for machine learning models is shown in the image above.

Learn more about running AKS with GPU-enabled VMs for compute-intensive, high-scale workloads such as machine learning.
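For a sense of what requesting a GPU looks like, here is a minimal sketch of a training job expressed as a Kubernetes Job manifest. It makes several assumptions: the job name and image are hypothetical, and the `nvidia.com/gpu` resource name assumes the NVIDIA device plugin is installed on the cluster's GPU nodes.

```python
import json


def gpu_training_job(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Kubernetes Job manifest that asks for GPUs for one training run."""
    container = {
        "name": name,
        "image": image,
        # Assumes the NVIDIA device plugin exposes GPUs under this name.
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
    }
    return {
        "apiVersion": "batch/v1",
        "kind": "Job",
        "metadata": {"name": name},
        "spec": {
            # Retry a failed training run at most twice.
            "backoffLimit": 2,
            "template": {
                "spec": {"containers": [container], "restartPolicy": "Never"}
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical job name and image, for illustration only.
    job = gpu_training_job("train-model", "myregistry.azurecr.io/trainer:latest")
    print(json.dumps(job, indent=2))
```

Because a Job runs to completion and then stops, the GPU is held only for the duration of the training run, which fits the on-demand usage pattern described above.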

What next?

Are you using or planning to use AKS to implement one of these scenarios? Or have a different scenario in mind where AKS can help address a key challenge? Connect with us to share and discuss your use cases.

To get you started, we also put together a simple demo to help you get familiar with Azure Kubernetes Service. Check it out. We also have a webinar coming up to walk you through the end-to-end Kubernetes development experience on Azure. Sign up to get started today.