Today’s enterprises are entering a new phase of AI adoption—one where trust, flexibility, and production readiness aren’t optional; they’re foundational. Microsoft has collaborated closely with xAI to bring Grok 4, their most advanced model, to Azure AI Foundry—delivering powerful reasoning within a platform designed for business-ready safety and control.

Grok 4 delivers exceptional performance. With a 128K-token context window, native tool use, and integrated web search, it pushes the boundaries of what’s possible in contextual reasoning and dynamic response generation. But performance alone isn’t enough. AI at the frontier must also be accountable. Over the last month, xAI and Microsoft have worked closely to enhance responsible design. The team has evaluated Grok 4 from a responsible AI perspective, putting it through a suite of safety tests and compliance checks. Azure AI Content Safety is on by default, adding another layer of protection for enterprise use. Please see the Foundry model card for more information about model safety.

In this blog, we’ll explore what makes Grok 4 stand out, how it compares to other frontier models, and how developers can access it via Azure AI Foundry.

Grok 4: Enhanced reasoning, expanded context, and real-time insights

Grok models were trained on xAI’s Colossus supercomputer, a massive compute infrastructure that xAI claims delivers a tenfold leap in training scale compared to Grok 3. Grok 4’s architecture marks a significant shift from its predecessors, emphasizing reinforcement learning (RL) and multi-agent systems. According to xAI, the model prioritizes reasoning over traditional pre-training, with a heavy focus on RL to refine its problem-solving capabilities.

Key architectural highlights include:

First-principles reasoning: “think mode”

One of Grok 4’s headline features is its first-principles reasoning ability. Essentially, the model tries to “think” like a scientist or detective, breaking problems down step by step. Instead of just blurting out an answer, Grok 4 can work through the logic internally and refine its response. It shows strong proficiency in math (including competition-level problems), science, and humanities questions. Early users have noted that it handles logic puzzles and nuanced reasoning better than some incumbent models, often finding correct answers where others get confused. Put simply, Grok 4 doesn’t just recall information; it actively reasons through problems. This focus on logical consistency makes it especially attractive if your use case requires step-by-step answers (think of research analysis, tutoring, or complex troubleshooting scenarios).

Example prompt: Explain how you would generate electricity on Mars if you had no existing infrastructure. Start from first principles: what are the fundamental resources, constraints, and physical laws you would use?

Extended context window

Perhaps one of Grok 4’s most impressive technical feats is its handling of extremely large contexts. The model is built to process and remember massive amounts of text in one go. In practical terms, this means Grok 4 can ingest extensive documents, lengthy research papers, or even a large codebase, and then reason about them without needing to truncate or forget earlier parts. This is useful for use cases like:

- Document analysis: You could feed in hundreds of pages of a document and ask Grok to summarize, find inconsistencies, or answer specific questions. Compared to other models, Grok 4 is far less likely to miss details simply because it ran out of context window.

- Research and academia: Load an entire academic journal issue or a very long historical text and have Grok analyze it or answer questions across the whole text. It could, for example, take in all of Shakespeare’s plays and answer a question that requires connecting info from multiple plays.

- Code repositories: Developers could input an entire code repository or multiple files (up to millions of characters of code) and ask Grok 4 to find where a certain function is defined, or to detect bugs across the codebase. This is huge for understanding large legacy projects.

xAI has claimed that this is not just “memory” but “smart memory.” Grok can intelligently compress or prioritize information in very long inputs, remembering the crucial pieces more strongly. For the end user or developer, the takeaway is: Grok 4 can handle very large input texts in one shot. This reduces the need to chop up documents or code and manage context fragments manually. You can throw a ton of information at it and it can keep the whole thing “in mind” as it responds.

Example prompt: Read this Shakespeare play and find my password (password is buried in the long context text).
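Before sending a very long document in one shot, it can help to estimate whether it is likely to fit. The snippet below is a minimal sketch, not an official tokenizer: it uses the 128K-token figure quoted earlier in this post and assumes roughly four characters per token, which is only a rule of thumb for English text. The function names and the reserved-output budget are illustrative.

```python
# Rough pre-flight check: does a document plausibly fit in the context window?
CONTEXT_WINDOW_TOKENS = 128_000  # figure quoted earlier in this post
CHARS_PER_TOKEN = 4              # assumption: rough average for English text


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Return a crude token estimate (ceiling of characters / chars_per_token)."""
    return -(-len(text) // chars_per_token)


def fits_in_context(text: str, reserved_for_output: int = 4_000) -> bool:
    """True if the estimated prompt size still leaves room for the reply."""
    return estimate_tokens(text) + reserved_for_output <= CONTEXT_WINDOW_TOKENS
```

For exact counts you would need the model’s actual tokenizer; treat this heuristic as a quick filter only.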

Data-aware responses and real-time insights

Another strength of Grok 4 is how it can integrate external data sources and trending information into its answers—effectively acting as a data analyst or real-time researcher when needed. It understands that sometimes the best answer needs to come from outside its training data, and it has mechanisms to retrieve and incorporate that external data. It turns the chatbot into more of an autonomous research assistant. You ask a question, it might go read a few things online, and come back with an answer that’s enriched by real data. Of course, caution is needed—live data can sometimes be incorrect, or the model might pick up on biased sources; one should verify critical outputs.

Example prompt: Check the latest news on global AI regulations (past 48 hours).

- Summarize the top 3 developments.

- Highlight which regions or governments are driving the changes.

- Explain what impact these updates could have on companies deploying foundation models.

- Provide the sources you referenced.

Stacking up Grok 4: How it performs against top models

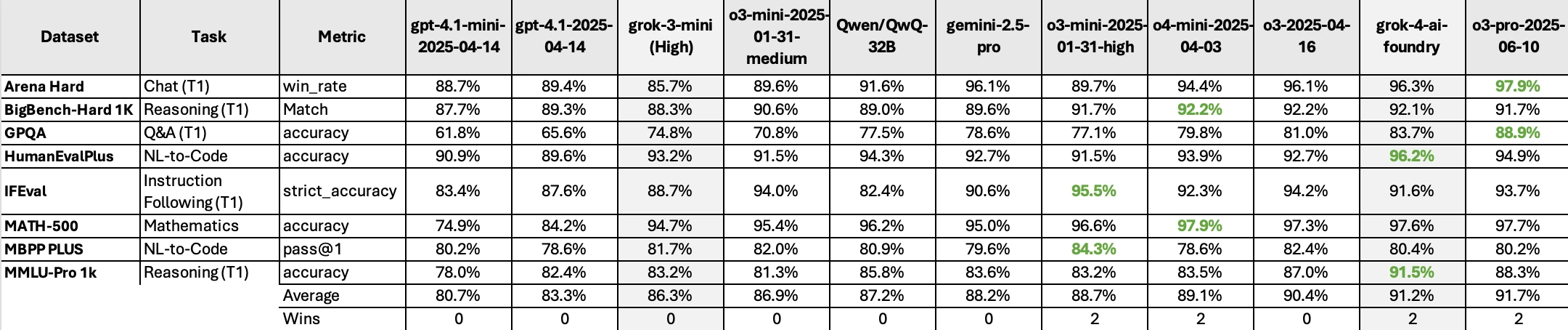

Grok 4 showcases impressive capabilities on high-complexity tasks. These benchmarks underscore its strengths in high-level reasoning, STEM disciplines, complex problem-solving, and industry-specific tasks. The numbers are calculated using our own internal Azure AI Foundry benchmarking service, which we use to compare models across a set of industry-standard benchmarks.

Family of Grok models

In addition to Grok 4, Azure AI Foundry already offers three other Grok models.

- Grok 4 Fast Reasoning is optimized for tasks requiring logical inference, problem-solving, and complex decision-making, making it ideal for analytical applications.

- Grok 4 Fast Non-Reasoning focuses on speed and efficiency for straightforward tasks like summarization or classification, without deep logical processing.

- Grok Code Fast 1 is tailored specifically for code generation and debugging, excelling in programming-related tasks across multiple languages.

While all three models prioritize speed, their core strengths differ: reasoning for logic-heavy tasks, non-reasoning for lightweight operations, and code for developer workflows.
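One way to act on this split is a small routing helper that picks a model per request. The sketch below is illustrative only: the task labels (`"reasoning"`, `"lightweight"`, `"code"`) are hypothetical, and the values are the display names used in this post, which may differ from the identifiers your deployment exposes.

```python
# Map a coarse task label to the Grok variant described above.
# Values are display names from this post, used here as placeholders.
MODEL_BY_TASK = {
    "reasoning": "Grok 4 Fast Reasoning",        # logic-heavy tasks
    "lightweight": "Grok 4 Fast Non-Reasoning",  # summarization, classification
    "code": "Grok Code Fast 1",                  # code generation and debugging
}


def pick_model(task_kind: str) -> str:
    """Return the variant for a task label, falling back to Grok 4."""
    return MODEL_BY_TASK.get(task_kind, "Grok 4")
```

Falling back to Grok 4 for unrecognized labels is a design choice for this sketch; a cost-sensitive application might prefer a faster variant as its default.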

Pricing including Azure AI Content Safety:

| Model | Deployment type | Input price ($/1M tokens) | Output price ($/1M tokens) |
| --- | --- | --- | --- |
| Grok 4 | Global Standard | $5.50 | $27.50 |
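To get a feel for what these rates mean per request, here is a minimal cost estimate using the Grok 4 Global Standard prices listed above. The function name and the sample token counts are illustrative, and actual billing may differ from this simple calculation.

```python
# Grok 4 Global Standard prices from the table above, in USD per 1M tokens.
INPUT_PRICE_PER_M = 5.5
OUTPUT_PRICE_PER_M = 27.5


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request from its input and output token counts."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# Example: a 100,000-token prompt with a 2,000-token answer.
cost = estimate_cost_usd(100_000, 2_000)
print(f"${cost:.3f}")  # prints $0.605
```

Because output tokens cost five times as much as input tokens at these rates, long generated answers can dominate the bill even when the prompt is large.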

Get started with Grok 4 in Azure AI Foundry

Lead with insight, build with trust. Grok 4 unlocks frontier-level reasoning and real-time intelligence, but it is not a deploy-and-forget model. Pair Azure’s guardrails with your own domain checks, monitor outputs against evolving standards, and iterate responsibly while we continue to harden the model and disclose new safety scores. Please see the Azure AI Foundry Grok 4 model card for more information about model safety.

Head over to ai.azure.com, search for “Grok,” and start exploring what these powerful models can do.
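Once a deployment exists, calling it from code might look like the sketch below. It assumes the `azure-ai-inference` Python package and key-based authentication. The environment variable names (`AZURE_AI_ENDPOINT`, `AZURE_AI_KEY`, `GROK_DEPLOYMENT`), the default deployment name `grok-4`, and the system prompt are all placeholders rather than values confirmed by this post, so check your own deployment page for the real endpoint and name.

```python
import os


def build_messages(user_prompt: str) -> list[dict]:
    """Build a chat message list; the system prompt here is only an example."""
    return [
        {"role": "system", "content": "You are a careful assistant. Reason step by step."},
        {"role": "user", "content": user_prompt},
    ]


def ask_grok(user_prompt: str) -> str:
    """Send one prompt to a Grok deployment and return the reply text."""
    # Imported lazily so this module loads even if the SDK is not installed.
    from azure.ai.inference import ChatCompletionsClient
    from azure.core.credentials import AzureKeyCredential

    client = ChatCompletionsClient(
        endpoint=os.environ["AZURE_AI_ENDPOINT"],  # placeholder variable name
        credential=AzureKeyCredential(os.environ["AZURE_AI_KEY"]),
    )
    response = client.complete(
        messages=build_messages(user_prompt),
        model=os.environ.get("GROK_DEPLOYMENT", "grok-4"),  # assumed deployment name
    )
    return response.choices[0].message.content
```

With the environment variables set, `ask_grok("Explain how you would generate electricity on Mars from first principles.")` would send the first example prompt from this post. As noted above, pair the response with your own domain checks before relying on it.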