We are in the midst of an application development and IT system management revolution driven by the cloud. Fast, agile, inexpensive, and massively scalable infrastructure, offered fully self-service and with pay-as-you-go billing, is improving operational efficiency and enabling faster time to value across industries. The emergence of containers, with their fast startup, standardized application packaging, and isolation model, is further contributing to efficiency and agility.

However, many companies are finding that making their applications highly available, scalable and agile is still challenging. Competitive business pressures demand that applications continuously evolve, adding new features and functionality while remaining available 24×7. For example, it is no longer acceptable for a bank website to have a maintenance window, whereas even a few years ago it was the norm. Similarly, an e-commerce site that’s down for even a short time will drive customers to one of many competitors that can serve them at that moment. Failure to meet these demands can mean the difference between staying relevant and losing business.

These business realities are driving developers to adopt an application architecture model called “microservices,” a term popularized by James Lewis and Martin Fowler. In this post, I'll talk about how and why a microservices architecture can help with application development and lifecycle tasks, and describe the capabilities that platforms can provide to support those architectures. Then I’ll list some of the platforms that Azure supports and that developers commonly use as the foundation for their microservice-based applications. Finally, I’ll briefly describe our own microservice application platform, Service Fabric, which provides comprehensive support for microservices lifecycle management out of the box.

Monolithic application models

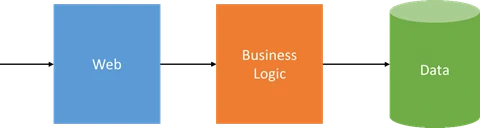

For decades, the cost, time, and complexity of provisioning new hardware, whether physical or virtual, have strongly influenced application development. These factors are more pronounced when applications are mission-critical, since high uptime requires highly available infrastructure, including expensive hardware such as SANs and hardware load-balancers. Because IT infrastructure was static, applications were written to be statically sized and designed for specific hardware, even when virtualized. Even when applications were decomposed to minimize overall hardware requirements and offer some level of agility and independent scaling, it was commonly into the classic three-tier model, with web, business logic and data tiers, as shown in Figure 1. However, each tier was still its own monolith, implementing diverse functions that were combined into a single package deployed onto hardware pre-scaled for peak loads. When load caused an application to outgrow its hardware, the answer was typically to “scale up,” or upgrade the application’s hardware to add capacity, in order to avoid datacenter reconfiguration and software re-architecture.

Figure 1. Three-Tier Monolithic Application

The monolithic application model was a natural result of the limitations of infrastructure agility, but it resulted in inefficiencies. Because static infrastructure and long development cycles meant that there was little advantage to decomposing applications beyond a few tiers, developers created tight coupling between unrelated application services within tiers. A change to any application service, even a small one, required its entire tier to be retested and redeployed. A simple update could have unforeseen effects on the rest of the tier, making changes risky and lengthening development cycles to allow for more rigorous testing. Their dependence on statically assigned resources and highly available hardware made applications susceptible to variations in load and hardware performance, which could push them outside their standard operating zone and cause their performance to degrade severely. An outright hardware failure could send the entire application into a tailspin.

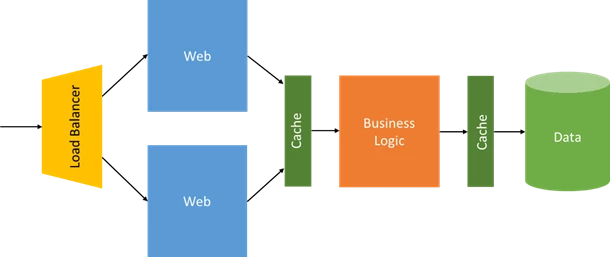

Finally, another challenge facing monolithic applications that took advantage of a tiered approach was delivering fast performance with data stored in the backend tier. A typical approach was to introduce intermediate caches to buffer against the inefficiencies caused by separating compute and data, but that raised costs by adding unused hardware resources, and it created additional development and update complexities.

Figure 2. Three-Tier Monolithic Application with Caches

Microservices architecture

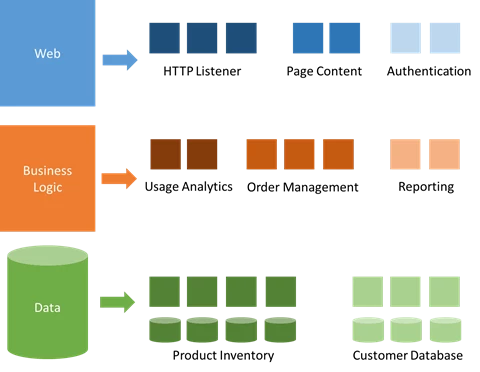

While there are simple and limited-scale applications for which a monolithic architecture still makes sense, microservices are a different approach to application development and deployment, one that is well suited to the agility, scale and reliability requirements of many modern cloud applications. A microservices application is decomposed into independent components, called “microservices,” that work in concert to deliver the application’s overall functionality. The term “microservice” emphasizes that applications should be composed of services small enough to truly reflect independent concerns, such that each microservice implements a single function. Moreover, each has a well-defined API contract, typically RESTful, through which other microservices communicate and share data with it. Microservices must also be able to version and update independently of each other. This loose coupling is what supports the rapid and reliable evolution of an application. Figure 3 shows how a monolithic application might be broken into different microservices.

Figure 3. Breaking the Monolith into Microservices

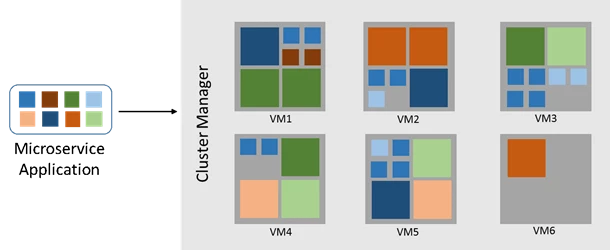

Microservice-based applications also enable the separation of the application from the underlying infrastructure on which it runs. Unlike monolithic applications, where developers declare resource requirements to IT, microservices declare their resource requirements to a distributed software system known as a “cluster manager.” The cluster manager “schedules,” or places, them onto machines assigned to the cluster in order to maximize the cluster’s overall resource utilization while honoring each microservice’s requirements for high availability and data replication, as shown in Figure 4. Because microservices are commonly packaged as containers and many usually fit within a single server or virtual machine, their deployment is fast and they can be densely packed to minimize the cluster’s scale requirements.

Figure 4. Cluster of Servers with Deployed Microservices
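To make the placement idea concrete, here is a minimal sketch of a greedy scheduler. It is not how any particular cluster manager works; the `Node`, `ServiceRequest` and `schedule` names are hypothetical, and the policy (spread replicas across distinct nodes, then pack onto the fullest node that still fits) is just one simple assumption.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_free: float   # free CPU cores
    mem_free: int     # free memory in MiB
    services: list = field(default_factory=list)


@dataclass
class ServiceRequest:
    name: str
    cpu: float        # cores required per replica
    mem: int          # MiB required per replica
    replicas: int = 1


def schedule(requests, nodes):
    """Greedy placement (illustrative only).

    Replicas of one service land on distinct nodes for availability.
    Among nodes that fit, the one with the least free CPU is chosen,
    which packs services densely.
    """
    placement = {}
    for req in requests:
        chosen = []
        for _ in range(req.replicas):
            candidates = [
                n for n in nodes
                if n.cpu_free >= req.cpu
                and n.mem_free >= req.mem
                and n.name not in chosen
            ]
            if not candidates:
                raise RuntimeError(f"no node can host another replica of {req.name}")
            best = min(candidates, key=lambda n: (n.cpu_free, n.name))
            best.cpu_free -= req.cpu
            best.mem_free -= req.mem
            best.services.append(req.name)
            chosen.append(best.name)
        placement[req.name] = chosen
    return placement
```

Real cluster managers weigh many more constraints (fault domains, affinity, data replication), but the shape is the same: services state what they need, and the scheduler decides where they run.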

With this model, microservice scale-out can be nearly instantaneous, allowing an application to adapt to changing loads. Their loose coupling also means that microservices can scale independently. For example, the public endpoint HTTP listener, one microservice in the Web-facing functionality of an application, might be the only microservice of an application that scales out to handle some additional incoming traffic.
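As a rough illustration of independent scaling, the sketch below computes an instance count per microservice from its own load. The function names, the per-instance capacity figures and the instance limits are all assumptions made for the example.

```python
import math


def desired_instances(requests_per_sec, capacity_per_instance,
                      min_instances=1, max_instances=20):
    """Instances needed to serve the load, clamped to the allowed range."""
    if capacity_per_instance <= 0:
        raise ValueError("capacity_per_instance must be positive")
    needed = math.ceil(requests_per_sec / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))


def scale_plan(load, capacity):
    """Compute a target instance count for each microservice independently."""
    return {svc: desired_instances(load[svc], capacity[svc]) for svc in load}
```

With a traffic spike hitting only the HTTP listener, `scale_plan({"http-listener": 950, "orders": 40}, {"http-listener": 200, "orders": 100})` asks for more listener instances while leaving the orders service at its minimum.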

The independent, distributed nature of microservice-based applications also enables rolling updates, where only a subset of the instances of a single microservice will update at any given time. If a problem is detected, a buggy update can be “rolled back,” or undone, before all instances update with the faulty code or configuration. If the update system is automated, integration with Continuous Integration (CI) and Continuous Delivery (CD) pipelines allows developers to safely and frequently evolve the application without fear of impacting availability.
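The rolling update and rollback flow can be sketched in a few lines. This is an in-memory illustration only: `rolling_update` and the `is_healthy` callback are hypothetical names, and a real platform would deploy code and probe live instances rather than mutate dictionaries.

```python
def rolling_update(instances, new_version, is_healthy, batch_size=1):
    """Upgrade instances in batches; roll everything back on a failed health check.

    instances:  list of dicts such as {"id": 0, "version": "1.0"}
    is_healthy: callback taking an instance and returning True or False
    Returns True if every instance ends up on new_version, False if rolled back.
    """
    previous = {}  # instance id -> version before the upgrade
    for start in range(0, len(instances), batch_size):
        batch = instances[start:start + batch_size]
        for inst in batch:
            previous[inst["id"]] = inst["version"]
            inst["version"] = new_version
        if not all(is_healthy(inst) for inst in batch):
            # Undo the upgrade on every instance touched so far.
            for inst in instances:
                if inst["id"] in previous:
                    inst["version"] = previous[inst["id"]]
            return False
    return True
```

Because only one batch is updated at a time, the instances that have not yet been touched keep serving traffic on the old version while the health check runs.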

While the classic model for application scalability is to have a load-balanced, stateless tier with a shared external datastore or database to store persistent state, stateful microservices can achieve higher performance, lower latency and massive scale while maintaining developer agility for service updates. Stateful microservices manage persistent data, usually storing it locally on the servers on which they are placed to avoid the overhead of network access and the complexity of cross-service operations. This enables the fastest possible processing and can eliminate the need for caches. Further, in order to manage data sizes and transfer throughputs beyond what a single server can support, scalable stateful microservices partition data among their instances and implement schema versioning so that clients see a consistent version even during updates, regardless of which microservice instance they communicate with.
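The partitioning part of this can be illustrated with a small hash-partitioned store. This is a sketch under simplifying assumptions: `partition_for` and `PartitionedStore` are hypothetical names, each partition is an in-process dictionary standing in for a service instance, and replication and schema versioning are left out.

```python
import hashlib


def partition_for(key, partition_count):
    """Map a key to a partition with a stable hash (same key, same partition)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


class PartitionedStore:
    """Each partition stands in for the local state of one service instance."""

    def __init__(self, partition_count):
        self.partitions = [dict() for _ in range(partition_count)]

    def put(self, key, value):
        index = partition_for(key, len(self.partitions))
        self.partitions[index][key] = value

    def get(self, key):
        index = partition_for(key, len(self.partitions))
        return self.partitions[index].get(key)
```

A client (or the platform's routing layer) applies the same hash to find the instance that owns a key, so reads and writes for that key always reach the instance holding its data locally.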

Microservice application platforms

Our experience internally running cloud-scale Microsoft services like Bing, Cortana and Intune has given us a deep firsthand understanding of the complexities associated with designing, developing, and deploying applications at cloud scale. Frequent updates to large-scale, always-on applications are a challenge, no matter how well designed the application. Just dropping microservices into virtual machines or even containers does not enable the full potential of the microservices approach; that requires a microservices application platform with DevOps-focused tooling.

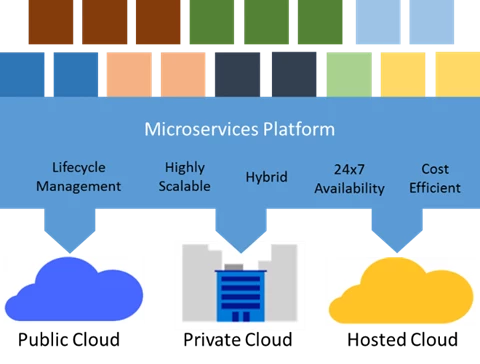

A full-featured microservices application platform provides all the previously stated microservices architecture benefits of cost efficiency, scalability and 24×7 availability. It also takes these a step further. As mentioned earlier, it should choreograph safe and reliable upgrades using an extensible health model and automatic rollback. It also helps microservices to discover each other by providing a naming service, and monitors and maintains their health. For example, when scaling or healing, a microservices platform communicates updated placement information to other microservices via the naming service so that they can quickly establish or re-establish communication.
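A naming service can be pictured as a registry that maps service names to current addresses and notifies interested parties when those addresses change. The sketch below is an in-memory illustration; the `NamingService` class and its methods are hypothetical and do not correspond to any specific platform's API.

```python
class NamingService:
    """Toy service registry: name -> set of addresses, with change notifications."""

    def __init__(self):
        self._endpoints = {}  # service name -> set of "host:port" strings
        self._watchers = {}   # service name -> list of callbacks

    def register(self, service, address):
        self._endpoints.setdefault(service, set()).add(address)
        self._notify(service)

    def deregister(self, service, address):
        self._endpoints.get(service, set()).discard(address)
        self._notify(service)

    def resolve(self, service):
        """Return the current addresses of a service, sorted for stable output."""
        return sorted(self._endpoints.get(service, set()))

    def watch(self, service, callback):
        """Call callback(addresses) whenever the service's placement changes."""
        self._watchers.setdefault(service, []).append(callback)

    def _notify(self, service):
        for callback in self._watchers.get(service, []):
            callback(self.resolve(service))
```

When the platform scales out or moves an instance, it registers the new address and deregisters the old one, and every watcher receives the updated list without having to poll.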

To keep microservices healthy, the platform automatically moves instances to healthy VMs or servers when the software or hardware on which they are running fails or must be restarted for upgrades. In addition, a microservices platform must be deployable in private and public clouds. This is necessary to support hybrid scenarios where workloads burst from a private cloud to a public one, and to enable public cloud dev/test with production deployment in a private cloud. Supporting multiple clouds also addresses concerns about vendor lock-in when choosing an application platform, separating the platform from the infrastructure.

Figure 5. Microservices Platform
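The healing behavior described above, moving instances off failed machines, might look roughly like this. It is a deliberately simplified sketch: the `heal` function is hypothetical, placement is a plain dictionary, and the only policy is to send each displaced instance to the least-loaded healthy node.

```python
def heal(placement, healthy_nodes):
    """Return a new placement with instances moved off unhealthy nodes.

    placement:     dict of instance id -> node name
    healthy_nodes: iterable of node names currently passing health checks
    """
    healthy = set(healthy_nodes)
    if not healthy:
        raise RuntimeError("no healthy nodes available")

    load = {node: 0 for node in sorted(healthy)}
    new_placement = {}

    # Instances already on healthy nodes stay where they are.
    for instance, node in placement.items():
        if node in healthy:
            new_placement[instance] = node
            load[node] += 1

    # Displaced instances go to the healthy node with the fewest instances.
    for instance, node in placement.items():
        if node not in healthy:
            target = min(load, key=lambda n: (load[n], n))
            new_placement[instance] = target
            load[target] += 1

    return new_placement
```

A production platform would also respect resource limits and replica spreading when choosing the target, and would publish the new placement through its naming service.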

This section briefly describes several popular platforms on which developers are building and deploying microservices applications today. All of the below can run on Azure infrastructure, giving you choice based upon your requirements.

Docker Swarm and Docker Compose

The standard packaging format and resource isolation of Docker containers have made them a natural fit with microservices architectures. Docker Compose defines an application model that supports multiple Docker-packaged microservices. Docker Swarm serves as a cluster manager across a set of infrastructure and exposes the same protocol that a single-node Docker installation does, so it works with the broad Docker tooling ecosystem. The Azure Container Service supports both Docker Swarm and Docker Compose. More details on running this on Azure can be found here.

Kubernetes

Kubernetes is an open-source system for automating deployment, operations, and scaling of containerized applications. It groups containers that make up an application into logical units for easy management and discovery. Originally developed by Google, it builds on the company's experience running large-scale services such as Search and Gmail. Even some traditional PaaS solutions, like Apprenda, are merging with Kubernetes. More details on running Kubernetes on Azure can be found here.

Mesosphere DCOS, with Apache Mesos and Marathon

Microsoft and Mesosphere have partnered to bring open source components of the Mesosphere Datacenter Operating System (DCOS), including Apache Mesos and Marathon, to Azure. The Mesosphere DCOS, powered by Mesos, is a scalable cluster manager that includes Mesosphere’s Marathon, a production-grade container orchestration tool. It is available as part of the Azure Container Service. There is also an enterprise version available from Mesosphere that runs on Azure. The Mesosphere DCOS provides microservices platform capabilities including service discovery, load balancing, health checks, placement constraints, and metrics aggregation. Finally, Mesosphere offers a library of certified services that provide additional capabilities, like Kafka, Chronos, Cassandra, Spark and others, which can all be installed with a single command. More details on running this on Azure can be found here.

OpenShift

OpenShift by Red Hat is a platform-as-a-service offering that uses Docker container-based packaging for deployment and Kubernetes for container orchestration and compute management, enabling users to run containerized JBoss Middleware, multiple programming languages, databases and other application runtimes. OpenShift Enterprise 3 offers a DevOps experience that enables developers to automate the application build and deployment process within a secure, enterprise-grade application infrastructure. With recent support for Red Hat Enterprise Linux images in Azure, OpenShift is supported in Azure. Look for some documentation on this topic shortly.

Pivotal Cloud Foundry

Pivotal Cloud Foundry enables microservice architectures by combining workflow and container scheduling from Cloud Foundry with integrations for microservice patterns like service discovery, client-side load balancing, circuit breakers and distributed tracing, utilizing Spring Cloud and NetflixOSS. Pivotal Cloud Foundry supports ongoing microservice operations via deployment and service management capabilities such as autoscaling, blue-green updates, health monitoring, application metrics, streaming logs and more. Learn more about running Pivotal Cloud Foundry on Azure here.

Service Fabric

To support our own internal evolution from on-premises to cloud and from monolithic to microservice-based applications, we developed Service Fabric over ten years ago. Service Fabric powers many of our hyper-scale cloud services, including SQL DB, DocDB, Intune, Cortana and Skype for Business, as well as many internal Azure infrastructure services. We’ve taken the exact same technology and released Service Fabric as a service on Azure, and the standalone SDK will enable the deployment of Service Fabric applications in on-premises clusters and on other clouds. In its initial public release, Service Fabric runs on Windows with any .NET language, and Linux and Java support are under development. Service Fabric has built-in support for lifecycle management, hybrid deployments, and 24×7 availability, with integrated development experiences using Visual Studio. The platform offers extensible health models for both the infrastructure and microservices to enable automated health-based upgrades and automatic rollback, simplifying DevOps. Furthermore, Service Fabric supports both stateless and stateful microservices, with leader election to support data consistency and a state replication framework that supports transactions for stateful data guarantees. More details on running this on Azure can be found here.

Conclusion

The computing world has changed forever with the advent of the cloud. The cloud gives developers access to infrastructure instantly, cheaply, and at nearly infinite scale. The agility of the cloud, together with modern business demands for high availability and constant evolution, has strained monolithic architectures and given rise to microservices-based applications. With a comprehensive microservices platform, developers can create applications that support massive scale with high performance, high availability, cost effectiveness and independent lifecycle management, across public and private clouds. Microservices are an application revolution powered by the cloud.

Check out the video below, where I get to talk microservices with Channel9’s Seth Juarez.