This post was co-authored by Mohit Chand, Principal SWE Lead, Microsoft IT.

We are going a step beyond the traditional methods of developing a data warehouse by adopting CI practices, which are more prevalent for API (.NET) based applications. It has been long pending for data warehouse teams to catch up on the modern software engineering practices. With the emergence of Visual Studio Online (VSTS) & SQL Server Data Tools (SSDT), spinning environments on the fly, deploying code across environments with maximum automation has become easy. We adopted these modern practices to boost engineering productivity in our Business Insights (DW) project. With the help of SSDT and VSTS, we were able to align DW deployment perfectly with the Agile releases (2 weeks sprint). In this blog I will elaborate a detailed approach on how to implement CI for your Data Warehouse. I will explain the life cycle of a business user story starting from code branching, pull-request-triggered-build, Azure resources and environment provisioning, schema deployment, seed data generation, daily-integration releases with automated tests, and approval based workflows to promote new code to higher environments.

DevOps

Why DevOps? In traditional development and operations model there is always a possibility of confusion and debate when the software doesn’t function as expected. Dev would claim the software working just fine in their respective environment and defend that as an Ops problem. Ops would indicate that Devs didn’t provide a production ready software, and it’s a Dev problem. How do we solve this? Wouldn’t it be a good idea for a single team takes care of development, testing, and operations?

We work closely with business and other stake holders to efficiently deliver better and faster results to customers. DevOps has enabled us to deliver faster with a better a connection with customers while simultaneously reducing our technical debt and risks.

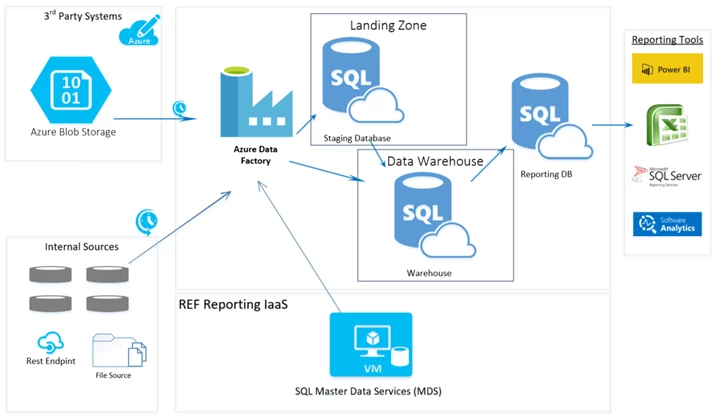

DW Architecture

This Data Warehouse uses Azure technologies. Data arrives to the landing zone or staging area from different sources through Azure Data Factory. We use Azure Data Factory (ADF) jobs to massage and transform data into the warehouse. Once ready, the data is available to customers in the form of dimension and fact tables.

Tools/Technologies

This Modern Data Warehouse primarily uses Microsoft technologies to deliver the solution to customers –

- SQL Azure (PaaS)

- Azure Data Factory

- Azure Blob Storage

- SQL MDS 2016

- Visual Studio Team Services (VSTS)

-

- Agile and Kanban board

- Code branching (Git)

- Gated check-ins

- Automated Tests

- Build

- Release

-

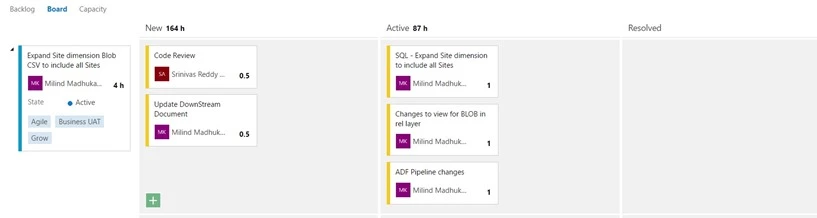

Plan

In agile scrum, user story is the unit of implementation. Engineers pick up and deliver the user stories in any given sprint.

Code

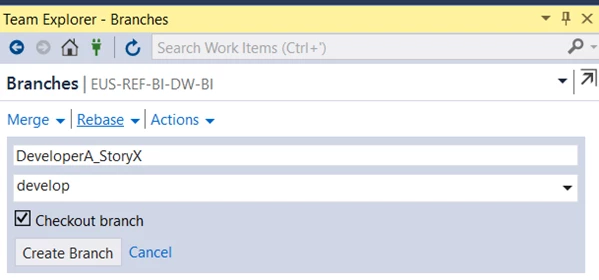

Branching Strategy

With Agile code branching plays a critical role. There are various ways to do it including sprint branching, feature branching, story/bug branching, etc. In our case we adopted user story level branching. A contributor creates a branch from the “develop” branch against each story he/she picks up. It is the contributor’s responsibility to maintain this isolated branch and merge it by creating a “Pull Request” with the develop branch once the story is complete or ready for code review. A contributor is not allowed to merge his/her code with the main stream branch directly. It requires a minimum two code reviews to approve the code.

Story based branching enables developers to merge the code frequently with the main stream and avoid working a long time on the same branch. This significantly reduces the code integration issues. Another benefit is that developers can work more efficiently by having other developer’s code available more frequently. Code dependency wait time gets reduced, hence less blockers for developers.

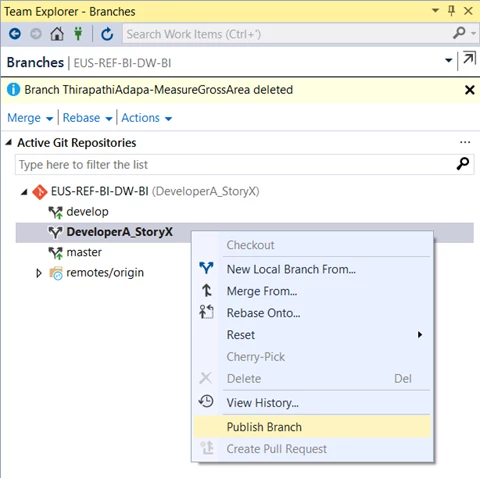

Using VSTS, contributor creates a new branch:

Nomenclature followed is _

After creating the branch, the contributor publishes it to make it visible to everyone else on the team.

Once the branching is set the contributor is all set to start with the story and starts writing code to implement the functionality.

Code Review and Code Merge

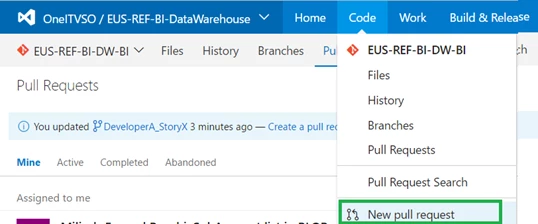

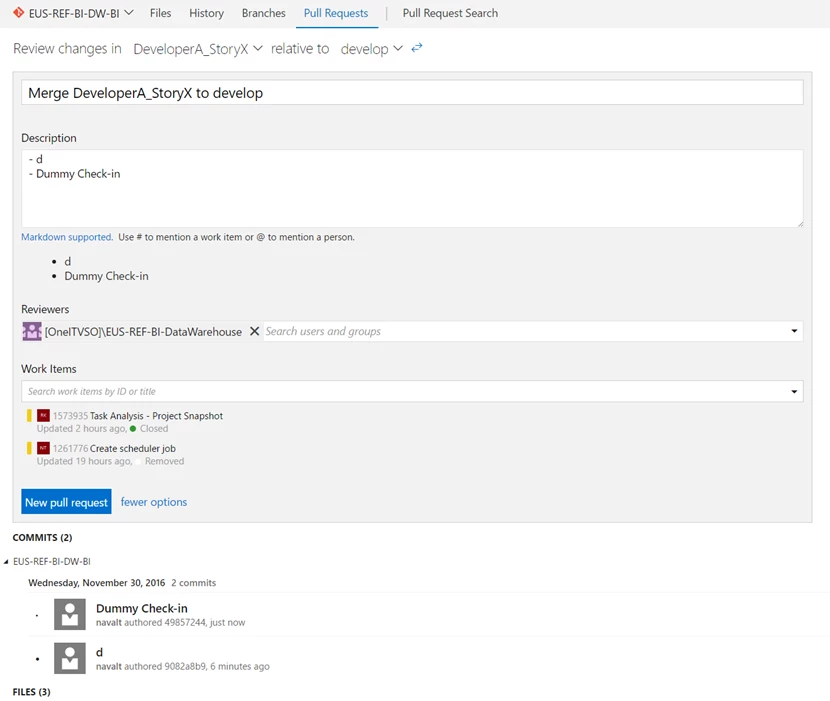

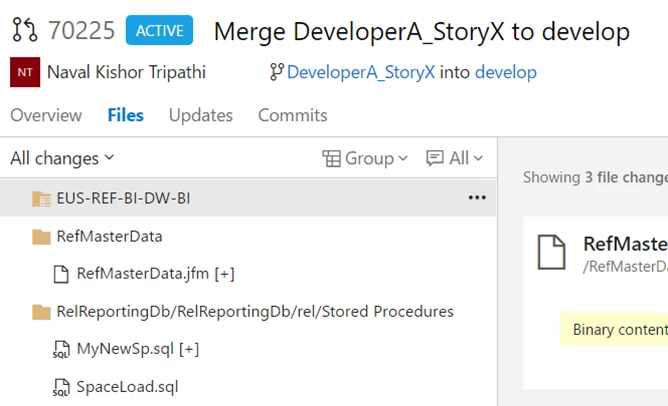

Once the code is complete, the developer checks-in the code and creates a pull request using VSTS portal. To ensure a higher level of code quality, it’s imperative to have a gated check-in process in place. Each developer has to ensure the build is not broken when they check-in the code. The code needs to be reviewed and approved by at least two peers before it gets merged with the main stream. Without two code review approvals, it’s not possible to merge the code with the main stream code.

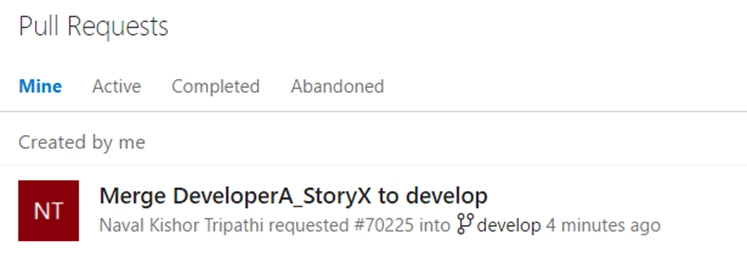

A pull request is created by the developer and submitted with appropriate comments and work items.

Build

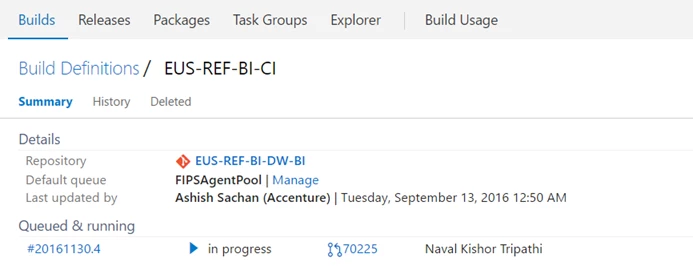

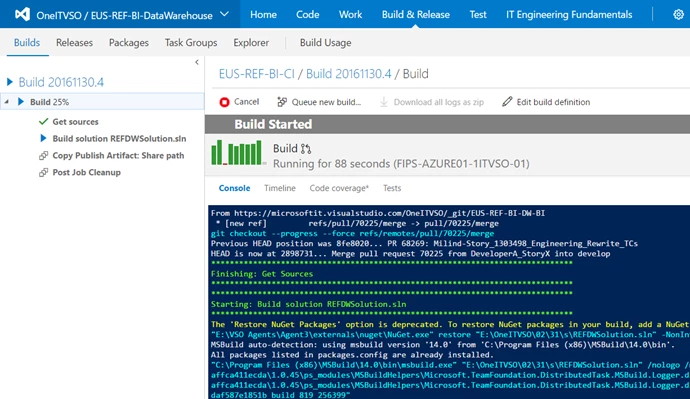

As soon as the “Pull Request” is created by the contributor, the CI build automatically gets fired.

An email notification is received by all the reviewers, which updates them about the new “Pull Request” created by a contributor. The reviewers are now good to starts the code reviews.

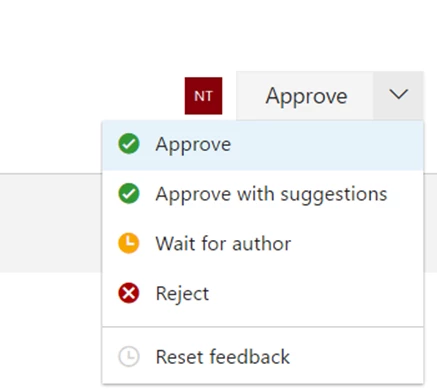

Depending upon the quality of the code, the reviewer “Approves”, raise questions, or “Reject” the code.

Once all reviewers are done with code reviews, the lead developer merges the code with the main stream.

Test (Automation)

Ensuring high code quality across various environments could be challenging in a DW project because data might vary from environment to environment. We ensure that every new piece of code we write has automated test cases before creating a pull request. This not only prevented bug leaks to production, but it also ensured a higher quality of deliverables.

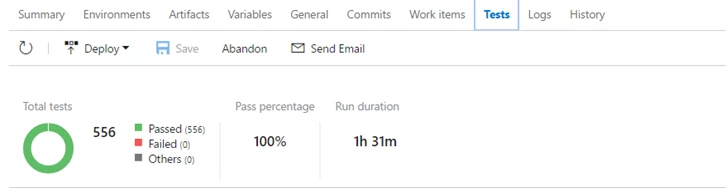

The diagram below depicts the overall test case execution results. As part of the deployment, we execute all of our test cases to ensure the integrity and quality of the end product.

Release & Deploy

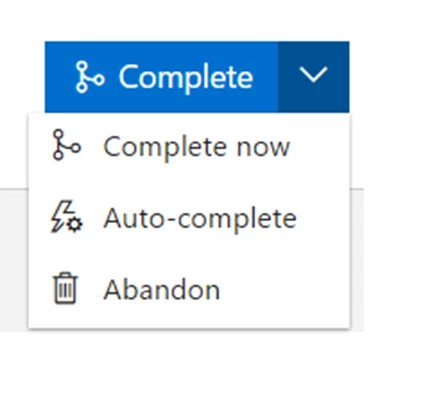

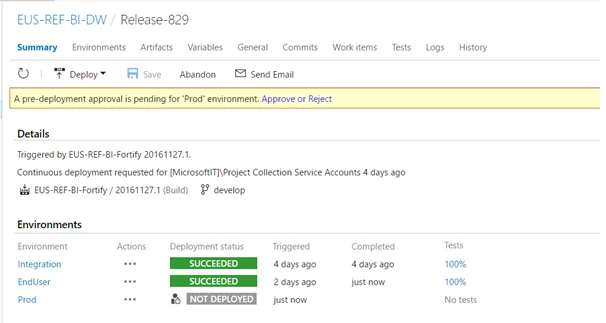

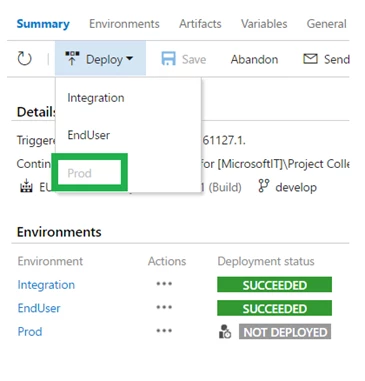

Once the code is successfully merged with main stream code, a new build fires automatically. The Integration environment gets deployed once a day with the latest code.

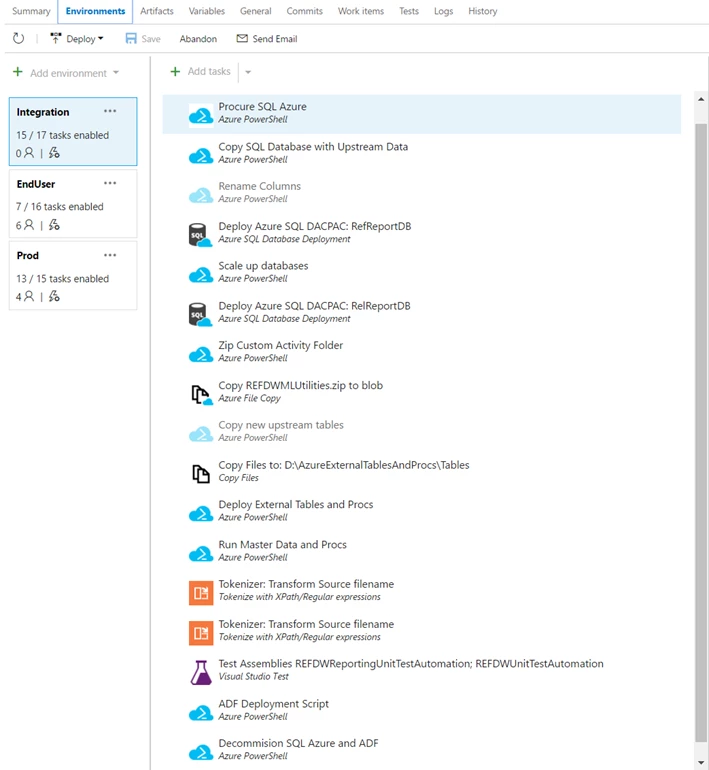

The diagram below depicts three environments we manage for the Data Warehouse. We have “Integration”, “End User”, and “Production” environments. The integration environment is a continuous integration and deployment environment, which is provisioned and de-provisioned dynamically and managed as “Infra as a Code”. It is a scheduled process which run the following steps in sequence to “integrate” the check-ins happening daily.

- Build the new bits by getting the latest from the develop branch (includes integrated code scanning)

- Create a new Azure Resource Group and procure the SQL Instance

- Copy the “Seed Data” to the newly created SQL instance

- Execute any schema renaming

- Deploy the DACPAC to deploy new schema changes

- Scale-up the databases to execute the steps faster

- Copy code bits to build server

- Deploy additional SQL entities

- Run data sync jobs

- Execute test assemblies

- Deploy Azure Data Factories

- Decommission environment

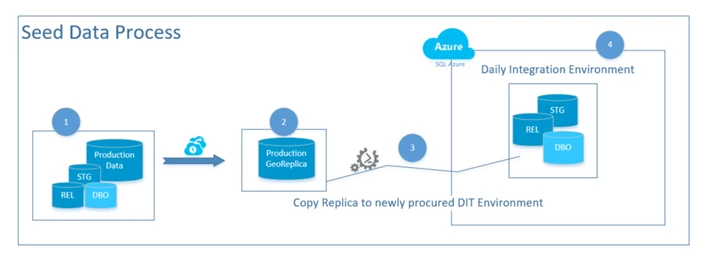

Seed data to enable automated testing

Automated testing in a DW depends a lot on the availability of accurate data. Without data there will be numerous scenarios which cannot be tested. To solve this problem we use production copy of data as “seed data” during deployment. The diagram below depicts how we populate the seed data in our Daily Integration Environment (DIT). In cases where the data is pretty huge, a miniature DB, which contains subset of production data, can be used instead of copying the entire replica.

Step-1: Represents the production data with multiple schemas which we use to segregate data in our DW environments (e.g. staging, transformed data, etc.)

Step-2: Represents that data gets copied to Azure Geo Replica (Disaster Recovery copy).

Step-3: During release deployment we copy the geo replica to the newly procured DIT SQL server instance.

Step-4: Represents the availability of production equivalent copy of data. In addition, the DACPACK deployment happens to add the newly added schema and later our test automation suites runs to test the quality of our end product.

Deploying release to higher environments

Promoting the release from one environment to another is setup through an approval workflow and it is not allowed to deploy directly. In this scenario it is not possible to deploy directly to Production without the approval from pre-assigned stakeholders.

The approval workflow depicts the environment promotion. Once the required approvers approve the workflow the release gets promoted to next environment automatically.

Monitor

As DevOps the same team monitors the pre-prod and prod environments for any failures. We adopted the DRI (directly responsible individual) model and the DRI person proactively monitors and checks the system’s health and all the notifications. Issues, if any, are fixed on priority to ensure continued availability of application. We use out of the box ADF monitoring and notification along with a couple of custom monitoring tools. We also have multiple data quality checks implemented as automated reports that run daily in the production environment and report out data anomalies that can either be fixed as a bug in our processes or be traced back to the source systems quickly for getting fixed there.

It’s true that setting up CI for a data warehouse isn’t that simple, however, it’s worth every penny. We did face challenges of test case failures when we add new code during the sprints, however, the team has learned from those instances and now we ensure to update the existing test cases when new code is being added. We are continuously adding functional, build, and environment verification test cases to constantly increase the quality of the product. CI has enabled us to be truly agile and be super confident in our end product. We are able to prevent common bug leaks to production by having an automated test suite. We were able to eliminate the need of the test environment and eyeing to deploy directly in production in coming quarters. We strongly believe it’s possible!