This post is part 1 of a two-part series about how organizations use Azure Cosmos DB to meet real-world needs and the difference it’s making to them. In part 1, we explore the challenges that led service developers for Minecraft Earth to choose Azure Cosmos DB and how they’re using it to capture almost every action taken by every player around the globe—with ultra-low latency. In part 2, we examine the solution’s workload and how Minecraft Earth service developers have benefited from building it on Azure Cosmos DB.

Extending the world of Minecraft into our real world

You’ve probably heard of the game Minecraft, even if you haven’t played it yourself. It’s the best-selling video game of all time, having sold more than 176 million copies since 2011. Today, Minecraft has more than 112 million monthly players, who can discover and collect raw materials, craft tools, and build structures or earthworks in the game’s immersive, procedurally generated 3D world. Depending on game mode, players can also fight computer-controlled foes and cooperate with—or compete against—other players.

In May 2019, Microsoft announced the upcoming release of Minecraft Earth, which began its worldwide rollout in December 2019. Unlike preceding games in the Minecraft franchise, Minecraft Earth takes things to an entirely new level by enabling players to experience the world of Minecraft within our real world through the power of augmented reality (AR).

For Minecraft Earth players, the experience is immediately familiar—albeit deeply integrated with the world around them. For developers on the Minecraft team at Microsoft, however, the delivery of Minecraft Earth—especially the authoritative backend services required to support the game—would require building something entirely new.

Nathan Sosnovske, a Senior Software Engineer on the Minecraft Earth services development team, explains:

“With vanilla Minecraft, while you could host your own server, there was no centralized service authority. Minecraft Earth is based on a centralized, authoritative service—the first ‘heavy’ service we’ve ever had to build for the Minecraft franchise.”

In this case study, we’ll look at some of the challenges that Minecraft Earth service developers faced in delivering what was required of them—and how they used Azure Cosmos DB to meet those needs.

The technical challenge: Avoiding in-game lag

Within the Minecraft Earth client, which runs on iOS-based and Android-based AR-capable devices, almost every action a player takes results in a write to the core Minecraft Earth service. Each write is a REST POST that must be immediately accepted and acknowledged to avoid any noticeable in-game lag.

“From a services perspective, Minecraft Earth requires low-latency writes and medium-latency reads,” explains Sosnovske. “Writes need to be fast because the client requires confirmation on each one, such as might be needed for the client to render—for example, when a player taps on a resource to see what’s in it, we don’t want the visuals to hang while the corresponding REST request is processed. Medium-latency reads are acceptable because we can use client-side simulation until the backing model behind the service can be updated for reading.”

To complicate the challenge, Minecraft Earth service developers needed to ensure low-latency writes regardless of a player’s location. This required running copies of the service in multiple locations within each geography where Minecraft Earth would be offered, along with built-in intelligence to route the Minecraft Earth client to the nearest location where the service is deployed.

“Typical network latency between the east and west coasts of the US is 70 to 80 milliseconds,” says Sosnovske. “If a player in New York had to rely on a service running in San Francisco, or vice versa, the in-game lag would be unacceptable. At the same time, the game is called Minecraft Earth—meaning we need to enable players in San Francisco and New York to share the same in-game experience. To deliver all this, we need to replicate the service—and its data—in multiple, geographically distributed datacenters within each geography.”

The solution: An event sourcing pattern based on Azure Cosmos DB

To satisfy their technical requirements, Minecraft Earth service developers implemented an event sourcing pattern based on Azure Cosmos DB.

“We originally considered using Azure Table storage to store our append-only event log, but its lack of any SLAs for read and write latencies made that unfeasible,” says Sosnovske. “Ultimately, we chose Azure Cosmos DB because it provides 10 millisecond SLAs for both reads and writes, along with the global distribution and multi-master capabilities needed to replicate the service in multiple locations within each geography.”

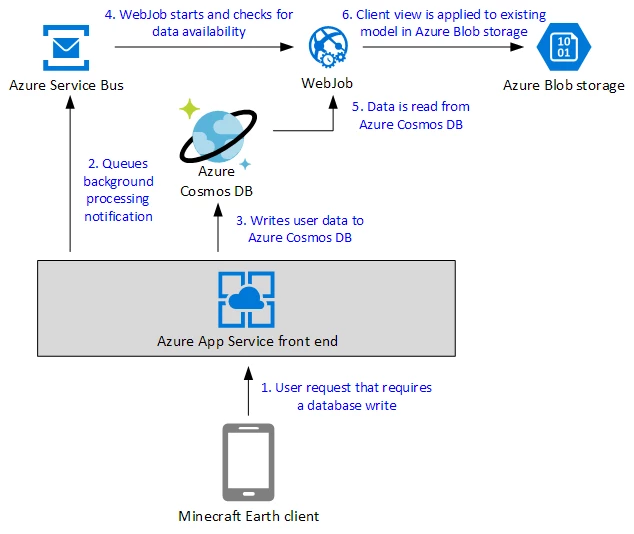

With an event sourcing pattern, instead of just storing the current state of the data, the Minecraft Earth service uses an append-only data store that’s based on Azure Cosmos DB to record the full series of actions taken on the data—in this case, mapping to each in-game action taken by the player. After immediate acknowledgement of a successful write is returned to the client, queues that subscribe to the append-only event store handle postprocessing and asynchronously apply the collected events to a domain state maintained in Azure Blob storage. To optimize things further, Minecraft Earth developers combined the event sourcing pattern with domain-driven design, in which each app domain—such as inventory items, character profiles, or achievements—has its own event stream.

“We modeled our data as streams of events that are stored in an append-only log and mutate an in-memory model state, which is used to drive various client views,” says Sosnovske. “That cached state is maintained in Azure Blob storage, which is fast enough for reads and helps to keep our request unit costs for Azure Cosmos DB to a minimum. In many ways, what we’ve done with Azure Cosmos DB is like building a write cache that’s really, really resilient.”
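To make the pattern concrete, here is a minimal sketch in Python. It is an in-memory illustration only, not the Minecraft Earth implementation: the `EventStore` and `InventoryProjection` classes, the event kinds, and the payload fields are all hypothetical names chosen for this example. A dictionary of lists stands in for the append-only log in Azure Cosmos DB, and a plain object stands in for the cached domain state in Azure Blob storage.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    user_id: str
    domain: str     # e.g. "inventory" or "achievements"
    sequence: int   # position within this user's stream for the domain
    kind: str       # hypothetical event type, e.g. "item_added"
    payload: dict


class EventStore:
    """In-memory stand-in for the append-only event log."""

    def __init__(self):
        self._streams = defaultdict(list)  # (user_id, domain) -> [Event]
        self._subscribers = []

    def subscribe(self, callback):
        self._subscribers.append(callback)

    def append(self, user_id, domain, kind, payload):
        stream = self._streams[(user_id, domain)]
        event = Event(user_id, domain, len(stream) + 1, kind, payload)
        stream.append(event)          # events are only ever added, never edited
        for notify in self._subscribers:
            notify(event)             # hand off; state is updated later
        return event.sequence         # the write is acknowledged right away

    def read(self, user_id, domain):
        return list(self._streams[(user_id, domain)])


class InventoryProjection:
    """Stand-in for the cached domain state, updated asynchronously."""

    def __init__(self):
        self.pending = deque()
        self.state = defaultdict(lambda: defaultdict(int))  # user -> item -> count

    def enqueue(self, event):
        if event.domain == "inventory":   # each domain has its own stream
            self.pending.append(event)

    def drain(self):
        # Apply queued events to the state, in the order they were written.
        while self.pending:
            event = self.pending.popleft()
            sign = 1 if event.kind == "item_added" else -1
            item = event.payload["item"]
            self.state[event.user_id][item] += sign * event.payload["count"]


store = EventStore()
inventory = InventoryProjection()
store.subscribe(inventory.enqueue)

store.append("player-1", "inventory", "item_added", {"item": "oak_log", "count": 3})
store.append("player-1", "inventory", "item_removed", {"item": "oak_log", "count": 1})
inventory.drain()   # in a real service this would run in a background worker
```

The point of the split is that `append` returns as soon as the event is recorded, while `drain` can lag behind without the client waiting on it. That mirrors the low-latency writes and medium-latency reads described earlier.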

The diagram earlier in this section shows how the event sourcing pattern based on Azure Cosmos DB works.

Putting Azure Cosmos DB in place

In putting Azure Cosmos DB to use, developers had to make a few design decisions:

Azure Cosmos DB API. Developers chose to use the Azure Cosmos DB Core (SQL) API because it offered the best performance and the greatest ease of use, along with other needed capabilities.

“We were building a system from scratch, so there was no need for a compatibility layer to help us migrate existing code,” Sosnovske explains. “In addition, some Azure Cosmos DB features that we depend on—such as TransactionalBatch—are only supported with the Core (SQL) API. As an added advantage, the Core (SQL) API was really intuitive, as our team was already familiar with SQL in general.”

Read “Introducing TransactionalBatch in the .NET SDK” to learn more.

Partition key. Developers ultimately decided to logically partition the data within Azure Cosmos DB based on users.

“We originally partitioned data on users and domains—again, examples being inventory items or achievements—but found that this breakdown was too granular and prevented us from using database transactions within Azure Cosmos DB to their full potential,” says Sosnovske.
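One likely reason the finer-grained key got in the way is that a transactional batch in Azure Cosmos DB can only touch items that share a single logical partition key. The sketch below illustrates that constraint with an in-memory stand-in. The `PartitionedContainer` class, the document fields, and the IDs are hypothetical and exist only for this example. It is not the team's code.

```python
class PartitionedContainer:
    """In-memory stand-in: a batch is all-or-nothing and limited to one partition."""

    def __init__(self, partition_key_of):
        self._partition_key_of = partition_key_of  # function: document -> key
        self.items = {}

    def execute_batch(self, documents):
        keys = {self._partition_key_of(doc) for doc in documents}
        if len(keys) != 1:
            raise ValueError("a transactional batch must target one logical partition")
        staged = dict(self.items)     # work on a copy so a failure changes nothing
        for doc in documents:
            staged[doc["id"]] = doc
        self.items = staged


# One player action that affects two domains at once (hypothetical documents).
documents = [
    {"id": "inv-7", "userId": "player-1", "domain": "inventory"},
    {"id": "ach-3", "userId": "player-1", "domain": "achievements"},
]

by_user = PartitionedContainer(lambda doc: doc["userId"])
by_user_and_domain = PartitionedContainer(lambda doc: (doc["userId"], doc["domain"]))

by_user.execute_batch(documents)      # both documents share the key "player-1"

try:
    by_user_and_domain.execute_batch(documents)
    cross_domain_batch_allowed = True
except ValueError:
    cross_domain_batch_allowed = False  # two different keys, so the batch is rejected
```

With the user as the partition key, changes to a player's inventory and achievements can be committed together. With a key that combines user and domain, the same batch spans two partitions and cannot run as one transaction.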

Consistency level. Of the five consistency levels supported by Azure Cosmos DB, developers chose session consistency, which they combined with heavy etag checking to ensure that data is properly written.

“This works for us because of how we store data, which is modeled as an append-only log with a head document that serves as a pointer to the tail of the log,” explains Sosnovske. “Writing to the database involves reading the head document and its etag, deriving the N+1 log ID, and then constructing a transactional batch operation that overwrites the head pointer using the previously read etag and creates a new document for the log entry. In the unlikely case that the log has already been written, the etag check and the attempt to create a document that already exists will result in a failed transaction. This happens regardless of whether another request ‘beats’ us to writing or our request reads slightly out-of-date data.”
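The write path Sosnovske describes can be sketched as follows. This is a simplified in-memory model under stated assumptions, not the actual service code and not the Azure Cosmos DB SDK: `UserPartition`, `prepare_append`, `commit_append`, the `head` and `log-N` document IDs, and the `tail` field are all hypothetical names. The two checks inside `replace_head_and_create_entry` play the role of the etag precondition and the create-conflict check in a real transactional batch.

```python
import itertools


class BatchFailed(Exception):
    """Raised when any operation in the batch fails; nothing is applied."""


class UserPartition:
    """In-memory stand-in for one user's logical partition."""

    def __init__(self):
        self._etags = itertools.count(1)
        self.docs = {"head": (self._next_etag(), {"tail": 0})}  # id -> (etag, body)

    def _next_etag(self):
        return f"etag-{next(self._etags)}"

    def read(self, doc_id):
        return self.docs[doc_id]

    def replace_head_and_create_entry(self, if_match, new_tail, entry):
        current_etag, _ = self.docs["head"]
        entry_id = f"log-{new_tail}"
        # Validate both operations before applying either one.
        if current_etag != if_match:
            raise BatchFailed("head etag changed since it was read")
        if entry_id in self.docs:
            raise BatchFailed(f"{entry_id} already exists")
        self.docs["head"] = (self._next_etag(), {"tail": new_tail})
        self.docs[entry_id] = (self._next_etag(), entry)


def prepare_append(partition, entry):
    # Read the head pointer and its etag, then derive the N+1 log ID.
    etag, head = partition.read("head")
    return etag, head["tail"] + 1, entry


def commit_append(partition, prepared):
    etag, new_tail, entry = prepared
    partition.replace_head_and_create_entry(etag, new_tail, entry)
    return new_tail


partition = UserPartition()

# Two requests read the same head before either one writes.
first = prepare_append(partition, {"kind": "item_added"})
second = prepare_append(partition, {"kind": "item_removed"})

commit_append(partition, first)           # wins the race and becomes log-1
try:
    commit_append(partition, second)      # stale etag, so the whole batch fails
except BatchFailed:
    # Re-read the head and try again with fresh data.
    commit_append(partition, prepare_append(partition, {"kind": "item_removed"}))
```

Because the losing request fails as a whole, it never leaves a half-written log entry behind. It can simply re-read the head and retry, which is why session consistency is sufficient here even when a read is slightly stale.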

In part 2 of this series, we examine the solution’s current workload and how Minecraft Earth service developers have benefited from building it on Azure Cosmos DB.

Get started with Azure Cosmos DB

- Visit Azure Cosmos DB.

- Learn more about Azure for Gaming.