Azure Media Services is pleased to announce the preview of a new platform capability called Live Video Analytics (LVA). LVA provides a platform for building hybrid applications with video analytics capabilities. It lets you capture, record, and analyze live video and publish the results (video, video analytics, or both) to Azure services in the cloud, on the edge, or both.

With this announcement, the LVA platform is now available as an Azure IoT Edge module via the Azure Marketplace. The module, referred to as “Live Video Analytics on IoT Edge,” is built to run on a Linux x86-64 edge device at your business location. This enables you to build IoT solutions with video analytics capabilities without worrying about the complexity of designing, building, and operating a live video pipeline.

LVA is designed to be a “pluggable” platform, so you can integrate the video analysis modules of your choice: custom edge modules you build with open source machine learning models, custom models trained on your own data (using Azure Machine Learning or an equivalent service), or Microsoft Cognitive Services containers. You can combine LVA with other Azure edge modules, such as Stream Analytics on IoT Edge, to analyze video analytics data in real time and drive business actions (for example, generating an alert when a certain type of object is detected with a probability above a threshold).
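The alerting rule in the parenthetical above boils down to a filter over detection results. The following is a minimal Python sketch of that logic only; it is not Stream Analytics query syntax, and the `Detection` record with its `label` and `confidence` fields is a hypothetical stand-in for whatever schema your inference module actually emits.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Detection:
    """Hypothetical detection record: object label plus model confidence (0.0-1.0)."""
    label: str
    confidence: float


def alerts(detections: Iterable[Detection], target_label: str, threshold: float) -> List[Detection]:
    """Return detections of the target label whose confidence is strictly above the threshold."""
    return [d for d in detections if d.label == target_label and d.confidence > threshold]


if __name__ == "__main__":
    # Example inference results for one sampled frame (illustrative values only).
    frame = [Detection("car", 0.91), Detection("person", 0.88), Detection("car", 0.42)]
    for d in alerts(frame, "car", 0.8):
        # prints: ALERT: car detected with confidence 0.91
        print(f"ALERT: {d.label} detected with confidence {d.confidence:.2f}")
```

In a real deployment this rule would live in the downstream module (for example, a Stream Analytics job) rather than in LVA itself, which keeps the threshold adjustable without redeploying the video pipeline.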

You can also integrate LVA with Azure services such as Azure Event Hubs (to route video analytics messages to appropriate destinations), Cognitive Services Anomaly Detector (to detect anomalies in time-series data), and Azure Time Series Insights (to visualize video analytics data). This enables you to build powerful hybrid (edge plus cloud) applications.

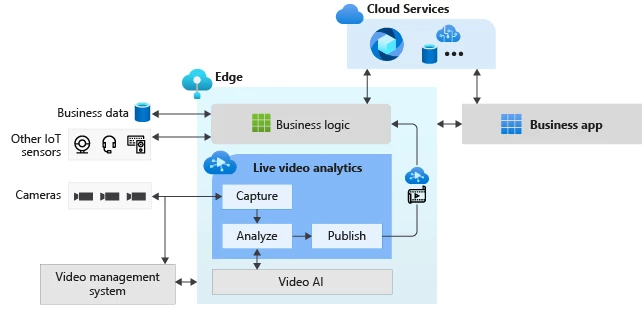

With LVA on IoT Edge, you can continue to use your CCTV cameras with your existing video management systems (VMS) and build video analytics apps independently. It can also be used in conjunction with existing computer vision SDKs (e.g. extract text from video frames) to build cutting edge, hardware-accelerated live video analytics enabled IoT solutions. The diagram below illustrates this process:

Use cases for LVA

With LVA, you can bring the AI of your choice, whether first-party Microsoft AI models, open source models, or third-party models, and integrate it with LVA for different use cases.

Retail

Retailers can use LVA to analyze video from cameras in their parking lots to detect incoming cars and match them to registered consumers, enabling curbside pickup of items the consumers ordered through the online store. This lets consumers and employees maintain a safe physical distance from each other, which is particularly important in the current pandemic environment.

In addition, retailers can use video analytics to understand how consumers view and interact with products and displays in their stores to make decisions about product placement. They can also use real-time video analytics to build interactive displays that respond to consumer behavior.

Transportation

When it comes to transportation and traffic, video analytics can be used to monitor parking spots and track their usage, so that automated “No parking available” signs can be displayed and drivers looking for a spot can be rerouted. In public transportation, it can be used to monitor queues and crowds and identify capacity needs, enabling organizations to add capacity or open new entrances or exits. When these analytics are combined with business data, pricing can be adjusted in real time based on demand and capacity.

Manufacturing

Manufacturers can use LVA to monitor production lines for quality assurance, or to ensure that safety equipment is being used and procedures are being followed. For example, they can check that personnel are wearing helmets where required, or that face shields are lowered when needed.

Platform capabilities

The LVA on IoT Edge platform offers the following capabilities for you to develop video analytics functionality in IoT solutions.

Process video in your own environment

Live Video Analytics on IoT Edge can be deployed on your own appliance in your business environment. Depending on your business needs, you can choose to process the video on your device and send only the analytics data to cloud services such as Power BI. This avoids the cost of moving video from the edge to the cloud and helps address privacy and compliance concerns.

Analyze video with your own AI

Live Video Analytics on IoT Edge enables you to plug in your own AI and be in control of analyzing your video per your business needs. You have flexibility in using your own custom-built AI, open source AI, or AI built by companies specializing in your business domain.

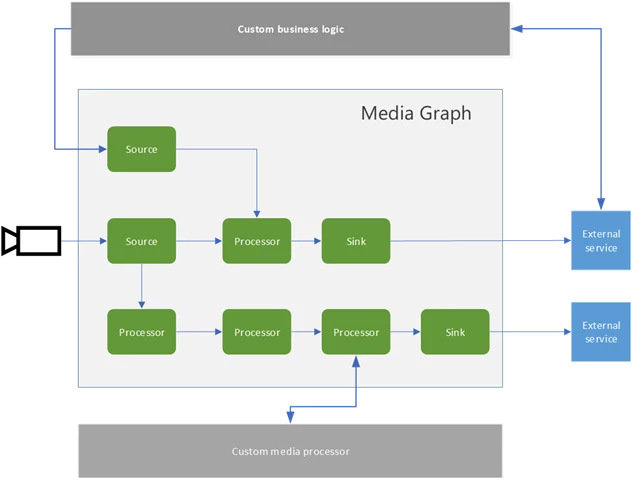

Flexible live video workflows

You can define a variety of live video workflows using the concept of Media Graph. Media Graph lets you define where video should be captured from, how it should be processed, and where the results should be delivered. You accomplish this by connecting components, or nodes, in the desired manner. The diagram below provides a graphical representation of a Media Graph. You can learn more about it on the Media Graph concept page.
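Conceptually, a Media Graph is a directed graph in which source nodes capture video, processor nodes transform or analyze it, and sink nodes deliver results. The Python sketch below models only that idea: it checks the wiring and returns the nodes in data-flow order. It is not the LVA topology schema or API; the `Node` class, the `"source"`/`"processor"`/`"sink"` kind strings, and the node names in the example are all hypothetical.

```python
import graphlib  # standard library, Python 3.9+
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Node:
    """Hypothetical graph node; kind is "source", "processor", or "sink"."""
    name: str
    kind: str
    inputs: List[str] = field(default_factory=list)  # names of upstream nodes


def validate(nodes: List[Node]) -> List[str]:
    """Check how the nodes are connected and return their names in data-flow order."""
    by_name: Dict[str, Node] = {n.name: n for n in nodes}
    if len(by_name) != len(nodes):
        raise ValueError("duplicate node names")
    for n in nodes:
        for src in n.inputs:
            if src not in by_name:
                raise ValueError(f"{n.name}: unknown input {src!r}")
            if by_name[src].kind == "sink":
                raise ValueError(f"{n.name}: cannot read from sink {src!r}")
        if n.kind == "source" and n.inputs:
            raise ValueError(f"{n.name}: sources take no inputs")
        if n.kind != "source" and not n.inputs:
            raise ValueError(f"{n.name}: needs at least one input")
    # Map each node to its predecessors; consuming static_order() raises
    # graphlib.CycleError if the connections loop back on themselves.
    sorter = graphlib.TopologicalSorter({n.name: n.inputs for n in nodes})
    return list(sorter.static_order())


if __name__ == "__main__":
    graph = [
        Node("camera", "source"),
        Node("sampler", "processor", ["camera"]),     # e.g. keep N frames per second
        Node("detector", "processor", ["sampler"]),   # e.g. call an AI module
        Node("recorder", "sink", ["camera"]),         # e.g. record the full video
        Node("analytics", "sink", ["detector"]),      # e.g. publish inference results
    ]
    print(validate(graph))
```

Note that the recorder reads directly from the camera while the detector only sees sampled frames; this mirrors the idea that one graph can both record full video and analyze a subset of frames.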

Integrate with other Azure services

LVA on IoT Edge can be combined with other Azure services on the edge and in the cloud to build powerful business applications with relative ease. For example, you can use LVA on IoT Edge to capture video from cameras, sample frames at a frequency of your choice, and use an open source AI model such as YOLO to detect objects. You can then use Azure Stream Analytics on IoT Edge to count and/or filter the objects detected by YOLO and Azure Time Series Insights to visualize the analytics data in the cloud, while Azure Media Services records the video and makes it available for playback in browsers and mobile apps.
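The count-and-filter step in this example is typically a windowed aggregation. The sketch below shows the logic in plain Python as fixed, non-overlapping (tumbling) windows; it is an illustration, not a Stream Analytics query. The `(timestamp, label, confidence)` tuple layout, the 5-second window, and the 0.5 confidence cutoff are assumptions chosen for the example.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical detection record: (timestamp in seconds, label, confidence).
Detection = Tuple[float, str, float]


def count_per_window(detections: Iterable[Detection], window_s: float, min_conf: float) -> Dict[int, Counter]:
    """Count detections per label in tumbling windows, dropping low-confidence results."""
    windows: Dict[int, Counter] = defaultdict(Counter)
    for ts, label, conf in detections:
        if conf < min_conf:
            continue  # filter step: ignore detections below the confidence cutoff
        windows[int(ts // window_s)][label] += 1  # count step: bucket by window index
    return dict(windows)


if __name__ == "__main__":
    sample = [
        (0.5, "car", 0.90),
        (1.2, "person", 0.70),
        (4.9, "car", 0.95),
        (5.1, "car", 0.30),   # filtered out: below the cutoff
        (6.0, "truck", 0.80),
    ]
    for window, counts in sorted(count_per_window(sample, 5.0, 0.5).items()):
        print(window, dict(counts))
    # prints:
    # 0 {'car': 2, 'person': 1}
    # 1 {'truck': 1}
```

Aggregating on the edge like this means only small per-window counts, rather than every raw detection, need to be sent to a cloud service such as Time Series Insights.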

Next steps

To learn more, visit LVA on IoT Edge, watch this demo, and see the LVA on IoT Edge documentation.

Microsoft is committed to designing responsible AI and has published a set of Responsible AI principles. Please review the Transparency Note: Live Video Analytics (LVA) to learn more about designing responsible AI integrations.