Advanced Analytics and Cognitive Intelligence on Petabyte-sized files and trillions of objects with Azure Data Lake

Today we are announcing the general availability of Azure Data Lake, ushering in a new era of productivity for your big data developers and scientists. Fundamentally different from today’s cluster-based solutions, the Azure Data Lake services enable you to securely store all your data centrally in a “no limits” data lake, and run on-demand analytics that instantly scale to your needs. Our state-of-the-art development environment and rich, extensible U-SQL language enable you to write, debug, and optimize massively parallel analytics programs in a fraction of the time required by existing solutions.

Before Azure Data Lake

Traditional approaches to big data analytics constrain the productivity of your data developers and scientists, who spend their time on infrastructure planning and on writing, debugging, & optimizing code with primitive tooling. These approaches also lack rich built-in cognitive capabilities like key phrase extraction, sentiment analysis, image tagging, OCR, face detection, and emotion analysis. The underlying storage systems impose artificial limits on file and account sizes, requiring you to build workarounds. Additionally, either your developers’ valuable time is spent optimizing the system, or you end up overpaying for unused cluster capacity. The friction in these existing systems is so high that it effectively prevents companies from realizing the business transformation that Big Data promises.

With Azure Data Lake

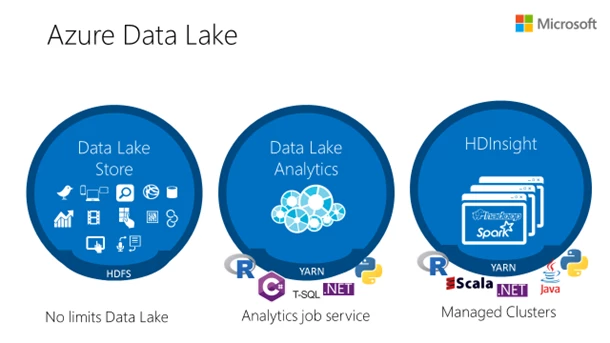

With thousands of customers, Azure Data Lake has become one of the fastest growing Azure services. You can get started on this new era of big data productivity and scalability with the general availability of Azure Data Lake Analytics and Azure Data Lake Store.

- Azure Data Lake Store – the first cloud Data Lake for enterprises that is secure, massively scalable and built to the open HDFS standard. With no limits to the size of data and the ability to run massively parallel analytics, you can now unlock value from all your unstructured, semi-structured and structured data.

- Azure Data Lake Analytics – the first cloud analytics job service where you can easily develop and run massively parallel data transformation and processing programs in U-SQL, R, Python and .NET over petabytes of data. It has rich built-in cognitive capabilities such as image tagging, emotion detection, face detection, deriving meaning from text, and sentiment analysis with the ability to extend to any type of analytics. With Azure Data Lake Analytics, there is no infrastructure to manage, and you can process data on demand, scale instantly, and only pay per job.

- Azure HDInsight – the only fully managed cloud Hadoop offering that provides optimized open source analytic clusters for Spark, Hive, MapReduce, HBase, Storm, Kafka and R Server, backed by a 99.9% SLA. Today, we are announcing the general availability of R Server for HDInsight to do advanced analytics and predictive modelling with R+Spark. Further, we are introducing the public preview of Kafka for HDInsight, now the first managed cluster solution in the cloud for real-time ingestion with Kafka.

Data Lake Store – A No Limits Data Lake that powers Big Data Analytics

Petabyte-sized files and trillions of objects

With Azure Data Lake Store, your organization can now securely capture and analyze all data in a central location with no artificial constraints to limit the growth of your business. It can manage trillions of files, where a single file can be greater than a petabyte in size, a file size 200x larger than other cloud object stores allow. Without the limits that constrain other cloud offerings, Data Lake Store is ideal for managing any type of data, including massive datasets like high-resolution video, genomic and seismic datasets, medical data, and data from a wide variety of industries. Data Lake Store is an enterprise data lake that can power your analytics today and in the future.

“DS-IQ provides Dynamic Shopper Intelligence by curating data from large amounts of non-relational sources like weather, health, traffic, and economic trends so that we can give our customers actionable insights to drive the most effective marketing and service communications. Azure Data Lake was perfect for us because it could scale elastically, on-demand, to petabytes of data within minutes. This scalability and performance has impressed us, giving us confidence that it can handle the amounts of data we need to process today and, in the future, enable us to provide even more valuable, dynamic, context-aware experiences for our clients.”

-William Wu, Chief Technology Officer at DS-IQ

Scalability for massively parallel analytics

Data Lake Store provides massive throughput to run analytic jobs with thousands of concurrent executors that read and write hundreds of terabytes of data efficiently. You are no longer forced to redesign your application or repartition your data, because Data Lake Store scales throughput to support any size of workload. Multiple services, such as Data Lake Analytics, HDInsight, or HDFS-compliant applications, can efficiently analyze the same data simultaneously.

Ecolab has an ambitious mission to find solutions to some of the world’s biggest challenges: clean water, safe food, abundant energy and healthy environments. “Azure Data Lake has been deployed to our water division where we are collecting real-time data from IoT devices so we can help our customers understand how they can reduce, reuse, and recycle water and at the same time address one of the world’s most pressing sustainability issues. We’ve been impressed with Azure Data Lake because it allows us to store any amount of data we require and also lets us use our existing skills to analyze the data. Today, we have both groups who use open source technologies such as Spark in HDInsight to do analytics and other groups that use U-SQL, leveraging the extensibility of C# with the simplicity of SQL.”

-Kevin Doyle, VP of IT, Global Industrial Solutions at Ecolab

Data Lake Analytics – An On-Demand Analytics Job Service to power intelligent action

Start in seconds, scale instantly, pay per job

Our on-demand service will have your data developers and scientists processing big data jobs to power intelligent action in seconds. There is no infrastructure to worry about because there are no servers, VMs, or clusters to wait for, manage or tune. Instantly apply or adjust the analytic units (processing power) from one to hundreds or even thousands for each job. You pay only for the processing used per job, which frees valuable developer time from the capacity planning and optimization that cluster-based systems require and that can take weeks to months.

“Azure Data Lake is instrumental because it helps Insightcentr ingest IoT-scale telemetry from PCs in real-time and gives detailed analytics to our customers without us spending millions of dollars building out big data clusters from scratch. We saw Azure Data Lake as the fastest and most scalable way we can bring our customers these valuable insights to their business.”

-Anthony Stevens, CEO of Australian start-up Devicedesk

Develop massively parallel analytic programs with simplicity

U-SQL is an easy-to-use, highly expressive, and extensible language that allows you to write code once and have it automatically parallelized for the scale you need. Instead of writing low-level code that deals with clusters, nodes, mappers, and reducers, a developer writes a simple logical description of how data should be transformed for their business, using both declarative and imperative techniques as desired. The U-SQL data processing system automatically parallelizes the code, and developers control the amount of resources devoted to parallel computation with the simplicity of a slider. The U-SQL language is highly extensible and can reuse existing libraries written in a variety of languages, such as .NET languages, R, or Python. You can massively parallelize the code to process petabytes of data for diverse workload categories such as ETL, machine learning, feature engineering, image tagging, emotion detection, face detection, deriving meaning from text, and sentiment analysis.

“Azure Data Lake allows us to develop quickly on large sets of data with our current developer expertise. We have been able to leverage Azure Data Lake Analytics to capture and process large marketing audiences for our dynamic marketing platforms.”

-McPherson White, Director of Development at PureCars

Run Big Cognition at Petabyte Scale

Furthermore, we’ve incorporated the technology that sits behind the Cognitive Services API directly inside U-SQL. Now you can process any amount of unstructured data, such as text and images, extract emotions, age, and all sorts of other cognitive features using Azure Data Lake, and perform query by content. You can join emotions from image content with any other type of data you have and do incredibly powerful analytics and intelligence over it. This is what we call ‘Big Cognition’. It’s not just about extracting one piece of cognitive information at a time, or understanding an emotion or whether there’s an object in an image; rather, it’s about joining all the extracted cognitive data with other types of data, so you can do some really powerful analytics with it. We have demonstrated this capability at Microsoft Ignite and PASS Summit, by showing a Big Cognition demo in which we used U-SQL inside Azure Data Lake Analytics to process a million images and understand what’s inside those images. You can watch this demo here and try it yourself using a sample project on GitHub.

Debug and optimize your Big Data programs with ease

With today’s tools, developers face serious challenges debugging distributed programs. Azure Data Lake makes debugging failures in cloud distributed programs as easy as debugging a program in your personal environment using the powerful tools within Visual Studio. Developers no longer need to inspect thousands of logs on each machine searching for failures. When a U-SQL job fails, logs are automatically located, parsed, and filtered to the exact components involved in the failure and made available as a visualization. Developers can even debug the specific parts of the U-SQL job that failed on their own local workstation without wasting time and money resubmitting jobs to the cloud. Our service can detect and analyze common performance problems that big data developers encounter, such as imbalanced data partitioning, and offers suggestions to fix your programs using the intelligence we’ve gathered in the analysis of over a billion jobs in Microsoft’s data lake.
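To make "imbalanced data partitioning" concrete, here is a minimal sketch of one way such skew could be flagged. It is an illustration only, not the check that Data Lake Analytics itself performs: the function names, the sample partition sizes, and the threshold of 2.0 are all assumptions chosen for the example.

```python
from statistics import mean


def skew_ratio(partition_sizes):
    """Return the largest partition size divided by the mean partition size."""
    if not partition_sizes:
        raise ValueError("need at least one partition")
    average = mean(partition_sizes)
    if average == 0:
        return 1.0  # all partitions are empty, so nothing is skewed
    return max(partition_sizes) / average


def is_imbalanced(partition_sizes, threshold=2.0):
    """Flag a layout whose largest partition exceeds `threshold` times the mean.

    The threshold is a hypothetical value picked for illustration.
    """
    return skew_ratio(partition_sizes) > threshold


# Hypothetical row counts per partition.
balanced = [100, 110, 95, 105]
skewed = [10, 12, 9, 400]

print(is_imbalanced(balanced))  # False
print(is_imbalanced(skewed))    # True
```

When one partition dwarfs the rest, the executor that processes it becomes the bottleneck while the others sit idle, which is why a ratio against the mean is a common first signal.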

Developers do a lot of heavy lifting when optimizing big data systems and frequently overpay for unused cluster capacity. Developers must manually optimize their data transformations, requiring them to carefully investigate how their data is transformed step-by-step, often manually ordering steps to gain improvements. Understanding performance and scale bottlenecks is challenging and requires distributed computing and infrastructure experts. For example, to improve performance, developers must carefully account for the time & cost of data movement across a cluster and rewrite their queries or repartition their data. Data Lake’s execution environment actively analyzes your programs as they run and offers recommendations to improve performance and reduce cost. For example, if you requested 1000 AUs for your program and only 50 AUs were needed, the system would recommend that you only use 50 AUs resulting in a 20x cost savings.
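The arithmetic behind the 1000 AU versus 50 AU example can be sketched in a few lines. This assumes a simple cost model in which a job's cost is proportional to the AUs allocated multiplied by the job's duration; the function names and the rate of 2.0 per AU-hour are hypothetical and are not actual pricing.

```python
def job_cost(aus, hours, rate_per_au_hour):
    """Cost of one job under the assumed model: AUs x duration x rate."""
    if aus <= 0 or hours < 0 or rate_per_au_hour < 0:
        raise ValueError("aus must be positive; hours and rate must be non-negative")
    return aus * hours * rate_per_au_hour


def savings_factor(requested_aus, needed_aus):
    """How many times cheaper the job is if only the needed AUs are allocated.

    Assumes the job takes the same time either way, because the extra
    AUs were never used.
    """
    if requested_aus <= 0 or needed_aus <= 0:
        raise ValueError("AU counts must be positive")
    return requested_aus / needed_aus


RATE = 2.0  # hypothetical price per AU-hour, for illustration only

over_provisioned = job_cost(1000, 1.0, RATE)
right_sized = job_cost(50, 1.0, RATE)

print(savings_factor(1000, 50))        # 20.0
print(over_provisioned / right_sized)  # 20.0
```

The rate cancels out of the ratio, so the 20x figure holds under this model whatever the actual price per AU-hour is.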

“We ingest a massive amount of live data from mobile, web, IoT and retail transactions. Data Lake gives us the ability to easily and cost effectively store everything and analyse what we need to, when we need to. The simplicity of ramping up parallel processing on the U-SQL queries removes the technical complexities of fighting with the data and lets the teams focus on the business outcomes. We are now taking this a step further and exposing the powerful Data Lake tools directly to our clients in our software allowing them to more easily explore their data using these tools.”

-David Inggs, CTO at Plexure

Today, we are also announcing the availability of this big data productivity environment in Visual Studio Code allowing users to have this type of productivity in a free cross-platform code editor that is available on Windows, Mac OS X, and Linux.

Azure HDInsight introduces fully managed Kafka for real-time analytics and R Server for advanced analytics

At Strata + Hadoop World New York, we announced new security, performance and ISV solutions that build on Azure HDInsight’s leadership for enterprise-ready cloud Hadoop. Today, we are announcing the public preview of Kafka for HDInsight. This service lets you ingest massive amounts of real-time data and analyze that data through integration with Storm and Spark for HDInsight and with Azure IoT Hub, so you can build end-to-end IoT, fraud detection, click-stream analysis, financial alerts, or social analytics solutions.

We are also announcing the general availability of R Server for HDInsight. Running Microsoft R Server as a service on top of Apache Spark, developers can achieve unprecedented scale and performance with code that combines the familiarity of the open source R language and Spark. Multi-threaded math libraries and transparent parallelization in R Server enable handling up to 1000x more data at speeds up to 50x faster than open source R, helping you train more accurate models for better predictions than previously possible. New with GA is the inclusion of RStudio Server Community Edition out of the box, making it easy for data scientists to get started quickly.

Milliman is among the world’s largest providers of actuarial and related products and services, with offices in major cities around the globe. “R Server for HDInsight offers the ability for our clients to be able to forecast risk over much larger datasets than ever before, improving the accuracy of predictions, in a cost-efficient way. The familiarity of the R Programming language to our users, as well as the ability to spin up Hadoop and Spark clusters within minutes, running at unprecedented scale and performance, is what really gets me excited about R Server for HDInsight.”

-Paul Maher, Chief Technology Officer of the Life Technology Solutions practice at Milliman

Enterprise-grade Security, Auditing and Support

Enterprise-grade big data solutions must meet uptime guarantees and stringent security, governance & compliance requirements, and integrate with your existing IT investments. Data Lake services (Store, Analytics, and HDInsight) guarantee an industry-leading 99.9% uptime SLA and provide 24/7 support. They are built with the highest levels of security for authentication, authorization, auditing, and encryption to give you peace of mind when storing and analyzing sensitive corporate data and intellectual property. Data is always encrypted: in motion using SSL, and at rest using service- or user-managed HSM-backed keys in Azure Key Vault. Capabilities such as single sign-on (SSO), multi-factor authentication and seamless management of your on-premises identity & access management are built in through Azure Active Directory. You can authorize users and groups with fine-grained POSIX-based ACLs for all data in the Store, or with Apache Ranger in HDInsight, enabling role-based access controls. Every access or configuration change is automatically audited for security and regulatory compliance requirements.
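For readers unfamiliar with POSIX-style ACLs, the sketch below shows the general idea of how a permission check resolves against owner, named-user, group, and other entries. It is a simplified illustration rather than the Data Lake Store API: the `Acl` class, the `is_allowed` function, and the user and group names are all hypothetical, and the model deliberately ignores the mask entry and named groups that full POSIX ACLs include.

```python
from dataclasses import dataclass, field


@dataclass
class Acl:
    """A simplified POSIX-style ACL for one file or folder (hypothetical model)."""
    owner: str
    group: str
    owner_perms: str   # three characters, e.g. "rwx" or "r-x"
    group_perms: str
    other_perms: str
    named_users: dict = field(default_factory=dict)  # user name -> perms


def is_allowed(acl, user, user_groups, wanted):
    """Return True if `user` holds the `wanted` permission ('r', 'w' or 'x').

    Entries are checked in order: owner, named user, owning group, other.
    The first matching entry decides the outcome.
    """
    if wanted not in ("r", "w", "x"):
        raise ValueError("wanted must be 'r', 'w' or 'x'")
    if user == acl.owner:
        perms = acl.owner_perms
    elif user in acl.named_users:
        perms = acl.named_users[user]
    elif acl.group in user_groups:
        perms = acl.group_perms
    else:
        perms = acl.other_perms
    return wanted in perms


# Hypothetical ACL: owner has full access, the group can read and traverse,
# everyone else has no access, and one named user can read and write.
acl = Acl("alice", "analysts", "rwx", "r-x", "---", {"bob": "rw-"})

print(is_allowed(acl, "alice", set(), "w"))         # True
print(is_allowed(acl, "carol", {"analysts"}, "w"))  # False
```

Because the first matching entry wins, a named-user entry can grant or restrict access for one person independently of the groups they belong to, which is what makes this style of ACL fine-grained.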

Supporting open source and open standards

Microsoft continues to collaborate with the open source community, as reflected by our contributions to Apache Hadoop, Spark, and Apache REEF and by our work with Jupyter notebooks. This is also the case with Azure Data Lake.

Azure Data Lake Analytics uses Apache YARN, the central part of Apache Hadoop, to govern resource management and deliver consistent operations. Microsoft has been a primary contributor to YARN, improving its performance and scale and making security innovations.

“Hortonworks and Microsoft have partnered closely for the past 5 years to further the Hadoop platform for big data analytics, including contributions to YARN, Hive, and other Apache projects. Azure Data Lake services, including Azure HDInsight and Azure Data Lake Store, demonstrate our shared commitment to make it easier for everyone to work with big data in an open and collaborative way.”

-Shaun Connolly, Chief Strategy Officer at Hortonworks

Data Lake Store supports the open Apache Hadoop Distributed File System (HDFS) standard. Microsoft has also contributed improvements to HDFS such as OAuth 2.0 protocol support.

“Cloudera is working closely with Microsoft to integrate Cloudera Enterprise with the Azure Data Lake Store. Cloudera on Azure will benefit from the Data Lake Store which acts as a cloud-based landing zone for all data in your enterprise data hub. Cloudera will leverage Data Lake and provide customers with a secure and flexible big data solution in the future.”

-Mike Olson, founder and chief strategy officer at Cloudera

Leadership

Both industry analysts and customers recognize Microsoft’s capabilities in big data. Forrester recently recognized Microsoft Azure as a leader in its Big Data Hadoop Cloud Solutions evaluation. Forrester notes that leaders have the most comprehensive, scalable, and integrated platforms. Microsoft specifically was called out for having a cloud-first strategy that is paying off.

Getting started with Data Lake

Data Lake Analytics and Data Lake Store are generally available today. R Server for HDInsight is also generally available today. Kafka for HDInsight is in public preview. Try them today individually or as part of Cortana Intelligence Suite to transform your data into intelligent action.

- Read the overview, pricing and getting started pages of Data Lake Analytics or attend the free course

- Read the overview, pricing and getting started pages of Data Lake Store

- Read the R Server, Kafka overview and pricing pages of HDInsight

@josephsirosh