Event-driven architecture (EDA) is a common data integration pattern that involves the production, detection, and consumption of, and reaction to, events. Today, we are announcing support for event-based triggers in your Azure Data Factory (ADF) pipelines.

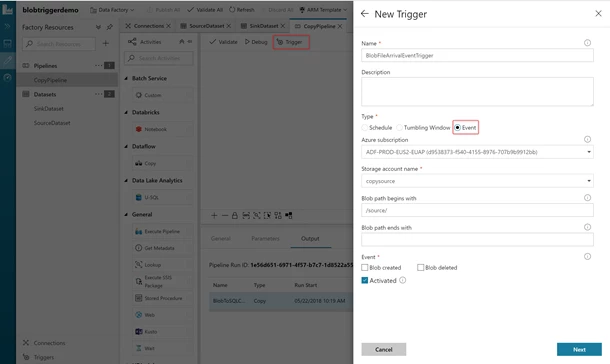

Many data integration scenarios require Data Factory customers to trigger pipelines based on events. A typical event is a file landing in, or being deleted from, your Azure Storage account. Now you can simply create an event-based trigger in your Data Factory pipeline.

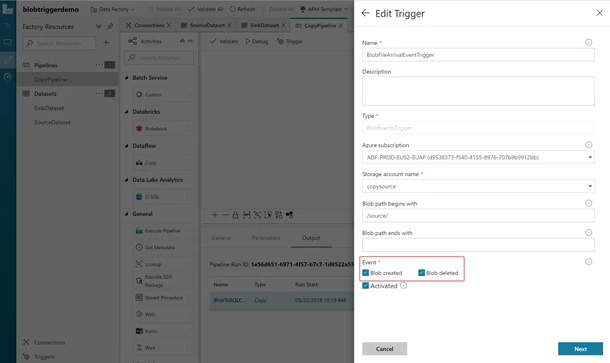

As soon as a file arrives in your storage location and the corresponding blob is created, the trigger fires and runs your Data Factory pipeline. You can create an event-based trigger on blob creation, blob deletion, or both.
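Triggers can also be expressed as JSON definitions. The Python sketch below assembles a dict in the shape we understand an ADF blob event trigger to take (type `BlobEventsTrigger`, with `blobPathBeginsWith`, `blobPathEndsWith`, `scope`, `events`, and `pipelines`); treat the exact schema as an assumption and check it against the current Data Factory documentation. The creation and deletion flags map to the Event Grid event types `Microsoft.Storage.BlobCreated` and `Microsoft.Storage.BlobDeleted`. The function `build_blob_event_trigger`, the trigger and pipeline names, and the `<sub-id>`-style scope placeholders are hypothetical.

```python
import json

# Event Grid event type names for Azure Blob Storage.
BLOB_CREATED = "Microsoft.Storage.BlobCreated"
BLOB_DELETED = "Microsoft.Storage.BlobDeleted"


def build_blob_event_trigger(name, pipeline_name, storage_scope,
                             begins_with=None, ends_with=None,
                             on_create=True, on_delete=False):
    """Return a dict shaped like an ADF blob event trigger (hypothetical helper)."""
    # At least one path filter must be supplied.
    if not (begins_with or ends_with):
        raise ValueError("set at least one of begins_with / ends_with")

    # Fire on blob creation, blob deletion, or both.
    events = []
    if on_create:
        events.append(BLOB_CREATED)
    if on_delete:
        events.append(BLOB_DELETED)
    if not events:
        raise ValueError("select blob creation, blob deletion, or both")

    type_properties = {"scope": storage_scope, "events": events}
    if begins_with:
        type_properties["blobPathBeginsWith"] = begins_with
    if ends_with:
        type_properties["blobPathEndsWith"] = ends_with

    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": type_properties,
            # The pipeline(s) this trigger starts.
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name,
                                       "type": "PipelineReference"}}
            ],
        },
    }


if __name__ == "__main__":
    trigger = build_blob_event_trigger(
        name="NewCsvTrigger",                  # hypothetical trigger name
        pipeline_name="CopyNewFilesPipeline",  # hypothetical pipeline name
        storage_scope="/subscriptions/<sub-id>/resourceGroups/<rg>/providers/"
                      "Microsoft.Storage/storageAccounts/<account>",
        begins_with="/containername/blobs/foldername/",
        ends_with=".csv",
        on_create=True,
        on_delete=True,
    )
    print(json.dumps(trigger, indent=2))
```

Keeping the event list explicit mirrors the portal choice of creation, deletion, or both, and rejecting a definition with no path filter reflects the rule that at least one path property is required.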

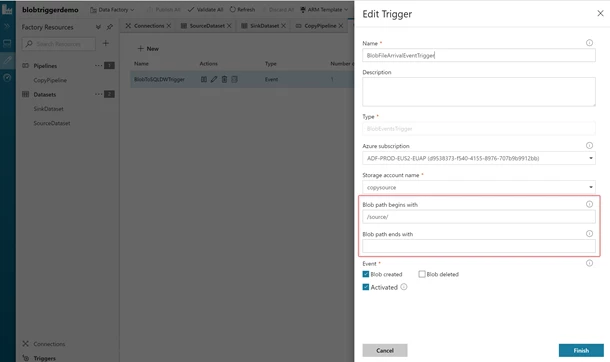

With the “Blob path begins with” and “Blob path ends with” properties, you can specify the containers, folders, and blob names for which you want to receive events. Both properties accept a wide variety of patterns, and at least one of them is required.

Examples:

- Blob path begins with (/containername/) – Will receive events for any blob in the container.

- Blob path begins with (/containername/blobs/foldername) – Will receive events for any blob in the foldername folder of the containername container.

- Blob path begins with (/containername/blobs/foldername/file.txt) – Will receive events for a blob named file.txt in the foldername folder under the containername container.

- Blob path ends with (file.txt) – Will receive events for a blob named file.txt at any path.

- Blob path ends with (/containername/blobs/file.txt) – Will receive events for a blob named file.txt under the containername container.

- Blob path ends with (foldername/file.txt) – Will receive events for a blob named file.txt in a folder named foldername under any container.
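To make these examples concrete, here is a simplified Python model of how the two properties could filter events. It assumes Event Grid delivers blob events with a subject of the form `/blobServices/default/containers/<container>/blobs/<path>`, converts that into the `/containername/blobs/...` form used above, and applies plain prefix and suffix string checks, requiring every property that is set to match. The helpers `subject_to_adf_path` and `matches` are hypothetical; this is an illustration, not Data Factory's actual implementation.

```python
EVENT_GRID_PREFIX = "/blobServices/default/containers/"


def subject_to_adf_path(subject):
    """Convert an Event Grid blob subject into '/container/blobs/path' form."""
    if not subject.startswith(EVENT_GRID_PREFIX):
        raise ValueError(f"unexpected subject: {subject!r}")
    return "/" + subject[len(EVENT_GRID_PREFIX):]


def matches(path, begins_with=None, ends_with=None):
    """Simplified filter: every property that is set must match the path."""
    if not (begins_with or ends_with):
        raise ValueError("at least one of begins_with / ends_with is required")
    if begins_with and not path.startswith(begins_with):
        return False
    if ends_with and not path.endswith(ends_with):
        return False
    return True


if __name__ == "__main__":
    subject = ("/blobServices/default/containers/containername"
               "/blobs/foldername/file.txt")
    path = subject_to_adf_path(subject)

    # Rules taken from the examples above, as (begins_with, ends_with) pairs.
    rules = [
        ("/containername/", None),
        ("/containername/blobs/foldername", None),
        ("/containername/blobs/foldername/file.txt", None),
        (None, "file.txt"),
        (None, "/containername/blobs/file.txt"),
        (None, "foldername/file.txt"),
    ]
    for begins, ends in rules:
        print(f"begins={begins!r} ends={ends!r} -> {matches(path, begins, ends)}")
```

One consequence of plain prefix matching in this model: `/containername/blobs/foldername` also matches a sibling folder such as `foldername2`, so ending a folder prefix with `/` is the safer choice here.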

Our goal is to continue adding features and improving the usability of Data Factory tools. Get more information and detailed steps on event-based triggers in Data Factory.

Get started building pipelines easily and quickly using Azure Data Factory. If you have any feature requests or want to provide feedback, please visit the Azure Data Factory forum.