Azure Blob storage is a massively scalable object storage solution that holds anywhere from small amounts to hundreds of petabytes of data per customer across a diverse set of data types, including logging, documents, media, genomics, seismic processing, and more. Read the Introduction to Azure Blob storage to learn more about how it can be used in a wide variety of scenarios.

Increasing file size support for Blob storage

Customers with on-premises workloads today use files whose size is limited only by the filesystem, and filesystem maximums can reach into the exabytes. Most usage does not approach the filesystem limit, but specific workloads that rely on large files do scale up to tens of terabytes per file. We recently announced the preview of our new maximum blob size of 200 TB (specifically 209.7 TB), up from our current limit of 5 TB, which is a 40x increase! At over 200 TB per object, the new limit is much larger than that of other vendors that provide a 5 TB maximum object size. This increase allows workloads that currently require multi-TB files to be moved to Azure without additional work to break up these large objects.

This increase in the object size limit will unblock workloads that use multi-TB objects, including seismic analysis, backup files, media and entertainment (video rendering and processing), and others. As an example, a media company that is moving from a private datacenter to Azure can now do so because we support files up to 200 TB in size. Increasing our object size removes the need to carefully inventory existing file sizes as part of a plan to migrate a workload to Azure. Given that many on-premises solutions can store files that are tens to hundreds of terabytes in size, removing this gap simplifies migration to Azure.

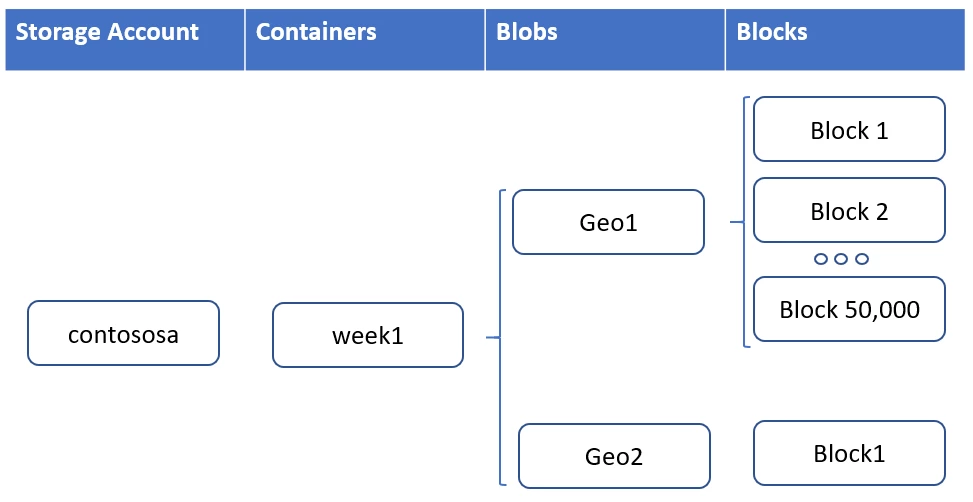

With large file size support, being able to break up an object into blocks to ease upload and download is critical. Every Azure blob is made up of up to 50,000 blocks, which allows a multi-terabyte object to be broken down into manageable pieces for writing. The previous maximum of 5 TB (approximately 4.77 TiB) was based on a maximum block size of 100 MiB x 50,000 blocks. The preview increases the maximum block size to 4,000 MiB and keeps 50,000 blocks per object, for a maximum object size of 4,000 MiB x 50,000 = 190.7 TiB. Conceptually, your application (or the utility or SDK it uses) breaks the large file into blocks and writes each block to Azure Storage; after all blocks have been uploaded successfully, the entire file (object) is committed.
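The block arithmetic above can be checked with a short sketch. This is an illustration only, not part of any Azure SDK: the helper names `max_blob_size` and `plan_blocks` are hypothetical, and the code makes no calls to Azure Storage. It reproduces the old and preview limits from the block size and block count, and shows how a large file might be divided into (offset, length) ranges before each block is uploaded.

```python
"""Illustrative block arithmetic for block blobs (no Azure SDK calls)."""

MIB = 1024 ** 2   # mebibyte
TIB = 1024 ** 4   # tebibyte
TB = 1000 ** 4    # decimal terabyte

MAX_BLOCKS = 50_000                   # blocks per blob, as stated in the text
OLD_MAX_BLOCK_SIZE = 100 * MIB        # previous maximum block size
PREVIEW_MAX_BLOCK_SIZE = 4_000 * MIB  # preview maximum block size


def max_blob_size(block_size):
    """Largest blob, in bytes, that MAX_BLOCKS blocks of block_size can hold."""
    return block_size * MAX_BLOCKS


def plan_blocks(file_size, block_size):
    """Return a list of (offset, length) byte ranges, one per block.

    Hypothetical helper: raises ValueError if the file cannot fit
    within MAX_BLOCKS blocks of the requested size.
    """
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    if not 0 < block_size <= PREVIEW_MAX_BLOCK_SIZE:
        raise ValueError("block_size is outside the supported range")
    block_count = -(-file_size // block_size)  # ceiling division
    if block_count > MAX_BLOCKS:
        raise ValueError(
            f"{block_count} blocks needed, but at most {MAX_BLOCKS} are allowed"
        )
    return [
        (offset, min(block_size, file_size - offset))
        for offset in range(0, file_size, block_size)
    ]


if __name__ == "__main__":
    for label, size in (("previous", OLD_MAX_BLOCK_SIZE),
                        ("preview", PREVIEW_MAX_BLOCK_SIZE)):
        limit = max_blob_size(size)
        print(f"{label}: {limit / TB:.1f} TB = {limit / TIB:.2f} TiB")

    # A 10 TiB file is an arbitrary example size chosen for illustration.
    plan = plan_blocks(10 * TIB, PREVIEW_MAX_BLOCK_SIZE)
    print(f"10 TiB file: {len(plan)} blocks, "
          f"last block {plan[-1][1] // MIB} MiB")
```

In a real upload, each planned range would be written as one block and the full list committed at the end. In the REST API these two steps correspond to the Put Block and Put Block List operations.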

As an example of the overall relationship within a storage account, the following diagram shows a storage account, Contososa, which contains one container with two blobs. The first is a large blob made up of 50,000 blocks. The second is a small blob made of a single block.
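The same relationship can be modeled as a small in-memory data structure. This is only a sketch of the hierarchy described above, not a representation of how Azure Storage is implemented: the classes `StorageAccount`, `Container`, and `Blob`, the container name `media`, and both blob names are hypothetical, and the block sizes (4,000 MiB for the large blob, 1 MiB for the small one) are assumed for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MIB = 1024 ** 2
MAX_BLOCKS_PER_BLOB = 50_000  # limit stated in the text


@dataclass
class Blob:
    name: str
    block_sizes: List[int]  # size in bytes of each committed block

    def __post_init__(self):
        if len(self.block_sizes) > MAX_BLOCKS_PER_BLOB:
            raise ValueError("a blob can hold at most 50,000 blocks")

    @property
    def size(self) -> int:
        """Total blob size is the sum of its block sizes."""
        return sum(self.block_sizes)


@dataclass
class Container:
    name: str
    blobs: Dict[str, Blob] = field(default_factory=dict)

    def add(self, blob: Blob) -> None:
        self.blobs[blob.name] = blob


@dataclass
class StorageAccount:
    name: str
    containers: Dict[str, Container] = field(default_factory=dict)

    def add(self, container: Container) -> None:
        self.containers[container.name] = container


# One account, one container, two blobs, mirroring the description above.
account = StorageAccount("contososa")
container = Container("media")  # hypothetical container name
account.add(container)

# Large blob: 50,000 blocks, each assumed to be the 4,000 MiB preview maximum.
large = Blob("large-render.mov", [4_000 * MIB] * MAX_BLOCKS_PER_BLOB)
# Small blob: a single block, assumed here to be 1 MiB.
small = Blob("notes.txt", [1 * MIB])
container.add(large)
container.add(small)

for blob in container.blobs.values():
    print(f"{account.name}/{container.name}/{blob.name}: "
          f"{len(blob.block_sizes)} block(s), {blob.size} bytes")
```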

The 200 TB preview block blob size is supported in all regions and across tiers, including Premium, Hot, Cool, and Archive. There is no additional charge for this preview capability. Uploading very large objects through the Azure portal is not supported. The various methods to transfer data into Azure will be updated to make use of this new blob size. To get started today, choose your language:

- .NET
- Java
- JavaScript
- Python
- REST

Next steps

We look forward to hearing your feedback via email or a post in the Azure Storage TechNet forum.