In the industry, the concept of a data lake is relatively new. It is an enterprise-wide repository in which every type of data is collected in a single place before any formal definition of requirements or schema. This allows every type of data to be kept without discrimination, regardless of its size, structure, or how fast it is ingested. Organizations can then use Hadoop or advanced analytics to find patterns in the data. Data lakes can also serve as a repository for lower-cost data preparation before curated data is moved into a data warehouse.
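
To make "prior to any formal definition of requirements or schema" concrete, here is a minimal sketch of the schema-on-read idea in plain Python. It is an illustration only and is not tied to any Azure Data Lake API; the sample records, the `raw_lake` list, and the `read_with_schema` helper are all hypothetical.

```python
import json

# Hypothetical raw records, stored exactly as they arrived.
# Nothing enforces a common schema when the data is written.
raw_lake = [
    '{"device": "sensor-1", "temp_c": 21.5}',
    '{"user": "alice", "page": "/home", "ms": 120}',
    '{"device": "sensor-2", "temp_c": 19.0, "humidity": 0.4}',
]


def read_with_schema(lines, required_fields):
    """Apply a schema at read time: parse each raw line and yield only
    the records that contain every required field, projected onto them."""
    for line in lines:
        record = json.loads(line)
        if all(field in record for field in required_fields):
            yield {field: record[field] for field in required_fields}


# Two different analyses impose two different schemas on the same raw data.
sensor_readings = list(read_with_schema(raw_lake, ["device", "temp_c"]))
page_views = list(read_with_schema(raw_lake, ["user", "page"]))

print(sensor_readings)
print(page_views)
```

The same raw store serves both questions because the shape of the data is decided by each reader rather than fixed at ingestion time.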

While the potential of the data lake can be profound, it has yet to be fully realized. Limits on storage capacity, hardware acquisition, scalability, performance, and cost are all potential reasons why customers haven’t been able to implement a data lake. Today at Build, we announced Azure Data Lake, Microsoft’s hyperscale repository for big data analytics workloads in the cloud. This offering is built for the cloud, compatible with HDFS, and has unbounded scale with massive throughput and enterprise-grade capabilities.

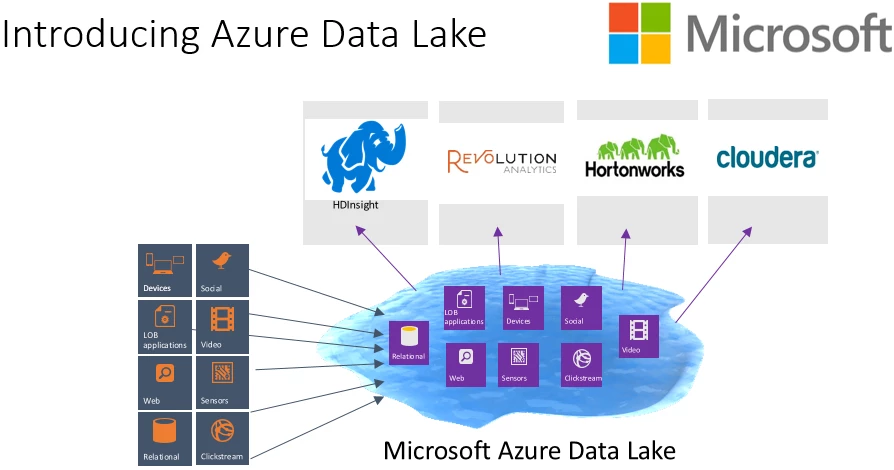

- HDFS for the Cloud: Azure Data Lake is a Hadoop file system compatible with HDFS. This lets Microsoft offerings such as Azure HDInsight and Revolution R Enterprise, as well as industry Hadoop distributions like Hortonworks and Cloudera, connect to it.

- Petabyte files, massive throughput: The goal of the data lake is to run Hadoop and advanced analytics on all your data and draw conclusions from the data itself. To do this, the data lake must be built to support massively parallel queries so that discoveries can be returned in a timely fashion. Azure Data Lake meets this requirement with no fixed limits on how much data can be stored in a single account. It can also store very large files with no fixed limits on size. Finally, it is built to handle high volumes of small writes at low latency, making it optimized for near real-time scenarios like website analytics, Internet of Things (IoT), analytics from sensors, and others.

- Enterprise ready: Being “enterprise ready” means that you can run this solution as an important part of your existing data platform. Azure Data Lake does this by leveraging Azure Active Directory and by providing data replication to ensure high durability and availability.

Microsoft has been on a journey toward broad big data adoption with a suite of big data and advanced analytics solutions like Azure HDInsight, Azure Data Factory, Revolution R Enterprise, and Azure Machine Learning. We are excited about what Azure Data Lake will bring to this ecosystem, and about the day when our customers can run all of their analysis on exabytes of data. To learn more about this solution or sign up to be notified of public preview, go to https://azure.com/datalake.