Organizations around the world are gearing up for a future powered by artificial intelligence (AI). From supply chain systems to genomics, and from predictive maintenance to autonomous systems, every aspect of this transformation makes use of AI. This raises a very important question: How do we make sure that AI systems and models behave ethically and deliver results that can be explained and backed with data?

This week at Spark + AI Summit, we talked about Microsoft’s commitment to the advancement of AI and machine learning driven by principles that put people first.

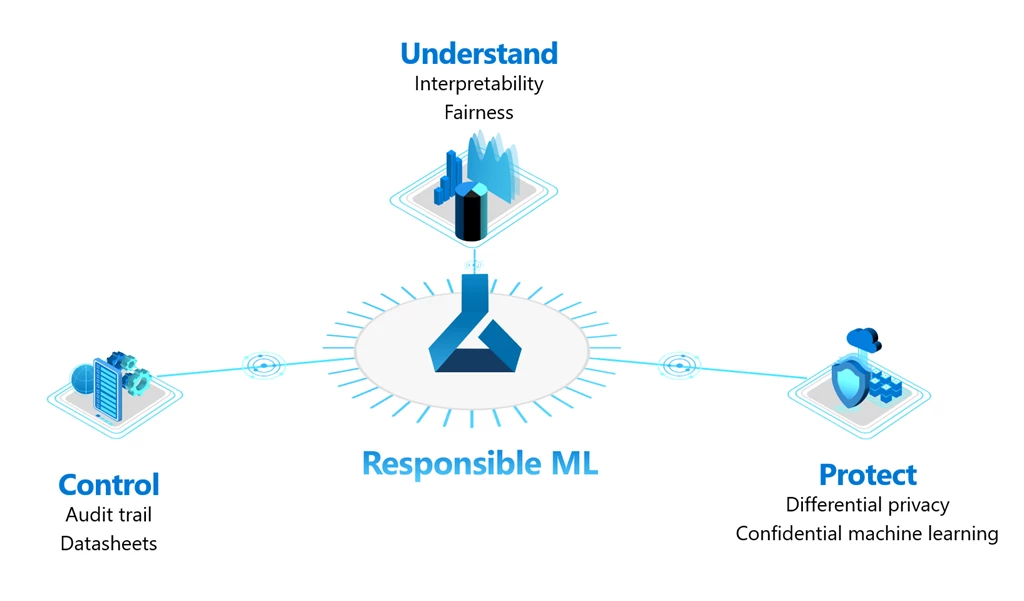

Understand, protect, and control your machine learning solution

Over the past several years, machine learning has moved out of research labs and into the mainstream and has grown from a niche discipline for data scientists with PhDs to one where all developers are empowered to participate. With power comes responsibility. As the audience for machine learning expands, practitioners are increasingly asked to build AI systems that are easy to explain and that comply with privacy regulations.

To navigate these hurdles, we at Microsoft, in collaboration with the Aether Committee and its working groups, have made available our responsible machine learning (responsible ML) innovations that help developers understand, protect and control their models throughout the machine learning lifecycle. These capabilities can be accessed in any Python-based environment and have been open sourced on GitHub to invite community contributions.

Understanding model behavior includes being able to explain a model's predictions and to detect and reduce unfairness in them. The interpretability and fairness assessment capabilities, powered by the InterpretML and Fairlearn toolkits respectively, enable this understanding. These toolkits help determine model behavior, mitigate unfairness, and improve transparency within the models.
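To make the idea of a fairness assessment concrete, here is a minimal sketch of one common check: comparing how often a model predicts the positive outcome for each group. It is hand-rolled in plain Python and is not the Fairlearn API; the function names `selection_rates` and `parity_gap`, and the sample data, are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups.

    A gap of 0 means every group receives positive predictions at the
    same rate; larger values suggest the model treats groups differently.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical predictions for eight applicants in two groups.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
print(selection_rates(preds, groups))  # {'a': 0.75, 'b': 0.25}
print(parity_gap(preds, groups))       # 0.5
```

A gap this large would usually prompt a closer look at the training data and features before any mitigation step is applied.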

Protecting the data used to create models, by ensuring data privacy and confidentiality, is another important aspect of responsible ML. We’ve released a differential privacy toolkit, developed in collaboration with researchers at the Harvard Institute for Quantitative Social Science and School of Engineering. The toolkit applies statistical noise to the data while maintaining an information budget. This protects each individual’s privacy while still allowing the machine learning process to run.
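The combination of statistical noise and a budget can be illustrated with a small sketch. The code below is not the toolkit's API. It assumes the "information budget" corresponds to the privacy parameter epsilon used in differential privacy, and it uses the standard Laplace mechanism for a counting query. The class `PrivateCounter` and its method names are hypothetical.

```python
import random


class PrivateCounter:
    """Answers counting queries with Laplace noise and tracks a budget.

    Illustrative only: each query spends part of a fixed epsilon budget,
    and queries are refused once the budget is used up.
    """

    def __init__(self, total_epsilon, seed=None):
        self.remaining_epsilon = total_epsilon
        self._rng = random.Random(seed)

    def noisy_count(self, records, predicate, epsilon):
        if epsilon <= 0:
            raise ValueError("epsilon must be positive")
        if epsilon > self.remaining_epsilon:
            raise RuntimeError("privacy budget exhausted")
        self.remaining_epsilon -= epsilon

        true_count = sum(1 for r in records if predicate(r))
        # Adding or removing one person changes a count by at most 1
        # (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
        scale = 1.0 / epsilon
        # The difference of two exponential samples with mean `scale`
        # follows a Laplace distribution centered at 0.
        noise = (self._rng.expovariate(1.0 / scale)
                 - self._rng.expovariate(1.0 / scale))
        return true_count + noise


# Hypothetical usage: two queries, each spending half the budget.
ages = [23, 35, 41, 58, 62]
counter = PrivateCounter(total_epsilon=1.0)
print(counter.noisy_count(ages, lambda a: a >= 40, epsilon=0.5))
print(counter.remaining_epsilon)  # 0.5
```

A smaller epsilon per query adds more noise and preserves more of the budget, so the choice is a trade-off between accuracy and the number of questions that can be asked of the data.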

Controlling models and their metadata with features such as audit trails and datasheets brings the responsible ML capabilities full circle. In Azure Machine Learning, auditing capabilities track all actions throughout the lifecycle of a machine learning model. For compliance reasons, organizations can use this audit trail to trace how and why a model’s predictions showed certain behavior.

Many customers, such as EY and Scandinavian Airlines, use these capabilities today to build ethical, compliant, transparent, and trustworthy solutions while improving their customer experiences.

Our continued commitment to open source

In addition to open sourcing our responsible ML toolkits, we are sharing two more projects with the community. The first is Hyperspace, a new extensible indexing subsystem for Apache Spark. It is designed to work as a simple add-on and comes with Scala, Python, and .NET support. Hyperspace is the same technology that powers the indexing engine inside Azure Synapse Analytics. In benchmarking against common workloads like TPC-H and TPC-DS, Hyperspace has provided gains of 2x and 1.8x, respectively. Hyperspace is now on GitHub. We look forward to seeing new ideas and contributions on Hyperspace to make Apache Spark’s performance even better.

The second is a preview of ONNX Runtime’s support for accelerated training. The latest release of training acceleration incorporates innovations from the AI at Scale initiative, such as ZeRO optimization and Project Parasail, which improve memory utilization and parallelism on GPUs.

We deeply value our partnership with the open source community and look forward to collaborating to establish responsible ML practices in the industry.

Additional resources

- Learn more about responsible ML.

- Walk through an interactive demo for responsible ML.

- Read the IDC white paper on responsible AI.

- Use the Azure architecture center for proven architectures on analytics and AI.