Deep learning is behind many recent breakthroughs in Artificial Intelligence, including speech recognition, language understanding and computer vision. At Microsoft, it is changing the customer experience in many of our applications and services, including Cortana, Bing, Office 365, SwiftKey, Skype Translator, Dynamics 365, and HoloLens. Deep learning-based language translation in Skype was recently named one of the 7 greatest software innovations of the year by Popular Science, and the technology helped us achieve human parity in conversational speech recognition. Deep learning is now a core feature of development platforms such as the Microsoft Cognitive Toolkit, Cortana Intelligence Suite, Microsoft Cognitive Services, Azure Machine Learning, Bot Framework, and the Azure Bot Service. I believe that the applications of this technology are so far-reaching that “Deep Learning in every piece of software” will be a reality within this decade.

We’re working very hard to empower developers with AI and Deep Learning, so that they can build smarter products and solve some of the most challenging computing tasks. By improving our algorithms and infrastructure, collaborating closely with partners such as NVIDIA and OpenAI, and harnessing the power of GPU-accelerated systems, we’re making Microsoft Azure the fastest, most versatile AI platform: a truly intelligent cloud.

Production-Ready Deep Learning Toolkit for Anyone

The Microsoft Cognitive Toolkit (formerly CNTK) is our open-source, cross-platform toolkit for training and evaluating deep neural networks. The Cognitive Toolkit expresses arbitrary neural networks by composing simple building blocks into complex computational networks, and it supports the major network types, including feed-forward, convolutional and recurrent networks. It delivers state-of-the-art accuracy and efficiency and scales to multi-GPU, multi-server environments. According to both internal and external benchmarks, the Cognitive Toolkit continues to outperform other Deep Learning frameworks in most tests, and the latest version is faster than previous releases, especially on very large data sets and on NVIDIA Pascal GPUs. That holds for single-GPU performance, but what really matters is that the Cognitive Toolkit can already scale up to a very large number of GPUs. In the latest release, we’ve extended the Cognitive Toolkit to natively support Python in addition to C++. The Cognitive Toolkit also now allows developers to use reinforcement learning to train their models. Finally, the Cognitive Toolkit isn’t bound to the cloud in any way: you can train models in the cloud and run them on premises or with other hosting providers. Our goal is to empower anyone to take advantage of this powerful technology.
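To make the idea of composing simple building blocks into a network concrete, here is a minimal plain-Python sketch using only the standard library. It is an analogy, not the Cognitive Toolkit API: the helpers `dense`, `relu`, `sigmoid` and `sequential` are hypothetical names chosen for illustration, and the weights are arbitrary.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Layer = Callable[[Vector], Vector]  # a building block maps a vector to a vector

def dense(weights: Sequence[Sequence[float]], bias: Sequence[float]) -> Layer:
    """Hypothetical fully connected block: y = W x + b."""
    def apply(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return apply

def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]

def sigmoid(x: Vector) -> Vector:
    return [1.0 / (1.0 + math.exp(-v)) for v in x]

def sequential(*layers: Layer) -> Layer:
    """Compose building blocks left to right into a single network function."""
    def apply(x: Vector) -> Vector:
        for layer in layers:
            x = layer(x)
        return x
    return apply

# A tiny 2-2-1 network assembled from the blocks above (weights are arbitrary).
net = sequential(
    dense([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0]),
    relu,
    dense([[1.0, 1.0]], [-1.0]),
    sigmoid,
)
print(net([2.0, 1.0]))  # a single value close to 0.818
```

Because every block is just a function from vectors to vectors, a different architecture is simply a different composition; real toolkits add automatic differentiation and GPU execution on top of this idea.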

To help you get up to speed on the Toolkit quickly, we’ve published Azure Notebooks with numerous tutorials, and we’ve assembled a DNN Model Gallery with dozens of code samples, recipes and tutorials covering scenarios that use a variety of data: image, numeric, speech and text.

What Others Are Saying

In the paper “Benchmarking State-of-the-Art Deep Learning Software Tools,” published in September 2016, academic researchers ran a comparative study of state-of-the-art GPU-accelerated deep learning software tools, including Caffe, Cognitive Toolkit (CNTK), TensorFlow, and Torch. They benchmarked the running performance of these tools with three popular types of neural networks on two CPU platforms and three GPU platforms. Our Cognitive Toolkit outperformed the other deep learning toolkits on nearly every workload.

NVIDIA has also recently run a benchmark comparing the popular Deep Learning toolkits on its latest hardware. The results show that the Cognitive Toolkit trains and evaluates deep learning algorithms faster than other available toolkits, scaling efficiently in a range of environments (from a CPU, to GPUs, to multiple machines) while maintaining accuracy. Specifically, as presented at the SuperComputing’16 conference, it is 1.7x faster than our previous release and 3x faster than TensorFlow on Pascal GPUs.

These benchmarking results serve two audiences. First, end users of deep learning software tools can use them as a guide to selecting appropriate hardware platforms and software tools. Second, for developers of deep learning software tools, the in-depth analysis points out possible directions for further performance optimization.

Real-world Deep Learning Workloads

We at Microsoft use Deep Learning and the Cognitive Toolkit in many of our internal services, ranging from digital agents to core infrastructure in Azure.

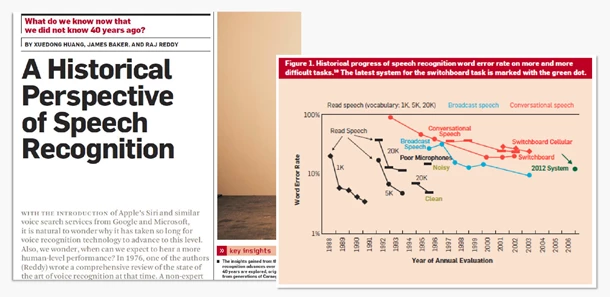

1. Agents (Cortana): Cortana is a digital agent that knows who you are and knows your work and life preferences across all your devices. Cortana has more than 133 million users and has intelligently answered more than 12 billion questions. From speech recognition to computer vision, Cortana’s capabilities are powered by Deep Learning and the Cognitive Toolkit. We recently made a major breakthrough in speech recognition, creating a technology that recognizes the words in a conversation and makes the same number of errors as professional transcriptionists, or fewer. The researchers reported a word error rate (WER) of 5.9 percent, down from 6.3 percent, the lowest error rate ever recorded on the industry-standard Switchboard speech recognition task. Reaching human parity using Deep Learning is a truly historic achievement.
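Word error rate is the metric behind these numbers: the minimum number of word substitutions (S), deletions (D) and insertions (I) needed to turn the recognizer's output into the reference transcript, divided by the number of reference words N, so WER = (S + D + I) / N, and 5.9 percent means roughly 5.9 errors per 100 reference words. The sketch below computes it with a word-level edit distance; the function name and example sentences are illustrative, and real scoring setups typically also normalize text (casing, punctuation) before comparing.

```python
from typing import List

def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed as a word-level Levenshtein (edit) distance."""
    ref: List[str] = reference.split()
    hyp: List[str] = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # dist[i][j] = minimum number of edits turning ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost == 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)

# One deletion ("a") and one insertion ("the") against 7 reference words.
print(word_error_rate("i would like a cup of tea", "i would like cup of the tea"))  # 0.2857142857142857
```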

Our approach to image recognition also placed first in several major categories of the ImageNet and Microsoft Common Objects in Context (COCO) challenges. The DNNs built with our tools won first place in all three categories we competed in: classification, localization and detection. The system won by a strong margin because we were able to accurately train extremely deep neural nets, 152 layers (far more than in the past), using a new “residual learning” principle. Residual learning reformulates the learning procedure and redirects the information flow in deep neural networks: shortcut connections add each block’s input to its output, so the block’s layers only need to learn a residual correction. That helped solve the accuracy problem that has traditionally dogged attempts to build extremely deep neural networks.
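The core of residual learning fits in a few lines. The plain-Python sketch below implements a simplified residual block, y = F(x) + x with F(x) = W2 · relu(W1 · x); it is an illustration under stated assumptions, not our production network (the published architecture also uses convolutions, batch normalization and a ReLU after the addition). The helper names `matvec`, `residual_block` and `deep_residual_net` are hypothetical. It shows why depth stops being a liability: when a block's weights contribute nothing, the shortcut still passes the signal through unchanged.

```python
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = Sequence[Sequence[float]]

def matvec(w: Matrix, x: Vector) -> Vector:
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]

def residual_block(x: Vector, w1: Matrix, w2: Matrix) -> Vector:
    """Simplified residual block: y = F(x) + x, with F(x) = W2 . relu(W1 . x).
    The shortcut adds the input back, so the weights only learn the residual F."""
    residual = matvec(w2, relu(matvec(w1, x)))
    return [r + xi for r, xi in zip(residual, x)]

def deep_residual_net(x: Vector, blocks: Sequence[Tuple[Matrix, Matrix]]) -> Vector:
    for w1, w2 in blocks:
        x = residual_block(x, w1, w2)
    return x

zeros = [[0.0, 0.0], [0.0, 0.0]]
# 50 stacked blocks whose F(x) is zero: the input passes through unchanged.
print(deep_residual_net([0.3, -1.2], [(zeros, zeros)] * 50))  # [0.3, -1.2]
```

In a plain (non-residual) stack, each extra layer must learn to preserve useful information on its own; with shortcuts, preserving it is the default and the layers only learn what to add.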

2. Applications: Our applications, including Office 365, Outlook, PowerPoint, Word and Dynamics 365, can use deep learning to provide new customer experiences. One excellent example of a deep learning application is the bot used by Microsoft Customer Support and Services. Using Deep Neural Nets and the Cognitive Toolkit, it can understand the problem a customer is asking about and recommend the best solution. The bot provides a quick self-service experience for many common customer problems and lets our technical staff focus on the harder, more challenging customer issues.

Another example of an application using Deep Learning is the Connected Drone application built for powerline inspection by our customer eSmart Systems (to see the Connected Drone in action, please watch this video). eSmart Systems began developing the Connected Drone out of a strong conviction that drones combined with cloud intelligence could bring great efficiencies to the power industry. The objective of the Connected Drone is to support and automate the inspection and monitoring of power grid infrastructure, replacing the expensive, risky and extremely time-consuming inspections currently performed by ground crews and helicopters. To do this, eSmart uses Deep Learning to analyze video feeds streamed from the drones. Their analytics software recognizes individual objects, such as insulators on power poles, and links the new information directly to the component registry, so that inspectors can quickly become aware of potential problems. eSmart applies a range of deep learning technologies to analyze data from the Connected Drone, from the very deep Faster R-CNN to Single Shot MultiBox Detectors (SSD) and more.
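Object detectors such as Faster R-CNN and SSD typically produce many overlapping candidate boxes for the same object (for example, one insulator) and prune them with non-maximum suppression (NMS) based on intersection over union (IoU). The plain-Python sketch below shows greedy NMS; it is a generic illustration, not eSmart's pipeline, and the 0.5 overlap threshold is an assumed, commonly used default.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1 and y2 > y1

def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes: Sequence[Box], scores: Sequence[float],
                        threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes from highest to lowest score and keep a box only if
    it does not overlap any already-kept box by more than `threshold` IoU."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= threshold for k in keep):
            keep.append(i)
    return keep

# Two near-duplicate detections of one object plus a separate object.
boxes = [(0.0, 0.0, 10.0, 10.0), (1.0, 1.0, 11.0, 11.0), (20.0, 20.0, 30.0, 30.0)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```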

3. Cloud Services (Cortana Intelligence Suite): On Azure, we offer the Cortana Intelligence Suite, a suite for Machine Learning and Advanced Analytics that includes Cognitive Services (Vision, Speech, Language, Knowledge, Search, etc.), Bot Framework, Azure Machine Learning, Azure Data Lake, Azure SQL Data Warehouse and Power BI. You can use these services along with the Cognitive Toolkit or any other deep learning framework of your choice to deploy intelligent applications. For instance, you can now massively parallelize scoring with a pre-trained DNN model on an HDInsight Apache Spark cluster in Azure. We are seeing a growing number of scenarios that involve scoring pre-trained DNNs on large numbers of images, such as our customer Liebherr, which runs DNNs to visually recognize objects inside a refrigerator. Developers can implement such a processing architecture in just a few steps (see instructions here).

A typical large-scale image scoring scenario may require very high I/O throughput and/or large file storage capacity, for which Azure Data Lake Store (ADLS) provides high-performance, scalable analytical storage. ADLS also applies the data schema on read, so users don’t need to worry about the schema until the data is needed. From the user’s perspective, ADLS functions like any other HDFS storage account through the supplied HDFS connector. Training can take place on an Azure N-Series NC24 GPU-enabled virtual machine, or using recipes from Azure Batch Shipyard, which allows training our DNNs with bare-metal GPU hardware acceleration in the public cloud using as many as four NVIDIA Tesla K80 GPUs. For scoring, you can use an HDInsight Spark cluster or Azure Data Lake Analytics to massively parallelize the scoring of a large collection of images with the rxExec function in Microsoft R Server (MRS), which distributes the workload across the worker nodes. The scoring workload is orchestrated from a single instance of MRS, and each worker node can read and write data to ADLS independently, in parallel.
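The orchestration pattern described above (split the image collection into partitions, let each worker score its partition independently, then collect the results) can be sketched on a single machine. This is not rxExec, Spark or ADLS: it uses Python's `concurrent.futures.ThreadPoolExecutor` as a stand-in for worker nodes, and `score_image` is a hypothetical placeholder for a pre-trained DNN that simply labels an image by mean brightness.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Sequence, Tuple

Image = Tuple[str, Sequence[float]]  # (path, pixel values)

def score_image(pixels: Sequence[float]) -> str:
    """Hypothetical stand-in for a pre-trained DNN: labels an image by mean brightness."""
    return "bright" if sum(pixels) / len(pixels) >= 0.5 else "dark"

def score_partition(part: Sequence[Image]) -> Dict[str, str]:
    # Each worker scores its own partition independently; on a cluster it would also
    # read its inputs from, and write its results to, shared storage in parallel.
    return {path: score_image(pixels) for path, pixels in part}

def partition(items: Sequence[Image], n: int) -> List[List[Image]]:
    """Split the workload round-robin into n roughly equal partitions."""
    return [list(items[i::n]) for i in range(n)]

def score_in_parallel(images: Sequence[Image], workers: int = 4) -> Dict[str, str]:
    """A single orchestrator fans partitions out to workers and merges their results."""
    results: Dict[str, str] = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for part_result in pool.map(score_partition, partition(images, workers)):
            results.update(part_result)
    return results

images = [(f"img_{i}.png", [i / 10.0] * 4) for i in range(10)]
labels = score_in_parallel(images)
print(labels["img_2.png"], labels["img_7.png"])  # dark bright
```

Threads are used only to keep the sketch self-contained; CPU-bound scoring at scale would run in separate processes or, as described above, on separate worker nodes.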

SQL Server, our premier database engine, is “becoming deep” as well. This is possible for the first time because R and ML are now built into SQL Server. By pushing deep learning models inside SQL Server, customers get throughput, parallelism, security, reliability, compliance certifications and manageability, all in one. It’s a big win for data scientists and developers: you don’t have to separately build the management layer for operational deployment of ML models. Furthermore, just as data in databases can be shared across multiple applications, you can now share deep learning models. Models and intelligence become “yet another type of data”, managed by SQL Server 2016. With these capabilities, developers can build a new breed of applications that marry the latest advances in database transaction processing with deep learning.
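The idea that a model is “yet another type of data” can be illustrated with any database that lets you call code from a query. The sketch below uses SQLite through Python's `sqlite3` module as an analogy; it does not reproduce SQL Server's in-database R mechanism. The model is a hypothetical logistic-regression stand-in for a DNN, stored as a JSON row next to the data it scores, and the table and model names are illustrative.

```python
import json
import math
import sqlite3

# Hypothetical stand-in for a trained model: logistic-regression weights serialized as JSON.
MODEL = {"weights": [2.0, -1.0], "bias": -0.5}

def score(model_json: str, x1: float, x2: float) -> float:
    """Deserialize the stored model and return a probability for one row."""
    m = json.loads(model_json)
    z = m["weights"][0] * x1 + m["weights"][1] * x2 + m["bias"]
    return 1.0 / (1.0 + math.exp(-z))

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE models (name TEXT PRIMARY KEY, body TEXT)")
conn.execute("CREATE TABLE readings (id INTEGER PRIMARY KEY, x1 REAL, x2 REAL)")
# The model is stored as an ordinary row, next to the data it will score.
conn.execute("INSERT INTO models VALUES (?, ?)", ("demo_model", json.dumps(MODEL)))
conn.executemany("INSERT INTO readings (x1, x2) VALUES (?, ?)", [(1.0, 0.5), (0.0, 1.0)])

# Register the scoring function so the query itself can apply the stored model.
conn.create_function("score", 3, score)
rows = conn.execute(
    "SELECT r.id, score(m.body, r.x1, r.x2) FROM readings AS r, models AS m "
    "WHERE m.name = 'demo_model' ORDER BY r.id"
).fetchall()
print(rows)
```

Because the model lives in a table, any application with access to the database can share it, and swapping in a new version is an ordinary `UPDATE`.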

4. Infrastructure (Azure): Deep Learning requires a new breed of high-performance infrastructure that can support the computationally intensive nature of deep learning training. Azure now enables these scenarios with its N-Series virtual machines, powered by NVIDIA Tesla K80 GPUs, which are best in class for single- and double-precision workloads in the public cloud today. These GPUs are exposed via a hardware pass-through mechanism called Discrete Device Assignment, which allows us to provide near bare-metal performance. Additionally, as data for these workloads grows, data scientists need to distribute training not just across multiple GPUs in a single server, but across GPUs on many nodes. To enable distributed learning across tens or hundreds of GPUs, Azure has invested in high-end networking infrastructure for the N-Series using a Mellanox InfiniBand fabric, which allows high-bandwidth communication between VMs with latency under 2 microseconds. This networking capability allows libraries such as Microsoft’s own Cognitive Toolkit (CNTK) to use MPI for communication between nodes and to efficiently train deeper networks with great performance.
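Why does inter-node bandwidth and latency matter so much? In synchronous data-parallel training, every worker computes gradients on its own shard of the data, and all workers must then average those gradients (an MPI-style allreduce) before every update. The plain-Python sketch below simulates that loop for a one-parameter linear model; it is a generic illustration, not the Cognitive Toolkit's distributed training algorithm, and `allreduce_mean` is a hypothetical stand-in for the network collective.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[float, float]  # (x, y)

def local_gradient(w: float, shard: Sequence[Sample]) -> float:
    """Gradient of the mean squared error (1/n) * sum((w*x - y)^2) w.r.t. w on one shard."""
    return sum(2.0 * (w * x - y) * x for x, y in shard) / len(shard)

def allreduce_mean(values: Sequence[float]) -> float:
    """Stand-in for an MPI allreduce: every worker ends up with the same average."""
    return sum(values) / len(values)

def data_parallel_sgd(data: Sequence[Sample], workers: int, lr: float, steps: int) -> float:
    shards: List[List[Sample]] = [list(data[i::workers]) for i in range(workers)]
    w = 0.0  # every worker starts from the same replica of the model
    for _ in range(steps):
        grads = [local_gradient(w, shard) for shard in shards]  # parallel on real hardware
        w -= lr * allreduce_mean(grads)  # the same update is applied on every replica
    return w

data = [(float(x), 3.0 * float(x)) for x in range(1, 9)]  # the true slope is 3
w = data_parallel_sgd(data, workers=4, lr=0.01, steps=200)
print(round(w, 4))  # 3.0
```

With equal-sized shards, the averaged gradient equals the full-batch gradient, so the result matches single-worker training; the cost is one allreduce per step, which is exactly the communication that a low-latency, high-bandwidth fabric speeds up.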

We are also working with NVIDIA on a best-in-class roadmap for Azure, with the current N-Series as its first iteration. These virtual machines are currently in preview, and we recently announced that the offering will become generally available on December 1.

It is easy to get started with deep learning on Azure. The Data Science Virtual Machine (DSVM) is available in the Azure Marketplace and comes pre-loaded with a range of deep learning frameworks and tools for Linux and Windows. To easily run many training jobs in parallel, or to launch a distributed job across more than one server, Azure Batch Shipyard templates are available for the most popular frameworks. Shipyard takes care of configuring the GPU and InfiniBand drivers and uses Docker containers to set up your software environment.

Lastly, a team of our engineers and researchers has created a system that uses a reprogrammable computer chip called a field programmable gate array, or FPGA, to accelerate Bing and Azure. Using FPGAs, we can now write Deep Learning algorithms directly onto the hardware instead of relying on potentially less efficient software as the middleman. What’s more, an FPGA can be reprogrammed at a moment’s notice to respond to new advances in AI and Deep Learning or to meet another type of unexpected need in a datacenter. Traditionally, engineers might wait two years or longer for hardware with different specifications to be designed and deployed. This moonshot project has succeeded, and we are now bringing it to our customers.

Join Us in Shaping the Future of AI

Our focus on innovation in Deep Learning is across the entire stack of infrastructure, development tools, PaaS services and end user applications. Here are a few of the benefits our products bring:

- Greater versatility: The Cognitive Toolkit lets customers use one framework to train models on premises with the NVIDIA DGX-1 or with NVIDIA GPU-based systems, and then run those models in the cloud on Azure. This scalable, hybrid approach lets enterprises rapidly prototype and deploy intelligent features.

- Faster performance: Compared to running on CPUs, the GPU-accelerated Cognitive Toolkit performs deep learning training and inference much faster on NVIDIA GPUs, both in Azure N-Series servers and on premises. For example, when running the Cognitive Toolkit, an NVIDIA DGX-1 with Pascal and NVLink interconnect technology is 170x faster than CPU servers.

- Wider availability: Azure N-Series virtual machines powered by NVIDIA GPUs are currently in preview for Azure customers and will be generally available in December. Azure GPUs can be used to accelerate both training and model evaluation. With thousands of customers already part of the preview, businesses of all sizes are already running workloads on Tesla GPUs in Azure N-Series VMs.

- Native integration with the entire data stack: We strongly believe in pushing intelligence close to where the data lives. A few years ago, running Deep Learning inside a database engine or a Big Data engine might have seemed like science fiction, but it has now become real. You can run deep learning models on massive amounts of data, such as images, videos, speech and text, and you can do it in bulk. This is the sort of capability that Azure Data Lake, HDInsight and SQL Server bring you. You can also now join the results of deep learning with any other type of data you have and perform powerful analytics and intelligence over the combination (which we call “Big Cognition”). It’s not just extracting one piece of cognitive information at a time, but joining and integrating all the extracted cognitive data with other types of data, so you can create seemingly magical “know-it-all” cognitive applications.
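At its simplest, “Big Cognition” is a join: the output of a deep learning model becomes rows keyed by an identifier, and those rows are combined with ordinary business data. The plain-Python sketch below shows the shape of that operation; the detection rows, asset table, confidence threshold and function name are all hypothetical, and at scale the same join would run in an engine such as those named above rather than in Python dictionaries.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical output of an image model: one row per detected object.
detections: List[Tuple[str, str, float]] = [
    ("pole_01", "insulator", 0.97),
    ("pole_01", "crack", 0.88),
    ("pole_02", "insulator", 0.95),
    ("pole_03", "crack", 0.41),
]
# Hypothetical business data keyed by the same asset id.
asset_site: Dict[str, str] = {"pole_01": "north", "pole_02": "north", "pole_03": "south"}

def confident_labels_by_site(min_conf: float) -> Dict[str, List[str]]:
    """Inner-join detections to assets on the id, filter by confidence, group by site."""
    by_site: Dict[str, List[str]] = defaultdict(list)
    for asset_id, label, conf in detections:
        if conf >= min_conf and asset_id in asset_site:
            by_site[asset_site[asset_id]].append(label)
    return dict(by_site)

print(confident_labels_by_site(0.8))  # {'north': ['insulator', 'crack', 'insulator']}
```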

I invite all developers to join us on this exciting journey into AI applications.