The user interface design process involves a lot of creativity, and it often starts on a whiteboard where designers share ideas. Once a design is drawn, it is usually captured in a photograph and manually translated into a working HTML wireframe that can be tried out in a web browser. This takes effort and slows down the design process. What if a design could be refactored on the whiteboard and the browser reflected the changes instantly? By the end of the session, there would be a resulting prototype validated by the designer, developer, and customer. Introducing Sketch2Code, a web-based solution that uses AI to transform a picture of a handwritten user interface design into valid HTML markup.

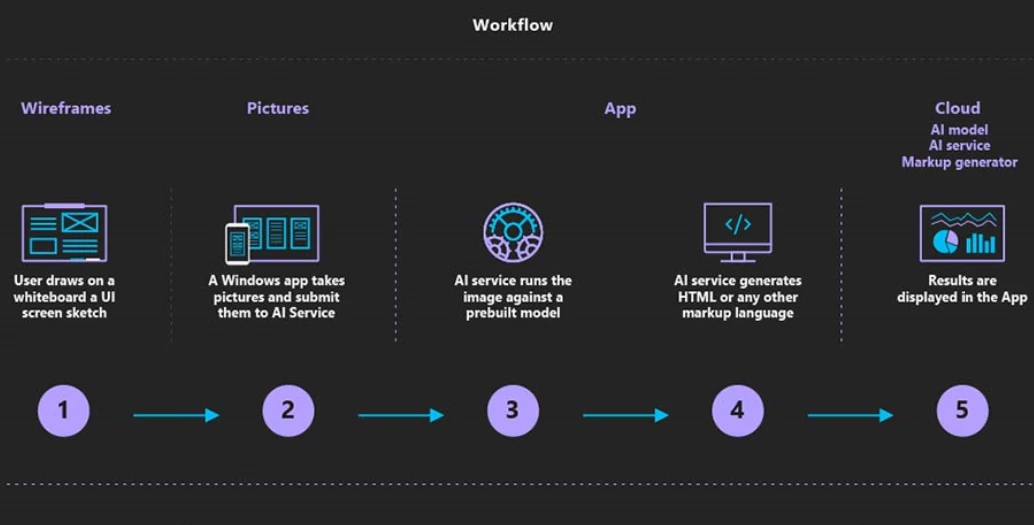

Let’s look at the process of transforming a handwritten image into HTML with Sketch2Code in more detail.

- First, the user uploads an image through the website.

- A custom vision model predicts which HTML elements are present in the image and where they are located.

- A handwritten text recognition service reads the text inside the predicted elements.

- A layout algorithm uses the spatial information from the bounding boxes of all predicted elements to generate a grid structure that accommodates them all.

- An HTML generation engine combines all of this information to generate HTML markup that reflects the result.
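The layout and HTML generation steps can be illustrated with a small sketch. This is not Sketch2Code's actual algorithm; it assumes a hypothetical `Element` record holding each predicted element type, its recognized text, and its bounding box. Boxes are grouped into rows when they overlap vertically, ordered left to right within each row, and emitted into Bootstrap-style `row`/`col-*` grid classes (an assumption about the target markup). The element names and templates are also illustrative.

```python
from dataclasses import dataclass
from html import escape


@dataclass
class Element:
    """Hypothetical shape of one prediction after text recognition."""
    tag: str    # predicted element type, e.g. "button", "textbox", "image", "label"
    text: str   # text read by the handwriting recognition step
    x: float    # left edge of the bounding box (pixels)
    y: float    # top edge
    w: float    # width
    h: float    # height


def group_into_rows(elements: list[Element]) -> list[list[Element]]:
    """Group boxes into rows: a box joins the current row if it starts above the row's bottom edge."""
    rows: list[list[Element]] = []
    row_bottom = 0.0
    for el in sorted(elements, key=lambda e: e.y):
        if rows and el.y < row_bottom:  # vertically overlaps the current row
            rows[-1].append(el)
            row_bottom = max(row_bottom, el.y + el.h)
        else:  # starts a new row below the previous one
            rows.append([el])
            row_bottom = el.y + el.h
    # Within each row, order elements from left to right.
    return [sorted(row, key=lambda e: e.x) for row in rows]


# Illustrative markup per element type (assumed, not the project's templates).
TEMPLATES = {
    "button": '<button type="button">{text}</button>',
    "textbox": '<input type="text" placeholder="{text}">',
    "image": '<img alt="{text}" src="placeholder.png">',
    "label": "<span>{text}</span>",
}


def to_html(elements: list[Element]) -> str:
    """Render rows into a 12-column grid, splitting each row's width evenly."""
    parts = ['<div class="container">']
    for row in group_into_rows(elements):
        parts.append('  <div class="row">')
        width = max(1, 12 // len(row))
        for el in row:
            template = TEMPLATES.get(el.tag, "<div>{text}</div>")
            inner = template.format(text=escape(el.text))
            parts.append(f'    <div class="col-{width}">{inner}</div>')
        parts.append("  </div>")
    parts.append("</div>")
    return "\n".join(parts)


sketch = [
    Element("label", "Name", 10, 10, 80, 30),
    Element("textbox", "Your name", 120, 15, 200, 30),
    Element("button", "Submit", 10, 80, 100, 40),
]
print(to_html(sketch))
```

Here the label and text box overlap vertically and share a row split into two `col-6` cells, while the button starts below that row and gets its own `col-12` row. A production layout algorithm would also need to handle nested groups and uneven column widths, which this sketch ignores.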

Below is the application workflow:

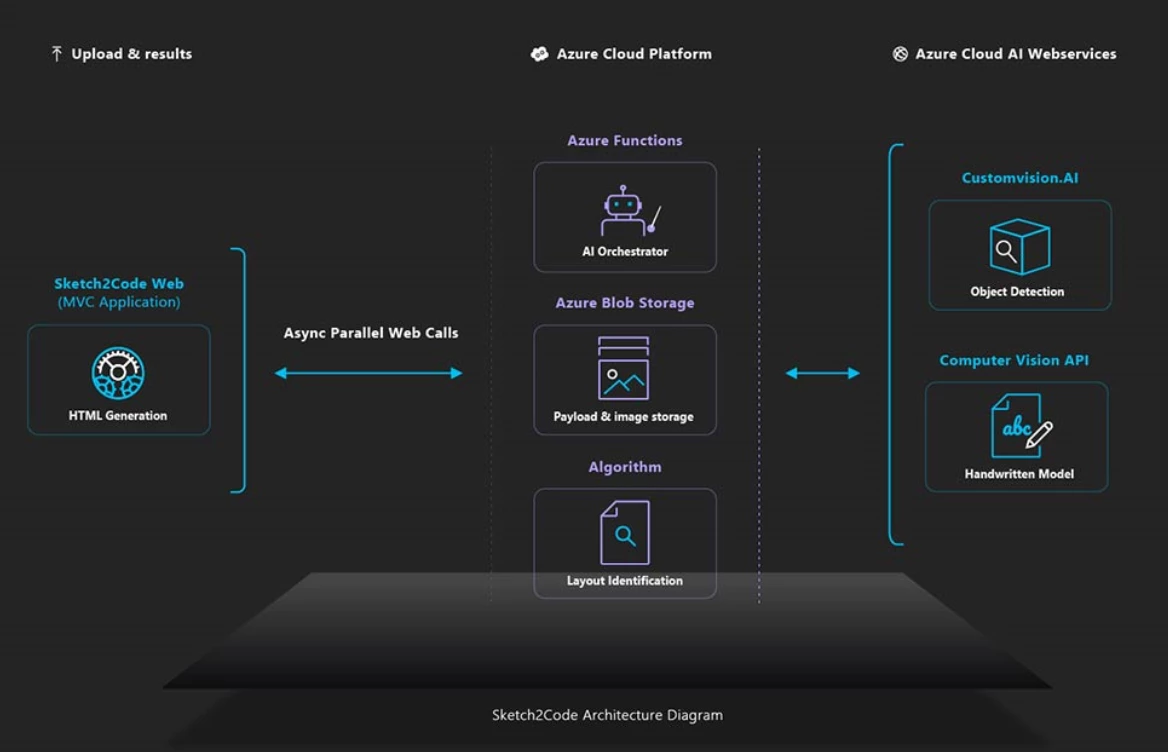

Sketch2Code uses the following components:

- A Microsoft Custom Vision model: This model has been trained with images of different handwritten designs, tagged with the most common HTML elements, such as buttons, text boxes, and images.

- A Microsoft Computer Vision service: Used to identify the text written inside each design element.

- An Azure Blob Storage account: The output of every step in the HTML generation process is stored, including the original image, the prediction results, and the layout grouping information.

- An Azure Function: Serves as the backend entry point that coordinates the generation process by interacting with all the services.

- An Azure website: The user front end, which enables uploading a new design and viewing the generated HTML results.

The above elements form the architecture as follows:

You can find the code, solution development process, and all other details on GitHub. Sketch2Code is developed in collaboration with Kabel and Spike Techniques.
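As a rough illustration of how a backend entry point like the Azure Function might coordinate the services and persist each intermediate result, here is a minimal sketch. It is not the project's code: the service calls are injected as plain Python callables, a dictionary stands in for Azure Blob Storage, and the function name `run_pipeline` and the blob naming scheme are hypothetical.

```python
import json
from typing import Callable

# Stand-in for Azure Blob Storage (assumption): maps a blob name to its bytes.
BlobStore = dict[str, bytes]


def run_pipeline(
    design_id: str,
    image: bytes,
    detect: Callable[[bytes], list[dict]],           # element detection (Custom Vision role)
    read_text: Callable[[bytes, dict], str],         # text recognition (Computer Vision role)
    layout: Callable[[list[dict]], list[list[dict]]],
    render: Callable[[list[list[dict]]], str],
    store: BlobStore,
) -> str:
    """Hypothetical coordinator: call each step in order and store every intermediate result."""
    store[f"{design_id}/original.png"] = image
    predictions = detect(image)  # element types and bounding boxes
    for p in predictions:
        p["text"] = read_text(image, p)  # adds recognized text to each prediction in place
    store[f"{design_id}/predictions.json"] = json.dumps(predictions).encode()
    rows = layout(predictions)  # grid grouping
    store[f"{design_id}/layout.json"] = json.dumps(rows).encode()
    html = render(rows)
    store[f"{design_id}/result.html"] = html.encode()
    return html


# Fake services so the sketch runs without any Azure resources.
def fake_detect(img: bytes) -> list[dict]:
    return [{"tag": "button", "box": [10, 80, 100, 40]}]


def fake_read(img: bytes, prediction: dict) -> str:
    return "Submit"


def fake_layout(preds: list[dict]) -> list[list[dict]]:
    return [preds]


def fake_render(rows: list[list[dict]]) -> str:
    return "".join(f"<button>{p['text']}</button>" for row in rows for p in row)


store: BlobStore = {}
html = run_pipeline("demo", b"\x89PNG", fake_detect, fake_read, fake_layout, fake_render, store)
print(html)
print(sorted(store))
```

Injecting the services as callables keeps the coordination logic testable without cloud dependencies. In a real deployment, each callable would wrap the corresponding Azure service, and `store` would be replaced by a Blob Storage client.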

We hope this post helps you get started with AI and motivates you to become an AI developer.

Tara