Azure Blob Storage is Microsoft’s massively scalable cloud object store. Blob Storage is well suited to storing unstructured data such as images, documents, and other file types. Read Introduction to object storage in Azure to learn more about the wide variety of scenarios it supports.

The data in Azure Blob Storage is always replicated to ensure durability and high availability. Azure Storage replication copies your data so that it is protected from planned and unplanned events, ranging from transient hardware failures and network or power outages to massive natural disasters. You can choose to replicate your data within the same data center, across availability zones within the same region, or across regions. Find more details on storage replication.

Although Blob Storage supports replication out of the box, it’s important to understand that replication does not protect against application errors: any problem at the application layer, such as an accidental overwrite or deletion, is also committed to the replicas that Azure Storage maintains. For this reason, it is important to maintain backups of blob data in Azure Storage.

Currently, Azure Blob Storage doesn’t offer an out-of-the-box solution for backing up block blobs. In this blog post, I will design a backup solution that performs weekly full and daily incremental backups of storage accounts containing block blobs, capturing create, replace, and delete operations. The post also walks through how to recover a storage account, should that be required.

The solution makes use of the following technologies to achieve this back-up functionality:

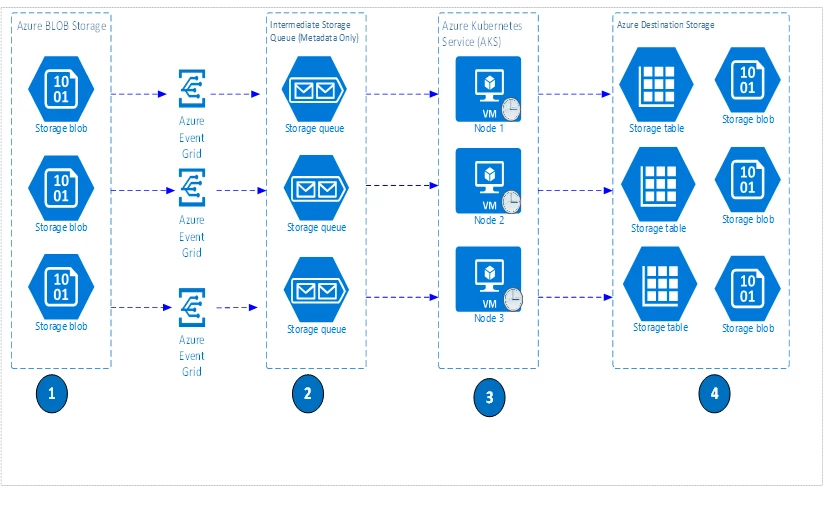

In our scenario, we will publish events to Azure Storage Queues to support daily incremental back-ups.

- AzCopy – AzCopy is a command-line utility for copying data to and from Microsoft Azure Blob, File, and Table storage using simple commands designed for optimal performance. You can copy data between a file system and a storage account, or between storage accounts. In our scenario, we use AzCopy for the full backup, copying the content from one storage account to another.

- Event Grid – Azure Storage events allow applications to react to the creation and deletion of blobs without complicated code or expensive and inefficient polling services. Instead, events are pushed through Azure Event Grid to subscribers such as Azure Functions, Azure Logic Apps, or Azure Storage queues.

- Event Grid extension – Used to deliver the storage events to Azure Queue storage. At the time of writing this blog, this feature is in preview. To use it, you must install the Event Grid extension for Azure CLI. You can install it with az extension add --name eventgrid.

- Docker container – Hosts the listener that reads the events from Azure Queue storage. Please note that the sample code provided with this blog is a .NET Core application; it can be hosted on a platform of your choice and has no dependency on Docker containers.

- Azure Table storage – Stores the event metadata for incremental backups, which is used when performing the restore. You can store this metadata in a database of your choice, such as Azure SQL or Cosmos DB, but changing the database will require code changes in the sample solution.

Introduction

Based on my experience in the field, I have noticed that most customers require full and incremental backups taken on specific schedules. Let’s say you have a requirement for weekly full and daily incremental backups. In the case of a disaster, you need to be able to restore the blobs using the backup sets.

High-level architecture/data flow

Here is the high-level architecture and data flow of the proposed solution to support incremental back-up.

![High-level architecture and data flow](https://azure.microsoft.com/en-us/blog/wp-content/uploads/2018/08/bccabfff-8945-4d15-b6af-1f8672690516.webp)

While copying data for an incremental backup from the source storage account to the destination storage account, the .NET Core-based listener performs the following steps:

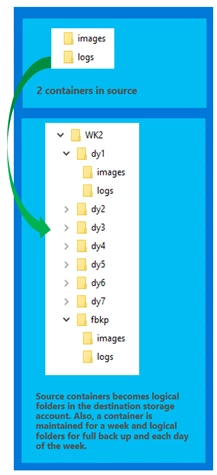

- Creates a new blob container in the destination storage account for every year, such as “2018”.

- Creates a logical subfolder for each week under the year container, such as “wk21”. If no files are created or deleted in wk21, no logical folder is created for that week. The week number is determined using CalendarWeekRule.FirstFullWeek.

- Creates a logical subfolder for each day of the week under the year and week folders, such as dy0, dy1, dy2. If no files are created or deleted on a given day, no logical folder is created for that day.

- While copying the files, the listener turns each source container name into a logical folder name in the destination storage account.

Example:

SSA1 (Source Storage Account) -> images (Container) -> Image1.jpg

Is copied to:

DSA1 (Destination Storage Account) -> 2018 (Container) -> wk2 (Logical Folder) -> dy0 (Logical Folder) -> images (Logical Folder) -> Image1.jpg
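The folder layout described above can be sketched in Python. This is a minimal sketch, not the sample’s .NET code: it reproduces the week number that .NET’s Calendar.GetWeekOfYear returns for CalendarWeekRule.FirstFullWeek, and it assumes Sunday as the first day of the week, dyN numbered like .NET’s DayOfWeek (Sunday = 0), and the event’s calendar year as the container name. The function names are hypothetical.

```python
from datetime import date
from typing import Tuple

SUNDAY = 0  # .NET DayOfWeek numbering: Sunday = 0 ... Saturday = 6 (assumed first day of week)


def dotnet_day_of_week(d: date) -> int:
    """Convert Python's weekday() (Monday = 0) to .NET DayOfWeek (Sunday = 0)."""
    return (d.weekday() + 1) % 7


def week_of_year_first_full_week(d: date, first_day_of_week: int = SUNDAY) -> int:
    """Week number as .NET GetWeekOfYear computes it for CalendarWeekRule.FirstFullWeek."""
    day_of_year = d.timetuple().tm_yday - 1  # 0-based, so 1 January is day 0
    # Days from 1 January to the first occurrence of first_day_of_week.
    offset = (first_day_of_week - dotnet_day_of_week(date(d.year, 1, 1))) % 7
    day = day_of_year - offset
    if day >= 0:
        return day // 7 + 1
    # Days before the first full week belong to the last week of the previous year.
    return week_of_year_first_full_week(date(d.year - 1, 12, 31), first_day_of_week)


def incremental_backup_location(event_date: date, source_container: str,
                                blob_name: str) -> Tuple[str, str]:
    """Return (destination container, destination blob name) for a backed-up blob."""
    container = str(event_date.year)  # assumption: calendar year of the event
    week = week_of_year_first_full_week(event_date)
    day = dotnet_day_of_week(event_date)
    # The source container becomes a logical folder under the week and day folders.
    return container, f"wk{week}/dy{day}/{source_container}/{blob_name}"
```

For example, `incremental_backup_location(date(2018, 5, 20), "images", "Image1.jpg")` returns `("2018", "wk20/dy0/images/Image1.jpg")`. Under these assumptions, days before the first full week (such as Monday, 1 January 2018) get week 53 of the previous year while still landing in the current year’s container, so it is worth checking how the sample handles that edge case.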

Here are the high-level steps to configure incremental backup:

- Create a new storage account (destination) where you want to store the backup.

- Create an Event Grid subscription on the storage account (source) that delivers the create/replace and delete events to an Azure Storage queue. The command to set up the subscription is provided on the samples site.

- Create a table in Azure Table storage where the .NET listener will store the Event Grid events.

- Configure the .NET listener (backup.utility) to start taking the incremental backup. You can run as many instances of this listener as needed, based on the load on your storage account. Details on the listener configuration are provided on the samples site.
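The listener’s core job, turning a queued Blob Storage event into a row for the restore log, can be sketched as follows. This is a hypothetical Python sketch, not the sample’s .NET code: it assumes the queue message has already been decoded into a single Event Grid event in JSON, and the record layout (one partition per UTC day, the event id as the row key) is an illustrative choice. The `id`, `subject`, `eventType`, `eventTime`, and `data.url` fields come from the Event Grid schema for Blob Storage events.

```python
import json
from dataclasses import dataclass

SUBJECT_PREFIX = "/blobServices/default/containers/"


@dataclass(frozen=True)
class BackupEventRecord:
    partition_key: str  # hypothetical layout: one partition per UTC day, e.g. "2018-08-01"
    row_key: str        # hypothetical layout: the Event Grid event id keeps rows unique
    event_time: str     # kept as a property so the restore can order events by time
    event_type: str     # e.g. "Microsoft.Storage.BlobCreated" or "Microsoft.Storage.BlobDeleted"
    container: str
    blob_name: str
    blob_url: str


def to_backup_record(message_body: str) -> BackupEventRecord:
    """Parse one decoded queue message (a single Event Grid event) into a table record."""
    event = json.loads(message_body)
    subject = event["subject"]
    if not subject.startswith(SUBJECT_PREFIX):
        raise ValueError(f"not a blob event subject: {subject}")
    # Subject format: /blobServices/default/containers/<container>/blobs/<blob name>
    container, sep, blob_name = subject[len(SUBJECT_PREFIX):].partition("/blobs/")
    if not sep or not blob_name:
        raise ValueError(f"missing blob name in subject: {subject}")
    event_time = event["eventTime"]
    return BackupEventRecord(
        partition_key=event_time[:10],  # ISO 8601 date part, e.g. "2018-08-01"
        row_key=event["id"],
        event_time=event_time,
        event_type=event["eventType"],
        container=container,
        blob_name=blob_name,
        blob_url=event["data"]["url"],
    )
```

Partitioning by day lets the restore query a date range partition by partition; ordering within the range is left to the restore step, which sorts on `event_time`.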

Here are the high-level steps to configure full backup:

- Schedule AzCopy to run at the start of each week, i.e., Sunday at 12:00 AM, to copy all data from the source storage account to the destination storage account.

- Use AzCopy to copy the data into a logical folder such as “fbkp” under the corresponding year container and week folder in the destination storage account.

- You can schedule AzCopy on a VM, as a Jenkins job, etc., depending on your technology landscape.
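If the scheduler you use needs an explicit next-run time rather than a recurring rule, the start of the week can be computed as below. This is a small, hypothetical Python helper; it assumes naive local times and reads “Sunday 12:00 AM” as midnight at the start of Sunday.

```python
from datetime import datetime, timedelta


def next_full_backup_run(now: datetime) -> datetime:
    """Return the next Sunday 00:00 strictly after `now` (naive local time assumed)."""
    midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
    days_ahead = (6 - now.weekday()) % 7  # Python weekday(): Monday = 0 ... Sunday = 6
    candidate = midnight + timedelta(days=days_ahead)
    if candidate <= now:
        # Already at or past this Sunday's midnight: use next week's.
        candidate += timedelta(days=7)
    return candidate
```

For example, from Wednesday, 1 August 2018 at 10:30 the next run is Sunday, 5 August 2018 at 00:00.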

In case of a disaster, the solution provides an option to restore the storage account by choosing one weekly full backup as a base and applying the changes from the incremental backup on top of it. This is only one of the possible options: you may instead restore by applying only the logs from the incremental backup, but that can take longer, depending on the length of the restore period.
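Choosing the base for a restore is a simple selection over the available weekly full backups. The Python sketch below is hypothetical: it takes the start times of the completed full backups and a restore point, picks the latest full backup at or before that point, and falls back to replaying only the incremental log when no full backup qualifies.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, Optional


@dataclass(frozen=True)
class RestorePlan:
    base_full_backup: Optional[datetime]  # None: no base, replay the incremental log only
    replay_from: Optional[datetime]       # None: replay from the first recorded event
    replay_to: datetime


def plan_restore(full_backup_starts: Iterable[datetime], restore_point: datetime) -> RestorePlan:
    """Pick the latest full backup started at or before restore_point."""
    candidates = [t for t in full_backup_starts if t <= restore_point]
    if not candidates:
        return RestorePlan(base_full_backup=None, replay_from=None, replay_to=restore_point)
    base = max(candidates)
    # Replay from the start of the full backup: AzCopy may run for hours, so changes
    # made during the copy are reapplied from the incremental log.
    return RestorePlan(base_full_backup=base, replay_from=base, replay_to=restore_point)
```

Starting the replay at the full backup’s start time rather than its end is a conservative assumption: a blob changed while AzCopy was running may or may not be in the full copy, and reapplying its logged events removes that uncertainty.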

Here are the high-level steps to perform a restore:

- Create a new storage account (destination) where the data needs to be restored.

- Use AzCopy to copy the data from the full backup folder “fbkp” to the destination storage account.

- Initiate the incremental restore process by providing the start date and end date to restore.utility. Details on the configuration are provided on the samples site.

For example, the restore process sequentially reads the records in table storage for the period 01/08/2018 to 01/10/2018 to perform the restore.

For each read record, the restore process adds, updates, or deletes the file in the destination storage account.
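The sequential replay can be sketched in Python with an in-memory dictionary standing in for the destination storage account. The record shape is hypothetical: each record carries the event time, the Event Grid event type, the blob path relative to the source account, and, for creates and replaces, the location of the copy in the incremental backup. A real implementation would issue copy and delete calls against Blob Storage instead of updating a dictionary.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, Iterable, Optional

BLOB_CREATED = "Microsoft.Storage.BlobCreated"
BLOB_DELETED = "Microsoft.Storage.BlobDeleted"


@dataclass(frozen=True)
class BackupRecord:
    event_time: datetime
    event_type: str
    blob_path: str                  # "<source container>/<blob name>"
    backup_location: Optional[str]  # where the incremental copy lives; None for deletes


def replay(records: Iterable[BackupRecord], destination: Dict[str, str],
           start: datetime, end: datetime) -> Dict[str, str]:
    """Apply backup records in time order; destination maps blob path -> restored copy."""
    for record in sorted(records, key=lambda r: r.event_time):
        if not start <= record.event_time <= end:
            continue  # outside the requested restore window
        if record.event_type == BLOB_CREATED:
            if record.backup_location is None:
                raise ValueError(f"create event without a backup copy: {record.blob_path}")
            # Create or replace: the latest backed-up copy wins.
            destination[record.blob_path] = record.backup_location
        elif record.event_type == BLOB_DELETED:
            # Deleting a blob that is already absent is a no-op.
            destination.pop(record.blob_path, None)
    return destination
```

Because records are applied strictly in time order, a blob that is created, replaced, and deleted within the window ends up absent, and one that is replaced several times ends up pointing at its last backed-up copy.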

Supported Artifacts

Find the source code and instructions to set up the backup solution.

Considerations/limitations

- Blob Storage events are available only in Blob storage accounts and General Purpose v2 storage accounts. Hence, the storage account configured for the backup must be either a Blob storage account or a General Purpose v2 account. Find out more in our Reacting to Blob Storage events documentation.

- Blob Storage events are raised only for create, replace, and delete operations. Hence, other modifications to blobs are not supported at this point in time, though support is expected eventually.

- If a user creates a file at T1 and deletes it at T10 before the backup listener has copied it, you won’t be able to restore that file from the backup. For these kinds of scenarios, you can enable soft delete on your storage account and either modify the solution to support restoring from soft delete or recover the missed files manually.

- Because the restore reads the logs sequentially, it can take a considerable amount of time to complete. The actual time can span hours or days, and the duration can be determined only by performing a test.

- AzCopy is used to perform the weekly full backup. Its run time depends on the data size and can span hours or days.

Conclusion

In this blog post, I’ve described a proof of concept for adding incremental backup support for Azure Blobs to a separate storage account. The necessary code samples, descriptions, and background for each step are provided so that you can create your own solution customized to your needs.