Last November we introduced Microsoft’s Project Olympus – our next-generation cloud hardware design and a new model for open source hardware development. Today, I’m excited to address the 2017 Open Compute Project (OCP) U.S. Summit to share how this first-of-its-kind open hardware development model has created a vibrant industry ecosystem for datacenter deployments across the globe, in both cloud and enterprise.

Since opening our first datacenter in 1989, Microsoft has developed one of the world’s largest cloud infrastructures, with servers hosted in over 100 datacenters worldwide. When we joined OCP in 2014, we shared the same server and datacenter designs that power our own Azure hyperscale cloud, so that organizations of all sizes could take advantage of innovations that improve the performance, efficiency, power consumption, and costs of datacenters across the industry. Today, 90% of the servers we procure are based on designs that we have contributed to OCP.

Over the past year, we collaborated with the OCP to introduce a new hardware development model under Project Olympus for community-based open collaboration. By contributing cutting-edge server hardware designs much earlier in the development cycle, Project Olympus has allowed the community to contribute to the ecosystem by downloading, modifying, and forking the hardware design, just like open source software. This has helped bootstrap a broad and diverse ecosystem for Project Olympus, making it the de facto open source cloud hardware design for the next generation of scale computing. For a short summary of Project Olympus, please see this video.

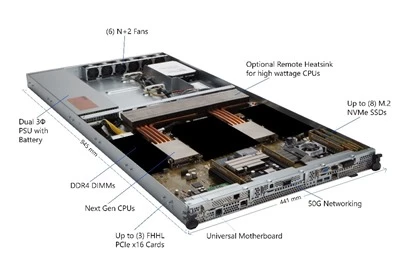

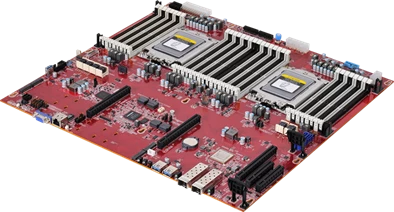

Today, we’re pleased to report that Project Olympus has attracted the latest in silicon innovation to address the explosive growth of cloud services and the computing power needed for advanced and emerging cloud workloads such as big data analytics, machine learning, and artificial intelligence (AI). Project Olympus is the first OCP server design to offer a broad choice of microprocessor options, all fully compliant with the Universal Motherboard specification, to address virtually any type of cloud computing workload.

We have collaborated closely with Intel to support Project Olympus with the next-generation Intel Xeon processors, codenamed Skylake; subsequent updates could include accelerators via Intel FPGA or Intel Nervana solutions.

AMD is bringing hardware innovation back into the server market and will be collaborating with Microsoft on Project Olympus support for its next-generation “Naples” processor, to meet the demands of high-performance datacenter workloads.

We have also been working on a long-term project with Qualcomm, Cavium, and others to advance ARM64 cloud servers compatible with Project Olympus. Learn more about Enabling Cloud Workloads through innovations in Silicon.

In addition to the multiple choices of microprocessors for core computation, there has also been tremendous momentum in developing the other building blocks of the Project Olympus ecosystem to support a wide variety of datacenter workloads.

Today, together with NVIDIA and Ingrasys, Microsoft is announcing a new industry-standard design to accelerate artificial intelligence in the next-generation cloud. The Project Olympus hyperscale GPU accelerator chassis for AI, also referred to as HGX-1, is designed to support eight of the latest “Pascal” generation NVIDIA GPUs and NVIDIA’s NVLink high-speed multi-GPU interconnect technology, and it provides high-bandwidth interconnectivity for up to 32 GPUs by connecting four HGX-1 chassis together. The HGX-1 AI accelerator provides extreme performance scalability to meet the demanding requirements of fast-growing machine learning workloads, and its unique design allows it to be easily adopted into existing datacenters around the world.

Our work with NVIDIA and Ingrasys is just one of numerous stand-out examples of how the OCP community has embraced the open source strategy of Project Olympus. We are pleased by the broad support from the industry partners that are now part of the Project Olympus ecosystem.

This is a significant moment as we usher in a new era of open source hardware development with the OCP community. We intend for Project Olympus to provide a blueprint for future hardware development and collaboration at cloud speed. You can learn more and view the specification for Microsoft’s Project Olympus at our OCP GitHub branch.