Since its conception in 2010 as a cloud-born database, Azure Cosmos DB has been carefully designed and engineered to exploit the three fundamental properties of the cloud:

- Global distribution by virtue of transparent multi-master replication.

- Elastic scalability of throughput and storage worldwide by virtue of horizontal partitioning.

- Fine-grained multi-tenancy by virtue of a highly resource-governed system stack, all the way from the database engine to the replication protocol.

Cosmos DB composes these three properties in a novel way to offer elastic scalability of both writes and reads all around the world, with guaranteed single-digit-millisecond latency at the 99th percentile and 99.999% high availability. The service transparently replicates your data and provides a single system image of your globally distributed Cosmos database with a choice of five well-defined consistency models (precisely specified using TLA+), while your users write to and read from local replicas anywhere in the world. Since its launch last year, the growth of the service has validated our design choices and the unique engineering tradeoffs we have made.

Blazing fast, globally scalable writes

As one of the foundational services of Azure, Cosmos DB runs in every Azure region by default. At the time of writing, Cosmos DB is operating across 50+ geographical regions; tens of thousands of customers have configured their Cosmos databases to be globally replicated across anywhere from 2 to 50+ regions.

While our customers have been using their Cosmos databases to span multiple regions, up until now they could designate only one of the regions for writes (and reads), with all other regions serving reads. After battle-testing the service by running Microsoft’s internal workloads for a few years, today we are thrilled to announce that you will be able to configure your Cosmos databases to have multiple write regions (also known as a “multi-master” configuration). This capability, in turn, will provide the following benefits to you:

- 99.999% write and read availability, all around the world – In addition to the 99.999% read availability, Cosmos DB now offers 99.999% write availability backed by financial SLAs.

- Elastic write and read scalability, all around the world – In addition to reads, you can now elastically scale writes, all around the world. The throughput that your application configures on a Cosmos DB container (or a database) is guaranteed to be delivered across all regions, backed by financial SLAs.

- Single-digit-millisecond write and read latencies at the 99th percentile, all around the world – In addition to the guaranteed single-digit-millisecond read latencies, Cosmos DB now offers <10 ms write latency at the 99th percentile, anywhere around the world, backed by financial SLAs.

- Multiple, well-defined consistency models – Cosmos DB’s replication protocol is designed to offer five well-defined, practical and intuitive consistency models to build correct globally distributed applications with ease. We have also made the high-level TLA+ specifications for the consistency models available.

- Unlimited endpoint scalability – Cosmos DB’s replication protocol is designed to scale across hundreds of datacenters and billions of edge devices – homogeneously. The architecture treats an Azure region and an edge device as equals – both are capable of hosting Cosmos DB replicas and participating as true peers in the multi-master replication protocol.

- Multi-master MongoDB, Cassandra, SQL, Gremlin and Tables – As a multi-model and multi-API database, Cosmos DB offers native wire-protocol-compatible support for SQL (Cosmos DB), Cassandra (CQL), MongoDB, Table Storage, and Gremlin APIs. With Cosmos DB, you can have a fully managed, secure, compliant, cost-effective, serverless database service for your MongoDB and Cassandra applications, again backed by the industry-leading, comprehensive SLAs. The capabilities listed above are now available for all the APIs Cosmos DB supports, including Cassandra, MongoDB, Gremlin, Table Storage and SQL. For instance, you can now have a multi-master, globally distributed MongoDB database or a Gremlin-accessible graph database, powered by Cosmos DB!

Decades of research + rigorous engineering = Cosmos DB

Last year, at the launch of Cosmos DB, we wrote a technical overview of Cosmos DB accompanied by a video interview with the Turing Award winner Dr. Leslie Lamport describing the technical foundations of Cosmos DB. Continuing with this tradition, here is the new video interview of Leslie describing the evolution of Cosmos DB’s architecture, application of TLA+ in the design of its novel replication protocol, and how Cosmos DB has married decades of distributed systems research from Paxos to epidemic protocols with its world-class engineering, to enable you to build truly cosmos scale apps.

This blog post dives a little bit deeper into Cosmos DB’s global distribution architecture including the new capability for enabling multiple write regions for your Cosmos database. In the following sections, we discuss the system model for Cosmos DB’s global distribution with its anti-entropy-based design for scaling writes across the world.

System model for global distribution

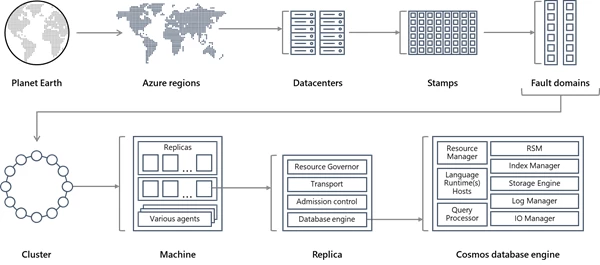

The Cosmos DB service is a foundational service of Azure, so it is deployed across all Azure regions worldwide, including the public, sovereign, DoD and government clouds. Within a datacenter, we deploy and manage the Cosmos DB service on massive “stamps” of machines, each with dedicated local storage. The service is spread across many clusters in the datacenter, each potentially running multiple generations of hardware. Machines within a cluster are typically spread across 10-20 fault domains.

Figure 1: System topology

Global distribution in Cosmos DB is turn-key: at any time, with a few clicks (or programmatically with a single API call), customers can add or remove any number of geographical regions associated with their Cosmos database. A Cosmos database, in turn, consists of a set of Cosmos containers. In Cosmos DB, containers serve as the logical units of distribution and scalability. The collections, tables, and graphs that you create are (internally) represented as Cosmos containers. Containers are completely schema agnostic and provide a scope for a query. All data in a Cosmos container is automatically indexed upon ingestion. This enables users to query the data without having to deal with schema or the hassles of index management, especially in a globally distributed setup.

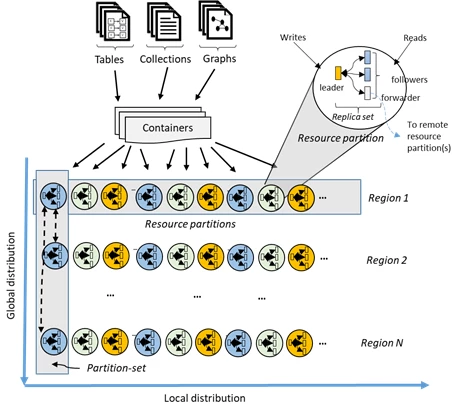

As seen from Figure 2, the data within a container is distributed along two dimensions:

- Within a given region, data within a container is distributed using a partition key that you provide; the distribution is transparently managed by the underlying resource partitions (local distribution).

- Each resource partition is also replicated across geographical regions (global distribution).

When an app using Cosmos DB elastically scales throughput (or consumes more storage) on a Cosmos container, Cosmos DB transparently performs partition management (e.g., split, clone, delete, etc.) operations across all the regions. Independent of the scale, distribution or failures, Cosmos DB continues to provide a single system image of the data within the containers, which are globally distributed across any number of regions.

Figure 2: Distribution of resource partitions across two dimensions, spanning multiple regions around the world.
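To make the two dimensions concrete, here is a minimal Python sketch. It is an illustration only, not how the service routes requests: the region names, the partition count, the SHA-256 hash, and the helper names `resource_partition_for` and `placements_for` are all assumptions made for this example.

```python
import hashlib
from typing import List, Tuple

# All values below are hypothetical and chosen only for illustration.
REGIONS = ["West US", "North Europe", "Southeast Asia"]
PARTITION_COUNT = 4  # assumed number of resource partitions per region


def resource_partition_for(partition_key: str, partition_count: int = PARTITION_COUNT) -> int:
    """Local distribution: map a partition key to a resource partition index."""
    digest = hashlib.sha256(partition_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partition_count


def placements_for(partition_key: str, regions: List[str] = REGIONS) -> List[Tuple[str, int]]:
    """Global distribution: the partition that owns the key exists in every region."""
    index = resource_partition_for(partition_key)
    return [(region, index) for region in regions]


if __name__ == "__main__":
    for region, index in placements_for("customer-42"):
        print(f"{region}: resource partition {index}")
```

The point of the sketch is that a key is first mapped to one resource partition (the local dimension), and that same partition then has a counterpart in each configured region (the global dimension).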

Physically, a resource partition is implemented by a group of replicas, called a replica-set. Each machine hosts hundreds of replicas corresponding to various resource partitions within a fixed set of processes (see Figure 1). Replicas corresponding to the resource partitions are dynamically placed and load balanced across the machines within a cluster and datacenters within a region.

A replica uniquely belongs to a Cosmos DB tenant. Each replica hosts an instance of Cosmos DB’s database engine, which manages the resources as well as the associated indexes. The Cosmos DB database engine operates on an atom-record-sequence (ARS) based type system [1]. The engine is completely agnostic to the concept of a schema and blurs the boundary between the structure and the instance values of records. Cosmos DB achieves full schema agnosticism by automatically and efficiently indexing everything upon ingestion, which allows users to query their globally distributed data without having to deal with schema or index management. The Cosmos DB database engine, in turn, consists of components including implementations of several coordination primitives, language runtimes, the query processor, and the storage and indexing subsystems responsible for transactional storage and indexing of data, respectively. To provide durability and high availability, the database engine persists its data and index on SSDs and replicates them among the database engine instances within the replica-set(s). Larger tenants correspond to a higher scale of throughput and storage and have either bigger replicas, more replicas, or both (and vice versa). Every component of the system is fully asynchronous – no thread ever blocks, and each thread does short-lived work without incurring any unnecessary thread switches. Rate-limiting and back-pressure are plumbed across the entire stack, from the admission control to all I/O paths. Our database engine is designed to exploit fine-grained concurrency and to deliver high throughput while operating within frugal amounts of system resources.

Cosmos DB’s global distribution relies on two key abstractions – replica-sets and partition-sets. A replica-set is a modular Lego block for coordination, and a partition-set is a dynamic overlay of one or more geographically distributed resource partitions. In order to understand how global distribution works, we need to understand these two key abstractions.

Replica-sets – Lego blocks of coordination

A resource partition is materialized as a self-managed and dynamically load-balanced group of replicas spread across multiple fault domains, called a replica-set. This set collectively implements the replicated state machine protocol to make the data within the resource partition highly available, durable, and strongly consistent. The replica-set membership N is dynamic – it keeps fluctuating between NMin and NMax based on the failures, administrative operations, and the time for failed replicas to regenerate/recover. Based on the membership changes, the replication protocol also reconfigures the size of the read and write quorums. To uniformly distribute the throughput that is assigned to a given resource partition, we employ two ideas. First, the cost of processing the write requests on the leader is higher than that of applying the updates on a follower; correspondingly, the leader is budgeted more system resources than the followers. Second, as far as possible, the read quorum for a given consistency level is composed exclusively of follower replicas. We avoid contacting the leader for serving reads unless absolutely required. We employ a number of ideas from the research on the relationship between load and capacity in quorum-based systems for the five consistency models that Cosmos DB supports.
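As a rough illustration of how quorum sizes can follow a changing membership, the sketch below recomputes majority-style quorums whenever N changes. The bounds chosen for `N_MIN` and `N_MAX`, the majority rule, and the `reconfigure` helper are assumptions for this example; the post does not state the actual values or the quorum formula that Cosmos DB uses.

```python
from dataclasses import dataclass

# Assumed bounds: the text names NMin and NMax but gives no concrete values.
N_MIN, N_MAX = 3, 5


@dataclass(frozen=True)
class Quorums:
    members: int
    write: int
    read: int


def reconfigure(members: int) -> Quorums:
    """Recompute quorum sizes after a membership change (majority rule assumed)."""
    if not N_MIN <= members <= N_MAX:
        raise ValueError(f"membership {members} is outside [{N_MIN}, {N_MAX}]")
    write = members // 2 + 1    # majority write quorum
    read = members - write + 1  # smallest read quorum that intersects every write quorum
    return Quorums(members, write, read)


if __name__ == "__main__":
    for n in range(N_MIN, N_MAX + 1):
        print(reconfigure(n))
```

With this rule the read and write quorums always overlap in at least one replica, because their sizes sum to N + 1.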

Partition-sets – dynamic, geographically-distributed overlays

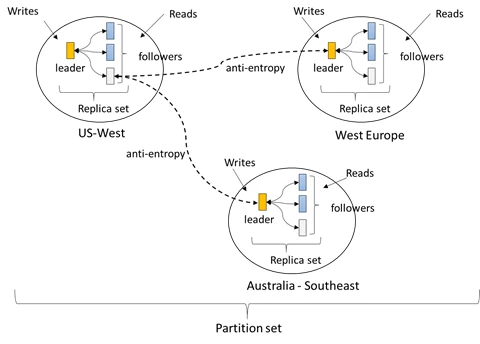

A group of resource partitions, one from each of the regions configured with the Cosmos database, is composed to manage the same set of keys replicated across all configured regions. This higher coordination primitive is called a partition-set – a geographically distributed dynamic overlay of resource partitions managing a given set of keys. While a given resource partition (i.e., a replica-set) is scoped within a cluster, a partition-set can span clusters, datacenters and geographical regions (Figure 2 and Figure 3).

Figure 3: Partition-set is a dynamic overlay of resource partitions

You can think of a partition-set as a geographically dispersed “super replica-set”, which is composed of multiple replica-sets owning the same set of keys. Similar to a replica-set, a partition-set’s membership is also dynamic – it fluctuates based on implicit resource partition management operations that add partitions to or remove partitions from a given partition-set (e.g., when you scale out throughput on a container, add a region to or remove a region from your Cosmos database, when failures occur, etc.). By virtue of having each of the partitions (of a partition-set) manage the partition-set membership within its own replica-set, the membership is fully decentralized and highly available. During the reconfiguration of a partition-set, the topology of the overlay between resource partitions is also established. The topology is dynamically selected based on the consistency level, the geographical distance and the available network bandwidth between the source and the target resource partitions.

The service allows you to configure your Cosmos databases with either a single write region or multiple write regions, and depending on the choice, partition-sets are configured to accept writes in exactly one or all regions. The system employs a two-level, nested consensus protocol – one level operates within the replicas of a replica-set of a resource partition accepting the writes, and the other operates at the level of a partition-set to provide complete ordering guarantees for all the committed writes within the partition-set. This multi-layered, nested consensus is critical for the implementation of our stringent SLAs for high availability, as well as the implementation of the consistency models, which Cosmos DB offers to its customers.

Anti-entropy with flexible conflict resolution

Our design for update propagation, conflict resolution and causality tracking is inspired by the prior work on epidemic algorithms and the Bayou system. While the kernels of the ideas have survived and provide a convenient frame of reference for communicating Cosmos DB’s system design, they have also undergone significant transformation as we applied them to the Cosmos DB system. This was needed because the previous systems were designed neither with the resource governance nor for the scale at which Cosmos DB needs to operate, nor to provide the capabilities (e.g., bounded staleness consistency) and the stringent and comprehensive SLAs that Cosmos DB delivers to its customers.

Recall that a partition-set is distributed across multiple regions and follows Cosmos DB’s (multi-master) replication protocol to replicate the data among the resource partitions comprising a given partition-set. Each resource partition (of a partition-set) accepts writes and serves reads typically to the clients that are local to that region. Writes accepted by a resource partition within a region are durably committed and made highly available within the resource partition before they are acknowledged to the client. These are tentative writes and are propagated to other resource partitions within the partition-set using an anti-entropy channel. Clients can request either tentative or committed writes by passing a request header. The anti-entropy propagation (including the frequency of propagation) is dynamic, based on the topology of the partition-set, regional proximity of the resource partitions and the consistency level configured. Within a partition-set, Cosmos DB follows a primary commit scheme with a dynamically selected arbiter partition. The arbiter selection is an integral part of the reconfiguration of the partition-set based on the topology of the overlay. The committed writes (including multi-row/batched updates) are guaranteed to be totally ordered.
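The flow from tentative to committed writes can be pictured with a toy simulation. This is a heavily simplified sketch under our own assumptions: the `ResourcePartition`, `Arbiter`, and `anti_entropy_round` names are hypothetical, the arbiter is fixed rather than dynamically selected, and durability, topology, and failures are ignored entirely.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class ResourcePartition:
    region: str
    next_seq: int = 0
    # Tentative writes: (origin region, local sequence number, payload).
    tentative: List[Tuple[str, int, str]] = field(default_factory=list)
    # Committed writes: (global commit sequence number, origin region, payload).
    committed: List[Tuple[int, str, str]] = field(default_factory=list)

    def accept_write(self, payload: str) -> Tuple[str, int, str]:
        """Accept a write locally; it stays tentative until the arbiter orders it."""
        self.next_seq += 1
        record = (self.region, self.next_seq, payload)
        self.tentative.append(record)
        return record


class Arbiter:
    """Assigns a single total order to writes from all regions (primary commit)."""

    def __init__(self) -> None:
        self.commit_seq = 0
        self.log: List[Tuple[int, str, str]] = []

    def commit(self, record: Tuple[str, int, str]) -> None:
        region, _, payload = record
        self.commit_seq += 1
        self.log.append((self.commit_seq, region, payload))


def anti_entropy_round(partitions: List[ResourcePartition], arbiter: Arbiter) -> None:
    """Ship tentative writes to the arbiter, then distribute the committed order."""
    for partition in partitions:
        for record in partition.tentative:
            arbiter.commit(record)
        partition.tentative.clear()
    for partition in partitions:
        partition.committed = list(arbiter.log)
```

After one round, every partition in the sketch holds the same committed log with gap-free commit sequence numbers, which is the total-order property described above.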

We employ encoded vector clocks (containing the region id and logical clocks corresponding to each level of consensus, at the replica-set and the partition-set respectively) for causality tracking, and version vectors to detect and resolve update conflicts. The topology and the peer selection algorithm are designed to ensure fixed and minimal storage and minimal network overhead of version vectors. The algorithm guarantees the strict convergence property.
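For readers unfamiliar with version vectors, the following generic sketch shows how they separate causally ordered updates from concurrent (conflicting) ones. It is a textbook formulation, not Cosmos DB's encoded format; the `compare` and `merge` helpers and the region ids are assumptions for this example.

```python
from typing import Dict

# A version vector maps a region id to the count of updates seen from that region.
VersionVector = Dict[str, int]


def compare(a: VersionVector, b: VersionVector) -> str:
    """Return 'before', 'after', 'equal', or 'concurrent' for a relative to b."""
    regions = set(a) | set(b)
    a_ahead = any(a.get(r, 0) > b.get(r, 0) for r in regions)
    b_ahead = any(b.get(r, 0) > a.get(r, 0) for r in regions)
    if a_ahead and b_ahead:
        return "concurrent"  # neither dominates: a write-write conflict
    if a_ahead:
        return "after"
    if b_ahead:
        return "before"
    return "equal"


def merge(a: VersionVector, b: VersionVector) -> VersionVector:
    """Element-wise maximum: the vector after both histories have been applied."""
    return {r: max(a.get(r, 0), b.get(r, 0)) for r in set(a) | set(b)}


if __name__ == "__main__":
    print(compare({"us": 2, "eu": 1}, {"us": 1, "eu": 1}))  # after
    print(compare({"us": 2, "eu": 0}, {"us": 1, "eu": 1}))  # concurrent
```

Only the "concurrent" outcome needs a conflict resolution policy; the other outcomes are resolved by causality alone.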

For the Cosmos databases configured with multiple write regions, the system offers a number of flexible automatic conflict resolution policies for the developers to choose from, including:

- Last-Write-Wins (LWW) which, by default, uses a system-defined timestamp property (which is based on the time-synchronization clock protocol). Cosmos DB also allows you to specify any other custom numerical property to be used for conflict resolution.

- Application-defined custom conflict resolution policy (expressed via merge procedures), which is designed for reconciling conflicts according to application-defined semantics. These procedures are invoked upon detection of write-write conflicts, under the auspices of a database transaction on the server side. The system provides an exactly-once guarantee for the execution of a merge procedure as part of the commitment protocol. There are several samples available for you to play with.

- Conflict-free replicated data types (CRDTs), supported natively inside the core (ARS) type system of our database engine. This, in turn, enables automatic resolution of conflicts, transactionally and directly inside the database engine, as part of the commitment protocol.
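Two of the policies above can be illustrated with a short, generic sketch: last-write-wins over a numeric property, and a grow-only counter as the simplest CRDT. The helper `resolve_lww`, the `GCounter` class, the `priority` property, and the tie-breaking rule are assumptions for this example and are not the service's implementation; `_ts` stands in for the system-defined timestamp property.

```python
from typing import Dict, Optional


def resolve_lww(a: dict, b: dict, prop: str = "_ts") -> dict:
    """Last-Write-Wins: keep the version with the larger value of `prop`.

    Ties go to `a` here; how the service breaks ties is not covered by this sketch.
    """
    return a if a[prop] >= b[prop] else b


class GCounter:
    """Grow-only counter CRDT: one monotonically increasing count per region."""

    def __init__(self, counts: Optional[Dict[str, int]] = None) -> None:
        self.counts: Dict[str, int] = dict(counts or {})

    def increment(self, region: str, amount: int = 1) -> None:
        self.counts[region] = self.counts.get(region, 0) + amount

    def merge(self, other: "GCounter") -> "GCounter":
        """Element-wise maximum; commutative, associative, and idempotent."""
        regions = set(self.counts) | set(other.counts)
        return GCounter({r: max(self.counts.get(r, 0), other.counts.get(r, 0)) for r in regions})

    @property
    def value(self) -> int:
        return sum(self.counts.values())


if __name__ == "__main__":
    older = {"id": "1", "qty": 3, "_ts": 100}
    newer = {"id": "1", "qty": 5, "_ts": 105}
    print(resolve_lww(older, newer)["qty"])

    us, eu = GCounter(), GCounter()
    us.increment("us", 2)
    eu.increment("eu", 3)
    print(us.merge(eu).value)
```

The contrast is the useful part: LWW discards one of the conflicting versions, while a CRDT merge keeps the effect of both updates regardless of the order in which replicas exchange state.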

Precisely defined five consistency models

Whether you configure your Cosmos database with a single write region or multiple write regions, you can use the five well-defined consistency models that the service offers. With the newly added support for enabling multiple write regions, the following are a few notable aspects of the consistency levels:

- As before, bounded staleness consistency guarantees that all reads will be within k prefixes or t seconds of the latest write in any of the regions. Furthermore, reads with bounded staleness consistency are guaranteed to be monotonic and to honor consistent-prefix guarantees. The anti-entropy protocol operates in a rate-limited manner and ensures that the prefixes do not accumulate and that backpressure on the writes does not have to be applied.

- As before, session consistency guarantees monotonic read, monotonic write, read-your-own-writes (RYOW), write-follows-read and consistent-prefix guarantees worldwide.

- For the databases configured with strong consistency, the system switches back to a single write region by designating a leader within each of the partition-sets.
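One common way to obtain read-your-own-writes and monotonic reads within a session is for the client to carry a token recording the newest write it has observed. The sketch below illustrates that general technique only; the `Replica` and `Session` classes, the single integer token, and the per-replica sequence number are assumptions for this example and do not describe the token format Cosmos DB uses.

```python
from typing import Dict, List, Optional


class Replica:
    def __init__(self, name: str) -> None:
        self.name = name
        self.lsn = 0  # highest log sequence number applied so far
        self.data: Dict[str, str] = {}

    def apply(self, lsn: int, key: str, value: str) -> None:
        self.data[key] = value
        self.lsn = max(self.lsn, lsn)


class Session:
    """Client-side session that tracks the newest write it has seen."""

    def __init__(self) -> None:
        self.token = 0

    def write(self, replica: Replica, key: str, value: str) -> None:
        lsn = replica.lsn + 1
        replica.apply(lsn, key, value)
        self.token = max(self.token, lsn)

    def read(self, replicas: List[Replica], key: str) -> Optional[str]:
        """Serve the read from the first replica that has caught up to the token."""
        for replica in replicas:
            if replica.lsn >= self.token:
                self.token = max(self.token, replica.lsn)  # keeps later reads monotonic
                return replica.data.get(key)
        raise RuntimeError("no replica has caught up to the session token")
```

In the sketch, a lagging replica is simply skipped for that session, so a client never observes a state older than one it has already written or read.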

The semantics of the five consistency models are documented separately and are specified mathematically using high-level TLA+ specifications.

Conclusion

As a globally distributed database, Cosmos DB transparently replicates your data to any number of Azure regions. With its novel, fully decentralized, multi-master replication architecture, you can elastically scale both writes and reads across all the regions associated with your Cosmos database. The ability to elastically scale writes globally by writing to local replicas of your Cosmos database anywhere in the world has been in the works for the last few years. We are excited that this feature is now generally available to everyone!

Acknowledgements

Azure Cosmos DB started as “Project Florence” in late 2010 before expanding and blossoming into its current form. Our thanks to all the teams inside Microsoft who have made Azure Cosmos DB robust through their extensive use of the service over the years. We stand on the shoulders of giants – there are many component technologies Azure Cosmos DB is built upon, including Compute, Networking and Service Fabric – and we thank them for their continued support. Thanks to Dr. Leslie Lamport for inspiring us and influencing our approach to designing distributed systems. We are very grateful to our customers who have relied on Cosmos DB to build their mission-critical apps, pushed the limits of the service and always demanded the best. Last but not least, thanks to all the amazing Cosmonauts for their deep commitment and care.

[1] Grammars like JSON, BSON, and CQL are strict subsets of the ARS type system.