Microsoft Azure offers load balancing services for virtual machines (IaaS) and cloud services (PaaS) hosted in the Microsoft Azure cloud. Load balancing allows your application to scale and provides resiliency to application failures among other benefits.

The load balancing services can be accessed by specifying input endpoints on your services, either via the Microsoft Azure Portal or via the service model of your application. Once a hosted service with one or more input endpoints is deployed in Microsoft Azure, the platform automatically configures the load balancing services for it. To get the benefit of resiliency and redundancy, you need at least two virtual machines serving the same endpoint.

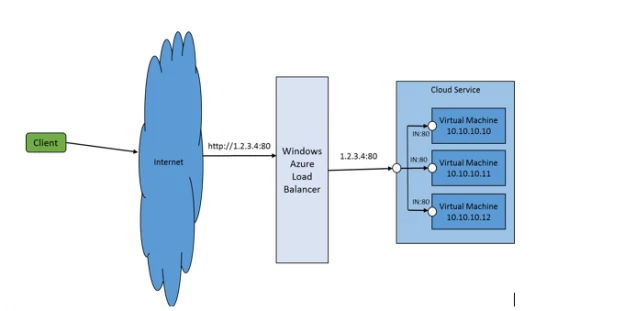

The following diagram is an example of an application hosted in Microsoft Azure that uses load balancing service to direct incoming traffic (on address/port 1.2.3.4:80) to three virtual machines, all listening on port 80.

The key features of the load balancing services in Microsoft Azure are:

PaaS / IaaS support

Load balancing services in Microsoft Azure work with all tenant types (IaaS or PaaS) and all OS flavors (Windows or any supported Linux-based OS).

PaaS tenants are configured via the service model. IaaS tenants are configured either via the Management Portal or via PowerShell.

Layer-4 Load Balancer, Hash based distribution

Microsoft Azure Load Balancer is a Layer-4 load balancer. It distributes load among a set of available servers (virtual machines) by computing a hash function on the traffic received on a given input endpoint. The hash function is computed such that all the packets from the same connection (TCP or UDP) end up on the same server. The Microsoft Azure Load Balancer uses a 5-tuple (source IP, source port, destination IP, destination port, protocol type) to calculate the hash that is used to map traffic to the available servers. The hash function is chosen such that the distribution of connections to servers is fairly random. However, depending on the traffic pattern, it is possible for different connections to get mapped to the same server. (Note that the distribution of connections to servers is NOT round robin, nor is there any queuing of requests, as has been mistakenly mentioned in some articles and blogs.) The basic premise of the hash function is that, given a lot of requests coming from a lot of different clients, you will get a nice distribution of requests across the servers.
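To make the 5-tuple idea concrete, here is a minimal sketch in Python. The actual hash function used by the load balancer is not described in this post, so SHA-256 is only a stand-in, and the function name `pick_server`, the server names, and the client address are hypothetical.

```python
import hashlib
from collections import Counter


def pick_server(src_ip, src_port, dst_ip, dst_port, protocol, servers):
    """Map a connection's 5-tuple to one of the available servers.

    SHA-256 is an assumption used for illustration only; it is not
    the hash function the real load balancer uses.
    """
    key = f"{src_ip}|{src_port}|{dst_ip}|{dst_port}|{protocol}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


servers = ["vm-0", "vm-1", "vm-2"]  # hypothetical VM names

# Every packet of one connection carries the same 5-tuple,
# so it always lands on the same server.
first = pick_server("203.0.113.7", 50123, "1.2.3.4", 80, "tcp", servers)
again = pick_server("203.0.113.7", 50123, "1.2.3.4", 80, "tcp", servers)

# Many connections (here: one client, many source ports) spread out
# across the servers without any round robin or queuing.
counts = Counter(
    pick_server("203.0.113.7", port, "1.2.3.4", 80, "tcp", servers)
    for port in range(49152, 52152)
)
```

Note that nothing in this scheme prevents two different connections from hashing to the same server; the spread only evens out over a large number of connections.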

Multiple Protocol Support

Load balancing services in Microsoft Azure support the TCP and UDP protocols. Customers can specify the protocol in the input endpoint specification in their service model, via PowerShell, or via the Management Portal.

Multiple Endpoint Support

A hosted service can specify multiple input endpoints and they will automatically get configured on the load balancing service.

Currently, multiple endpoints with the same port AND protocol are not supported. There is also a limit on the number of endpoints a hosted service can have, which is currently set to 150.
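The two constraints above can be checked before deployment with something like the following sketch. This is not how the platform validates a service model; the function name `validate_endpoints` and the `(name, protocol, port)` tuple layout are assumptions made for illustration.

```python
MAX_ENDPOINTS = 150  # limit stated in this post


def validate_endpoints(endpoints):
    """Return a list of problems for (name, protocol, port) tuples.

    Hypothetical helper: it only mirrors the two rules in the text.
    """
    errors = []
    if len(endpoints) > MAX_ENDPOINTS:
        errors.append(
            f"{len(endpoints)} endpoints exceed the limit of {MAX_ENDPOINTS}"
        )
    seen = {}
    for name, protocol, port in endpoints:
        key = (protocol.lower(), port)
        if key in seen:
            errors.append(
                f"{name} and {seen[key]} both use {key[0]} port {port}"
            )
        else:
            seen[key] = name
    return errors
```

For example, a TCP and a UDP endpoint can share port 80, while two TCP endpoints on port 80 are reported as a conflict.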

Internal Endpoint Support

Every service can specify up to 25 internal endpoints that are not exposed to the load balancer and are used for communicating between the service roles.

Direct Port Endpoint Support (Instance Input Endpoint)

A hosted service can specify that a given endpoint should not be load balanced and should instead get direct access to the virtual machine hosting the service. This allows an application to redirect a client directly to a given instance of the application (VM) without having each request load balanced (and, as a result, potentially landing on a different instance).

Automatic reconfiguration on scale out/down, service healing and updates

The load balancing service works in conjunction with the Microsoft Azure Compute Service to ensure that if the number of server instances specified for an input endpoint scales up or down (either by changing the instance count for a web/worker role or by putting additional persistent VMs under the same load balancing group), the load balancing service automatically reconfigures itself to load balance across the increased or decreased number of instances.

The load balancing service also transparently reconfigures itself in response to service healing actions by the Microsoft Azure fabric controller or service updates by the customer.

Service Monitoring

The load balancing service can probe the health of the various server instances and take unhealthy server instances out of rotation. Three types of probes are supported: Guest Agent probes (on PaaS VMs), HTTP custom probes and TCP custom probes. In the case of the Guest Agent, the load balancing service queries the Guest Agent in the VM to learn about the status of the service. In the case of HTTP, the load balancing service relies on fetching a specified URL to determine the health of an instance. For TCP, it relies on successful TCP session establishment to a defined probe port.

Source NAT (SNAT)

All outbound traffic originating from a service is Source NATed (SNAT) using the same VIP address as for incoming traffic. We will dive into how SNAT works in a future post.

Intra Data Center Traffic Optimization

Microsoft Azure Load Balancer optimizes traffic between Microsoft Azure datacenters in the same region. When Azure tenants within the same region talk to each other over the VIP, their traffic bypasses the Microsoft Azure Load Balancer altogether once the TCP/IP connection has been initiated.

VIP Swap

Microsoft Azure Load Balancer allows the VIPs of two tenants to be swapped, so that a tenant in “stage” can be moved to “production” and vice versa. The VIP Swap operation lets clients keep using the same VIP to talk to the service while a new version of the service is deployed. The new version of the service can be deployed and tested in a staging environment without interfering with the production traffic. Once the new version passes any tests needed, it can be promoted to production by swapping it with the existing production service. Existing connections to the “old” production continue unaltered. New connections are directed to the “new” production environment.
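The behavior described for VIP Swap can be pictured with a toy model. This is only a sketch of the semantics (existing connections stay where they are, new connections follow the swap); the class `HostedService` and its methods are hypothetical and do not correspond to any Azure API.

```python
class HostedService:
    """Toy model of a hosted service with production and staging slots."""

    def __init__(self, production, staging):
        self.slots = {"production": production, "staging": staging}
        self.connections = {}  # connection id -> deployment serving it

    def connect(self, conn_id):
        # New connections arriving on the VIP go to whatever
        # deployment currently sits in the production slot.
        self.connections[conn_id] = self.slots["production"]
        return self.connections[conn_id]

    def vip_swap(self):
        # Swap the two slots; connections already established are
        # left untouched.
        self.slots["production"], self.slots["staging"] = (
            self.slots["staging"],
            self.slots["production"],
        )


service = HostedService(production="v1", staging="v2")
before = service.connect("client-a")  # served by v1
service.vip_swap()
after = service.connect("client-b")   # served by v2
```

After the swap, `client-a` is still recorded against `v1`, while `client-b` lands on `v2`.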

Example: Load Balanced Service

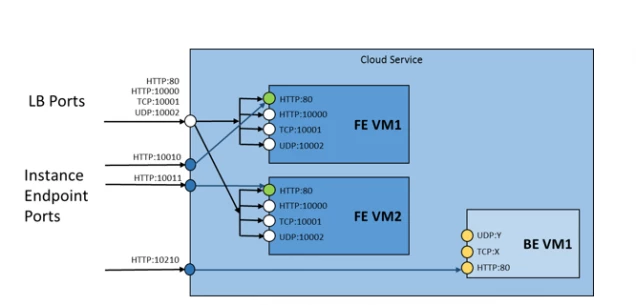

Next we will see how most of the above features offered by the load balancing service can be used in a sample cloud service. The PaaS tenant we want to model is shown in the diagram below:

The tenant has two Frontend (FE) roles and one Backend (BE) role. The FE role exposes four load balanced endpoints using http, tcp and udp protocols. One of the endpoints is also used to indicate the health of the role to the load balancer. The BE role exposes three endpoints using http, tcp and udp protocols. Both FE and BE roles expose one direct port endpoint to the corresponding instance of the service.

The above service is expressed as follows using the Azure service model (some schema specifics have been removed to make it clearer):

Analyzing the service model, we start with defining the health probe the load balancer should use to query the health of the service:

This says that we have an http custom probe, using the URL relative path “Probe.aspx”. This probe will be attached later to an endpoint to be fully specified.

Next we define the FE Role as a WorkerRole. This has several load balanced endpoints using http, tcp and udp as follows:

Since we did not assign a custom probe to these endpoints, the health of the above endpoints is controlled by the guest agent on the VM and can be changed by the service using the StatusCheck event.

Next we define an additional http endpoint on port 80, which uses the custom probe we defined before (MyProbe):

The load balancer combines the info of the endpoint and the info of the probe to create a URL of the form http://{DIP of VM}:80/Probe.aspx, which it will use to query the health of the service. The service will notice (for example, in its logs) that the same IP is accessing it periodically. This is the health probe request coming from the host of the node where the VM is running.

The service has to respond with an HTTP 200 status code for the load balancer to assume that the service is healthy. Any other HTTP status code (e.g. 503) directly takes the VM out of rotation.
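As a rough illustration of how the endpoint and probe information combine, and of the "200 or nothing" rule, consider the sketch below. The helper names and the DIP `10.0.0.4` used in the usage line are hypothetical, and plain http is assumed because the probe is declared as an http custom probe on port 80.

```python
def probe_url(dip, port, relative_path):
    """Build the URL the load balancer would fetch for an HTTP probe."""
    return f"http://{dip}:{port}/{relative_path.lstrip('/')}"


def is_healthy(status_code):
    """Only HTTP 200 counts as healthy; any other code does not."""
    return status_code == 200


url = probe_url("10.0.0.4", 80, "Probe.aspx")  # hypothetical DIP
```

A service that wants to take itself out of rotation on purpose (for example, while it is overloaded) can therefore simply answer the probe with a non-200 status such as 503.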

The probe definition also controls the frequency of the probe. In our case above, the load balancer probes the endpoint every 15 seconds. If no positive answer is received for 30 seconds (two probe intervals), the probe is assumed down and the VM is taken out of rotation. Similarly, if the service is out of rotation and a positive answer is received, the service is put back into rotation right away. If the service is fluctuating between healthy and unhealthy, the load balancer can decide to delay the re-introduction of the service into rotation until it has been healthy for a number of probes.
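The timing rules above can be sketched as a small state tracker. This is a simplified model under stated assumptions, not the load balancer's implementation: the class name `ProbeTracker` is hypothetical, a missing answer is represented by `None`, and the delayed re-introduction for fluctuating services is deliberately left out.

```python
class ProbeTracker:
    """Track whether one instance is in rotation, based on probe results."""

    def __init__(self, interval_s=15, timeout_s=30):
        self.interval_s = interval_s  # how often the endpoint is probed
        self.timeout_s = timeout_s    # silence tolerated before removal
        self.in_rotation = True
        self.last_success = 0.0

    def record(self, now, status_code):
        """Record one probe result; status_code is None if no answer."""
        if status_code == 200:
            # A positive answer puts the instance (back) into rotation.
            self.last_success = now
            self.in_rotation = True
        elif status_code is not None:
            # Any explicit non-200 answer removes the instance directly.
            self.in_rotation = False
        elif now - self.last_success >= self.timeout_s:
            # No positive answer for the whole timeout window.
            self.in_rotation = False
        return self.in_rotation


tracker = ProbeTracker()
timeline = [
    tracker.record(0, 200),    # healthy
    tracker.record(15, None),  # one missed probe, still in rotation
    tracker.record(30, None),  # 30 seconds without success, removed
    tracker.record(45, 200),   # positive answer, back right away
    tracker.record(60, 503),   # explicit failure, removed directly
]
```

With the 15/30 second settings, a single missed probe is tolerated, while two consecutive misses or one explicit error response take the instance out of rotation.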

The FE service exposes a set of direct ports, one for each instance (instance input endpoint), that connect directly to the FE instance on the port specified below:

The above definition connects using tcp ports 10110, 10111, … to port 80 of each FE role VM instance. This capability can be used in many ways:

a) Get direct access to a given instance and perform action only to that instance

b) Redirect a user app to a particular instance after it has gone through a load balanced endpoint. This can be used for “sticky” sessions to a given instance. Note, though, that this can overload that instance and removes any redundancy.
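The mapping from public ports 10110, 10111, … to port 80 on each FE instance can be pictured as a simple lookup table. The sketch below assumes that public ports are handed out sequentially in instance order, which the post implies but does not state; the function name `instance_port_map` is hypothetical.

```python
def instance_port_map(first_public_port, instance_count, local_port=80):
    """Map each public port to (instance index, local port).

    Assumption: ports are allocated sequentially, one per instance.
    """
    return {
        first_public_port + i: (i, local_port)
        for i in range(instance_count)
    }


fe_ports = instance_port_map(10110, 2)
# fe_ports == {10110: (0, 80), 10111: (1, 80)}
```

A client that was first served through the load balanced endpoint could then be redirected to, say, port 10111 on the VIP to keep talking to FE instance 1.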

Finally, the FE role exposes an internal endpoint, which can be used for communicating between FE / BE roles:

Each role can discover the endpoints it exposes, as well as what endpoints each other role is exposing by using the RoleEnvironment class.

The BE role is also modeled as a WorkerRole.

The BE role does not expose any load balanced endpoint, only internal endpoints using http, tcp and udp:

The BE role also exposes an instance input endpoint, that connects directly to the BE instances:

The above definition connects using tcp ports 10210, … to port 80 of each BE role VM instance.

We hope the above example demonstrates how all the load balancing features can be used together to model a service.

In future posts we will see this tenant in action and provide code samples. In addition we will describe in more detail:

a) How SNAT works

b) Custom Probes

c) Virtual Networking

Also, please send us your requests on what you would like to see in more detail.

For the Microsoft Azure Networking Team,

Marios Zikos