Updated on April 16, 2019: We are thrilled to announce that Video Indexer’s new AI Editor won a NAB Show Product of the Year Award in the AI/ML category at this year’s event! This prestigious award recognizes “the most significant and promising new products and technologies” exhibited at NAB each year.

After sweeping up multiple awards with the general availability release of Azure Media Services’ Video Indexer, including the 2018 IABM award for innovation in content management and the prestigious Peter Wayne Award, the team has remained focused on building a wealth of new features and models that allow any organization with a large archive of media content to unlock insights from that content, and to use those insights to improve searchability, enable new user scenarios and accessibility, and open new monetization opportunities.

At NAB Show 2019, we are proud to announce a range of new enhancements to Video Indexer’s models and experiences that will be rolled out this week, including:

- A new AI-based editor that allows you to create new content from existing media within minutes

- Enhancements to our custom people recognition, including central management of models and the ability to train models from images

- Language model training based on transcript edits, allowing you to effectively improve your language model to include your industry-specific terms

- New scene segmentation model (preview)

- New ending rolling credits detection models

- Availability in 9 different regions worldwide

- ISO 27001, ISO 27018, SOC 1, 2, and 3, HITRUST, FedRAMP, HIPAA, and PCI certifications

- Ability to take your data and trained models with you when moving from a trial to a paid Video Indexer account

Read on for more about all of these additions.

In addition, we have exciting news for customers who use our live streaming platform to ingest live feeds, transcode them, and dynamically package and encrypt them for delivery via industry-standard protocols like HLS and MPEG-DASH. Live transcription is a new feature in our v3 APIs that lets you enhance the streams delivered to your viewers with machine-generated text transcribed from the spoken words in the video stream. Initially, this text will be delivered only as IMSC1.1-compatible TTML packaged in MPEG-4 Part 30 (ISO/IEC 14496-30) fragments, which can be played back via a new build of Azure Media Player. More information on this feature, and on the private preview program, is available in the documentation, “Live transcription with Azure Media Services v3.”

We are also announcing two more private preview programs for multi-language transcription and animation detection, where selected customers will be able to influence the models and experiences around them. Come talk to us at NAB Show or contact your account manager to request to be added to these exciting programs!

Extracting fresh content from your media archive has never been easier

One of the ways to use deep insights from media files is to create new media from existing content. This can mean creating movie highlights for trailers, reusing old video clips in newscasts, creating shorter content for social media, or serving any other business need.

To support this scenario with just a few clicks, we created an AI-based editor that uses the metadata generated by Video Indexer to help you find the right media content, locate the parts you’re interested in, and combine them into an entirely new video. Once you’re happy with the result, it can be rendered and downloaded from Video Indexer and used in your own editing applications or downstream workflows.

All these capabilities are also available through our updated REST API. This means that you can write code that creates clips automatically based on insights. The new editor API calls are currently in public preview.
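As an illustration of the kind of logic such code might contain, here is a minimal sketch that picks clip time ranges from insights. It assumes the insights have already been retrieved and flattened into a hypothetical `Appearance` record (a label plus start and end times in seconds); it does not call the Video Indexer REST API, and the record and function names are hypothetical, not part of the actual Video Indexer insights schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Appearance:
    """Hypothetical flattened insight: a label seen between start and end (seconds)."""
    label: str
    start: float
    end: float


def select_clip_ranges(appearances, wanted_labels, padding=1.0, duration=None):
    """Return merged (start, end) ranges covering appearances of the wanted labels.

    Each matching appearance is widened by `padding` seconds on both sides,
    clamped to [0, duration], and overlapping or touching ranges are merged.
    """
    ranges = []
    for a in appearances:
        if a.label in wanted_labels:
            start = max(0.0, a.start - padding)
            end = a.end + padding
            if duration is not None:
                end = min(duration, end)
            ranges.append((start, end))

    ranges.sort()
    merged = []
    for start, end in ranges:
        if merged and start <= merged[-1][1]:
            # Overlaps the previous range: extend it instead of starting a new clip.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


if __name__ == "__main__":
    insights = [
        Appearance("goal", 120.0, 125.0),
        Appearance("crowd", 10.0, 20.0),
        Appearance("goal", 124.5, 130.0),
        Appearance("goal", 300.0, 304.0),
    ]
    # Two overlapping "goal" moments merge into one clip; the last is clamped to the duration.
    print(select_clip_ranges(insights, {"goal"}, padding=1.0, duration=302.0))
```

The resulting ranges could then be handed to whatever clip-creation step your workflow uses; merging overlaps first avoids producing near-duplicate clips.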

Want to give the new AI-based editor a try? Simply go to one of your indexed media files and click the “Open in editor” button to start creating new content.

More intuitive model customization and management

Video Indexer comes with a rich set of out-of-the-box models so you can upload your content and get insights immediately. However, AI models generally become more accurate when they are customized to the specific content they’re used for. Therefore, Video Indexer provides simple customization capabilities for selected models. One such customization is the ability to add custom person models to the more than 1 million celebrities that Video Indexer can currently identify out-of-the-box. This customization capability already existed in the form of training “unknown” people who appear in a video, but we received multiple customer requests to enhance it even more – so we did!

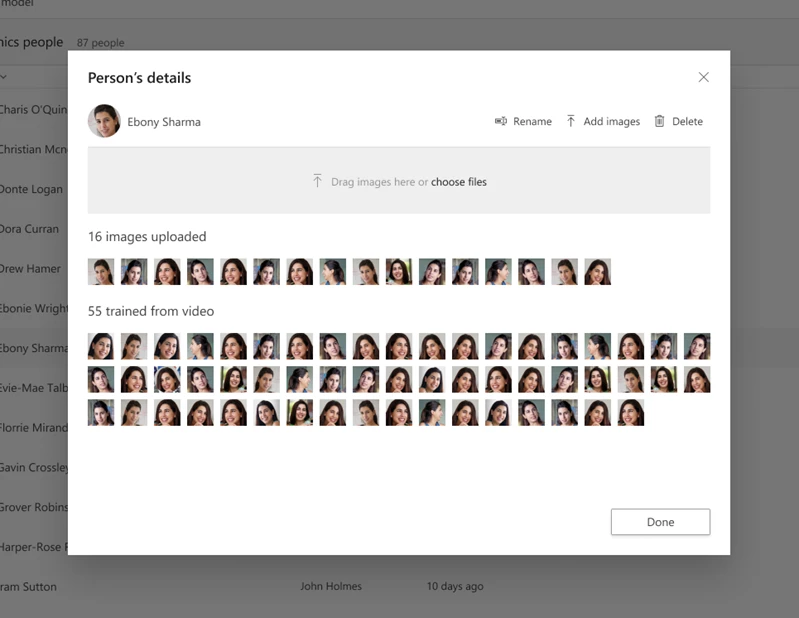

To make customizing person models easier, we added a central people recognition management page that allows you to create multiple custom person models per account, each of which can hold up to 1 million different entries. From this page you can create new models, add new people to existing models, and review, rename, or delete them if needed. On top of that, you can now train models based on your static images even before you have uploaded the first video to your account. Organizations that already have an archive of people images can now use those archives to pre-train their models. It’s as simple as dragging and dropping the relevant images onto the person’s name, or submitting them via the Video Indexer REST API (currently in preview).

Want to learn more? Read about our advanced custom face recognition options.

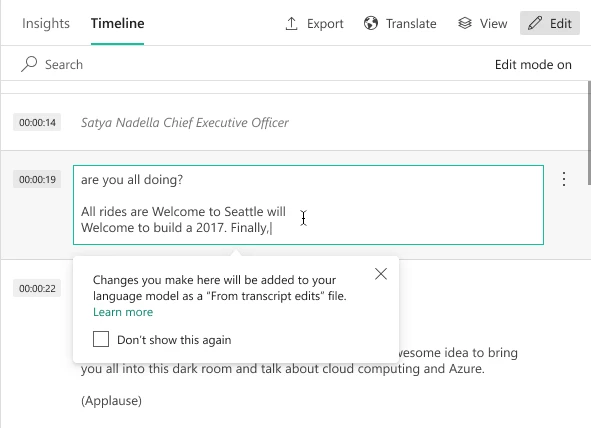

Another important customization is the ability to train language models on your organization’s terminology or industry-specific vocabulary. To help you improve transcription for your organization faster, Video Indexer now automatically collects manual transcript edits into a new entry in the language model you use. All you need to do then is click the “Train” button to add those edits to your own customized model. The idea is to create a feedback loop in which organizations begin with a base out-of-the-box language model and improve its accuracy through manual edits over time, until it aligns with their industry’s specific vocabulary and terms.

New additions to the Video Indexer pipeline

One of the primary benefits of Video Indexer is having one pipeline that orchestrates multiple insights from different channels into one timeline. We regularly work to enrich this pipeline with additional insights.

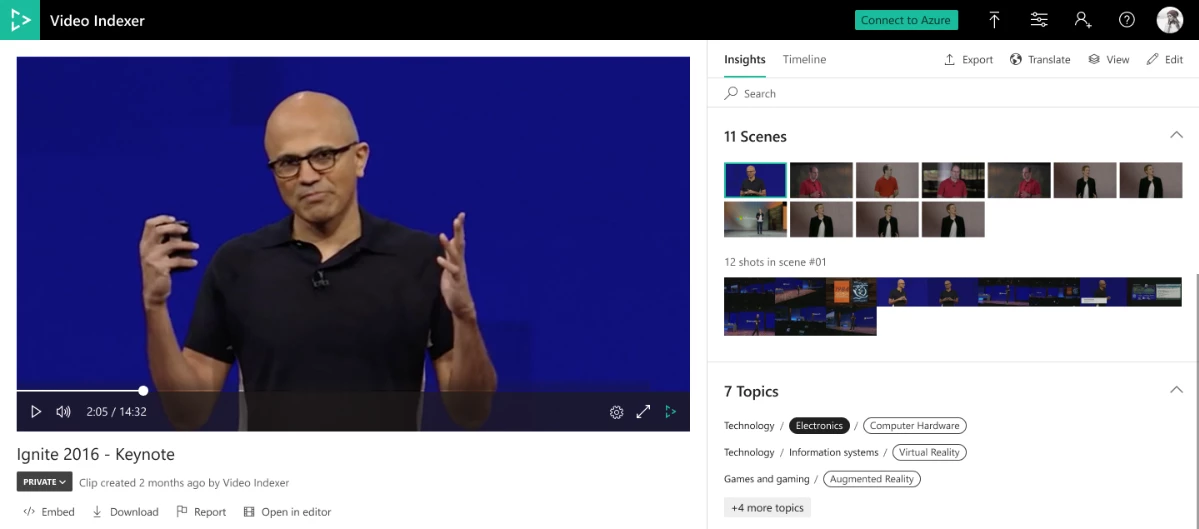

One of the latest additions to Video Indexer’s set of insights is the ability to segment a video into semantic scenes (currently in preview) based on visual cues. Semantic scenes add another level of granularity to the existing shot detection and keyframe extraction models in Video Indexer; each scene aims to depict a single event composed of a series of consecutive, semantically related shots.

Scenes can be used to group together a set of insights and treat them as insights from the same context in order to deduce a more complex meaning from them. For example, if a scene includes an airplane, a runway, and luggage, the customer can build logic that deduces that the scene takes place in an airport. Scenes can also be used as a unit to be extracted as a clip from a full video.
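The airport example above can be sketched as a simple rule check. This is a minimal illustration under stated assumptions: scenes are hypothetical `(start, end)` tuples in seconds, labels are hypothetical `(name, start, end)` tuples, and the rule table is made up for the example. It is not the Video Indexer output format or API, only the kind of customer-side logic the paragraph describes.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical shapes, not the Video Indexer insights schema.
Scene = Tuple[float, float]          # (start, end) in seconds
Label = Tuple[str, float, float]     # (name, start, end) in seconds


def labels_in_scene(scene: Scene, labels: List[Label]) -> Set[str]:
    """Collect (lowercased) label names whose time span overlaps the scene."""
    s_start, s_end = scene
    return {name.lower() for name, start, end in labels if start < s_end and end > s_start}


def infer_settings(scene_labels: Set[str], rules: Dict[str, Set[str]]) -> List[str]:
    """Return every setting whose required labels all appear in the scene."""
    return sorted(setting for setting, required in rules.items() if required <= scene_labels)


# Hypothetical rule table: a setting is inferred when all of its labels co-occur.
RULES = {
    "airport": {"airplane", "runway", "luggage"},
    "beach": {"sand", "sea"},
}

if __name__ == "__main__":
    scenes = [(0.0, 30.0), (30.0, 60.0)]
    labels = [("Airplane", 5.0, 10.0), ("runway", 8.0, 20.0),
              ("luggage", 25.0, 35.0), ("sand", 40.0, 50.0)]
    for scene in scenes:
        print(scene, infer_settings(labels_in_scene(scene, labels), RULES))
```

Requiring every label in a rule to co-occur within one scene is what makes the scene the useful unit here: the same labels scattered across unrelated parts of a video would not trigger the inference.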

Another cool addition to Video Indexer is the ability to identify the ending rolling credits of a movie or TV show. This can come in handy for broadcasters who want to know when their viewers have finished watching a video, and to identify the right moment to recommend the next show or movie before losing the audience.
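As a rough illustration of how a detected credits start time might feed into such logic, here is a minimal sketch. It assumes you already have the playback position, the total duration, and an optional credits start time (all in seconds); the function names and the `lead_seconds` parameter are hypothetical and not part of any Video Indexer API. The idea is to treat the start of the credits, rather than the end of the file, as the end of the content.

```python
from typing import Optional


def effective_end(duration: float, credits_start: Optional[float]) -> float:
    """Use the credits start as the content end if it was detected and is plausible."""
    if credits_start is None or not (0 < credits_start <= duration):
        return duration
    return credits_start


def completion_ratio(position: float, duration: float,
                     credits_start: Optional[float] = None) -> float:
    """Fraction of the actual content watched, clamped to [0, 1]."""
    end = effective_end(duration, credits_start)
    return min(1.0, max(0.0, position / end))


def should_recommend_next(position: float, duration: float,
                          credits_start: Optional[float] = None,
                          lead_seconds: float = 0.0) -> bool:
    """True once playback reaches the content end (optionally a bit earlier)."""
    return position >= effective_end(duration, credits_start) - lead_seconds


if __name__ == "__main__":
    # A one-hour episode whose credits start three minutes before the end.
    print(completion_ratio(3430.0, 3600.0, credits_start=3420.0))
    print(should_recommend_next(3430.0, 3600.0, credits_start=3420.0))
    print(should_recommend_next(3430.0, 3600.0))  # without credits detection
```

Without credits detection, a viewer who stops at the start of the credits would look like they abandoned the episode; with it, they count as having finished, and the recommendation can be shown before they leave.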

Video Indexer runs on trust (and in more regions)

As Video Indexer is part of the Azure Media Services family and is built to serve organizations of all sizes and industries, it is critical to us to help our customers meet their compliance obligations across regulated industries and markets worldwide. As part of that effort, we are excited to announce that Video Indexer is now ISO 27001, ISO 27018, SOC 1, 2, and 3, HIPAA, FedRAMP, PCI, and HITRUST certified. Learn more about the current certification status of Video Indexer and all other Azure services.

Additionally, we increased our service availability around the world and are now deployed to 9 regions for your convenience. Available regions now include East US (Trial), East US 2, South Central US, West US 2, North Europe, West Europe, Southeast Asia, East Asia, and Australia East. More regions are coming online soon, so stay tuned. You can always find the latest regional availability of Video Indexer by visiting the products by region page.

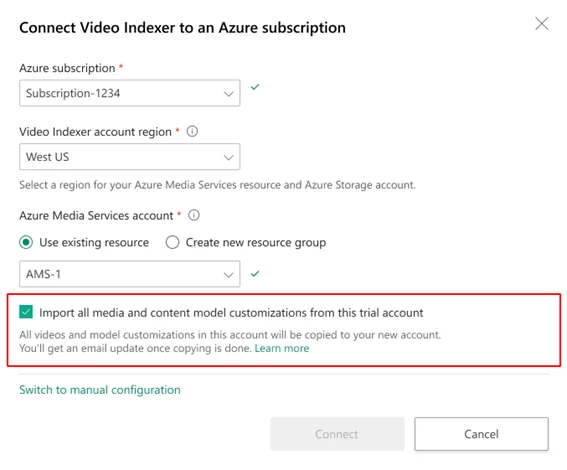

Video Indexer continues to be fully available for trial in East US. This allows organizations to evaluate the full Video Indexer functionality on their own data before creating a paid account using their own Azure subscription. Once organizations decide to move to their Azure subscription, they can copy all of the videos and model customizations they created in their trial account by simply checking the relevant check box in the account creation wizard.

Want to be the first to try out our newest capabilities?

Today, we are excited to announce three private preview programs for features that many different customers have asked for.

Live transcription – The ability to stream a live event in which the spoken words in the audio are transcribed to text and delivered along with the video and audio.

Mixed languages transcription – The ability to automatically identify multiple spoken languages in one video file and to create a mixed language transcription for that file.

Animation characters detection – The ability to identify characters in animated content as if they were real live people!

We will be selecting a set of customers from among those who would like to be our design partners for these new capabilities. Selected customers will be able to significantly influence these new capabilities and get models that are highly tuned to their data and organizational workflows. Want to be a part of this? Come visit us at NAB Show or contact your account manager for more details!

Visit us at NAB Show 2019

If you are attending NAB Show 2019, please stop by booth #SL6716 to see the latest Azure Media Services innovations! We’d love to meet you, learn more about what you’re building, and walk you through the different innovations Azure Media Services and our partners are releasing at NAB Show. We will also have product presentations in the booth throughout the show.

Have questions or feedback? We would love to hear from you! Use our UserVoice to help us prioritize features, or email VISupport@Microsoft.com for any questions.