MLOps Blog Series Part 4: Testing security of secure machine learning systems using MLOps

As businesses adopt more data-driven and machine learning-based solutions, they must handle larger workloads, which exposes them to additional complexity and new vulnerabilities.

Cybersecurity is the biggest risk for AI developers and adopters. According to a survey released by Deloitte in July 2020, 62 percent of adopters saw cybersecurity risks as a significant or extreme threat, but only 39 percent said they felt prepared to address those risks.

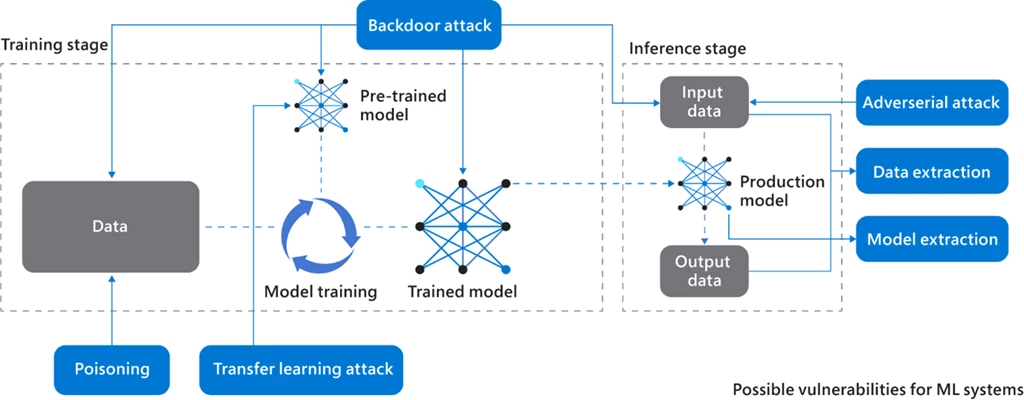

Figure 1 shows possible attacks on a machine learning system during the training and inference stages.

Figure 1: Vulnerabilities of a machine learning system.

To learn more about how these attacks are carried out, check out the Engineering MLOps book. Here are some key approaches and tests for securing your machine learning systems against these attacks:

Homomorphic encryption

Homomorphic encryption is a type of encryption that allows calculations to be performed directly on encrypted data. Decrypting the result of such a calculation gives the same value as performing the calculation on the unencrypted inputs.

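For example, with an additively homomorphic scheme, decrypt(encrypt(x) + encrypt(y)) = x + y, where the + between the two ciphertexts stands for the scheme's addition operation on encrypted values.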
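The additive property can be tried out with a toy version of the Paillier cryptosystem, which is one well-known additively homomorphic scheme. This is a minimal sketch for illustration only: the choice of Paillier, the tiny primes, and the function names (`encrypt`, `decrypt`, `add_encrypted`) are assumptions made here and do not come from the post or from any Azure API. Note that in Paillier the "addition" of two ciphertexts is carried out by multiplying them.

```python
import math
import secrets

# Toy parameters (assumption): real systems use primes of 1024+ bits
# and a vetted cryptography library, never hand-rolled code like this.
P, Q = 1009, 1013
N = P * Q
N_SQ = N * N
G = N + 1                                           # common simple generator choice
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)   # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                                # modular inverse (Python 3.8+)


def encrypt(m: int) -> int:
    """Encrypt an integer 0 <= m < N under the toy public key (N, G)."""
    while True:
        r = secrets.randbelow(N - 1) + 1            # random r in [1, N - 1]
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Recover the plaintext using the private values LAM and MU."""
    u = pow(c, LAM, N_SQ)
    return ((u - 1) // N * MU) % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying two ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N_SQ


x, y = 17, 25
total = decrypt(add_encrypted(encrypt(x), encrypt(y)))
print(total)  # 42
```

The party that calls `add_encrypted` never sees `x`, `y`, or their sum in the clear; only the holder of the private values can decrypt the result.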

Privacy by design

Privacy by design is an approach for embedding privacy, fairness, and transparency into the design of information technology, networked infrastructure, and business practices. It provides a set of principles for achieving these goals from the start rather than adding them later, which helps to prevent data breaches and attacks.

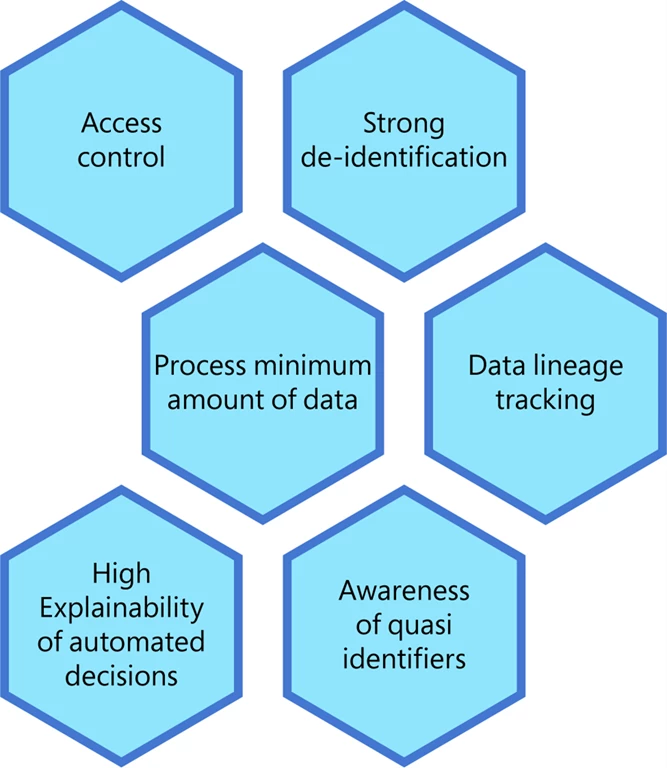

Figure 2: Privacy by design for machine learning systems.

Figure 2 depicts some core foundations to consider when building a privacy by design–driven machine learning system. Let’s reflect on some of these key areas:

- Maintaining strong access control is a basic requirement.

- Utilizing robust de-identification techniques (for example, pseudonymization) for personal identifiers, together with data aggregation and encryption, is critical.

- Securing personally identifiable information and data minimization are crucial. This involves collecting and processing the smallest amounts of data possible in terms of the personal identifiers associated with the data.

- Understanding, documenting, and displaying data as it travels from data sources to consumers is known as data lineage tracking. This covers all of the changes to the data along the way, including how the data was transformed, what changed, and why. In a data analytics process, data lineage provides visibility and makes it considerably easier to trace data breaches, mistakes, and root causes.

- Being able to explain and justify automated decisions when needed is vital for compliance and fairness. Strong explainability mechanisms are required to interpret automated decisions.

- Avoiding quasi-identifiers and non-unique identifiers (for example, gender, postcode, occupation, or languages spoken) is best practice, as they can be used to re-identify persons when combined.
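Three of the points above (pseudonymization, data minimization, and awareness of quasi-identifiers) can be sketched in a few lines. This is an illustrative sketch under stated assumptions: the field names, the allow-list, the placeholder key, and the helper functions are all hypothetical, and keyed hashing (HMAC-SHA256) is just one possible pseudonymization technique.

```python
import hashlib
import hmac
from collections import Counter

# Hypothetical secret: in practice, load it from a secrets manager.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Hypothetical allow-list of the fields the model actually needs.
ALLOWED_FIELDS = {"user_id", "postcode", "occupation", "purchase_total"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict) -> dict:
    """Keep only allow-listed fields and pseudonymize the direct identifier."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    kept["user_id"] = pseudonymize(str(kept["user_id"]))
    return kept


def smallest_group(records: list, quasi_identifiers: tuple) -> int:
    """Size of the rarest quasi-identifier combination (the k in k-anonymity)."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return min(groups.values())


raw = [
    {"user_id": "alice@example.com", "name": "Alice", "postcode": "00100",
     "occupation": "nurse", "purchase_total": 40.0},
    {"user_id": "bob@example.com", "name": "Bob", "postcode": "00100",
     "occupation": "nurse", "purchase_total": 12.5},
    {"user_id": "carol@example.com", "name": "Carol", "postcode": "00200",
     "occupation": "pilot", "purchase_total": 99.0},
]

cleaned = [minimize(r) for r in raw]
k = smallest_group(cleaned, ("postcode", "occupation"))
print(sorted(cleaned[0]))  # ['occupation', 'postcode', 'purchase_total', 'user_id']
print(k)  # 1
```

A result of `k = 1` means at least one person is unique on the combination of postcode and occupation, so those fields would need to be generalized or dropped before the data is shared or used for training.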

Because artificial intelligence is evolving quickly, it is critical to build privacy and appropriate technological and organizational safeguards into the process, so that privacy concerns do not stifle its progress but instead lead to beneficial outcomes.

Real-time monitoring for security

Real-time monitoring can help defend against backdoor attacks and adversarial attacks by:

- Monitoring data (input and outputs).

- Monitoring access management efficiently.

- Monitoring telemetry data.

One key solution is to monitor inputs during training or testing. Autoencoders or other classifiers can be used to check the integrity of the model input data, so that it can be sanitized (pre-processed, decrypted, transformed, and so on) before it reaches the model. Efficient monitoring of access management (who gets access, and when and where access is obtained) and of telemetry data can reveal exposed quasi-identifiers and help prevent suspicious attacks.
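As a rough illustration of input monitoring, the sketch below flags inference inputs that fall far outside the distribution seen during training. It deliberately swaps in a simple per-feature z-score check as a stand-in for the autoencoders or classifiers mentioned above; the `InputMonitor` class, the threshold of 4.0, and the sample data are assumptions made for this example.

```python
import statistics


class InputMonitor:
    """Flags feature values that fall far outside the training distribution."""

    def __init__(self, training_rows, threshold=4.0):
        columns = list(zip(*training_rows))
        self.means = [statistics.fmean(c) for c in columns]
        # Guard against zero spread so the z-score stays defined.
        self.stdevs = [statistics.pstdev(c) or 1e-9 for c in columns]
        self.threshold = threshold

    def suspicious_features(self, row):
        """Return the indexes of features whose z-score exceeds the threshold."""
        return [
            i
            for i, (v, m, s) in enumerate(zip(row, self.means, self.stdevs))
            if abs(v - m) / s > self.threshold
        ]


# Hypothetical training data with two numeric features per row.
training = [[5.1, 3.5], [4.9, 3.0], [5.0, 3.4], [5.2, 3.6], [4.8, 3.1]]
monitor = InputMonitor(training)

print(monitor.suspicious_features([5.0, 3.3]))  # []
print(monitor.suspicious_features([5.0, 9.0]))  # [1]
```

In a deployed system, a non-empty result could be logged as telemetry and used to reject or quarantine the request before it reaches the model.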

Learn more

For further details and to learn about hands-on implementation, check out the Engineering MLOps book, or learn how to build and deploy a model in Azure Machine Learning using MLOps in the Get Time to Value with MLOps Best Practices on-demand webinar. Also, check out our recently announced blog about solution accelerators (MLOps v2) to simplify your MLOps workstream in Azure Machine Learning.