Advancing global network reliability through intelligent software—part 2 of 2

“Microsoft’s global network connects over 60 Azure regions, over 220 Azure data centers, over 170 edge sites, and spans the globe with more than 165,000 miles of terrestrial and subsea fiber. The global network connects to the rest of the internet via peering at our strategically placed edge points of presence (PoPs) around the world. Every day, millions of people around the globe access Microsoft Azure, Office 365, Dynamics 365, Xbox, Bing and many other Microsoft cloud services. This translates to trillions of requests per day and terabytes of data transferred each second on our global network. It goes without saying that the reliability of this global network is critical, so I’ve asked Principal Program Manager Mahesh Nayak and Principal Software Engineer Umesh Krishnaswamy to write this two-part post in our Advancing Reliability series. They explain how we’ve approached our network design, and how we’re constantly working to improve both reliability and performance.”—Mark Russinovich, CTO, Azure

Read Advancing global network reliability through intelligent software part 1.

In part one of this networking post, we presented the key design principles of our global network and explored how we emulate changes, our zero-touch operations and change automation, and capacity planning. In part two, we start with traffic management. For a large-scale network like ours, traditional hardware-managed traffic routing would not be efficient. Instead, we have developed several software-based solutions to intelligently manage traffic engineering in our global network.

SDN-based Internet Traffic Engineering (ITE)

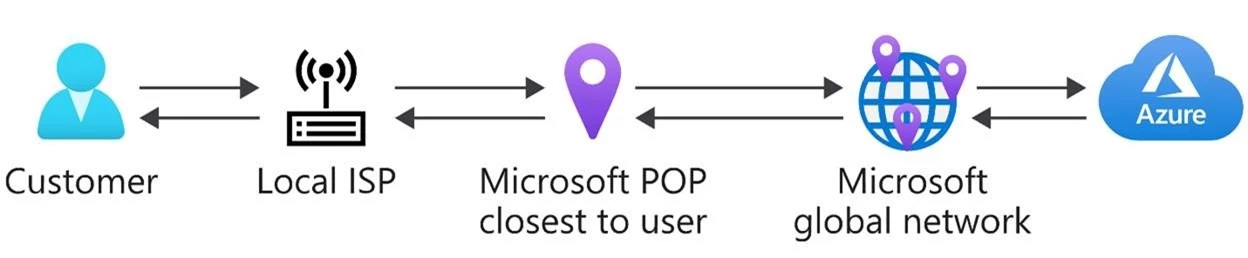

The edge is the most dynamic part of the global network—because the edge is how users connect to Microsoft’s services. We have strategically deployed edge PoPs close to users, to improve customer/network latency and extend the reach of Microsoft cloud services.

For example, if a user in Sydney, Australia accesses Azure resources hosted in Chicago, USA, their traffic enters the Microsoft network at an edge PoP in Sydney, then travels on our network to the service hosted in Chicago. The return traffic from Azure in Chicago flows back to Sydney on the Microsoft network. By accepting and delivering the traffic at the point closest to the user, we can better control the performance.

Each edge PoP is connected to tens or hundreds of peering networks. Routes between our network and providers’ networks are exchanged using the Border Gateway Protocol (BGP). BGP best path selection has no inherent concept of congestion or performance, nor is BGP capacity-aware. So, we developed an SDN-based Internet Traffic Engineering (ITE) system that steers traffic at the edge. The entry and exit points are dynamically altered based on the traffic load of the edge, internet partners’ capacity constraints, reductions or augments in capacity, demand spikes (sometimes caused by distributed denial of service attacks), and the latency performance of our internet partners. The ITE controller constantly monitors these signals and alters the routes we advertise to our internet partners and/or the routes advertised inside the Microsoft network, to select the best peer-edge.
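As a rough illustration of the kind of decision described above, the following Python sketch picks an egress peer by first filtering out peers without capacity headroom and then preferring the lowest measured latency. The `Peer` fields, the 80 percent utilization ceiling, and the `pick_egress` function are hypothetical names and values chosen for illustration; they are not the ITE controller's actual data model or policy.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Peer:
    name: str
    capacity_gbps: float  # usable capacity of the peering link
    load_gbps: float      # traffic currently on the link
    latency_ms: float     # measured latency to the destination via this peer


def pick_egress(peers: list[Peer], demand_gbps: float,
                max_utilization: float = 0.8) -> Optional[Peer]:
    """Return the lowest-latency peer that can absorb the extra demand.

    A peer is eligible only if adding the demand keeps its utilization at
    or below max_utilization (an assumed policy knob, not a real setting).
    """
    eligible = [
        p for p in peers
        if p.capacity_gbps > 0
        and (p.load_gbps + demand_gbps) / p.capacity_gbps <= max_utilization
    ]
    if not eligible:
        return None  # no headroom anywhere; a real system needs a fallback
    return min(eligible, key=lambda p: p.latency_ms)


peers = [
    Peer("peer-a", capacity_gbps=100, load_gbps=78, latency_ms=12.0),
    Peer("peer-b", capacity_gbps=100, load_gbps=40, latency_ms=15.0),
    Peer("peer-c", capacity_gbps=40, load_gbps=10, latency_ms=30.0),
]
choice = pick_egress(peers, demand_gbps=5)
```

Here `peer-a` has the best latency but would exceed the utilization ceiling, so the sketch selects `peer-b`. Plain BGP best path selection has no way to make this trade-off, because it sees neither load nor latency.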

Optimizing last mile resilience with Azure Peering Service

In addition to optimizing routes within our global network, the Azure Peering Service extends the optimized connectivity to the last mile in the networks of Internet Service Providers (ISPs). Azure Peering Service is a collaboration platform with providers that enables reliable, high-performing connectivity from users to the Microsoft network. The partnership ensures local and geo redundancy, and proximity to the end users. Each peering location is provisioned with redundant and diverse peering links. Also, providers interconnect at multiple Microsoft PoP locations so that if one of the edge nodes has degraded performance, the traffic routes to and from Microsoft via alternative sites. Internet performance telemetry from Map of Internet (MOI) drives traffic steering for optimized last mile performance.

Route Anomaly Detection and Remediation (RADAR)

The internet runs on BGP. A network or autonomous system is bound to trust, accept, and propagate the routes advertised by its peers without questioning their provenance. That is the strength of BGP, and it allows the internet to update quickly and heal failures. But it is also its weakness: the path to prefixes owned by a network can be changed, by accident or with malicious intent, to redirect, intercept, or blackhole traffic. Such incidents happen to every major provider, and some make front-page news. We developed a global Route Anomaly Detection and Remediation (RADAR) system to protect our global network.

RADAR detects and mitigates hijacks of Microsoft routes on the internet. A BGP route leak is the propagation of routing announcements beyond their intended scope. RADAR detects route leaks in Azure and the internet. It can identify stable versus unstable versions of a route and validate new announcements. Using RADAR and the ITE controller, we built real-time protection for Microsoft prefixes. The Peering Service platform extends the route monitoring and protection against hijacks, leaks, and any other BGP misconfiguration (intended or not) in the last mile up to the customer location.
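To make the idea of validating announcements concrete, here is a minimal Python sketch of one common check: comparing the origin AS of an observed announcement against the origin expected for a prefix the operator owns. It is an illustration only, not RADAR's actual logic, and it does not cover route leaks. The `EXPECTED_ORIGINS` table and the `classify` function are hypothetical; the prefixes and AS numbers come from the ranges reserved for documentation.

```python
import ipaddress

# Hypothetical table: prefixes we own and the origin AS expected for each.
EXPECTED_ORIGINS = {
    ipaddress.ip_network("203.0.113.0/24"): 64500,
    ipaddress.ip_network("198.51.100.0/24"): 64500,
}


def classify(prefix: str, as_path: list[int]) -> str:
    """Classify an observed announcement against the expected-origin table.

    Returns "ok", "origin-hijack", "subprefix-hijack", or "unrelated".
    """
    announced = ipaddress.ip_network(prefix)
    origin = as_path[-1]  # the last AS in the path originated the route
    for owned, expected in EXPECTED_ORIGINS.items():
        if announced.version != owned.version:
            continue  # subnet_of() requires networks of the same IP version
        if announced == owned:
            return "ok" if origin == expected else "origin-hijack"
        if announced.subnet_of(owned):
            # A more-specific prefix attracts traffic even while the
            # legitimate covering route is still being advertised.
            return "ok" if origin == expected else "subprefix-hijack"
    return "unrelated"


verdicts = [
    classify("203.0.113.0/24", [64496, 64500]),
    classify("203.0.113.0/24", [64496, 64501]),
    classify("203.0.113.128/25", [64501]),
]
```

The sub-prefix case matters because routers prefer the longest matching prefix, so a hijacker does not need to displace the legitimate route to capture traffic.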

Software-driven Wide Area Network (SWAN)

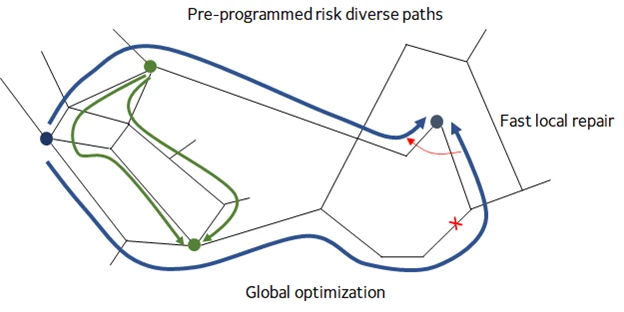

The backbone of the global network is analogous to a highway system connecting major cities. The SWAN controller is effectively the navigation system that assigns the routes for each vehicle, such that every vehicle reaches its destination as soon as possible and without causing congestion on the highways. The system consists of topology discovery, demand prediction, path computation, optimization, and route programming.

Over the last 12 months, the speed at which the controller programs the network improved by an order of magnitude, and the route-finding capability improved two-fold. Link failures are like lane closures, so the controller must recompute routes to decrease congestion. The controller uses the same shared risk link groups (SRLGs) to compute backup routes in case of failure of the primary routes. The backup routes activate immediately upon failure, and the controller gets to work on reoptimizing traffic placement. Links that go up and down in rapid succession are held back from service until they stabilize.
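The role of SRLGs in backup route computation can be sketched as follows. This Python example finds a shortest primary path, then computes a backup that avoids every SRLG the primary uses. It is a simplified two-pass approach under assumed data structures (links as `(node_a, node_b, cost, srlg)` tuples, with hypothetical conduit names), not the SWAN controller's algorithm; a greedy two-pass search can miss a valid pair in some topologies.

```python
import heapq
from itertools import count


def shortest_path(links, src, dst, banned_srlgs=frozenset()):
    """Dijkstra over bidirectional links, skipping links in a banned SRLG.

    Returns (path_nodes, srlgs_used), or None if dst is unreachable.
    """
    adj = {}
    for a, b, cost, srlg in links:
        if srlg in banned_srlgs:
            continue
        adj.setdefault(a, []).append((b, cost, srlg))
        adj.setdefault(b, []).append((a, cost, srlg))

    tiebreak = count()  # keeps heap entries comparable when costs are equal
    heap = [(0, next(tiebreak), src, [src], frozenset())]
    seen = set()
    while heap:
        dist, _, node, path, used = heapq.heappop(heap)
        if node == dst:
            return path, used
        if node in seen:
            continue
        seen.add(node)
        for nxt, cost, srlg in adj.get(node, []):
            if nxt not in seen:
                heapq.heappush(
                    heap,
                    (dist + cost, next(tiebreak), nxt, path + [nxt], used | {srlg}),
                )
    return None


def primary_and_backup(links, src, dst):
    """Return (primary_path, backup_path); backup shares no SRLG with primary."""
    primary = shortest_path(links, src, dst)
    if primary is None:
        return None, None
    path, used = primary
    backup = shortest_path(links, src, dst, banned_srlgs=used)
    return path, (backup[0] if backup else None)


links = [
    ("A", "B", 1, "conduit-1"),
    ("B", "D", 1, "conduit-2"),
    ("A", "C", 2, "conduit-1"),  # different link, same conduit as A-B
    ("C", "D", 2, "conduit-3"),
    ("A", "E", 5, "conduit-4"),
    ("E", "D", 5, "conduit-5"),
]
primary, backup = primary_and_backup(links, "A", "D")
```

In this example the path through `C` shares no link with the primary path through `B`, but it shares a conduit, so a single fiber cut could take down both. The SRLG-aware backup goes through `E` instead, even though it costs more.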

One measure of reliability is the percentage of successfully transmitted bytes to requested bytes, measured over an hour and averaged for the day. Ours is 99.999 percent or better for customer workloads. All communication between Microsoft services is through our dedicated global network. The ThousandEyes Cloud Performance Benchmark reports that over 99 percent of Azure inter-region latencies are faster than the performance baseline, and over 60 percent of region pairs are at least 10 percent faster. This is a result of the capacity augments and software systems described in this post.
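One way to read the reliability measure defined above is as a mean of hourly delivery ratios. The Python sketch below computes it that way; the function name and the sample byte counts are made up for illustration, and the exact aggregation used in production may differ.

```python
def daily_delivery_percent(hourly: list[tuple[int, int]]) -> float:
    """Average the hourly ratio of transmitted to requested bytes.

    hourly holds one (transmitted_bytes, requested_bytes) pair per hour.
    Hours with no requested bytes are skipped rather than counted as 100%.
    """
    ratios = [tx / req for tx, req in hourly if req > 0]
    if not ratios:
        raise ValueError("no hours with requested traffic")
    return 100.0 * sum(ratios) / len(ratios)


# Illustrative day: 23 clean hours and one hour that lost 240 bytes
# out of every million requested.
hourly = [(1_000_000, 1_000_000)] * 23 + [(999_760, 1_000_000)]
percent = daily_delivery_percent(hourly)  # approximately 99.999
```

Averaging hourly ratios weights every hour equally, so a bad hour during low traffic counts as much as one at peak. A volume-weighted alternative would divide total transmitted bytes by total requested bytes for the day.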

Bandwidth Broker—software-driven Network Quality of Service (QoS)

If the global network is a highway system, Bandwidth Broker is the system that controls the metering lights at the onramps of highways. For every customer vehicle, there is more than one Microsoft vehicle traversing the highway. Some of the Microsoft vehicles are discretionary and can be deferred to avoid congestion for customer vehicles. Customer vehicles always have a free pass to enter the highways. The metering lights are green in normal operation but when there is a failure or a demand spike, Bandwidth Broker turns on the metering lights in a controlled manner. Microsoft internal workloads are divided into traffic tiers, each with a different priority. Higher priority workloads are admitted in preference to lower priority workloads.

Brokering occurs at the sending host. Hosts periodically request bandwidth on behalf of applications running on them. The requests are aggregated by the controller, bandwidth is reserved, and grants are disseminated to each host. Bandwidth Broker and SWAN coordinate to adjust traffic volume to match routes, and traffic routes to match volume.
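The request, aggregate, and grant cycle can be sketched in Python as below. Customer demand is taken off the top, then internal tiers are admitted in strict priority order. Splitting a tier's shortfall proportionally across its hosts is an assumption made for this sketch, as are the function name and the `(host, tier, gbps)` request shape; the source does not describe how Bandwidth Broker divides bandwidth within a tier.

```python
def grant_bandwidth(capacity_gbps: float, customer_gbps: float,
                    requests: list[tuple[str, int, float]]) -> dict:
    """Grant bandwidth to internal workloads after customer traffic.

    requests holds (host, tier, requested_gbps); a lower tier number means
    higher priority. Returns {(host, tier): granted_gbps}.
    """
    # Customer traffic always gets a free pass; brokering applies to the rest.
    remaining = max(capacity_gbps - customer_gbps, 0.0)
    grants = {}
    for tier in sorted({t for _, t, _ in requests}):
        tier_requests = [(h, g) for h, t, g in requests if t == tier]
        total = sum(g for _, g in tier_requests)
        # Fully admit the tier if it fits; otherwise scale every request in
        # the tier by the same factor (assumed policy for this sketch).
        share = 1.0 if total <= remaining else remaining / total
        for host, gbps in tier_requests:
            grants[(host, tier)] = grants.get((host, tier), 0.0) + gbps * share
        remaining = max(remaining - total * share, 0.0)
    return grants


grants = grant_bandwidth(
    capacity_gbps=100.0,
    customer_gbps=60.0,
    requests=[("h1", 0, 20.0), ("h2", 1, 30.0), ("h3", 1, 10.0)],
)
```

With 40 Gbps left after customer traffic, the tier 0 request is granted in full, and the two tier 1 hosts split the remaining 20 Gbps in proportion to what they asked for (15 and 5 Gbps).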

It is possible to experience multiple fiber cuts or failures that suddenly reduce network capacity. Geo-replication operations to increase resilience can cause a huge surge in network traffic. Bandwidth Broker generally allows us to preserve the customer experience during these conditions by shedding discretionary internal workloads when congestion is imminent.

Continuous monitoring

A robust monitoring solution is the foundation for achieving higher network reliability. It lowers both the time to detect and the time to repair. The monitoring pipelines constantly analyze several telemetry streams including traffic statistics, health signals, logs, and device configurations. When anomalies are detected, the pipelines automatically collect more data, or diagnose and remediate common failures. These automated interventions are also guarded by safety check systems.

Major investments in monitoring have been:

- Polling and ingestion of metrics data at sub-minute speeds. A few samples are needed to filter transients and a few more to generate a strong signal. This leads to faster detection times.

- An enhanced diagnostics system that, when triggered by packet loss or latency alerts, instructs agents at different vantage points to collect additional information to help triangulate and pinpoint the issue to a specific link or device.

- Enhanced diagnostics trigger auto-mitigation and remediation actions for the most common incidents, with the help of Clockwerk and Real Time Operation Checker (ROC). This translates to faster time to repair and has the ripple effect of keeping engineers focused on more complex incidents.
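The link between polling speed and detection time in the first item above can be illustrated with a small Python sketch. It raises an alert only after a metric breaches a threshold for several consecutive samples, which filters one-off transients. The class name, the 1 percent loss threshold, and the three-sample requirement are hypothetical values chosen for the example.

```python
class ConsecutiveBreachDetector:
    """Alert only after `required` consecutive samples exceed `threshold`."""

    def __init__(self, threshold: float, required: int) -> None:
        self.threshold = threshold
        self.required = required
        self._streak = 0

    def observe(self, value: float) -> bool:
        # A single sample back under the threshold resets the streak, so
        # isolated spikes never accumulate into an alert.
        self._streak = self._streak + 1 if value > self.threshold else 0
        return self._streak >= self.required


detector = ConsecutiveBreachDetector(threshold=1.0, required=3)
loss_percent = [0.0, 2.5, 0.1, 3.0, 3.2, 2.8, 0.0]
alerts = [detector.observe(sample) for sample in loss_percent]
```

The lone spike at the second sample is ignored, and the alert fires on the third consecutive breach. Because the rule needs a fixed number of samples, detection time scales with the polling interval: under this rule, a hypothetical 10-second interval would detect a sustained fault in about 30 seconds, while a 5-minute interval would take 15 minutes.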

Other pipelines continuously monitor network graphs for node isolation, and periodically assess risks with “what-if” intent using ROC as described above. We have multiple canary agents deployed throughout the network checking reachability, latency, and packet loss across our regions. This includes agents within Azure, as well as outside of our network, to enable outside-in monitoring. We also periodically analyze Map of Internet (MOI) telemetry to measure end-to-end performance from customers to Azure. Finally, we have robust monitoring in place to protect the network from security attacks such as BGP route hijacks and distributed denial of service (DDoS) attacks.
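A "what-if" check for node isolation can be approximated with a simple graph search. The Python sketch below removes each link in turn and reports which nodes would lose reachability to a reference node. It is a generic single-failure analysis with hypothetical node names, not a description of how ROC or the monitoring pipelines are implemented.

```python
from collections import deque


def reachable(links, start):
    """Return the set of nodes reachable from start over undirected links."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adj.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def isolating_links(links, core):
    """Map each link to the nodes cut off from core if that link fails."""
    nodes = {n for link in links for n in link}
    risky = {}
    for i, link in enumerate(links):
        remaining = links[:i] + links[i + 1:]  # what-if: this link is down
        cut_off = nodes - reachable(remaining, core)
        if cut_off:
            risky[link] = cut_off
    return risky


links = [
    ("core", "r1"),
    ("core", "r2"),
    ("r1", "r2"),
    ("r2", "edge1"),
]
risks = isolating_links(links, "core")
```

Here only the `r2` to `edge1` link is a single point of failure; every other link has an alternate path around it. Running such a check before a planned change shows whether taking a link out of service would strand a node.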

Conclusion

We have open-sourced some of these SDN technologies, such as SONiC. SONiC and its rapidly growing ecosystem of hardware and software partners enable intelligent new ways to operate and manage the network, and faster evolution of the network itself. Also, we have built services such as ExpressRoute Global Reach, Azure Virtual WAN, Global VNET Peering, and Azure Peering Service that empower you to build your own overlay on top of the Microsoft global network.

We are continually investing in and enhancing our global network resilience with software and hardware innovations. Our optical fiber investments have improved resilience, performance, and reliability both within and across continents. The MAREA submarine cable system is the industry’s first subsea Open Line System (OLS). It has become a blueprint for all subsequent submarine cable builds, which now follow the OLS construct. The ability to upgrade these systems more easily and maintain a homogeneous platform across all parts of our network allows Microsoft to seamlessly keep pace with growing demand.
