Lighting up network innovation

Driving up network speed, reducing cost, saving power, expanding capacity, and automating management become crucial when you run one of the world’s largest cloud infrastructures. Microsoft has invested heavily in optical technology to meet these needs for its Azure network infrastructure. The goal is to provide faster, less expensive, and more reliable service to customers, and at the same time, enable the networking industry to benefit from this work. We’ve been collaborating with industry leaders to develop optical solutions that add more capacity for metro area, long-haul, and even undersea cable deployments. We have integrated these optical solutions within network switches and manage them through Software Defined Networking (SDN).

Our goal was to provide 500 percent more optical capacity at one-tenth the power and in a fraction of the previous footprint, all at a lower cost than is possible with traditional systems. Microsoft chose to ignore the chicken-and-egg problem and create demand for 100 Gbps optics in a stagnant ecosystem that was unable to meet the demands of cloud computing. In this blog, we explain the improvements we’ve made and where we’re boldly heading next.

Optical innovation leadership

We began thinking about how to more efficiently move network traffic between cloud datacenters, both within metro areas and over long distances around the world. We homed in on fiber optics, or “optics,” as an area where we could innovate, and decided to invest in our own optical program to integrate all optics into our network switching platforms.

What do we mean when we talk about optics? Optics is the means for transmitting network traffic between our global datacenters. Copper cabling has been the traditional means of carrying data and is still a significant component of server racks within the datacenter. However, moving beyond the rack at high bandwidth (for example, 100 Gbps and more) requires optical technologies. Optical links, light over fiber, replace copper wires to extend the reach and bandwidth of network connections.

Optical transmitters “push” pulses of light through fiber optic cables, converting high-speed electrical transmission signals from a network switch to optical signals over fiber. Optical receivers convert the signals back to electrical at the far end of the cable. This makes it possible to interconnect high-speed switching systems tens of kilometers apart in metro areas, or thousands of kilometers apart between regional datacenters.

To connect devices within the datacenter, each device has its own dedicated fiber. Since the light’s optical wavelength, or color, is isolated by the fiber, the same color can be reused on every connection. By standardizing on a single color, optic manufacturers can lower costs through high-volume manufacturing. However, single-color optics carry a high fiber cost, because every connection needs its own fiber, and that cost grows as distances increase beyond 500 meters. This cost is manageable in intra-datacenter implementations where distances are shorter, but the fiber used for inter-datacenter connections, both metro and long-haul, is much more expensive.
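To make the fiber-cost trade-off concrete, the sketch below compares the fiber needed when every link gets its own dedicated fiber pair with the fiber needed when several colors share one pair. The link count, distances, and colors-per-pair value are illustrative assumptions, not figures from the Azure network, and the function name is hypothetical.

```python
import math


def fiber_pair_km(links: int, distance_km: float, colors_per_pair: int = 1) -> float:
    """Total fiber-pair kilometers needed to carry `links` connections.

    colors_per_pair=1 models single-color optics (one dedicated pair per link).
    Larger values model colored optics sharing a pair; the value used below
    is an illustrative assumption, not a product specification.
    """
    if links < 0 or distance_km < 0 or colors_per_pair < 1:
        raise ValueError("links and distance must be >= 0, colors_per_pair >= 1")
    pairs = math.ceil(links / colors_per_pair)
    return pairs * distance_km


# Hypothetical scenario: 40 links at two different distances.
LINKS = 40
for label, km in [("intra-datacenter", 0.5), ("metro", 80.0)]:
    single = fiber_pair_km(LINKS, km)                      # one color per pair
    shared = fiber_pair_km(LINKS, km, colors_per_pair=40)  # assumed 40 colors per pair
    print(f"{label:>16}: single-color {single:7.1f} pair-km, shared {shared:5.1f} pair-km")
```

Under these assumptions the dedicated-fiber approach needs 40 times more fiber in both cases, but the absolute amount only becomes significant at metro distances, which is the pattern the paragraph above describes.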

Cost

We focused on optics because their cost can be 10x the cost of the switch port, and even more for ultra-long-haul links. We began by looking for partners to collaborate on ultra-high integration of optics into new commodity switching platforms to break this pattern. Simultaneously, we developed open line systems (OLS) for both metro and ultra-long-haul transmission solutions to accept the cost-optimized optical sources. Microsoft partnered with several networking suppliers, including Arista, Cisco, and Juniper, to integrate these optics with substantially reduced power, a very small footprint, and much lower cost, creating a highly interoperable ecosystem.

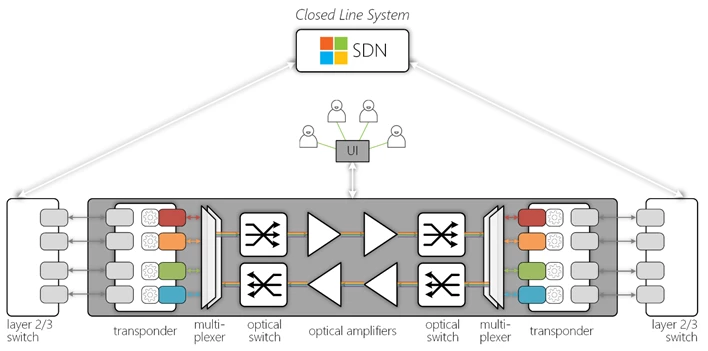

Figure 1. Traditional closed line system

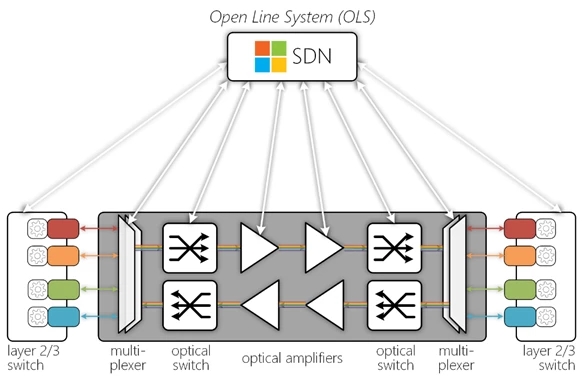

In the past, suppliers have tried to integrate optics directly into switches, but these attempts didn’t include SDN capabilities. SDN is what enables network operators to orchestrate the optical sources and line system with switches and routers. By innovating with the OLS concept, including interfaces to the SDN controller, we can successfully build optics directly into commodity switches and make them fully interoperable. By integrating optics into the switch, we can easily manage and automate the entire solution at a large scale.

With recent OLS advances, we’re also able to achieve 70 percent more spectral efficiency for ultra–long-haul connections between datacenters in distant regions. This approach has drastically cut costs, more than doubling the capacity between datacenters by combining narrower channel spacing with new modulation techniques that offer 150–200 Gbps per channel.

Figure 2. Open line system (OLS)
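The relationship between channel spacing, per-channel rate, and total capacity can be sketched with simple arithmetic. In the example below, the 37.5 GHz spacing and 104-channel count are taken from the title of one of the technical papers listed later in this post, and the 150–200 Gbps rates come from the text above. The 100 Gbps at 50 GHz baseline is an assumption chosen for illustration, so the ratios it prints are not meant to reproduce the 70 percent figure, which depends on the actual baseline.

```python
def spectral_efficiency(rate_gbps: float, spacing_ghz: float) -> float:
    """Bits per second per hertz carried by one channel."""
    return rate_gbps / spacing_ghz


def fiber_capacity_tbps(band_ghz: float, spacing_ghz: float, rate_gbps: float) -> float:
    """Aggregate capacity when whole channels are packed into the band."""
    channels = int(band_ghz // spacing_ghz)
    return channels * rate_gbps / 1000.0


# Usable spectrum: 104 channels at 37.5 GHz spacing (from the paper title).
BAND_GHZ = 104 * 37.5

scenarios = {
    "assumed baseline, 100G @ 50 GHz": (50.0, 100.0),  # assumption, not from the post
    "150G @ 37.5 GHz": (37.5, 150.0),
    "200G @ 37.5 GHz": (37.5, 200.0),
}

for name, (spacing, rate) in scenarios.items():
    se = spectral_efficiency(rate, spacing)
    cap = fiber_capacity_tbps(BAND_GHZ, spacing, rate)
    print(f"{name:<32} {se:5.2f} b/s/Hz  {cap:5.1f} Tb/s")
```

With the assumed baseline, narrowing the spacing fits 104 channels where 78 fit before, and the higher per-channel rate multiplies that gain, which is how the two changes combine to at least double capacity over the same fiber.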

Power

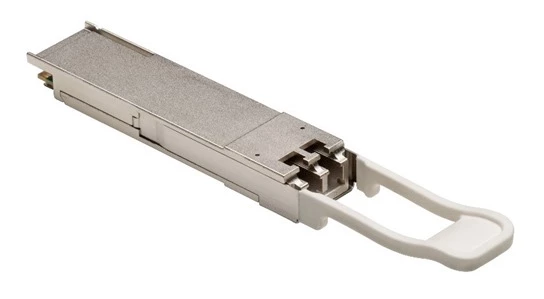

In cloud-scale datacenters, power usage is a major consideration and can limit the overall capacity of the solution. As such, we developed a new type of inexpensive 100 Gbps colored optic that fits into a tiny, industry-standard QSFP28 package and covers distances within a metro area, along with the metro OLS needed to support it. This solution completely replaces expensive long-haul optics for distances up to 80 km.

Due to miniaturization, integration, and innovations in both ultra–long-haul and metro optics, network operators can take advantage of these new approaches and use a fraction of the power (up to 10x less) while giving customers up to 500 percent more capacity. We’ve expanded capacity and spectral efficiency at a lower overall cost, in both capital and operating expenses, than our current systems.

Space

The physical equipment necessary to connect datacenters between regions takes up a large amount of space in some of the most expensive datacenter real estate. Optical equipment often dominates limited rack space. The equipment necessary to connect datacenters within a region can also require a large amount of space and can limit the number of servers that can be deployed.

By integrating the metro optics and long-haul optics into commodity switching platforms, we’ve reduced the total space needed for optical equipment to just a few racks. In turn, this creates space for more switching equipment and more capacity. By miniaturizing optics, we’ve reduced the overall size of the metro switching equipment to half of its previous footprint, while still offering 500 percent more capacity.

Figure 3. Inphi’s ColorZ® product—large-scale integration of metro-optimized optics into a standard switch-pluggable QSFP28 package

Automation

Microsoft is focused on simplicity and efficiency in monitoring and maintenance in cloud datacenters. We recognized that a further opportunity to serve the industry lay in full automation of our optical systems to provide reliable capacity at scale and on demand.

Monitoring for legacy systems can’t distinguish optical defects from switching defects. This can result in delays in diagnosing and repairing hardware failures. For the optical space, this has historically been a manual process.

We saw that we could solve this problem by fully integrating optics into commodity switches and making them accessible with our SDN monitoring and automation tooling. By driving an open and optimized OLS model for optical networking equipment, we’ve ensured that the proper interfaces are present to integrate optical operations into SDN orchestration. Now automation can quickly mitigate defects across all networking layers, including service repair, with end-to-end workflow management. The industry benefits from this because optics monitoring and mitigations can now keep pace with cloud scale and growth patterns.
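As a rough illustration of why it helps to expose optical readings next to switch readings, the sketch below classifies a port fault as optical or switching once both kinds of telemetry are available in one place. This is a hypothetical example: the field names, thresholds, and classification rule are assumptions made for illustration and do not describe Microsoft’s SDN tooling or any vendor’s interface.

```python
from dataclasses import dataclass
from enum import Enum


class FaultLayer(Enum):
    NONE = "none"
    OPTICAL = "optical"
    SWITCHING = "switching"


@dataclass(frozen=True)
class PortTelemetry:
    """Hypothetical per-port readings; field names are illustrative."""
    rx_power_dbm: float  # received optical power
    pre_fec_ber: float   # bit error rate before forward error correction
    link_up: bool        # switch reports the interface as up
    frame_errors: int    # frame errors counted by the switch


# Illustrative thresholds, not vendor specifications.
MIN_RX_POWER_DBM = -20.0
MAX_PRE_FEC_BER = 1e-3


def classify(t: PortTelemetry) -> FaultLayer:
    """Attribute a fault to the optical layer first, then to the switch."""
    optical_bad = t.rx_power_dbm < MIN_RX_POWER_DBM or t.pre_fec_ber > MAX_PRE_FEC_BER
    if optical_bad:
        return FaultLayer.OPTICAL
    if not t.link_up or t.frame_errors > 0:
        return FaultLayer.SWITCHING
    return FaultLayer.NONE


samples = {
    "healthy port": PortTelemetry(-8.0, 1e-6, True, 0),
    "weak light": PortTelemetry(-25.0, 5e-3, False, 120),
    "good light, bad frames": PortTelemetry(-8.0, 1e-6, True, 42),
}
for name, reading in samples.items():
    print(f"{name:<24} -> {classify(reading).value}")
```

Without the optical fields, the second and third samples would both appear only as a port with errors, which is the ambiguity that legacy monitoring leaves for manual diagnosis.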

Industry impact

Microsoft has incorporated all these technologies into the Azure network, but the industry at large will benefit. For example, findings from ACG Research show that the Microsoft metro solution will result in a more than 65 percent reduction in Total Cost of Ownership. In addition, the research demonstrates power savings of more than 70 percent over 5 years.

Several of our partners are making available the building blocks of the Microsoft implementation of open optical systems. For example:

- Cisco and Arista provide the integration of ultra–long-haul optics into their cloud switching platforms.

- If your switches don’t support optical integration, several suppliers offer dense, ultra–long-haul solutions that enable disaggregation of optics from the OLS in the form of pizza boxes.

- ADVA Optical Networking provides open metro OLS solutions that support Inphi ColorZ® optics and several other turnkey alternatives.

- Most ultra–long-haul line systems have supported International Telecommunication Union–defined alien wavelengths (optical sources) for quite some time. Talk to your supplier for additional details.

If you’re interested in the deep, technical details behind these innovations, you can read the following technical papers:

- Interoperation of Layer-2/3 Modular Switches with 8QAM/16QAM Integrated Coherent Optics over 2000 km Open Line System

- Demonstration and Performance Analysis of 4 Tb/s DWDM Metro-DCI System with 100G PAM4 QSFP28 Modules

- Transmission Performance of Layer-2/3 Modular Switch with mQAM Coherent ASIC and CFP2-ACOs over Flex-Grid OLS with 104 Channels Spaced 37.5 GHz

- Open Undersea Cable Systems for Cloud Scale Operation

Opening new frontiers of innovation

As these innovations in optics demonstrate, Microsoft is developing unique networking solutions and opening our advances for the benefit of the entire industry. Microsoft is working with our partners to bring even more integration, miniaturization, and power savings into future 400 Gbps interconnects that will power our network.
