Azure Load Balancer becomes more efficient

Azure introduced an advanced, more efficient Load Balancer platform in late 2017. This platform adds a whole new set of capabilities for customer workloads that use the new Standard Load Balancer. One of the key additions the new platform brings is simplified, more predictable, and more efficient outbound connectivity management.

This capability is already integrated with Standard Load Balancer, and we are now bringing it to the rest of Azure deployments. In this blog, we explain what it is and how it makes life better for all our customers. In particular, we focus on how outbound connectivity behaves before and after platform integration, because this is a very important design point for our customers.

Load Balancer and Source NAT

Azure deployments use one or more of three scenarios for outbound connectivity, depending on the customer’s deployment model and the resources utilized and configured. Azure uses Source Network Address Translation (SNAT) to enable these scenarios. When multiple private IP addresses or roles share the same public IP address (a public IP address assigned to a Load Balancer and used for outbound rules, or a public IP address automatically assigned to standalone virtual machines), Azure uses port masquerading SNAT (PAT) to translate private IP addresses to the public IP address, using the ephemeral ports of the public IP address. PAT does not apply when Instance Level Public IP addresses (ILPIP) are assigned.
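To make port masquerading concrete, here is a toy Python model of a PAT table for one shared public IP address. It is a hypothetical illustration, not Azure’s implementation: the class `PatTable`, the documentation-range addresses, and the choice to hand out one public port per flow are all assumptions (a real SNAT implementation may reuse a public port for flows to different destinations; this sketch conservatively does not).

```python
from dataclasses import dataclass, field

FlowKey = tuple[str, int, str, int]  # (private IP, private port, dest IP, dest port)


@dataclass
class PatTable:
    """Toy PAT table for a single shared public IP (hypothetical model)."""
    public_ip: str
    free_ports: list[int]                          # ephemeral ports not yet in use
    mappings: dict[FlowKey, int] = field(default_factory=dict)

    def translate(self, private_ip: str, private_port: int,
                  dest_ip: str, dest_port: int) -> tuple[str, int]:
        """Return the (public IP, public port) used for this outbound flow."""
        key = (private_ip, private_port, dest_ip, dest_port)
        if key in self.mappings:                   # existing flow keeps its SNAT port
            return self.public_ip, self.mappings[key]
        if not self.free_ports:                    # every ephemeral port is taken
            raise RuntimeError("SNAT port exhaustion")
        port = self.free_ports.pop(0)              # masquerade with the next free port
        self.mappings[key] = port
        return self.public_ip, port


pat = PatTable("203.0.113.10", free_ports=list(range(1024, 1028)))
# Two private IPs using the same private source port share one public IP
print(pat.translate("10.0.0.4", 50000, "198.51.100.7", 443))  # ('203.0.113.10', 1024)
print(pat.translate("10.0.0.5", 50000, "198.51.100.7", 443))  # ('203.0.113.10', 1025)
```

The two flows are distinguished only by the public port chosen for them, which is why the supply of ephemeral ports on the shared address is the resource being managed.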

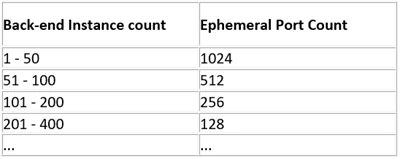

Where multiple instances share a public IP address, each instance behind an Azure Load Balancer VIP is pre-allocated a fixed number of ephemeral ports for PAT (SNAT ports), which are needed to masquerade outbound flows. The number of pre-allocated ports per instance is determined by the size of the backend pool; see the section on SNAT algorithms below for details.
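A minimal sketch of the pool-size lookup follows. The tier boundaries in `POOL_TIERS` are an assumption taken from the default pre-allocation table in the Azure Load Balancer outbound connections documentation (single frontend IP configuration); check the current documentation before relying on them. The function name is hypothetical.

```python
import bisect

# Assumed default tiers: (largest backend pool size in the tier, SNAT ports
# pre-allocated per instance) for one frontend IP. Verify against current docs.
POOL_TIERS = [(50, 1024), (100, 512), (200, 256), (400, 128), (800, 64), (1000, 32)]


def preallocated_snat_ports(pool_size: int) -> int:
    """Return the default SNAT ports pre-allocated to each backend instance."""
    if pool_size < 1:
        raise ValueError("backend pool must contain at least one instance")
    bounds = [upper for upper, _ in POOL_TIERS]
    i = bisect.bisect_left(bounds, pool_size)  # first tier whose bound >= pool_size
    if i == len(POOL_TIERS):
        raise ValueError("pool size exceeds the assumed tier table")
    return POOL_TIERS[i][1]


for size in (10, 50, 51, 300, 1000):
    print(size, preallocated_snat_ports(size))
# 10 1024, 50 1024, 51 512, 300 128, 1000 32
```

Crossing a tier boundary, for example scaling from 50 to 51 instances, halves each instance’s pre-allocation in this model; that is the kind of backend pool boundary change that the comparison table associates with port re-allocation.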

Differences between legacy and new SNAT algorithms

The platform improvements also changed how the SNAT algorithm works in Azure. The table below compares these allocation modes and their properties side by side.

| Property | Legacy SNAT port allocation (legacy Basic SKU deployments) | New SNAT port allocation (recent Basic SKU deployments and Standard SKU deployments) |
|---|---|---|
| Applicability | Services deployed before September 2017 use this allocation mode. | Services deployed after September 2017 use this allocation mode. |
| Pre-allocation | 160 (a smaller number for tenants larger than 300 instances) | For SNAT port allocation according to the backend pool size and the pool boundaries, see SNAT port pre-allocation. If outbound rules are used, the pre-allocation equals the ports defined in the outbound rules. If the ports are exhausted, a subset of instances will not be allocated any SNAT ports. |
| Max ports | Dynamic, on-demand allocation of a small number of ports until all are exhausted (the total SNAT port count available for one frontend IP address is 65,535, shared across all backend instances). No throttling of requests. | For Basic SKU resources, all available SNAT ports are allocated dynamically on demand, with some throttling of requests (per instance per second). No dynamic allocation of ports is done for Standard SKU resources. |
| Scale up | Port re-allocation is done. Existing connections might drop on re-allocation. | Static SNAT ports are always allocated to the new instance. If the backend pool boundary changes, or ports are exhausted, port re-allocation is done. Existing connections might drop on re-allocation. |
| Scale down | Port re-allocation is done. | If the backend pool boundary changes, port re-allocation is done to allocate additional ports to all instances. |
| Use cases | | |
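The outbound-rules behavior in the table (a fixed pre-allocation per instance, with some instances left without ports once the frontend runs out) can be modeled as below. This is a hypothetical sketch: the function and parameter names are illustrative, instances are served in list order, and the per-IP total is a parameter; the usage line plugs in the 65,535 figure quoted in the table.

```python
def allocate_with_outbound_rule(instances: list[str], ports_per_instance: int,
                                frontend_ips: int, ports_per_ip: int) -> dict[str, int]:
    """Give each instance the fixed block of SNAT ports defined by a
    (hypothetical) outbound rule until the frontend ports run out; any
    instance left over receives no SNAT ports at all."""
    remaining = frontend_ips * ports_per_ip
    allocation: dict[str, int] = {}
    for name in instances:
        if remaining >= ports_per_instance:
            allocation[name] = ports_per_instance
            remaining -= ports_per_instance
        else:
            allocation[name] = 0  # exhausted: no partial blocks are handed out
    return allocation


vms = [f"vm{i}" for i in range(8)]
print(allocate_with_outbound_rule(vms, ports_per_instance=10_000,
                                  frontend_ips=1, ports_per_ip=65_535))
# vm0..vm5 get 10,000 ports each; vm6 and vm7 get 0
```

Adding a frontend IP (`frontend_ips=2`) in this model is enough to cover all eight instances, which is the usual lever when a fixed per-instance allocation leaves some instances without ports.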

Platform integration and impact on SNAT port allocation

We’re working on integrating the two platforms to improve reliability and efficiency and to enable capabilities such as telemetry and SKU upgrades for customers. As a result of this integration, all users across Azure will be moved to the new SNAT port allocation algorithm. This integration is in progress and is expected to finish before spring 2020.

What type of SNAT allocation do I get after platform integration?

Let’s categorize these into different scenarios:

- Legacy SNAT port allocation is the older mode of SNAT port allocation and is used by deployments made before September 2017. This mode statically allocates a small number of SNAT ports (160) to instances behind a Load Balancer and relies on SNAT failures and dynamic on-demand allocations afterwards.

  - After platform integration, these deployments will be moved to the new SNAT allocation in the new platform, as described in the table above. However, we’ll ensure a port allocation equal to a maximum of in the new platform after migration.

- The new SNAT port allocation mode in the older platform was introduced in early 2018. This mode is the same as the new SNAT port allocation mode described above.

  - After platform integration, these deployments will remain unchanged, preserving the SNAT port allocation from the older platform.
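The legacy behavior described above (a static block of 160 ports, then small on-demand top-ups after SNAT failures) can be sketched as a toy model. The function name and the top-up size `increment=8` are hypothetical; the post only says that a small number of ports is allocated on demand.

```python
def legacy_ports_held(demand: int, shared_free: int, static_ports: int = 160,
                      increment: int = 8) -> tuple[int, int]:
    """Toy model of legacy SNAT allocation for one instance.

    The instance starts with `static_ports`. Whenever it needs more ports than
    it holds (a SNAT failure in the real system), it receives a small on-demand
    `increment` (hypothetical size) from the ports still free on the shared
    frontend IP, until demand is met or the shared pool runs out.
    Returns (ports held by the instance, ports left in the shared pool).
    """
    held = static_ports
    while held < demand and shared_free >= increment:
        shared_free -= increment
        held += increment
    return held, shared_free


print(legacy_ports_held(demand=400, shared_free=10_000))  # (400, 9760)
```

In this model, the 240 ports above the static 160 exist only because of on-demand top-ups; ports obtained this way are the ones the next section refers to as received via dynamic allocation.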

How does it impact my services or my existing outbound flows?

- In the majority of cases, where instances consume fewer SNAT ports than the default pre-allocation, there will be no impact on existing flows.

- In a small number of customer deployments that use a significantly higher number of SNAT ports (received via dynamic allocation), a portion of the flows that depend on additional dynamic port allocation might be dropped temporarily. This should auto-correct within a few seconds.
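To gauge whether an instance falls into the second group, one rough check is to compare the SNAT ports each instance is using against its static pre-allocation. The sketch below assumes you already have both numbers per instance from your own monitoring; the type and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class InstanceSnatUsage:
    name: str
    ports_in_use: int   # SNAT ports currently used by outbound flows
    preallocated: int   # static pre-allocation the instance is entitled to


def flows_at_risk(instances: list[InstanceSnatUsage]) -> dict[str, int]:
    """Return, per instance, how many in-use SNAT ports exceed the static
    pre-allocation, i.e. ports that depend on additional dynamic allocation."""
    return {
        i.name: i.ports_in_use - i.preallocated
        for i in instances
        if i.ports_in_use > i.preallocated
    }


usage = [InstanceSnatUsage("vm0", 300, 1024), InstanceSnatUsage("vm1", 1500, 1024)]
print(flows_at_risk(usage))  # {'vm1': 476}
```

An empty result suggests the instance set is in the first group; any non-empty entry marks an instance whose excess flows are the kind described as possibly dropped for a few seconds.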

What should I do right now?

Review and familiarize yourself with the scenarios and patterns described in Managing SNAT port exhaustion for guidance on how to design reliable and scalable scenarios.

How do I ensure no disruption during an upcoming critical period?

The platform integration and the resulting change in port allocation algorithm are an Azure platform-level change. However, we understand that you are running critical production workloads in Azure and want to ensure that service logic changes at this level are not applied during critical periods, so as to avoid any service disruption. In such scenarios, please create a Load Balancer support case from the portal with your deployment information, and we’ll work with you to ensure no disruption to your services.