At Build, Microsoft expands its Cognitive Services collection of intelligent APIs

This blog post was authored by the Microsoft Cognitive Services Team.

Microsoft Cognitive Services enables developers to augment the next generation of applications with the ability to see, hear, speak, understand, and interpret needs using natural methods of communication.

Today at the Build 2017 conference, we are excited to announce the next big wave of innovation for Microsoft Cognitive Services, significantly increasing the value for developers looking to embrace AI and build the next generation of applications.

- Customizable: With the addition of Bing Custom Search, Custom Vision Service and Custom Decision Service on top of Custom Speech and Language Understanding Intelligent Service, we now have a broader set of custom AI APIs available, allowing customers to use their own data with algorithms that are customized for their specific needs.

- Cutting-edge technologies: Today we are launching Microsoft’s Cognitive Services Labs, which allow any developer to take part in the broader research community’s quest to better understand the future of cognitive computing, by experimenting with new services still in the early stages of development. One of the first AI services being made available via our Cognitive Services Labs is Project Prague, which lets you use gestures to control and interact with technologies to have more intuitive and natural experiences. This cutting-edge, easy-to-use SDK is in private preview.

- High pace of innovation: We’re expanding our Cognitive Services portfolio to 29 intelligent APIs with the addition of Video Indexer, Custom Decision Service, Bing Custom Search, and Custom Vision Service, along with the new Cognitive Services Lab Project Prague, for gestures, and updates to our existing Cognitive Services, such as Bing Search, Microsoft Translator and Language Understanding Intelligent Service.

Today, 568,000+ developers from more than 60 countries are using Microsoft Cognitive Services, which allow systems to see, hear, speak, understand and interpret our needs.

What are the capabilities of these new services?

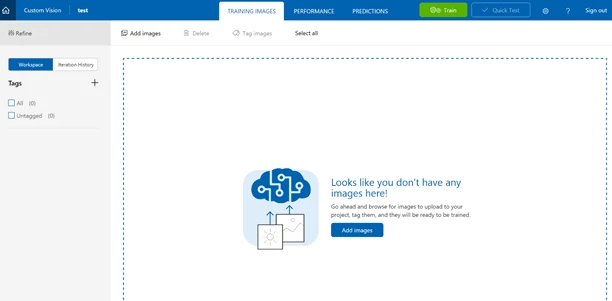

- Custom Vision Service, available today in free public preview, is an easy-to-use, customizable web service that learns to recognize specific content in imagery, powered by state-of-the-art machine learning neural networks that become smarter with training. You can train it to recognize whatever you choose, whether that be animals, objects, or abstract symbols. This technology could easily apply to retail environments for machine-assisted product identification, or to digital spaces to help sort pictures into categories automatically.

- Video Indexer, available today in free public preview, is one of the industry’s most comprehensive video AI services. It helps you unlock insights from any video by indexing it so that you can search the spoken audio (transcribed and translated), the sentiment, the faces that appear, and the objects shown. With these insights, you can improve the discoverability of videos in your applications or increase user engagement by embedding this capability in your sites. All of these capabilities are available through a simple set of APIs, ready-to-use widgets, and a management portal.

- Custom Decision Service, available today in free public preview, is a service that helps you create intelligent systems with a cloud-based contextual decision-making API that adapts with experience. Custom Decision Service uses reinforcement learning in a new approach to personalizing content; it plugs into your application and helps make decisions in real time, automatically adapting to optimize your metrics over time.

- Bing Custom Search, available today in free public preview, lets you create a highly-customized web search experience, which delivers better and more relevant results from your targeted web space. Featuring a straightforward User Interface, Bing Custom Search enables you to create your own web search service without a line of code. Specify the slices of the web that you want to draw from and explore site suggestions to intelligently expand the scope of your search domain. Bing Custom Search can empower businesses of any size, hobbyists and entrepreneurs to design and deploy web search applications for any possible scenario.

- Microsoft’s Cognitive Services Labs allow any developer to experiment with new services still in the early stages of development. Among them, Project Prague is one of the services currently in private preview. This SDK is built from an extensive library of hand poses and creates more intuitive experiences by allowing users to control and interact with technologies through typical hand movements. A special camera records the gestures; the API then recognizes the formation of the hand and lets the developer tie in-app actions to each gesture.

- The next version of the Bing APIs, available in public preview, allows developers to bring the vast knowledge of the web to their users and benefit from improved performance, new sorting and filtering options, robust documentation, and easy Quick Start guides. This release includes the full suite of Bing Search APIs (Bing Web Search API Preview, Bing News Search API Preview, Bing Video Search API Preview, and Bing Image Search API Preview), Bing Autosuggest API Preview, and Bing Spell Check API Preview. Please find more information in the announcement blog.

- Presentation Translator, a Microsoft Garage project, provides presenters the ability to add subtitles to their presentations, in the same language for accessibility scenarios or in another language for multi-language situations. Audience members get subtitles in their desired language on their own device through the Microsoft Translator app, in a browser and (optionally) translate the slides while preserving their formatting. Click here to be notified when it’s available.

- Language Understanding Intelligent Service (LUIS) improvements – helps developers integrate language models that understand users quickly and easily, using either prebuilt or customized models. Updates to LUIS include increased intents and entities, introduction of new powerful developer tools for productivity, additional ways for the community to use and contribute, improved speech recognition with Microsoft Bot Framework, and more global availability.

Let’s take a closer look at what these new APIs and Services can do for you.

Bring custom vision to your app

Thanks to Custom Vision Service, it is easy to create your own image recognition service. You can use the Custom Vision Service Portal to upload a series of images to train your classifier, plus a few images to test it once it is trained.

It’s also possible to code each step. Let’s say I need to quickly create an image classifier for a specific need: products my users upload to my website, retail merchandise, or even images of animals in a forest.

- To get started, I need the Custom Vision API, which can be found with this SDK. I also need to create a console application and prepare the training key and the images needed for the example.

I can start with Visual Studio to create a new Console Application, and replace the contents of Program.cs with the following code. This code defines and calls two helper methods:

- The method called GetTrainingKey prepares the training key.

- The one called LoadImagesFromDisk loads two sets of images that this example uses to train the project, and one test image that the example loads to demonstrate the use of the default prediction endpoint.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Threading;
using Microsoft.Cognitive.CustomVision;

namespace SmokeTester
{
    class Program
    {
        // Training images for each tag, plus one image used to test the prediction endpoint
        private static List<MemoryStream> hemlockImages;
        private static List<MemoryStream> japaneseCherryImages;
        private static MemoryStream testImage;

        static void Main(string[] args)
        {
            // You can either add your training key here, pass it on the command line, or type it in when the program runs
            string trainingKey = GetTrainingKey("", args);

            // Create the Api, passing in a credentials object that contains the training key
            TrainingApiCredentials trainingCredentials = new TrainingApiCredentials(trainingKey);
            TrainingApi trainingApi = new TrainingApi(trainingCredentials);

            // Load the training images and the test image from disk; they are uploaded in a later step
            Console.WriteLine("\tUploading images");
            LoadImagesFromDisk();
        }

        private static string GetTrainingKey(string trainingKey, string[] args)
        {
            if (string.IsNullOrWhiteSpace(trainingKey))
            {
                // Fall back to the first command-line argument, if there is one
                if (args.Length >= 1)
                {
                    trainingKey = args[0];
                }

                // Keep prompting until a 32-character key is entered
                while (string.IsNullOrWhiteSpace(trainingKey) || trainingKey.Length != 32)
                {
                    Console.Write("Enter your training key: ");
                    trainingKey = Console.ReadLine();
                }

                Console.WriteLine();
            }

            return trainingKey;
        }

        private static void LoadImagesFromDisk()
        {
            // This loads the images to be uploaded from disk into memory
            hemlockImages = Directory.GetFiles(@"..\..\..\..\..\SampleImages\Hemlock").Select(f => new MemoryStream(File.ReadAllBytes(f))).ToList();
            japaneseCherryImages = Directory.GetFiles(@"..\..\..\..\..\SampleImages\Japanese Cherry").Select(f => new MemoryStream(File.ReadAllBytes(f))).ToList();
            testImage = new MemoryStream(File.ReadAllBytes(@"..\..\..\..\..\SampleImages\Test\test_image.jpg"));
        }
    }
}
```

- As the next step, I need to create a Custom Vision Service project by adding the following code to the Main() method, after the call to LoadImagesFromDisk().

```csharp
// Create a new project
Console.WriteLine("Creating new project:");
var project = trainingApi.CreateProject("My New Project");
```

- Next, I need to add tags to my project by inserting the following code after the call to CreateProject().

```csharp
// Make two tags in the new project
var hemlockTag = trainingApi.CreateTag(project.Id, "Hemlock");
var japaneseCherryTag = trainingApi.CreateTag(project.Id, "Japanese Cherry");
```

- Then, I need to upload the in-memory images to the project by inserting the following code at the end of the Main() method:

```csharp
// Images can be uploaded one at a time
foreach (var image in hemlockImages)
{
    trainingApi.CreateImagesFromData(project.Id, image, new List<string>() { hemlockTag.Id.ToString() });
}

// Or uploaded in a single batch
trainingApi.CreateImagesFromData(project.Id, japaneseCherryImages, new List<Guid>() { japaneseCherryTag.Id });
```

- Now that I’ve added tags and images to the project, I can train it. I would need to insert the following code at the end of Main(). This creates the first iteration in the project. I can then mark this iteration as the default iteration.

```csharp
// Now that there are images with tags, start training the project
Console.WriteLine("\tTraining");
var iteration = trainingApi.TrainProject(project.Id);

// The returned iteration will be in progress, and can be queried periodically to see when it has completed
while (iteration.Status == "Training")
{
    Thread.Sleep(1000);

    // Re-query the iteration to get its updated status
    iteration = trainingApi.GetIteration(project.Id, iteration.Id);
}

// The iteration is now trained. Make it the default project endpoint
iteration.IsDefault = true;
trainingApi.UpdateIteration(project.Id, iteration.Id, iteration);
Console.WriteLine("Done!\n");
```

- As I’m now ready to use the model for prediction, I first obtain the endpoint associated with the default iteration; then I send a test image to the project using that endpoint. Insert the code below at the end of Main().

```csharp
// Now that there is a trained endpoint, it can be used to make a prediction

// Get the prediction key, which is used in place of the training key when making predictions
var account = trainingApi.GetAccountInfo();
var predictionKey = account.Keys.PredictionKeys.PrimaryKey;

// Create a prediction endpoint, passing in a prediction credentials object that contains the obtained prediction key
PredictionEndpointCredentials predictionEndpointCredentials = new PredictionEndpointCredentials(predictionKey);
PredictionEndpoint endpoint = new PredictionEndpoint(predictionEndpointCredentials);

// Make a prediction against the new project
Console.WriteLine("Making a prediction:");
var result = endpoint.PredictImage(project.Id, testImage);

// Loop over each prediction and write out the results
foreach (var c in result.Predictions)
{
    Console.WriteLine($"\t{c.Tag}: {c.Probability:P1}");
}

// Keep the console window open until a key is pressed
Console.ReadKey();
```

- As a last step, let’s build and run the solution: the prediction results appear on the console.
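Printing every tag is fine for a demo, but an application such as a website that accepts product photos usually needs a single answer. The sketch below, written in JavaScript with a web front end in mind, picks the most probable tag and falls back to `null` when the classifier is not confident. `pickTag`, the 0.5 threshold, the object shape, and the sample probabilities are illustrative assumptions; only the `Tag` and `Probability` names are borrowed from the C# loop above.

```javascript
// Pick the most probable tag, or null when nothing clears the threshold.
// The prediction shape ({ Tag, Probability }) mirrors the C# loop and is assumed here.
function pickTag(predictions, threshold = 0.5) {
  let best = null;
  for (const p of predictions) {
    if (best === null || p.Probability > best.Probability) {
      best = p;
    }
  }
  return best !== null && best.Probability >= threshold ? best.Tag : null;
}

// Made-up values for illustration only.
const sample = [
  { Tag: "Hemlock", Probability: 0.93 },
  { Tag: "Japanese Cherry", Probability: 0.04 },
];
console.log(pickTag(sample));
```

The right threshold depends on the cost of a wrong answer, so it is worth tuning against your own test images rather than reusing the value shown here.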

For more information about Custom Vision Service, please take a look at the following resources:

- The Custom Vision Service portal and webpage

- The full getting-started guides

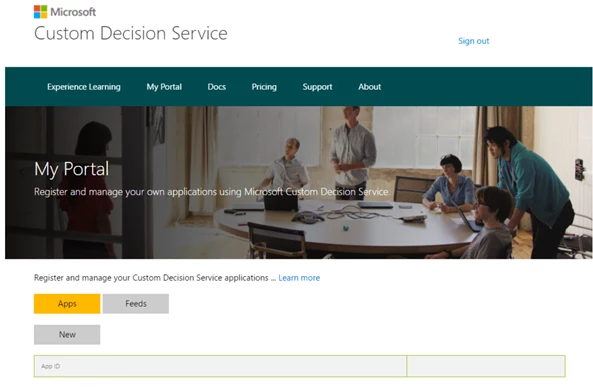

Personalization of your site with Custom Decision Service

With Custom Decision Service, you can personalize content on your website, so that users see the most engaging content for them.

Let’s say I own a news website, with a front page with links to several articles. As the page loads, I want to request Custom Decision Service to provide a ranking of articles to include on the page.

When one of my users clicks on an article, a second request is sent to Custom Decision Service to log the outcome of the decision. The easiest integration mode requires just an RSS feed for the content and a few lines of JavaScript added to the application. Let’s get started!
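Before wiring up the real service, the two-request flow described above (ask for a ranking when the page loads, then log the outcome when a user clicks) can be sketched entirely in memory. This is a minimal sketch in plain JavaScript: `FakeDecisionService`, `rank`, and `reward` are hypothetical names for a local stand-in, not the Custom Decision Service API, and the click-rate ordering is only a placeholder for the reinforcement learning the real service applies.

```javascript
// In-memory stand-in for a ranking service. All names here are hypothetical;
// the real Custom Decision Service is called over HTTP and learns differently.
class FakeDecisionService {
  constructor(articleIds) {
    // Track how often each article was shown and clicked.
    this.stats = new Map(articleIds.map((id) => [id, { shown: 0, clicked: 0 }]));
    this.nextEventId = 1;
    this.pending = new Map(); // eventId -> ranking returned for that page load
  }

  // Observed click-through rate; 0 for articles that were never shown.
  clickRate(id) {
    const s = this.stats.get(id);
    return s.shown === 0 ? 0 : s.clicked / s.shown;
  }

  // First request: return an ordering of articles plus an event id for later logging.
  rank() {
    const ranking = [...this.stats.keys()].sort(
      (a, b) => this.clickRate(b) - this.clickRate(a)
    );
    const eventId = this.nextEventId++;
    ranking.forEach((id) => this.stats.get(id).shown++);
    this.pending.set(eventId, ranking);
    return { eventId, ranking };
  }

  // Second request: log that the user clicked an article from that ranking.
  reward(eventId, articleId) {
    const ranking = this.pending.get(eventId);
    if (!ranking || !ranking.includes(articleId)) return false;
    this.stats.get(articleId).clicked++;
    this.pending.delete(eventId);
    return true;
  }
}

// Simulate one page load, a click on the last-ranked article, then a second page load.
const service = new FakeDecisionService(["sports", "politics", "weather"]);
const firstLoad = service.rank();
service.reward(firstLoad.eventId, "weather");
const secondLoad = service.rank();
console.log(secondLoad.ranking);
```

In this sketch the clicked article moves to the front of the second ranking. The event id is what ties the later click back to the decision that produced it, which is the reason the flow needs two requests rather than one.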

- First, I need to register on the Decision Service Portal by clicking the My Portal menu item in the top ribbon. I can then register the application, choosing a unique identifier. It’s also possible to name an action set feed and give it an endpoint, which currently must be an RSS or Atom feed.

- The basic use of Custom Decision Service is fairly straightforward: the front page will use Custom Decision Service to specify the ordering of the article pages. I just need to insert the following code into the HTML head of the front page.

// Define the "callback function" to render UI

function callback(data) { … }

// call to Ranking API