Azure Machine Learning is the center for all things machine learning on Azure, be it creating new models, deploying models, managing a model repository, or automating the entire CI/CD pipeline for machine learning. We recently made some amazing announcements on Azure Machine Learning, and in this post, I’m taking a closer look at two of the most compelling capabilities that your business should consider when choosing a machine learning platform.

Before we get to the capabilities, let’s get to know the basics of Azure Machine Learning.

What is Azure Machine Learning?

Azure Machine Learning is a managed collection of cloud services, relevant to machine learning, offered in the form of a workspace and a software development kit (SDK). It is designed to improve the productivity of:

- Data scientists who build, train and deploy machine learning models at scale

- ML engineers who manage, track and automate the machine learning pipelines

Azure Machine Learning comprises the following components:

- An SDK that plugs into any Python-based IDE, notebook or CLI

- A compute environment that offers both scale up and scale out capabilities with the flexibility of auto-scaling and the agility of CPU or GPU based infrastructure for training

- A centralized model registry to help keep track of models and experiments, irrespective of where and how they are created

- Managed container service integrations with Azure Container Instances, Azure Kubernetes Service and Azure IoT Hub for containerized deployment of models to the cloud and the IoT edge

- A monitoring service that helps track metrics from models that are registered and deployed via Machine Learning

Let us introduce you to Machine Learning with the help of this video where Chris Lauren from the Azure Machine Learning team showcases and demonstrates it.

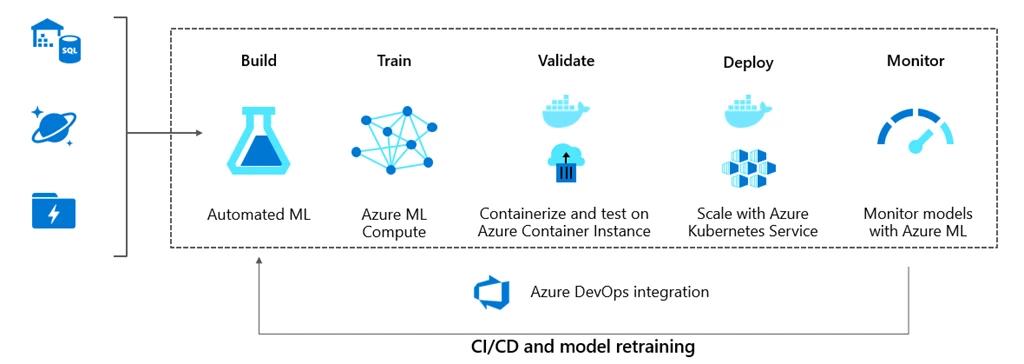

As you see in the video, Azure Machine Learning can cater to workloads of any scale and complexity. The flow below shows the connected car application demonstrated in the video. This is also a canonical pattern for machine learning solutions built on Machine Learning:

Visual: Connected Car demo architecture leveraging Azure Machine Learning

Now that you understand Azure Machine Learning, let’s look at the two capabilities that stand out:

Automated machine learning

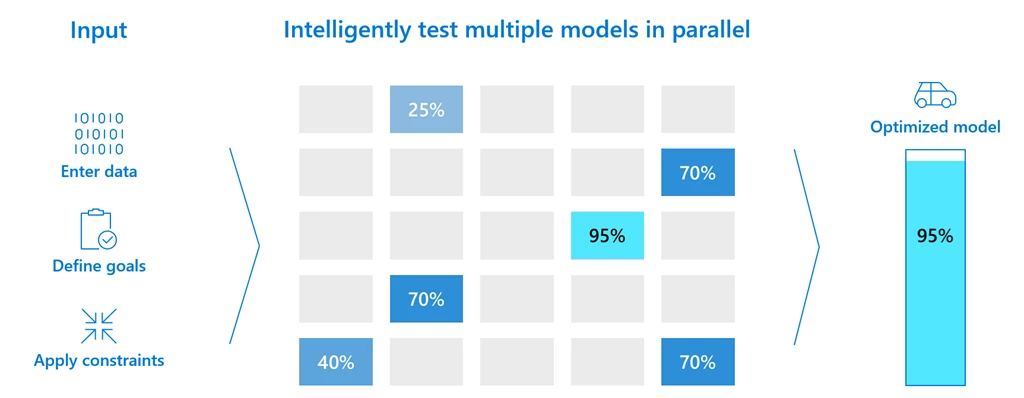

Data scientists spend an inordinate amount of time iterating over models during the experimentation phase. The whole process of trying out different algorithms and hyperparameter combinations until an acceptable model is built is extremely taxing for data scientists, due to the monotonous and unchallenging nature of the work. While this exercise can yield massive gains in model efficacy, it sometimes costs too much in time and resources and thus may have a negative return on investment (ROI).

This is where automated machine learning (ML) comes in. It leverages the concepts from the research paper on Probabilistic Matrix Factorization and implements an automated pipeline that tries out intelligently selected algorithms and hyperparameter settings, based on the heuristics of the data presented and taking into consideration the given problem or scenario. The result of this pipeline is a set of models that are best suited for the given problem and dataset.

Visual: Automated machine learning

Automated ML supports classification, regression, and forecasting and it includes features such as handling missing values, early termination by a stopping metric, blacklisting algorithms you don’t want to explore, and many more to optimize the time and resources.
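
To make the idea of an automated sweep concrete, here is a minimal sketch in plain Python. It is not the Azure Machine Learning implementation: it uses simple random search rather than the probabilistic matrix factorization approach mentioned above, and the scoring functions, the `automated_search` name, and its parameters are all hypothetical stand-ins for real training and validation. It only illustrates three of the features listed: excluding algorithms you don't want to explore, stopping early once a target metric is reached, and returning a ranked set of candidate models.

```python
import random
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical stand-ins for "train a model and return its validation score".
# Each takes one hyperparameter in [0, 1) and returns a made-up score.
def score_linear(alpha: float) -> float:
    return 0.80 - abs(alpha - 0.3) * 0.2

def score_tree(depth: float) -> float:
    return 0.90 - abs(depth - 0.6) * 0.3

def score_knn(k: float) -> float:
    return 0.70 - abs(k - 0.5) * 0.1

CANDIDATES: Dict[str, Callable[[float], float]] = {
    "linear": score_linear,
    "tree": score_tree,
    "knn": score_knn,
}

def automated_search(
    candidates: Dict[str, Callable[[float], float]],
    blocked: Sequence[str] = (),
    max_trials: int = 30,
    target_score: Optional[float] = None,
    seed: int = 0,
) -> List[Tuple[float, str, float]]:
    """Randomly sample (algorithm, hyperparameter) pairs and rank them.

    Returns a leaderboard of (score, algorithm, hyperparameter) tuples,
    best first. Stops early if target_score is reached.
    """
    rng = random.Random(seed)
    allowed = [name for name in candidates if name not in blocked]
    if not allowed:
        raise ValueError("every candidate algorithm is blocked")

    leaderboard: List[Tuple[float, str, float]] = []
    for _ in range(max_trials):
        name = rng.choice(allowed)
        param = rng.random()
        score = candidates[name](param)
        leaderboard.append((score, name, param))
        # Early termination by a stopping metric.
        if target_score is not None and score >= target_score:
            break

    leaderboard.sort(reverse=True)
    return leaderboard

if __name__ == "__main__":
    best_score, best_algo, best_param = automated_search(CANDIDATES, blocked=["knn"])[0]
    print(f"best: {best_algo} (param={best_param:.2f}, score={best_score:.3f})")
```

A real service replaces the random sampling with a smarter strategy for choosing the next trial, but the inputs (a task, constraints, a budget) and the output (a ranked list of models) have the same shape.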

Automated ML is designed to help professional data scientists be more productive and spend their precious time concentrating on specialized tasks such as tuning and optimizing the models, alongside mapping real-world cases to ML problems, rather than spending time in monotonous tasks like trial and error with a bunch of algorithms. Automated ML with its newly introduced UI mode (akin to a wizard) also helps open the doors of machine learning to novice or non-professional data scientists as they can now become valuable contributors in data science teams by leveraging these augmented capabilities and churning out accurate models to accelerate time to market. This ability to expand data science teams beyond the handful of highly specialized data scientists enables enterprises to invest and reap the benefits of machine learning at scale without having to compromise high-value use cases due to the lack of data science talent.

To learn more about automated ML in Azure Machine Learning, explore this automated machine learning article.

Machine learning operations (MLOps)

Creating a model is just one part of an ML pipeline, arguably the easier part. Taking this model to production and reaping the benefits of it is a completely different ball game. One has to be able to package the models, deploy them, track and monitor them across various deployment targets, collect metrics, use these metrics to determine the efficacy of the models, and then enable retraining on the basis of these insights and/or new data. On top of that, all of this needs a mechanism that can be automated, with the right knobs and dials to let data science teams keep tabs on the pipeline and prevent it from going rogue, which could result in considerable business losses, as these models are often linked directly to customer actions.

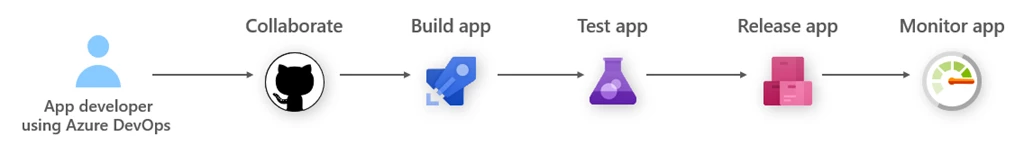

This problem is very similar to what application development teams face when managing apps and releasing new versions of them at regular intervals with improved features and capabilities. App dev teams address this with DevOps, which is the industry standard for managing operations for an app dev cycle. Replicating the same for machine learning cycles is not the easiest task.

Visual: DevOps Process

This is where Azure Machine Learning shines the most. It presents the most complete and intuitive model lifecycle management experience alongside integrating with Azure DevOps and GitHub.

The first task in ML lifecycle management, after a data scientist has created and validated a model or an ML pipeline, is to package it so that it can execute wherever it needs to be deployed. This means that the ML platform needs to enable containerizing the model with all its dependencies, as containers are the default execution unit across scalable cloud services and the IoT edge. Azure Machine Learning provides an easy way for data scientists to package their models with simple commands that track all dependencies, such as conda environments, versioned Python libraries, and other libraries that the model references, so that the model can execute seamlessly within the deployed environment.

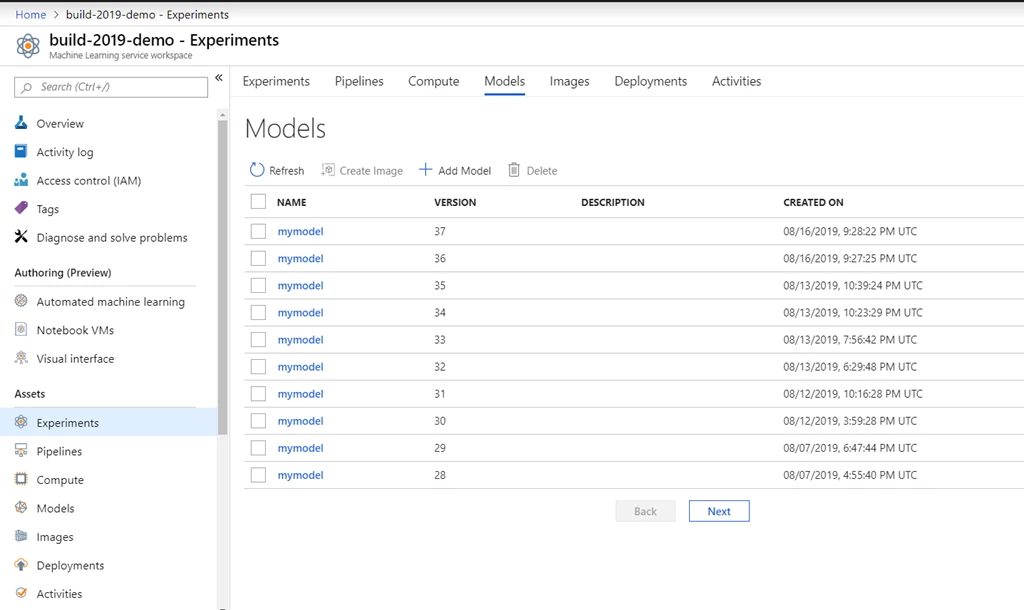

The next step is to version control these models. The code you write, such as Python notebooks or scripts, can easily be version controlled in GitHub, and this is the recommended approach. In addition to the notebooks and scripts, you also need a way to version control the models, which are different entities from the Python files. This is important because data scientists may create multiple versions of a model in search of better accuracy or performance, and very easily lose track of them. Azure Machine Learning provides a central model registry, which forms the foundation of the lifecycle management process. This repository enables version control of models, stores model metrics, allows for one-click deployment, and even tracks all deployments of the models so that you can restrict usage in case a model becomes stale or its efficacy is no longer acceptable. Having this model registry is key, as it also helps trigger other activities in the lifecycle when new changes appear or metrics cross a threshold.

Visual: Model Registry in Azure Machine Learning
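
The responsibilities described above (versioning, metrics, deployment tracking, restricting stale models) can be sketched as a tiny in-memory registry. This is an illustration only, not the Azure Machine Learning registry or its SDK: the `ModelRegistry` and `ModelVersion` classes and all of their methods are hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: Dict[str, float]
    deployments: List[str] = field(default_factory=list)
    enabled: bool = True  # set to False to restrict usage of a stale model

class ModelRegistry:
    """Hypothetical in-memory registry; a real one persists this state."""

    def __init__(self) -> None:
        self._models: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, metrics: Dict[str, float]) -> ModelVersion:
        # Each registration under the same name gets the next version number.
        versions = self._models.setdefault(name, [])
        model = ModelVersion(name, len(versions) + 1, dict(metrics))
        versions.append(model)
        return model

    def get(self, name: str, version: int) -> ModelVersion:
        return self._models[name][version - 1]

    def latest(self, name: str) -> ModelVersion:
        return self._models[name][-1]

    def record_deployment(self, name: str, version: int, target: str) -> None:
        self.get(name, version).deployments.append(target)

    def disable(self, name: str, version: int) -> None:
        self.get(name, version).enabled = False

if __name__ == "__main__":
    registry = ModelRegistry()
    registry.register("churn", {"accuracy": 0.81})
    registry.register("churn", {"accuracy": 0.86})
    registry.record_deployment("churn", 2, "test-container")
    registry.disable("churn", 1)  # version 1 is now considered stale
    print(registry.latest("churn"))
```

Keeping metrics and deployment targets on the version record itself is what lets a registry answer questions like "where is version 1 still running?" before that version is retired.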

Once a model is packaged and registered, it’s time to test the packaged model. Since the package is a container, it is ideal to test it in Azure Container Instances, which provides an easy, cost-effective mechanism to deploy containers. The important thing here is that you don’t have to go outside Azure Machine Learning, as it has built strong links to Azure Container Instances within its workspace. You can easily set up a container instance from within the workspace or, via the SDK, from the IDE where you’re already using Azure Machine Learning. Once you deploy this container to Azure Container Instances, you can easily run inference against the model for testing purposes.

Following a thorough round of testing, it is time to deploy the model into production. Production environments are synonymous with scale, flexibility and tight monitoring capabilities. This is where Azure Kubernetes Service (AKS) can be very useful for container deployments. Because it is a cluster, it provides scale-out capabilities and can be sized to cater to the business’s needs. Again, very much like with Azure Container Instances, Azure Machine Learning provides the capability to set up an AKS cluster from within its workspace or from the user’s IDE of choice.

If your models are sufficiently small and don’t have scale-out requirements, you can also take them to production on Azure Container Instances. Usually, that’s not the case, as models are accessed by end-user applications or many different systems, so planning for scale always helps. Both Azure Container Instances and AKS provide extensive monitoring and logging capabilities.

Once your model is deployed, you want to be able to collect metrics on the model. You want to ascertain that the model is not drifting from its objective and that the inference is useful for the business. This means you capture a lot of metrics and analyze them. Azure Machine Learning enables this tracking of metrics for the model in a very efficient manner. The central model registry becomes the one place where all of this is hosted.
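
As one simple illustration of checking for drift, the sketch below compares a rolling accuracy over recent predictions with the accuracy measured at validation time. It is an assumption-laden example, not how Azure Machine Learning collects or evaluates metrics: the `DriftMonitor` class, its parameters, and the choice of accuracy as the metric are all hypothetical, and it presumes that ground-truth labels eventually become available for deployed predictions.

```python
from collections import deque
from statistics import mean
from typing import Deque, Optional

class DriftMonitor:
    """Flags a model when recent accuracy falls below a baseline."""

    def __init__(self, baseline_accuracy: float,
                 tolerance: float = 0.05, window: int = 100) -> None:
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        self.window = window
        # Only the most recent `window` outcomes are kept.
        self._outcomes: Deque[float] = deque(maxlen=window)

    def record(self, prediction: object, actual: object) -> None:
        self._outcomes.append(1.0 if prediction == actual else 0.0)

    def rolling_accuracy(self) -> Optional[float]:
        return mean(self._outcomes) if self._outcomes else None

    def needs_retraining(self) -> bool:
        # Wait for a full window so a few early errors don't raise an alarm.
        if len(self._outcomes) < self.window:
            return False
        return mean(self._outcomes) < self.baseline_accuracy - self.tolerance

if __name__ == "__main__":
    monitor = DriftMonitor(baseline_accuracy=0.9, tolerance=0.1, window=4)
    for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
        monitor.record(predicted, actual)
    print(monitor.rolling_accuracy(), monitor.needs_retraining())
```

A signal like `needs_retraining()` is the kind of threshold crossing that can feed the retraining step discussed next.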

As you collect more metrics and additional data becomes available for training, there may be a need to retrain the model in the hope of improving its accuracy and/or performance. Also, since this is a process of continuous integration and deployment (CI/CD), it needs to be automated. This process of retraining and effective CI/CD of ML models is the biggest strength of Azure Machine Learning.

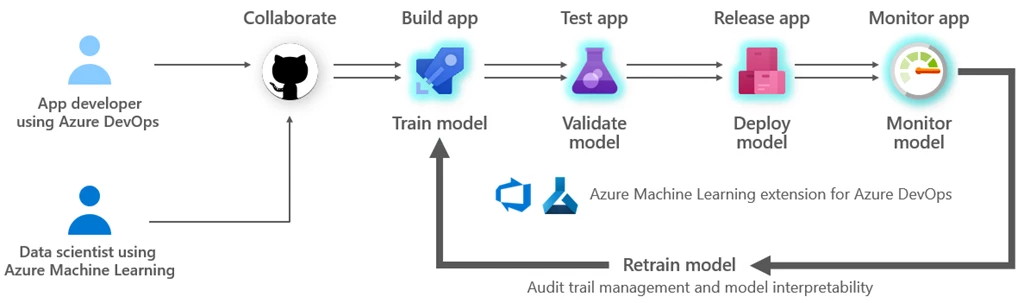

Azure Machine Learning integrates with Azure DevOps so that you can create MLOps pipelines inside the DevOps environment. Azure DevOps has an extension for Azure Machine Learning, which enables it to listen to Azure Machine Learning’s model registry in addition to the code repository maintained in GitHub for the Python notebooks and scripts. This enables you to trigger Azure Pipelines based on new code commits into the code repository or new models published into the model registry. This is extremely powerful, as data science teams can configure stages for build and release pipelines within Azure DevOps for the machine learning models and completely automate the process.
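
The two trigger sources described above can be pictured as a small dispatch function. This is a conceptual sketch only: it does not use Azure Pipelines syntax or any Azure DevOps API, and the `Event` type, the source labels, and the stage names are hypothetical. The assumption is that a code commit starts a build pipeline that retrains and registers a model, while a newly registered model starts a release pipeline that deploys to a test environment and then to production.

```python
from dataclasses import dataclass
from typing import List

@dataclass(frozen=True)
class Event:
    source: str  # hypothetical labels: "code_repo" or "model_registry"
    detail: str  # for example a commit id or "model-name:version"

# Hypothetical stage names for illustration.
BUILD_STAGES = ["train", "evaluate", "register_model"]
RELEASE_STAGES = ["deploy_to_test", "deploy_to_production"]

def stages_for(event: Event) -> List[str]:
    """Return the pipeline stages that an event should trigger."""
    if event.source == "code_repo":
        # New code: rebuild the model, then release it.
        return BUILD_STAGES + RELEASE_STAGES
    if event.source == "model_registry":
        # A new model version already exists: only release it.
        return list(RELEASE_STAGES)
    return []  # unknown sources trigger nothing

if __name__ == "__main__":
    for event in (Event("code_repo", "commit 1a2b3c"),
                  Event("model_registry", "churn:3")):
        print(event.source, "->", stages_for(event))
```

Separating the build stages from the release stages is what allows a model published from outside the code repository to go through the same test and production gates as one produced by a commit.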

What’s more, since Azure DevOps is also the environment for managing app lifecycles, it enables data science teams and app dev teams to collaborate seamlessly and trigger new versions of the apps whenever certain conditions are met in the MLOps cycle. After all, app dev teams are often the ones leveraging the new versions of the ML models, infusing them into apps or updating inference call URLs when desired.

This may sound simple and the most logical way of doing it, but nobody has been able to bring MLOps to life with such close-knit integration into the whole process. Azure Machine Learning does an amazing job of it, enabling data science teams to become immensely productive.

Please see the diagrammatic representation below for MLOps with Azure Machine Learning.

Visual: MLOps with Azure Machine Learning

To learn more about MLOps please visit the Azure Machine Learning documentation on MLOps.

Get started now!

This has been a long post so thank you for your patience, but this is just the beginning. As we observe, Azure Machine Learning presents capabilities that make the entire ML lifecycle a seamless process. With these two features, we’re just scratching the surface of its capabilities as there are many more features to help data scientists and machine learning engineers create, manage, and deploy their models in a much more robust and thoughtful manner.

- Model interpretability: Understand the model and its behavior.

- ONNX runtime support: Deploy models created in the open ONNX format.

- Model telemetry collection: Collect telemetry from live running models.

- Field-programmable gate array (FPGA) inferencing: Score or featurize image data using pre-trained deep neural networks with blazing fast speed and low cost.

- IoT Edge deployment: Deploy models to IoT devices.

And many more to come. Please visit the Getting started guide to start the exciting journey with us!