Over the last couple of years, deep learning has become widely adopted across the Bing search stack and powers a vast number of our intelligent features. We use natural language models to improve our core search algorithm’s understanding of a user’s search intent and the related webpages so that Bing can deliver the most relevant search results to our users. We rely on deep learning computer vision techniques to enhance the discoverability of billions of images even if they don’t have accompanying text descriptions or summary metadata. We leverage machine-based reading comprehension models to retrieve captions within larger text bodies that directly answer the specific questions users have. All these enhancements lead toward more relevant, contextual results for web search queries.

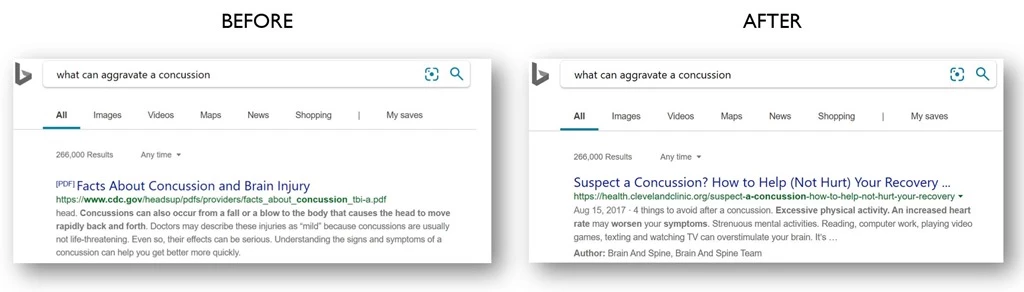

Recently, there was a breakthrough in natural language understanding with a type of model called transformers (as popularized by Bidirectional Encoder Representations from Transformers, BERT). Unlike previous deep neural network (DNN) architectures that processed words individually in order, transformers understand the context and relationship between each word and all the words around it in a sentence. Starting from April of this year, we used large transformer models to deliver the largest quality improvements to our Bing customers in the past year. For example, in the query “what can aggravate a concussion”, the word “aggravate” indicates the user wants to learn about actions to be taken after a concussion and not about causes or symptoms. Our search powered by these models can now understand the user intent and deliver a more useful result. More importantly, these models are now applied to every Bing search query globally making Bing results more relevant and intelligent.

Deep learning at web-search scale can be prohibitively expensive

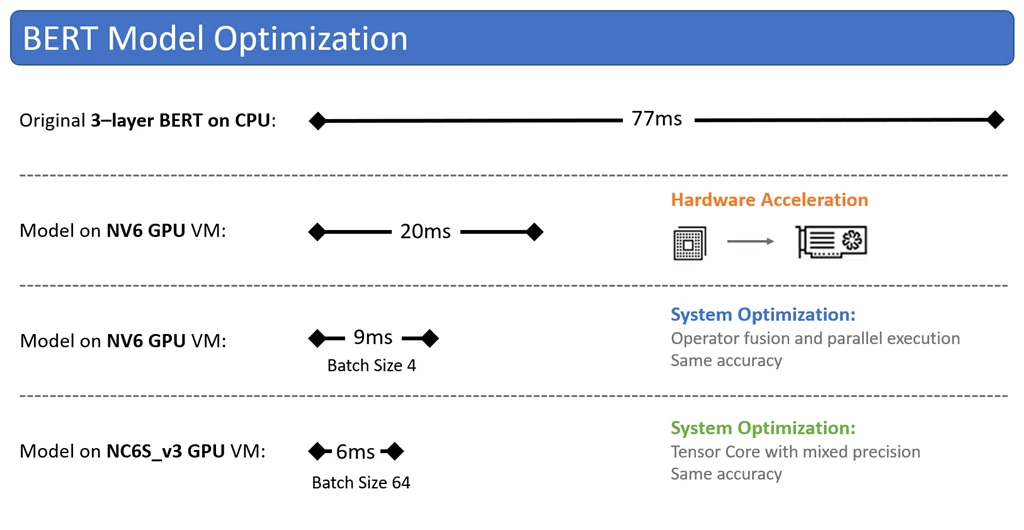

Bing customers expect an extremely fast search experience and every millisecond of latency matters. Transformer-based models are pre-trained with up to billions of parameters, which is a sizable increase in parameter size and computation requirement as compared to previous network architectures. A distilled three-layer BERT model serving latency on twenty CPU cores was initially benchmarked at 77ms per inference. However, since these models would need to run over millions of different queries and snippets per second to power web search, even 77ms per inference remained prohibitively expensive at web search scale, requiring tens of thousands of servers to ship just one search improvement.

Leveraging Azure Virtual Machine GPUs to achieve 800x inference throughput

One of the major differences between transformers and previous DNN architectures is that it relies on massive parallel compute instead of sequential processing. Given that graphics processing unit (GPU) architecture was designed for high throughput parallel computing, Azure’s N-series Virtual Machines (VM) with GPU accelerators built-in were a natural fit to accelerate these transformer models. We decided to start with the NV6 Virtual Machine primarily because of the lower cost and regional availability. Just by running the three-layer BERT model on that VM with GPU, we observed a serving latency of 20ms (about 3x improvement). To further improve the serving efficiency, we partnered with NVIDIA to take full advantage of the GPU architecture and re-implemented the entire model using TensorRT C++ APIs and CUDA or CUBLAS libraries, including rewriting the embedding, transformer, and output layers. NVIDIA also contributed efficient CUDA transformer plugins including softmax, GELU, normalization, and reduction.

We benchmarked the TensorRT-optimized GPU model on the same Azure NV6 Virtual Machine and was able to serve a batch of four inferences in 9ms, an 8x latency speedup and 34x throughput improvement compared to the model without GPU acceleration. We then leveraged Tensor Cores with mixed precision on a NC6s_v3 Virtual Machine to even further optimize the performance, benchmarking a batch size of 64 inferences at 6ms (~800x throughput improvement compared to CPU).

Transforming the Bing search experience worldwide using Azure’s global scale

With these GPU optimizations, we were able to use 2000+ Azure GPU Virtual Machines across four regions to serve over 1 million BERT inferences per second worldwide. Azure N-series GPU VMs are critical in enabling transformative AI workloads and product quality improvements for Bing with high availability, agility, and significant cost savings, especially as deep learning models continue to grow in complexity. Our takeaway was very clear, even large organizations like Bing can accelerate their AI workloads by using N-series virtual machines on Azure with built-in GPU acceleration. Delivering this kind of global-scale AI inferencing without GPUs would have required an exponentially higher number of CPU-based VMs, which ultimately would have become cost-prohibitive. Azure also provides customers with the agility to deploy across multiple types of GPUs immediately, which would have taken months of time if we were to install GPUs on-premises. The N-series Virtual Machines were essential to our ability to optimize and ship advanced deep learning models to improve Bing search, available globally today.

N-series general availability

Azure provides a full portfolio of Virtual Machine capabilities across the NC, ND, and NV series product lines. These Virtual Machines are designed for application scenarios for which GPU acceleration is common, such as compute-intensive, graphics-intensive, and visualization workloads.

- NC-series Virtual Machines are optimized for compute-intensive and network-intensive applications.

- ND-series Virtual Machines are optimized for training and inferencing scenarios for deep learning.

- NV-series Virtual Machines are optimized for visualization, streaming, gaming, encoding, and VDI scenarios.

See our Supercomputing19 blog for recent product additions to the ND and NV-series Virtual Machines.

Learn more

Join us at Supercomputing19 to learn more about our Bing optimization journey, leveraging Azure GPUs.