Azure Data Lake Storage

Massively scalable and secure data lake for your high-performance analytics workloads.

Build a foundation for your high-performance analytics

Eliminate data silos with a single storage platform. Optimize costs with tiered storage and policy management. Authenticate access using Microsoft Entra ID (formerly Azure Active Directory) and authorize it with role-based access control (RBAC). And help protect data with security features like encryption at rest and advanced threat protection.

Virtually unlimited scale and 16 nines (99.99999999999999%) of data durability with automatic geo-replication

Highly secure storage with flexible mechanisms for protection across data access, encryption, and network-level control

Single storage platform for ingestion, processing, and visualization that supports the most common analytics frameworks

Cost optimization via independent scaling of storage and compute, lifecycle policy management, and object-level tiering
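To put the durability figure (16 nines) in concrete terms: Azure expresses durability as the probability that a stored object survives a given year, so 16 nines bounds the annual loss probability at 10^-16 per object. The calculation below is purely illustrative and assumes a hypothetical data lake holding N = 10^10 objects:

```latex
\begin{aligned}
d &\ge 1 - 10^{-16} = 0.9999999999999999 \\
\mathbb{E}[\text{objects lost per year}] &= N\,(1 - d) \le 10^{10} \times 10^{-16} = 10^{-6}
\end{aligned}
```

Under that bound, the hypothetical lake would expect on the order of one lost object per million years.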

Scale to match your most demanding analytics workloads

Meet any capacity requirement and manage data easily on Azure's global infrastructure. Run large-scale analytics queries with consistently high performance.

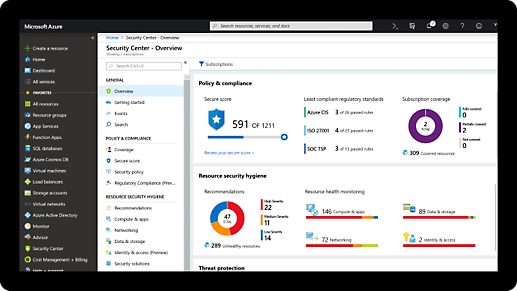

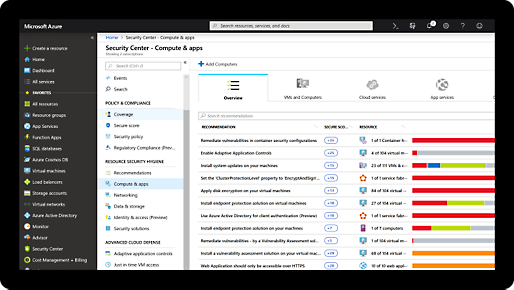

Utilize flexible security mechanisms

Safeguard your data lake with capabilities that span encryption, data access, and network-level control—all designed to help you drive insights more securely.

Build a scalable foundation for your analytics

Ingest data at scale using a wide range of data ingestion tools. Process data using Azure Databricks, Azure Synapse Analytics, or Azure HDInsight. And visualize the data with Microsoft Power BI for transformational insights.

Build cost-effective cloud data lakes

Optimize costs by scaling storage and compute independently, which you can’t do with on-premises data lakes. Tier data up or down based on usage, and use automated lifecycle management policies to optimize storage costs.
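Lifecycle management rules are defined as a JSON policy on the storage account. The sketch below builds one such policy in Python, assuming a hypothetical container `analytics` with a `raw/` prefix, a hypothetical rule name, and illustrative day thresholds. The field names follow the Azure Storage lifecycle management policy schema as commonly documented; verify them against the current reference before applying a policy.

```python
import json


def build_lifecycle_policy(prefix: str, cool_after: int, archive_after: int, delete_after: int) -> dict:
    """Return a policy that tiers block blobs under `prefix` by age.

    All thresholds are days since last modification; the values used below are hypothetical.
    """
    if not (0 <= cool_after < archive_after < delete_after):
        raise ValueError("expected 0 <= cool_after < archive_after < delete_after")
    return {
        "rules": [
            {
                "enabled": True,
                "name": "tier-raw-data",  # hypothetical rule name
                "type": "Lifecycle",
                "definition": {
                    "filters": {
                        "blobTypes": ["blockBlob"],
                        "prefixMatch": [prefix],  # "<container>/<path prefix>"
                    },
                    "actions": {
                        "baseBlob": {
                            "tierToCool": {"daysAfterModificationGreaterThan": cool_after},
                            "tierToArchive": {"daysAfterModificationGreaterThan": archive_after},
                            "delete": {"daysAfterModificationGreaterThan": delete_after},
                        }
                    },
                },
            }
        ]
    }


policy = build_lifecycle_policy("analytics/raw/", cool_after=30, archive_after=90, delete_after=365)
print(json.dumps(policy, indent=2))
```

Keeping the thresholds as parameters and validating their order makes it harder to produce a rule that, for example, archives data before it has been moved to the cool tier.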

Comprehensive security and compliance, built in

- Microsoft invests more than USD 1 billion annually in cybersecurity research and development.

- We employ more than 3,500 security experts who are dedicated to data security and privacy.

Flexible pricing for building data lakes

Choose from pricing options including tiering, reservations, and lifecycle management.

Get started with an Azure free account

After your credit, move to pay-as-you-go pricing to keep building with the same free services. Pay only if you use more than your free monthly amounts.

Trusted by companies of all sizes

"With Azure, we now have the capability to rapidly drive value from our data. The actionable insights from the data models we're creating will help us increase revenue, reduce costs, and minimize risk."

Ahmed Adnani, Director of Applications and Analytics, Smiths Group

"Microsoft Azure gives us good value when we need huge clusters for a couple of days to do a job, then lets us get rid of them to conserve, whereas the datacenter is almost completely unfeasible. That was a big, big game-changer for us."

James Ferguson, Product Manager, Marks & Spencer

Developer resources

How-to documentation

Frequently asked questions about Data Lake Storage

Adding the Hierarchical Namespace on top of Blob Storage retains the cost benefits of cloud storage without compromising the file system interfaces that big data analytics frameworks were designed for.

A simple example is a common pattern in which an analytics job writes output data to a temporary directory and then renames that directory to its final name during the commit phase. In an object store (which, by design, doesn’t support the notion of directories), such a rename can be a lengthy operation involving N copy operations and N delete operations, where N is the number of files in the directory. With the Hierarchical Namespace, these directory operations are atomic, which improves both performance and cost. Additionally, supporting directories as elements of the file system permits the application of POSIX-compliant access control lists (ACLs) that use parent directories to propagate permissions.
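To make the difference in operation counts concrete, here is a toy in-memory model. `FlatObjectStore` and `HierarchicalStore` are hypothetical classes written for illustration (they are not the Azure SDK), and the `job/_temporary` and `job/output` paths are placeholders. The toy only counts operations; it does not model atomicity or failures.

```python
class FlatObjectStore:
    """Toy object store: keys are full paths; 'directories' exist only as key prefixes."""

    def __init__(self):
        self.objects: dict[str, bytes] = {}
        self.ops = 0  # storage operations issued by rename_dir

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data

    def rename_dir(self, src: str, dst: str) -> None:
        # No directory objects exist, so every key under the prefix is copied, then deleted.
        src_prefix, dst_prefix = src.rstrip("/") + "/", dst.rstrip("/") + "/"
        keys = [k for k in self.objects if k.startswith(src_prefix)]
        for key in keys:
            self.objects[dst_prefix + key[len(src_prefix):]] = self.objects[key]  # copy
            self.ops += 1
        for key in keys:
            del self.objects[key]  # delete
            self.ops += 1


class HierarchicalStore:
    """Toy hierarchical namespace: directories are real nodes in a tree."""

    def __init__(self):
        self.root: dict = {}
        self.ops = 0

    def _parent(self, path: str, create: bool = False):
        parts = path.strip("/").split("/")
        node = self.root
        for part in parts[:-1]:
            node = node.setdefault(part, {}) if create else node[part]
        return node, parts[-1]

    def put(self, path: str, data: bytes) -> None:
        parent, name = self._parent(path, create=True)
        parent[name] = data

    def rename_dir(self, src: str, dst: str) -> None:
        # One metadata operation: detach the directory node and re-attach it under the new name.
        src_parent, src_name = self._parent(src)
        dst_parent, dst_name = self._parent(dst, create=True)
        dst_parent[dst_name] = src_parent.pop(src_name)
        self.ops += 1


flat, hns = FlatObjectStore(), HierarchicalStore()
for i in range(1000):
    flat.put(f"job/_temporary/part-{i:05d}", b"x")
    hns.put(f"job/_temporary/part-{i:05d}", b"x")
flat.rename_dir("job/_temporary", "job/output")
hns.rename_dir("job/_temporary", "job/output")
print(flat.ops, hns.ops)  # 2000 1
```

With 1,000 files, the flat store needs 1,000 copies plus 1,000 deletes, while the hierarchical store relinks a single directory node regardless of how many files it contains.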

Like other cloud storage services, Data Lake Storage is billed according to the amount of data stored plus the cost of the operations performed on that data.
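Because the bill is essentially storage volume plus operation counts, a rough monthly estimate is simple arithmetic. The sketch below assumes invented placeholder rates and operations priced per 10,000; `HypotheticalRates` and `monthly_cost` are illustrative names, and real prices vary by region, access tier, redundancy option, and operation type.

```python
from dataclasses import dataclass


@dataclass
class HypotheticalRates:
    """Placeholder prices for illustration only; these are NOT real Azure rates."""

    per_gb_month: float    # storage price per GB per month
    per_10k_writes: float  # price per 10,000 write operations
    per_10k_reads: float   # price per 10,000 read operations


def monthly_cost(stored_gb: float, writes: int, reads: int, rates: HypotheticalRates) -> float:
    """Estimate one month's bill as storage cost plus operation cost, rounded to cents."""
    storage = stored_gb * rates.per_gb_month
    operations = (writes / 10_000) * rates.per_10k_writes + (reads / 10_000) * rates.per_10k_reads
    return round(storage + operations, 2)


rates = HypotheticalRates(per_gb_month=0.02, per_10k_writes=0.05, per_10k_reads=0.004)
print(monthly_cost(stored_gb=5_000, writes=2_000_000, reads=10_000_000, rates=rates))  # 114.0
```

With these made-up numbers, storage contributes 100.00, writes 10.00, and reads 4.00, which shows how heavily write-intensive workloads can shift the balance toward operation costs.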

Data Lake Storage is primarily designed to work with Hadoop and all frameworks that use the Hadoop FileSystem as their data access layer (for example, Spark and Presto).
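Hadoop-compatible engines such as Spark typically reach Data Lake Storage through the ABFS driver, using URIs of the form `abfss://<container>@<account>.dfs.core.windows.net/<path>` (`abfss` is the TLS variant of `abfs`). The helper below is a hypothetical convenience function, not part of any SDK, and the account and container names are placeholders.

```python
def abfss_uri(account: str, container: str, path: str = "") -> str:
    """Build an ABFS URI for a Data Lake Storage path (hypothetical helper)."""
    # The ABFS driver addresses the account's DFS endpoint, not its blob endpoint.
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


uri = abfss_uri("contosolake", "raw", "/sales/2024/")
print(uri)  # abfss://raw@contosolake.dfs.core.windows.net/sales/2024/
```

In a Spark session that has already been configured with credentials for the account, a URI like this can be passed directly to readers such as `spark.read.parquet(uri)`.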

In Azure, Data Lake Storage is interoperable with:

- Azure Data Factory

- Azure HDInsight

- Azure Databricks

- Azure Synapse Analytics

- Power BI

The service is also part of the Azure Blob Storage ecosystem.

Data Lake Storage provides multiple mechanisms for data access control. Because it offers the Hierarchical Namespace, the service is the only cloud analytics store that features POSIX-compliant access control lists (ACLs), which form the basis for Hadoop Distributed File System (HDFS) permissions. Data Lake Storage also provides network-level security via storage firewalls and private endpoints, transport-level security via TLS 1.2 enforcement, and encryption at rest using system-managed or customer-supplied keys.
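The order in which ACL entries are checked is what gives POSIX-style permissions their behavior. The sketch below models the classic POSIX ACL evaluation order (owning user, then named users, then groups, then other, with the mask capping named entries and the owning group). `Acl` and `has_access` are hypothetical names; the model ignores Azure RBAC role assignments, superusers, default ACLs, and the sticky bit, and Data Lake Storage documents its own variant of this check that differs in some details, so treat this as an illustration of the idea rather than the service's exact behavior.

```python
from dataclasses import dataclass, field


@dataclass
class Acl:
    """Hypothetical, simplified POSIX-style access ACL for one file or directory."""

    owner: str
    owning_group: str
    owner_perms: str  # e.g. "rwx"
    group_perms: str  # owning-group entry, e.g. "r-x"
    other_perms: str  # everyone else, e.g. "---"
    named_users: dict[str, str] = field(default_factory=dict)
    named_groups: dict[str, str] = field(default_factory=dict)
    mask: str = "rwx"  # caps named users, the owning group, and named groups


def _perm_set(perms: str) -> set[str]:
    """Turn a string such as "r-x" into {"r", "x"}."""
    return set(perms.replace("-", ""))


def has_access(acl: Acl, user: str, groups: set[str], wanted: str) -> bool:
    """Return True if `user` (a member of `groups`) holds every permission in `wanted`."""
    need = _perm_set(wanted)
    mask = _perm_set(acl.mask)

    # 1. The owning user gets the owner entry; the mask does not apply.
    if user == acl.owner:
        return need <= _perm_set(acl.owner_perms)

    # 2. A named-user entry comes next, filtered through the mask.
    if user in acl.named_users:
        return need <= (_perm_set(acl.named_users[user]) & mask)

    # 3. Owning group and named groups: any single matching entry (after the mask) may grant.
    matching = [p for g, p in acl.named_groups.items() if g in groups]
    if acl.owning_group in groups:
        matching.append(acl.group_perms)
    if matching:
        return any(need <= (_perm_set(p) & mask) for p in matching)

    # 4. Otherwise the "other" entry decides; the mask does not apply.
    return need <= _perm_set(acl.other_perms)


acl = Acl(owner="alice", owning_group="analysts", owner_perms="rwx",
          group_perms="r-x", other_perms="---",
          named_users={"bob": "rwx"}, mask="r-x")
print(has_access(acl, "bob", set(), "w"))           # False: the mask strips bob's write bit
print(has_access(acl, "carol", {"analysts"}, "r"))  # True
print(has_access(acl, "dave", set(), "r"))          # False
```

The mask is the interesting design point: it lets an administrator tighten every named and group entry at once without editing each entry individually.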