Microsoft RAN slicing solutions: Discover AI-assisted application service assurance capabilities

The marketing and scientific communities are excited about radio access network (RAN) slicing. RAN slicing is one of the important new features of 5G networks; it makes differentiated services possible, enabling new features for customers and network monetization opportunities for operators. The 3rd Generation Partnership Project (3GPP) specifications define the slice mechanism, but they don’t say anything about how to implement the slices. Also, we haven’t seen many production-level, real-world implementations of RAN slicing, perhaps because 5G business roll-out is complex. We have done research and produced new results related to RAN slicing, and I’d like to enumerate a few that will make it easier for operators to use RAN slicing with Microsoft Azure.

Service assurance with RAN slicing

Latency-sensitive mobile applications—such as Xbox Cloud Gaming, Microsoft Teams video conferencing, Microsoft Mixed Reality, remote telemedicine, and cloud robotics—require predictable network throughput and latency. The 3GPP specifications recognized this requirement for next-generation mobile apps, and so they introduced network slicing, a virtualization primitive that allows an operator to run multiple differentiated virtual networks, called slices, layered on top of a single physical network. RAN slicing is of particular interest for service assurance since the last-mile wireless link is often the bottleneck for mobile apps.

The technical problem

Ideally, a network operator should be able to configure a network’s resource allocation policy to cater to the specific connectivity requirements of each subscribing application. In the real world, however, typical base station schedulers optimize for coarse metrics, such as the aggregate throughput at the base station or the aggregate throughput achieved by a bundle of applications. The problem is that neither of these methods ensures adequate performance for each application connected to the network.

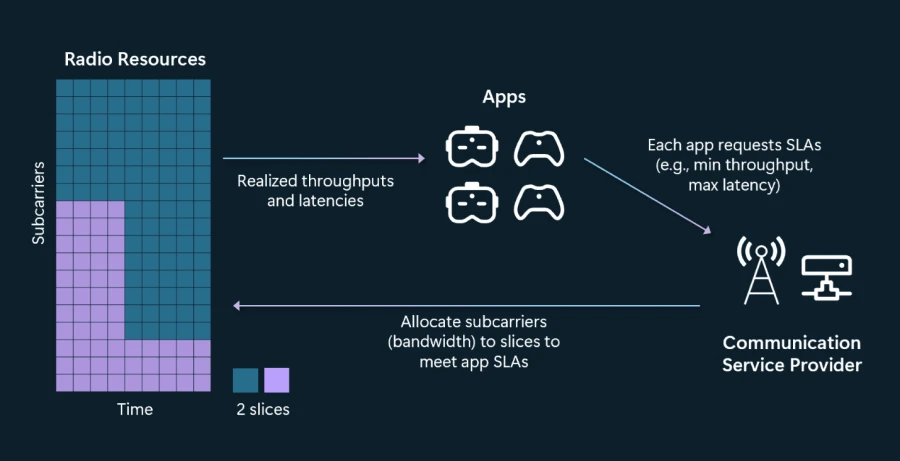

A network slice can support a set of users or a set of applications with similar connectivity requirements. Operators can distribute resources, like physical resource blocks (PRBs), in the RAN amongst the slices to provide differentiated connectivity.

Existing approaches allocate PRBs to different slices to guarantee slice-level service assurance through service-level agreements (SLAs). However, as I mentioned earlier, to realize the envisioned benefits where apps achieve the network performance they require, service assurance should be provided at the application level. Existing approaches fall short of enabling operators to provide this important capability. Slice-level service assurance does not guarantee throughput and latency to each app in the slice, since different users in the same slice can experience wildly different channel conditions. Also, apps join and leave the network asynchronously, which makes optimization hard. We need app-level service assurance to meet the requirements of each app within a slice. To accomplish this, we identified and addressed the following two challenges:

- State-space complexity

Prior approaches provide slice-level service assurance by tracking a state space consisting of aggregate slice-level statistics, including the average channel quality of all users in a slice and the observed slice throughput. To extend these methods to support app-level requirements, one could treat each app as a slice. The problem is that doing so expands the state space to include the channel quality, the observed throughput, and the observed latency experienced by each app. The resulting state space, consisting of all possible values that the tracked variables can take, grows quickly. Searching through it for an allocation of PRBs that complies with each app’s SLA becomes an intractable optimization problem in practical deployments, where the network must accommodate hundreds of apps.

- Determining resource availability

To compute bandwidth allocation for slices, operators typically run admission controllers that admit or reject incoming apps according to some policy. The policy may depend on slice monetization preferences, fairness constraints, or other objectives. Algorithms for admission control have been studied widely. Fundamentally, operators need a way to determine if the RAN has resources to accommodate the SLAs of an incoming app without negatively impacting the SLAs of apps already admitted. Unfortunately, prior approaches are difficult to adapt because they compute required PRBs to support slice-level SLAs. Once again, the state-space complexity precludes treating each app as a slice.
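To get a feel for how quickly the state space grows when each app is treated as a slice, here is a back-of-the-envelope count in Python. It assumes, purely for illustration, that every tracked variable is quantized into the same number of levels. The function names and the numbers (3 slices, 100 apps, 16 levels) are hypothetical and are not taken from the system described in this post.

```python
def slice_level_states(num_slices: int, levels: int) -> int:
    """Joint states when tracking 2 variables per slice
    (average channel quality, observed slice throughput)."""
    return levels ** (2 * num_slices)


def app_level_states(num_apps: int, levels: int) -> int:
    """Joint states when tracking 3 variables per app
    (channel quality, observed throughput, observed latency)."""
    return levels ** (3 * num_apps)


if __name__ == "__main__":
    # Hypothetical sizes: 3 slices versus 100 apps, 16 levels per variable.
    per_slice = slice_level_states(num_slices=3, levels=16)
    per_app = app_level_states(num_apps=100, levels=16)
    print(f"slice-level: {per_slice:,} joint states")
    print(f"app-level: a number with {len(str(per_app))} digits")
```

Even under this crude quantization, the slice-level count stays in the millions while the app-level count has hundreds of digits, which is why exhaustive search over per-app state is not an option.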

Explore the RAN-slicing system from Microsoft

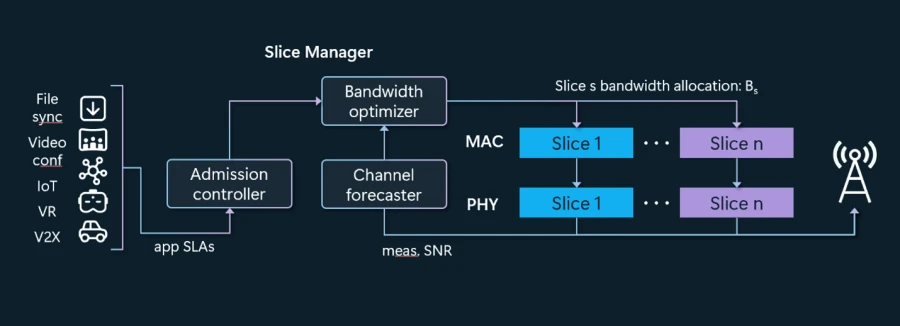

We have designed and developed a radio resource scheduler that fulfills throughput and latency SLAs for individual apps operating over a cellular network. Our system bundles apps with similar SLA requests into network slices. It takes advantage of classical schedulers that maximize base station throughput by computing resource schedules for each slice in a way that satisfies each app’s requirements. Under this model, apps express their network requirements to the operator in the form of minimum throughput and maximum latency. Working on behalf of the operator, our system then fulfills these SLAs over the shared wireless medium by computing and allocating the PRBs required by each slice.
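As a rough illustration of bundling apps with similar SLA requests, the sketch below groups apps by coarse throughput and latency buckets. How the real system decides that two SLAs are "similar" is not described here, so the bucketing rule, the bucket widths, and the `AppSla` type are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AppSla:
    """An app's request: minimum throughput and maximum latency."""
    name: str
    min_throughput_mbps: float
    max_latency_ms: float


def bundle_into_slices(
    apps: List[AppSla],
    tput_step_mbps: float = 5.0,   # assumed bucket width, not from the source
    latency_step_ms: float = 20.0, # assumed bucket width, not from the source
) -> Dict[Tuple[int, int], List[str]]:
    """Group apps whose SLAs fall into the same (throughput, latency) bucket."""
    slices: Dict[Tuple[int, int], List[str]] = defaultdict(list)
    for app in apps:
        key = (
            int(app.min_throughput_mbps // tput_step_mbps),
            int(app.max_latency_ms // latency_step_ms),
        )
        slices[key].append(app.name)
    return dict(slices)


if __name__ == "__main__":
    apps = [
        AppSla("call-a", 2.0, 100.0),
        AppSla("call-b", 3.0, 110.0),
        AppSla("game", 12.0, 30.0),
    ]
    for key, members in bundle_into_slices(apps).items():
        print(key, members)
```

The two call-like apps land in one slice and the game session in another; the scheduler would then compute one PRB budget per slice rather than one per app.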

Our system addresses the challenges in enabling app-level service assurance in a wireless environment by applying the following techniques:

- We manage the search-space complexity, and we decouple the network model and the control policy. We do this by formulating SLA-compliant bandwidth allocation as a model predictive control (MPC) problem. MPC is great at solving sequential decision-making problems over a moving look-ahead horizon. It decouples a controller, which solves a classical optimization problem, from a predictor, which explicitly models uncertainty in the environment.

- We use standalone predictors to forecast each of the state-space variables, such as the wireless channel experienced by each app. Our system then feeds these predictions into a control algorithm that computes a sequence of future bandwidths for each slice based on the predicted state.

- We reduce complexity by exploiting the fact that app throughput and latency vary monotonically with the number of PRBs, which lets our control algorithm efficiently prune the search space of possible bandwidth allocations.

- We forecast RAN resource availability by designing a family of deep neural networks to predict the distribution of required PRBs. We train these neural networks on simulations of our control algorithm offline and then apply them to predict the resource availability in real time.
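The predictor/controller split and the monotonicity argument can be sketched in a few lines of Python. This is a toy stand-in, not the optimization our system solves: it assumes throughput equals PRBs times a predicted per-PRB rate, ignores latency, and simply sums per-app needs within a slice. The names `min_prbs` and `plan_horizon` are hypothetical.

```python
from typing import Callable, List, Optional


def min_prbs(meets_sla: Callable[[int], bool], total_prbs: int) -> Optional[int]:
    """Smallest PRB count in [0, total_prbs] that meets the SLA, or None.

    Correct only if meets_sla is monotone: once it is True for n PRBs,
    it stays True for every larger n. That lets us binary-search
    instead of trying every allocation.
    """
    if not meets_sla(total_prbs):
        return None
    lo, hi = 0, total_prbs
    while lo < hi:
        mid = (lo + hi) // 2
        if meets_sla(mid):
            hi = mid      # mid works, so the answer is mid or smaller
        else:
            lo = mid + 1  # mid fails, so everything <= mid fails too
    return lo


def plan_horizon(
    predicted_mbps_per_prb: List[List[float]],  # [step][app], from a predictor
    min_throughput_mbps: List[float],           # per-app throughput SLA
    total_prbs: int,
) -> List[Optional[int]]:
    """PRBs the slice needs at each look-ahead step; None if infeasible."""
    plan: List[Optional[int]] = []
    for rates in predicted_mbps_per_prb:
        needed = 0
        feasible = True
        for rate, target in zip(rates, min_throughput_mbps):
            # Toy model (assumption): throughput = PRBs * predicted rate.
            n = min_prbs(lambda k, r=rate, t=target: k * r >= t, total_prbs)
            if n is None:
                feasible = False
                break
            needed += n
        plan.append(needed if feasible and needed <= total_prbs else None)
    return plan


if __name__ == "__main__":
    forecast = [[0.5, 0.25], [0.5, 0.125]]  # two look-ahead steps, two apps
    plan = plan_horizon(forecast, [2.0, 1.0], total_prbs=100)
    print(plan)     # PRBs needed at each look-ahead step
    print(plan[0])  # an MPC loop applies only the first step, then re-plans
```

In a receding-horizon loop, only the first entry of the plan is applied; the predictors are then refreshed with new measurements and the plan is recomputed for the next interval.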

At a high level, we base bandwidth (PRB) allocation on predicted channel conditions. When the signal-to-noise ratio (SNR) is high, we expect packet loss to be low, so the PRB allocation matches what the app asked for. When SNR is low, we expect packet loss to be higher, so the PRB allocation is increased to compensate. To help the admission controller, our system exposes a primitive that estimates whether there is bandwidth available to accommodate an incoming app’s requirements. The nice thing about this is that the admission control policies are independent of the bandwidth availability estimate, allowing the operator to implement its monetization policies independently.
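The following Python sketch illustrates both ideas under simplifying assumptions. It takes a predicted packet-loss fraction as given (the mapping from SNR to loss is not specified here) and inflates the request by `1 / (1 - loss)`, which is one plausible compensation rule rather than the one our system uses. The capacity check is a plain sum, standing in for the neural-network-based estimate. Both function names are hypothetical.

```python
import math


def compensated_prbs(requested_prbs: int, predicted_loss: float) -> int:
    """Scale the request so the share expected to survive packet loss
    still covers what the app asked for (assumed rule, see lead-in)."""
    if not 0.0 <= predicted_loss < 1.0:
        raise ValueError("predicted_loss must be in [0, 1)")
    return math.ceil(requested_prbs / (1.0 - predicted_loss))


def has_capacity(incoming_prbs: int, committed_prbs: int, total_prbs: int) -> bool:
    """Capacity primitive: would the incoming app fit next to what is
    already committed? The admit/reject policy stays with the operator."""
    return committed_prbs + incoming_prbs <= total_prbs


if __name__ == "__main__":
    good_channel = compensated_prbs(10, predicted_loss=0.0)  # no inflation
    poor_channel = compensated_prbs(10, predicted_loss=0.5)  # request doubles
    print(good_channel, poor_channel)
    print(has_capacity(good_channel, committed_prbs=85, total_prbs=100))
    print(has_capacity(poor_channel, committed_prbs=85, total_prbs=100))
```

Keeping the capacity check separate from the admit/reject decision mirrors the point above: the operator can layer any monetization or fairness policy on top of the same availability signal.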

Our O-RAN-compatible system realizes the above ideas. We have implemented our RAN slicing system in our production-class, end-to-end 5G platform. We implemented hooks across different modules in the vRAN distributed unit to control slice bandwidth dynamically without compromising real-time performance.

The operator can configure its RAN with a set of slices, catering to different traffic types and enterprise policies, for example, separate slices for Microsoft Teams and Xbox Cloud Gaming sessions. Relative to a slice-level service assurance scheduler, we significantly reduce SLA violations, measured as a ratio of the violation of the app’s request. Our system enables operators to solve the important challenge of providing predictable network performance to apps. In this way, app-level service assurance can be built into a production-class vRAN.

Discover solutions that empower developers

Microsoft is pushing hard on making programmable networks real. We believe this is a necessary, fundamental capability for developers to write applications and build services that are significantly better than current-day applications. RAN slicing is an important step in this journey. With RAN slicing, we can support secure and time-critical applications, which require sustained, predictable bandwidth. This, in turn, will let operators provide many new and attractive network service features with operational efficiency for next-gen application developers.

RAN slicing is an excellent idea, and we are making it real. We hope various RAN vendors will incorporate these ideas as they integrate with Microsoft Azure Operator Nexus. Deeper technical details of what I wrote about are provided in a paper we published recently, “Application-Level Service Assurance with 5G RAN Slicing.”
