Accelerate your productivity with the Whisper model in Azure AI now generally available

Human speech remains one of the most complex things for computers to process. With thousands of spoken languages in the world, enterprises often struggle to choose the right technologies to understand and analyze audio conversations while keeping the right data security and privacy guardrails in place. Thanks to generative AI, it has become easier for enterprises to analyze every customer interaction and derive actionable insights from it.

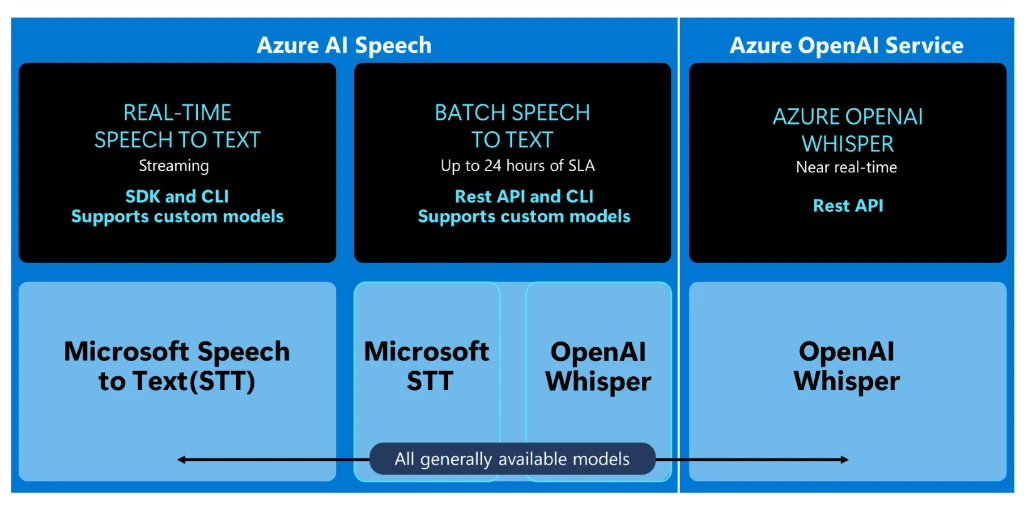

Azure AI offers an industry-leading portfolio of AI services to help customers make sense of their voice data. Our speech-to-text service in particular offers a variety of differentiated features through Azure OpenAI Service and Azure AI Speech. These features have been instrumental in helping customers develop multilingual speech transcription and translation, both for long audio files and for near-real-time and real-time assistance for customer service representatives.

Today, we are excited to announce that OpenAI Whisper on Azure is generally available. Whisper is a speech-to-text model from OpenAI that developers can use to transcribe audio files. Starting today, developers can use the generally available Whisper API in both Azure OpenAI Service and Azure AI Speech for production workloads, backed by Azure’s enterprise-readiness promise. With all our speech-to-text models generally available, customers have greater choice and flexibility to enable AI-powered transcription and other speech scenarios.

Since the public preview of the Whisper API in Azure, thousands of customers in industries including healthcare, education, finance, manufacturing, media, and agriculture have been using it to transcribe and translate audio into text across many of the 57 supported languages. They use Whisper to process call center conversations, add accessibility captions to audio and video content, and mine audio and video data for actionable insights.

We continue to bring OpenAI models to Azure to enrich our portfolio and address the next generation of use cases and workflows that customers want to build with speech technologies and LLMs. For instance, imagine an end-to-end contact center workflow: a self-service copilot carrying out human-like conversations with end users through voice or text, an automated call routing solution, real-time agent-assistance copilots, and automated post-call analytics. Powered by generative AI, this workflow has the potential to usher in a new era of productivity for call centers around the world.

Whisper in Azure OpenAI Service

Azure OpenAI Service enables developers to run OpenAI’s Whisper model in Azure with the same capabilities as OpenAI’s own Whisper offering, including fast processing, multilingual support, and both transcription and translation. Whisper in Azure OpenAI Service is ideal for processing smaller files in time-sensitive workloads.

Lightbulb.ai, an AI innovator looking to transform call center workflows, has been using Whisper in Azure OpenAI Service.

“By merging our call center expertise with tools like Whisper and a combination of LLMs, our product is proven to be 500X more scalable, 90X faster, and 20X more cost-effective than manual call reviews and enables third-party administrators, brokerages, and insurance companies to not only eliminate compliance risk, but also to significantly improve service and boost revenue. We are grateful for our partnership with Azure, which has been instrumental in our success, and we’re enthusiastic about continuing to leverage Whisper to create unprecedented outcomes for our customers.”

Tyler Amundsen, CEO and Co-Founder, Lightbulb.AI

To learn more about using the Whisper model with Azure OpenAI Service, see Speech to text with Azure OpenAI Service.

Try out the Whisper REST API in Azure OpenAI Studio. In addition to transcription, the API supports translation from a growing list of languages into English, producing English-only output.
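As a rough sketch of what a call to the Whisper REST API can look like, the Python below builds (without sending) a multipart request using only the standard library. The URL pattern `/openai/deployments/{deployment}/audio/transcriptions` (or `/audio/translations` for English output), the `api-key` header, the `file` form field, and the `2024-02-01` API version reflect the Azure OpenAI REST reference as we understand it and should be treated as assumptions; the endpoint, deployment name, and key are placeholders, and `build_whisper_request` is a hypothetical helper.

```python
import urllib.request
import uuid

# Assumed API version; check which versions your Azure OpenAI resource supports.
API_VERSION = "2024-02-01"


def build_whisper_request(endpoint: str, deployment: str, api_key: str,
                          filename: str, audio: bytes,
                          task: str = "transcriptions") -> urllib.request.Request:
    """Build (but do not send) a multipart/form-data POST to a Whisper deployment.

    task="transcriptions" returns text in the spoken language;
    task="translations" returns English text.
    """
    if task not in ("transcriptions", "translations"):
        raise ValueError("task must be 'transcriptions' or 'translations'")
    url = (f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
           f"/audio/{task}?api-version={API_VERSION}")
    boundary = uuid.uuid4().hex  # random multipart separator
    # One form part named "file" carrying the raw audio bytes.
    head = (
        f"--{boundary}\r\n"
        f'Content-Disposition: form-data; name="file"; filename="{filename}"\r\n'
        "Content-Type: application/octet-stream\r\n\r\n"
    ).encode()
    tail = f"\r\n--{boundary}--\r\n".encode()
    headers = {
        "api-key": api_key,
        "Content-Type": f"multipart/form-data; boundary={boundary}",
    }
    return urllib.request.Request(url, data=head + audio + tail,
                                  headers=headers, method="POST")
```

Sending the request with `urllib.request.urlopen(req)` should, with the default JSON response format, return a body whose `text` field holds the transcript or English translation.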

OpenAI Whisper model in Azure AI Speech

Users of Azure AI Speech can use OpenAI’s Whisper model with the Azure AI Speech batch transcription API, which lets customers transcribe large volumes of audio content at scale for batch workloads that are not time-sensitive.
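The choice between the two services can be condensed into a small, hypothetical routing helper. The 25 MB ceiling for Whisper in Azure OpenAI Service is an assumption taken from that service’s documentation at the time of writing, and the 1 GB ceiling comes from the batch transcription limits listed below; `choose_whisper_service` and its return labels are illustrative names only.

```python
# Assumed limits, for illustration only:
# - 25 MB per request for Whisper in Azure OpenAI Service (per its docs at time of writing)
# - 1 GB per file for Whisper via Azure AI Speech batch transcription (from this post)
AOAI_MAX_BYTES = 25 * 1024 * 1024
SPEECH_BATCH_MAX_BYTES = 1024 * 1024 * 1024


def choose_whisper_service(file_size_bytes: int, time_sensitive: bool) -> str:
    """Hypothetical router: small, urgent files go to Azure OpenAI; everything else to batch."""
    if file_size_bytes > SPEECH_BATCH_MAX_BYTES:
        raise ValueError("file exceeds the 1 GB batch limit; split the audio first")
    if time_sensitive and file_size_bytes <= AOAI_MAX_BYTES:
        return "azure-openai"  # fast, per-request transcription
    return "speech-batch"  # large or non-urgent: batch transcription API
```

A time-sensitive file that is too large for Azure OpenAI Service falls back to batch transcription here; a real system might instead split the audio into smaller chunks.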

Developers using Whisper in Azure AI Speech also benefit from the following additional capabilities:

- Processing of large files, up to 1 GB each, and of many files at once: a single request can include up to 1,000 audio files, which are processed simultaneously.

- Speaker diarization, which allows developers to distinguish between different speakers, accurately transcribe their words, and produce a more organized, structured transcription of audio files.

- Fine-tuning of the Whisper model with audio plus human-labeled transcripts, using Custom Speech in Speech Studio or via the API.
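For fine-tuning, Custom Speech “audio + human-labeled transcript” datasets are, as we understand the format, a .zip of audio files plus a `trans.txt` in which each line is an audio file name, a tab, and its transcript. The sketch below packages such an archive in memory; `build_training_zip` is a hypothetical helper, and details such as supported audio formats and text encoding should be checked against the Custom Speech data documentation before uploading.

```python
import io
import zipfile
from typing import Dict, Tuple


def build_training_zip(samples: Dict[str, Tuple[bytes, str]]) -> bytes:
    """Package audio files plus a tab-separated trans.txt into an in-memory .zip.

    samples maps an audio file name (for example "call01.wav") to
    (audio_bytes, human_labeled_transcript).
    """
    buf = io.BytesIO()
    lines = []
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        for name, (audio, transcript) in sorted(samples.items()):
            # Each trans.txt line must stay one "name<TAB>text" record.
            if "\t" in transcript or "\n" in transcript:
                raise ValueError(f"transcript for {name} must be one line without tabs")
            zf.writestr(name, audio)
            lines.append(f"{name}\t{transcript}")
        zf.writestr("trans.txt", "\n".join(lines) + "\n")  # str is written as UTF-8
    return buf.getvalue()
```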

Customers are using Whisper in Azure AI Speech for post-call analysis, deriving insights from audio and video recordings, and many other applications.
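For post-call analysis, a common first step is turning a diarized batch result into a readable, speaker-labeled transcript. The sketch assumes the result file layout returned by the batch transcription API with diarization enabled: a `recognizedPhrases` array whose entries carry `speaker`, `offsetInTicks`, and an `nBest` list with a `display` string. `speaker_turns` is a hypothetical helper, not part of any SDK.

```python
import json
from typing import List


def speaker_turns(result_json: str) -> List[str]:
    """Merge consecutive phrases from the same speaker into "Speaker N: text" lines."""
    result = json.loads(result_json)
    # Order phrases by their start time in the audio.
    phrases = sorted(result.get("recognizedPhrases", []),
                     key=lambda p: p.get("offsetInTicks", 0))
    turns: List[list] = []  # each entry is [speaker, [display texts]]
    for phrase in phrases:
        if not phrase.get("nBest"):
            continue  # skip phrases with no recognition candidates
        speaker = phrase.get("speaker", 0)
        text = phrase["nBest"][0]["display"]  # top candidate, display form
        if turns and turns[-1][0] == speaker:
            turns[-1][1].append(text)
        else:
            turns.append([speaker, [text]])
    return [f"Speaker {spk}: {' '.join(texts)}" for spk, texts in turns]
```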

For details on using the Whisper model with Azure AI Speech, see Create a batch transcription.
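A minimal sketch of request bodies for the create-transcription call, assuming the Speech to text REST API v3.2 field names (`contentUrls`, `locale`, `displayName`, `model.self`, `properties.diarizationEnabled`). The Whisper model URL is a placeholder you would look up from your region’s list of base models, `build_transcription_bodies` is a hypothetical helper, and it splits input into jobs of at most 1,000 files to respect the per-request limit stated in this post.

```python
from typing import Dict, List

MAX_FILES_PER_REQUEST = 1000  # per-request file limit stated in this post


def build_transcription_bodies(content_urls: List[str], whisper_model_url: str,
                               locale: str = "en-US",
                               display_name: str = "whisper-batch") -> List[Dict]:
    """Split content_urls into jobs of at most 1,000 files, each using the Whisper base model."""
    bodies = []
    for i, start in enumerate(range(0, len(content_urls), MAX_FILES_PER_REQUEST)):
        bodies.append({
            "displayName": f"{display_name}-{i}",
            "locale": locale,
            "contentUrls": content_urls[start:start + MAX_FILES_PER_REQUEST],
            "model": {"self": whisper_model_url},  # placeholder Whisper base-model URL
            "properties": {
                "diarizationEnabled": True,  # label each phrase with a speaker number
            },
        })
    return bodies
```

Each body would then be serialized with `json.dumps` and POSTed to `https://<region>.api.cognitive.microsoft.com/speechtotext/v3.2/transcriptions` with an `Ocp-Apim-Subscription-Key` header; confirm the exact path and properties against the Speech to text REST reference.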

Getting started with Whisper

Azure OpenAI Studio

Developers who prefer to use the Whisper model in Azure OpenAI Service can access it through Azure OpenAI Studio.

- Apply for access to Azure OpenAI Service.

- Once approved, visit the Azure portal and create an Azure OpenAI Service resource.

- After creating the resource, deploy the Whisper model and begin using it.

Azure AI Speech Studio

Developers who prefer to use the Whisper model in Azure AI Speech can access it through batch speech to text in Azure AI Speech Studio.

The batch speech-to-text try-out lets you compare the output of the Whisper model side by side with an Azure AI Speech model, as a quick initial evaluation of which model may work better for your scenario.
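Side-by-side output is easier to judge with a number attached. One common metric (our choice here, not something Speech Studio prescribes) is word error rate (WER) against a human reference transcript: the word-level edit distance divided by the number of reference words. A minimal sketch; it only lowercases and splits on whitespace, so punctuation differences count as errors unless you normalize the text first:

```python
from typing import List


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref: List[str] = reference.lower().split()
    hyp: List[str] = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j]: edit distance between the first i-1 reference words and first j hypothesis words
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        cur = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            cur[j] = min(prev[j] + 1,         # deletion
                         cur[j - 1] + 1,      # insertion
                         prev[j - 1] + cost)  # substitution or match
        prev = cur
    return prev[-1] / len(ref)
```

Running it on both models’ outputs for the same recordings, against the same reference, gives a rough like-for-like comparison.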

The Whisper model is a great addition to the broad portfolio of capabilities that Azure AI offers. We are looking forward to seeing the innovative ways in which developers will take advantage of this new offering to improve business productivity and to delight users.