ONNX Runtime for inferencing machine learning models now in preview

We are excited to release the preview of ONNX Runtime, a high-performance inference engine for machine learning models in the Open Neural Network Exchange (ONNX) format. ONNX Runtime is compatible with ONNX version 1.2 and ships as Python packages for both CPU and GPU, so you can run inferencing with the Azure Machine Learning service or on any Linux machine running Ubuntu 16.

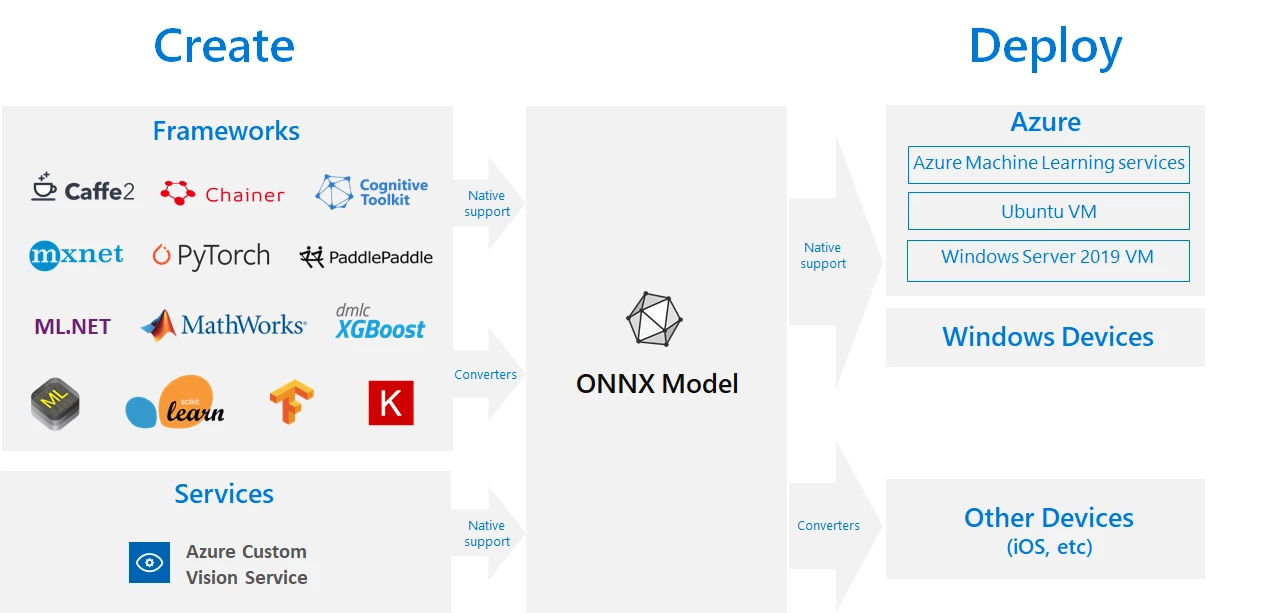

ONNX is an open source model format for deep learning and traditional machine learning. Since we launched ONNX in December 2017, it has gained support from more than 20 leading companies in the industry. ONNX gives data scientists and developers the freedom to choose the right framework for their task, as well as the confidence to run their models efficiently on a variety of platforms with the hardware of their choice.

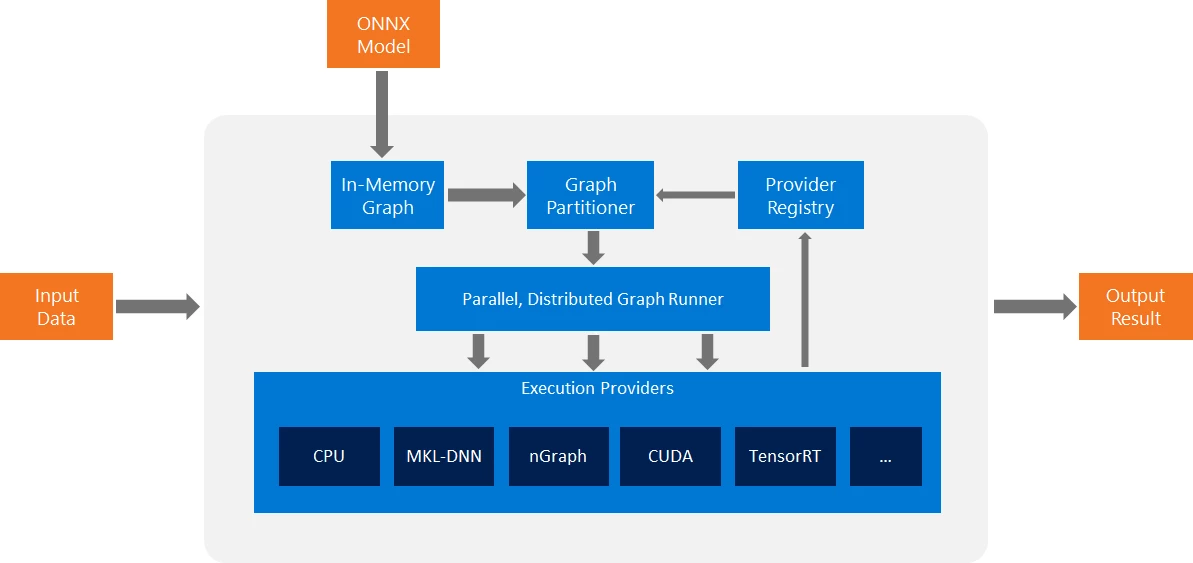

The ONNX Runtime inference engine provides full coverage of all operators defined in ONNX. Designed with extensibility and performance in mind, it takes advantage of custom accelerators suited to the selected platform and hardware to minimize compute latency and resource usage. Given the platform, the hardware configuration, and the operators defined within a model, ONNX Runtime can use the most efficient execution provider to deliver the best overall inferencing performance.

The pluggable model for execution providers allows ONNX Runtime to adapt rapidly to new software and hardware advancements. The execution provider interface is a standard way for hardware accelerators to expose their capabilities to ONNX Runtime. We have active collaborations with companies including Intel and NVIDIA to ensure that ONNX Runtime is optimized for compute acceleration on their specialized hardware. Examples of these execution providers include Intel’s MKL-DNN and nGraph, as well as NVIDIA’s optimized TensorRT.
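To make the idea of pluggable execution providers concrete, here is a simplified sketch of priority-based assignment: each operator in a model goes to the first provider, in priority order, that reports it can run that operator, and a CPU provider listed last acts as the fallback. This is an illustration under stated assumptions, not ONNX Runtime's actual partitioning code (which works on subgraphs rather than single nodes); the provider `AcceleratorEP`, its operator set, and the helper names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    """A hypothetical execution provider: a name plus the op types it can run."""

    name: str
    supported_ops: frozenset


def assign_nodes(op_types, providers):
    """Assign each op to the first provider (in priority order) that supports it.

    Simplified per-node model of graph partitioning across providers.
    Raises ValueError if no provider can run an op.
    """
    assignment = []
    for op in op_types:
        for provider in providers:
            if op in provider.supported_ops:
                assignment.append((op, provider.name))
                break
        else:
            raise ValueError(f"no provider supports op {op!r}")
    return assignment


# Hypothetical capabilities: the accelerator handles only some ops,
# while the CPU provider is the catch-all fallback listed last.
accelerator = Provider("AcceleratorEP", frozenset({"Conv", "Relu", "MatMul"}))
cpu = Provider(
    "CPUExecutionProvider",
    frozenset({"Conv", "Relu", "MatMul", "Softmax", "TopK"}),
)

model_ops = ["Conv", "Relu", "MatMul", "Softmax"]
for op, name in assign_nodes(model_ops, [accelerator, cpu]):
    print(f"{op:8} -> {name}")
```

With these hypothetical capabilities, Conv, Relu, and MatMul are placed on AcceleratorEP, while Softmax falls back to CPUExecutionProvider. Reordering the provider list changes the placement, which is why priority order matters.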

The release of ONNX Runtime expands upon Microsoft’s existing support of ONNX, allowing you to run inferencing of ONNX models across a variety of platforms and devices.

Azure: Using the ONNX Runtime Python package, you can deploy an ONNX model to the cloud with Azure Machine Learning, either to Azure Container Instances or to production-scale Azure Kubernetes Service. Here are some examples to get started.
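Azure Machine Learning deployments run an entry (scoring) script that defines an `init()` function, called once when the service starts, and a `run()` function, called for each request. The sketch below shows one way such a script might use ONNX Runtime. The model path, the JSON request shape `{"data": [...]}`, the float32 input type, and the `parse_request` helper are assumptions for illustration, and the script assumes a model with a single tensor input and tensor outputs.

```python
import json

# Populated by init(); module-level so run() can reuse the loaded model.
session = None


def init(model_path="model.onnx"):
    """Load the ONNX model once when the service starts.

    model_path is a hypothetical default; point it at your registered model.
    """
    global session
    import onnxruntime

    session = onnxruntime.InferenceSession(model_path)


def parse_request(raw_data):
    """Extract input rows from an assumed JSON body of the form {"data": [...]}."""
    payload = json.loads(raw_data)
    if "data" not in payload:
        raise ValueError('request body must contain a "data" field')
    return payload["data"]


def run(raw_data):
    """Score one request and return the model outputs as JSON."""
    import numpy as np

    x = np.asarray(parse_request(raw_data), dtype=np.float32)
    # Look up the input name instead of hard-coding it (single-input model assumed).
    input_name = session.get_inputs()[0].name
    outputs = session.run(None, {input_name: x})
    # Tensor outputs come back as NumPy arrays; convert them to lists for JSON.
    return json.dumps({"result": [out.tolist() for out in outputs]})
```

Keeping request parsing in its own function makes the JSON contract easy to test without loading a model.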

.NET: You can integrate ONNX models into your .NET apps with ML.NET.

Windows Devices: You can run ONNX models on a wide variety of Windows devices using the built-in Windows Machine Learning APIs available in the latest Windows 10 October 2018 update.

Using ONNX

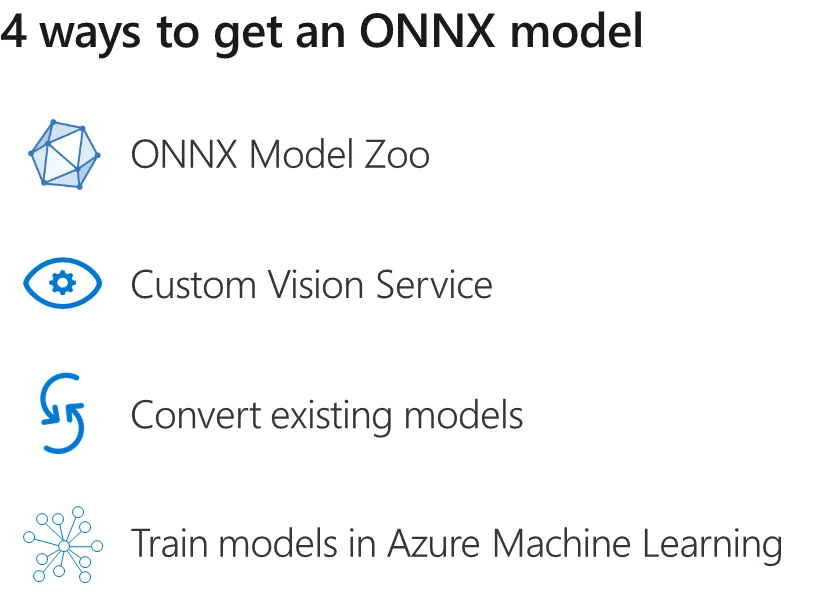

Get an ONNX model

Getting an ONNX model is simple: choose from a selection of popular pre-trained ONNX models in the ONNX Model Zoo, build your own image classification model using the Azure Custom Vision service, convert existing models from other frameworks to ONNX, or train a custom model in Azure Machine Learning and save it in the ONNX format.

Inference with ONNX Runtime

Once you have a trained model in ONNX format, you’re ready to run it through ONNX Runtime for inferencing. The pre-built Python packages integrate with various execution providers to keep compute latency and resource usage low. The GPU build requires CUDA 9.1.

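To start, install the desired package from PyPI in your Python environment, either the CPU package or the GPU package:

```shell
pip install onnxruntime       # CPU build
pip install onnxruntime-gpu   # GPU build (requires CUDA 9.1)
```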
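Both packages are imported under the same module name, `onnxruntime`, so after installation it can be handy to check which build is active. The sketch below is a small helper (its name is hypothetical) that uses `onnxruntime.get_device()`, which reports the device the installed build targets, such as "CPU" or "GPU", and returns `None` when ONNX Runtime is not installed.

```python
import importlib.util


def onnxruntime_build_info():
    """Return the installed ONNX Runtime version and target device, or None."""
    # find_spec checks whether the package is installed without importing it.
    if importlib.util.find_spec("onnxruntime") is None:
        return None
    import onnxruntime

    return {
        "version": onnxruntime.__version__,
        # get_device() names the device this build targets, e.g. "CPU" or "GPU".
        "device": onnxruntime.get_device(),
    }


info = onnxruntime_build_info()
print(info if info is not None else "onnxruntime is not installed")
```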

Then, create an inference session to begin working with your model.

```python
import onnxruntime

# Load the model; "your_model.onnx" is a placeholder for your model's path.
session = onnxruntime.InferenceSession("your_model.onnx")
```

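Finally, call run on the session to get the predicted value(s). The first argument lists the output names you want (None returns all outputs), and the second is a dictionary that maps each input name to its input data.

```python
# "input1" and value are placeholders for your model's input name and input data.
prediction = session.run(None, {"input1": value})
```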
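The input name "input1" is only a placeholder; each model declares its own input names. A minimal sketch, assuming you pass a model's inputs positionally in declaration order, is to read the names from `InferenceSession.get_inputs()` and build the input dictionary from them. The helpers `make_feed` and `run_model` are hypothetical names introduced here.

```python
def make_feed(session, *arrays):
    """Pair input arrays with the model's declared input names, in order.

    Works with any object exposing get_inputs() whose items have a .name,
    such as onnxruntime.InferenceSession.
    """
    names = [node.name for node in session.get_inputs()]
    if len(names) != len(arrays):
        raise ValueError(f"model expects {len(names)} inputs, got {len(arrays)}")
    return dict(zip(names, arrays))


def run_model(model_path, *arrays):
    """Load an ONNX model and return all of its outputs for the given inputs."""
    import onnxruntime  # imported lazily so make_feed works without it

    session = onnxruntime.InferenceSession(model_path)
    # Passing None as the output list returns every model output.
    return session.run(None, make_feed(session, *arrays))
```

For example, run_model("your_model.onnx", x) would return a list of outputs, where x is a NumPy array with the shape and element type your model expects. Importing onnxruntime inside run_model keeps make_feed usable, and testable with a stand-in session object, on machines without ONNX Runtime installed.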

For more details, refer to the full API documentation.

Now you are ready to deploy your ONNX model for your application or service to use.

Get started today

As champions of open and interoperable AI, we are actively invested in building products and tooling to help you efficiently deliver new and exciting AI innovation. We are excited for the community to participate and try out ONNX Runtime! Get started today by installing ONNX Runtime and let us know your feedback on the Azure Machine Learning Service Forum.