IoT use cases span many industries and commonly fall into categories such as predictive maintenance, connected vehicles, anomaly detection, and asset monitoring. For example, in water treatment facilities in the state of California, IoT devices can be installed in water pumps to measure their horsepower, flow rate, and electricity usage. The events emitted by these devices are sent to an IoT hub every 30 seconds for aggregation and processing. A water treatment company could build a dashboard to monitor the pumps and set up notifications that alert the maintenance team when event data crosses a certain threshold, for example to repair a pump whose flow rate is dangerously low. This is a typical proactive maintenance IoT use case.
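The threshold check described above can be sketched in a few lines of Node.js. This is a minimal illustration, not code from the sample repository: the field names (`flowRate`, `horsepower`, `electricUsage`), the units, and every limit in `THRESHOLDS` are assumptions chosen for the example.

```javascript
// Hypothetical alert thresholds; real limits depend on the pump model.
const THRESHOLDS = {
  flowRate: { min: 50 },      // assumed unit: gallons per minute
  horsepower: { max: 40 },    // assumed unit: hp
  electricUsage: { max: 30 }, // assumed unit: kW
};

// Returns a list of human-readable alerts for one pump event.
function checkPumpEvent(event, thresholds = THRESHOLDS) {
  const alerts = [];
  for (const [metric, { min, max }] of Object.entries(thresholds)) {
    const value = event[metric];
    if (typeof value !== "number") continue; // metric missing from this event
    if (min !== undefined && value < min) {
      alerts.push(`${metric} ${value} is below minimum ${min}`);
    }
    if (max !== undefined && value > max) {
      alerts.push(`${metric} ${value} is above maximum ${max}`);
    }
  }
  return alerts;
}

// Example event with a dangerously low flow rate.
const pumpAlerts = checkPumpEvent({
  pumpId: "pump-17",
  flowRate: 12,
  horsepower: 35,
  electricUsage: 22,
});
console.log(pumpAlerts);
```

An empty array means the event is within limits; any entries could be forwarded to whatever notification channel the maintenance team uses.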

Azure IoT is a complete stack of IoT solutions: a collection of Microsoft-managed cloud services that connect, monitor, and control billions of IoT assets. The common components of the Azure IoT core subsystem include:

- IoT devices that stream the events

- Cloud gateway, where Azure IoT Hub is most often used to enable communication to and from devices and edge devices

- Stream processing that ingests events from the devices and triggers actions based on the output of the analysis. In a common workflow, input telemetry arrives encoded in Avro and the output telemetry is returned encoded in JSON for storage

- Storage, usually a database, that holds IoT event data for reporting and visualization

Let’s take a look at how to implement an end-to-end Azure IoT solution that uses Azure Database for PostgreSQL to store IoT event data in the JSONB format. PostgreSQL works well as a NoSQL data store thanks to its strong native JSON processing and indexing capabilities, and the plv8 extension enhances it further by integrating the JavaScript V8 engine with SQL. Besides managed service capabilities and lower cost, a key advantage of Azure Database for PostgreSQL is its native integration with the Azure ecosystem, which improves developer productivity when building modern applications.

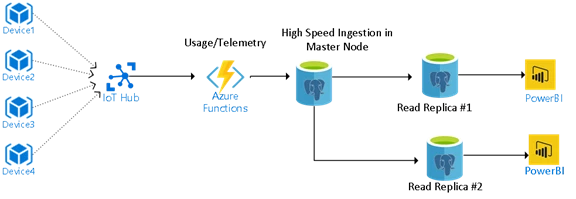

In this implementation, we use Azure Database for PostgreSQL with the plv8 extension as the persistence layer for the IoT telemetry stream, serving storage, analytics, and reporting. The high-speed streaming data is first loaded into the master server of the PostgreSQL database. The master server handles high-speed data ingestion, while read replicas serve reporting and downstream data processing that drives data-driven actions. Azure IoT Hub acts as the event processing hub, and an Azure Function triggers the processing steps and extracts what’s needed from the emitted events to store it in Azure Database for PostgreSQL.
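One way an application could honor this split between ingestion and reporting is a small router that sends writes to the primary (master) server and rotates reads across the replicas. The sketch below is an assumption about how such routing might look, not part of the sample repository; `createRouter` is a hypothetical helper, and each server is any object exposing a `query(text, params)` method, such as a connection pool from a PostgreSQL driver.

```javascript
// Routes writes to the primary server and spreads reads across replicas.
// `primary` and each replica are any objects with a query(text, params)
// method, for example connection pools from a PostgreSQL driver.
function createRouter(primary, replicas = []) {
  let next = 0; // round-robin cursor over the replicas
  return {
    write(text, params) {
      return primary.query(text, params);
    },
    read(text, params) {
      // Fall back to the primary when no replica is configured.
      if (replicas.length === 0) return primary.query(text, params);
      const replica = replicas[next];
      next = (next + 1) % replicas.length;
      return replica.query(text, params);
    },
  };
}

// Stand-in servers that only report which one handled the query.
const fakeServer = (name) => ({
  query: async (text) => `${name} ran: ${text}`,
});
const router = createRouter(fakeServer("primary"), [
  fakeServer("replica-1"),
  fakeServer("replica-2"),
]);

router.write("INSERT ...").then(console.log);
router.read("SELECT ...").then(console.log);
router.read("SELECT ...").then(console.log);
```

Read replicas are updated asynchronously, so a report served from a replica may briefly lag behind the most recent writes on the primary.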

In this post, we’ll walk through the high-level implementation to get you started. Our GitHub repository has sample applications and a detailed QuickStart tutorial with step-by-step instructions for implementing the solution below. The QuickStart uses Node.js applications to send telemetry to the IoT Hub.

Step 1: Create an Azure IoT Hub and register a device with the Hub

In this implementation, the IoT sensor simulators constantly emit temperature and humidity data back to the cloud. The first step is to create an Azure IoT Hub in the Azure portal using these instructions. Next, register the device name in the IoT Hub so that it can receive and process the telemetry from the registered devices.
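To make the simulator concrete, here is a minimal sketch of what generating temperature and humidity readings could look like in Node.js. The field names, value ranges, and the `makeTelemetry` and `startSimulator` helpers are assumptions for illustration; the actual simulator lives in the GitHub repository. The `send` callback is left abstract so the sketch runs without an IoT Hub; in a real device application it would wrap the client used to send messages to the hub.

```javascript
// Builds one simulated reading. Field names and value ranges are assumptions.
function makeTelemetry(deviceId, now = new Date()) {
  return {
    deviceId,
    temperature: Number((20 + Math.random() * 15).toFixed(2)), // 20 to 35
    humidity: Number((60 + Math.random() * 20).toFixed(2)),    // 60 to 80
    timestamp: now.toISOString(),
  };
}

// Emits a JSON-encoded reading every intervalMs through the supplied send
// function. Returns a function that stops the simulator.
function startSimulator(deviceId, send, intervalMs = 1000) {
  const timer = setInterval(() => {
    send(JSON.stringify(makeTelemetry(deviceId)));
  }, intervalMs);
  return () => clearInterval(timer);
}

// Print a single reading to show the message shape.
console.log(makeTelemetry("sim-device-01"));
```

Calling `startSimulator("sim-device-01", console.log)` would print one reading per second until the returned stop function is called.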

In GitHub, you will find sample scripts that register the device using the Azure CLI and export the IoT Hub service connection string.

Step 2: Create an Azure Database for PostgreSQL server and an IoT demo database to store the telemetry data stream

Provision an Azure Database for PostgreSQL server of an appropriate size. You can use either the Azure portal or the Azure CLI to provision it.

In the database, enable the plv8 extension and create a sample plv8 function that extracts a temperature column from the JSON documents, which is useful for querying. A table with a JSONB column stores the IoT telemetry data. The script that creates the database and table and enables the plv8 extension is available in GitHub.
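Because plv8 functions are written in JavaScript, the extraction logic can be tried locally before it is deployed. The sketch below is a hedged illustration rather than the script from GitHub: the table name `iot_events`, the column name `data`, and the function name `get_temperature` are all hypothetical. The body also accepts either a parsed object or a JSON string, since how a `jsonb` argument reaches the function can depend on the plv8 version.

```javascript
// Body of the hypothetical plv8 function. The same source is reused for a
// local test below and for the SQL definition.
const getTemperatureBody = `
  var obj = (typeof doc === "string") ? JSON.parse(doc) : doc;
  if (!obj || obj.temperature == null) return null;
  var t = Number(obj.temperature);
  return isNaN(t) ? null : t;
`;

// Local stand-in for the database function, handy for quick checks.
const getTemperature = new Function("doc", getTemperatureBody);

// SQL to run once against the database. Table, column, and function names
// are assumptions made for this example.
const setupSql = [
  "CREATE EXTENSION IF NOT EXISTS plv8;",
  "CREATE TABLE IF NOT EXISTS iot_events (id bigserial PRIMARY KEY, data jsonb NOT NULL);",
  "CREATE OR REPLACE FUNCTION get_temperature(doc jsonb) RETURNS numeric AS $body$" +
    getTemperatureBody +
    "$body$ LANGUAGE plv8 IMMUTABLE;",
].join("\n");

console.log(getTemperature({ deviceId: "sim-device-01", temperature: 27.4 }));
console.log(setupSql);
```

Once the function exists, a query such as `SELECT get_temperature(data) FROM iot_events;` would return the temperature of each stored document, or NULL where the field is absent.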

Step 3: Create an Azure Function with an Event Hub trigger to extract messages and store them in PostgreSQL

Next, create a JavaScript Azure Function with an Event Hub trigger bound to the Azure IoT Hub created in Step 1. Use the JavaScript index.js sample to create this function. The function is triggered for each incoming message in the IoT Hub. It extracts the JSON message and inserts the data into the PostgreSQL database created in Step 2.
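The shape of such a function can be sketched as follows. This is not the index.js sample from the repository; it is a simplified illustration under stated assumptions: the table `iot_events` with a JSONB column `data` is hypothetical, and the database client is injected as any object with a `query(text, params)` method so the sketch runs without a database. In a deployed function, the export would be the handler built around a real PostgreSQL client.

```javascript
// Hypothetical target table with a single JSONB column named data.
const INSERT_SQL = "INSERT INTO iot_events (data) VALUES ($1::jsonb)";

// Builds the function handler around any client exposing query(text, params).
function createHandler(db) {
  return async function handler(context, messages) {
    // The trigger may deliver one message or a batch, depending on binding
    // configuration, so normalize to an array.
    const batch = Array.isArray(messages) ? messages : [messages];
    for (const message of batch) {
      // Message bodies may arrive as parsed objects or as JSON strings.
      const doc = typeof message === "string" ? JSON.parse(message) : message;
      await db.query(INSERT_SQL, [JSON.stringify(doc)]);
    }
    context.log(`Stored ${batch.length} message(s)`);
  };
}

// Local dry run with a stub client that prints what would be inserted.
const stubDb = {
  query: async (text, params) => console.log(text, params),
};
createHandler(stubDb)({ log: console.log }, [
  { deviceId: "sim-device-01", temperature: 27.4, humidity: 65.1 },
]);
```

Passing the document as a parameter rather than concatenating it into the SQL text keeps the insert safe regardless of what the device sends.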

Getting started by running the IoT solution end to end

We recommend that you try implementing this solution using the sample application in our GitHub repository. In GitHub, you will find steps for running the Node.js application to simulate event data, creating an IoT Hub with device registration, sending the event data to the IoT Hub, deploying an Azure Function to extract the data from the JSON messages, and inserting it into Azure Database for PostgreSQL.

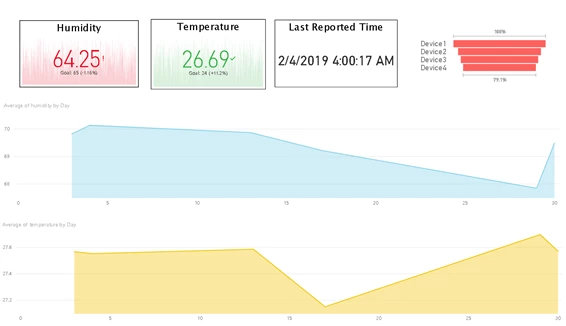

After completing all the steps in GitHub, you will be able to query and analyze the data using reporting tools like Power BI that allow you to build real-time dashboards as shown below.

We hope that you enjoy working with the latest features and functionality available in Azure Database for PostgreSQL. Be sure to share your feedback via UserVoice for PostgreSQL.

If you need any help or have questions, please check out the Azure Database for PostgreSQL documentation.

Acknowledgements

Special thanks to Qingqing Yuan, Bassu Hiremath, Parikshit Savjani, Anitah Cantele, and Rachel Agyemang for their contributions to this post.