Cloud data integration helps organizations integrate data of various forms and unify complex processes in a hybrid data environment. Organizations often have similar data integration needs and repeat the same business processes. Data engineers and data developers in these organizations want to get started quickly with building data integration solutions and avoid building the same workflows repeatedly.

Today, we are announcing support for templates in Azure Data Factory (ADF). Templates help you get started quickly with building data factory pipelines, improve developer productivity, and reduce development time for repeat processes. The feature adds a Template gallery that contains use-case-based templates, data movement templates, SSIS templates, and transformation templates that you can use to get hands-on with building your data factory pipelines.

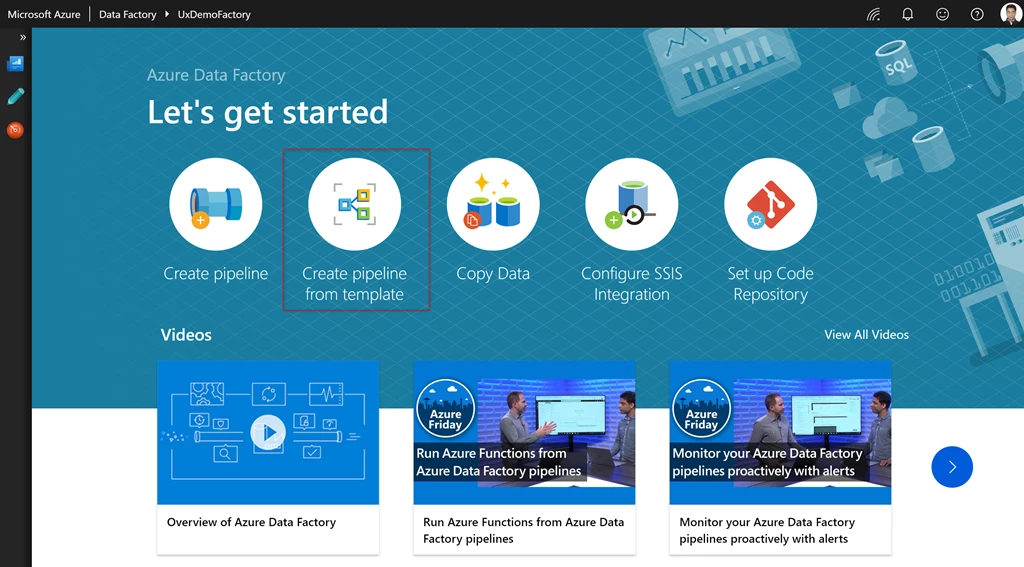

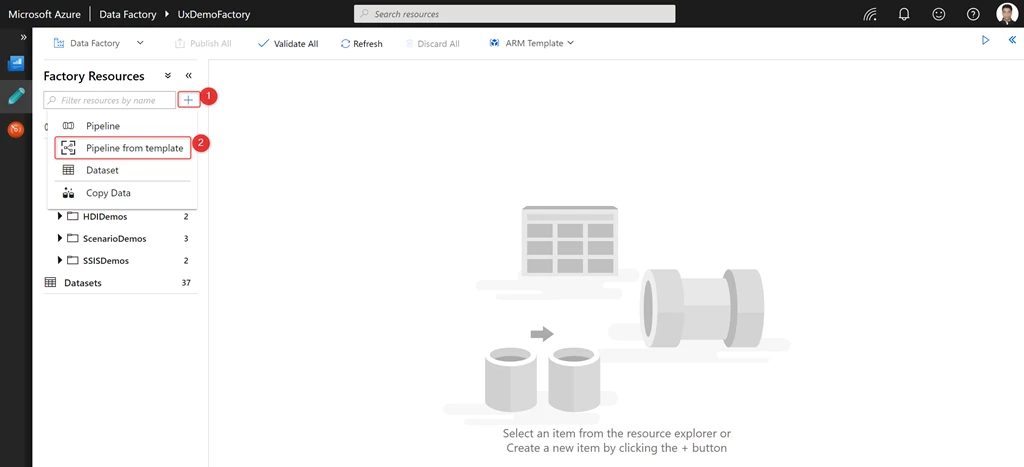

To get started, click Create pipeline from template on the Overview page, or click + > Pipeline from template on the Author page in your data factory UX.

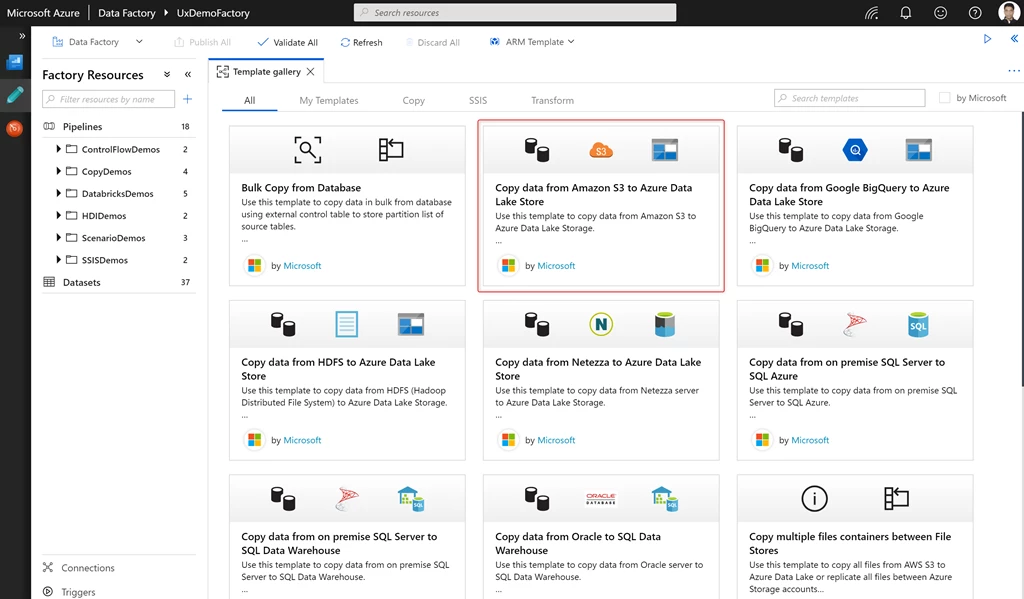

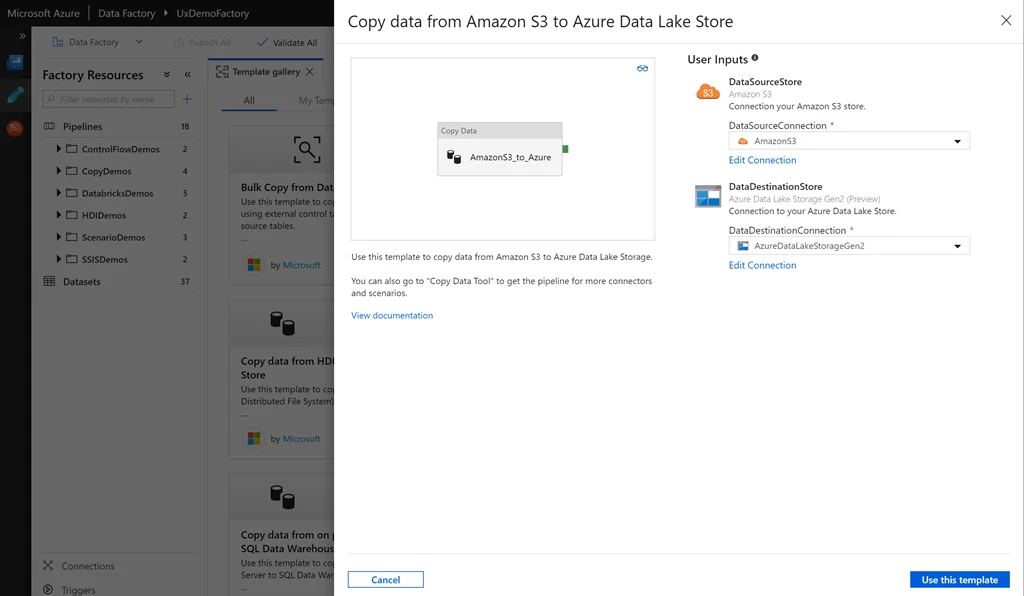

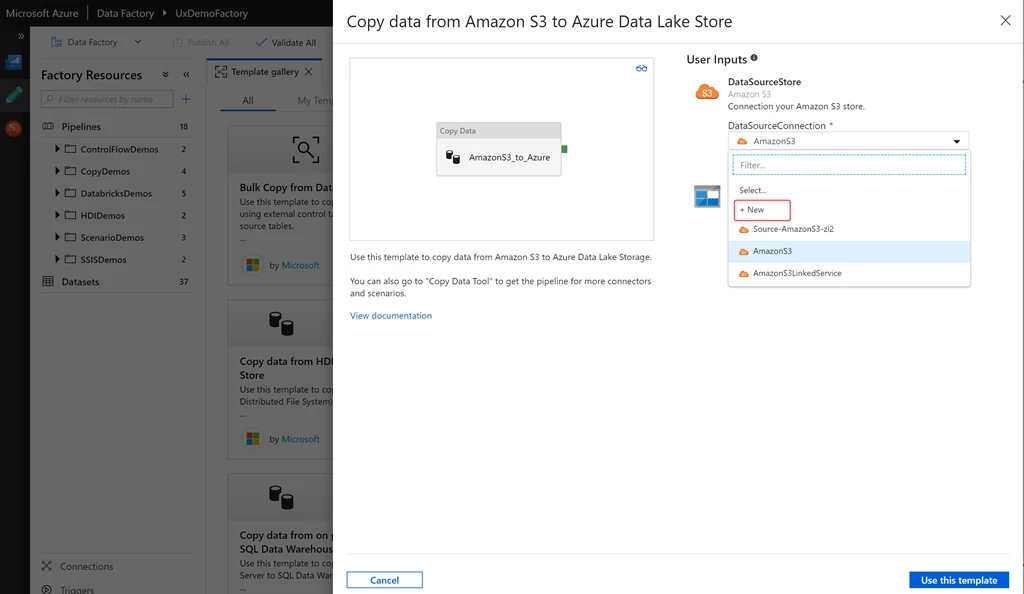

Select any template from the gallery and provide the necessary inputs to use it. You can also read a detailed description of the template or visualize the end-to-end data factory pipeline.

You can also create new connections to your data store or compute while providing the template inputs.

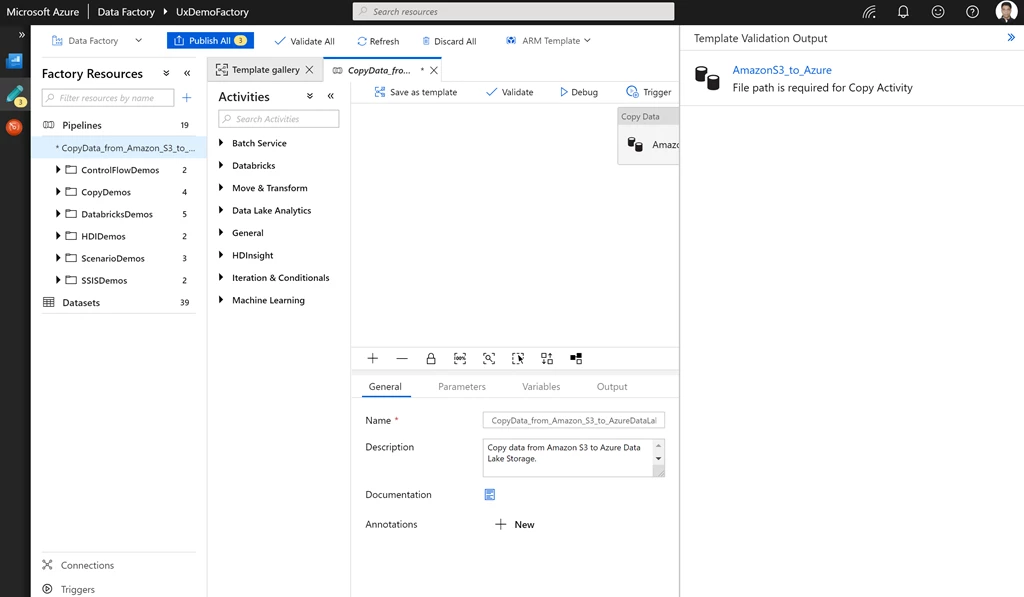

Once you click Use this template, you are taken to the template validation output. This guides you to fill in the required properties needed to publish and run the pipeline created from the template.

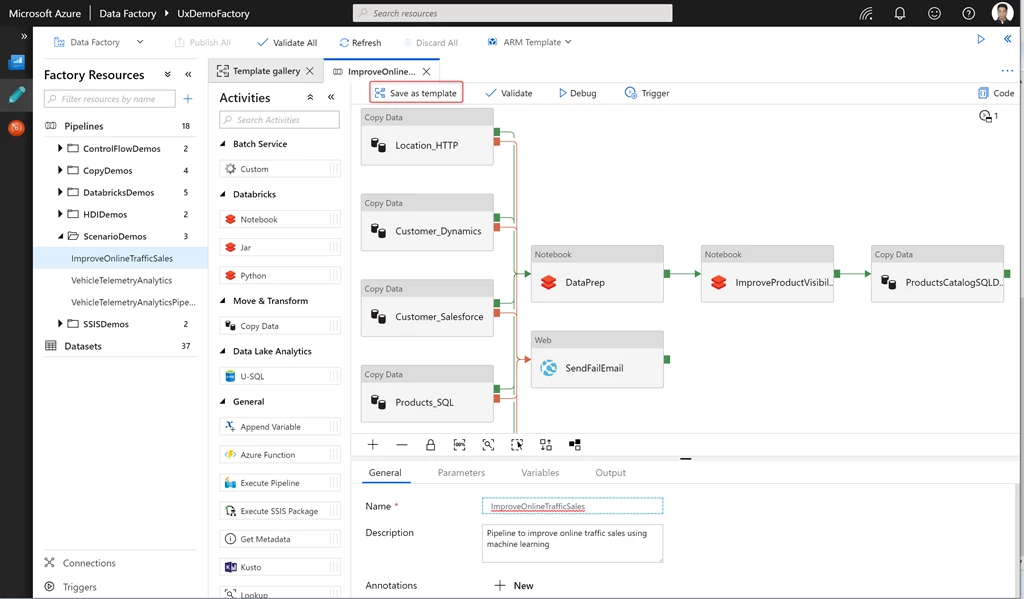

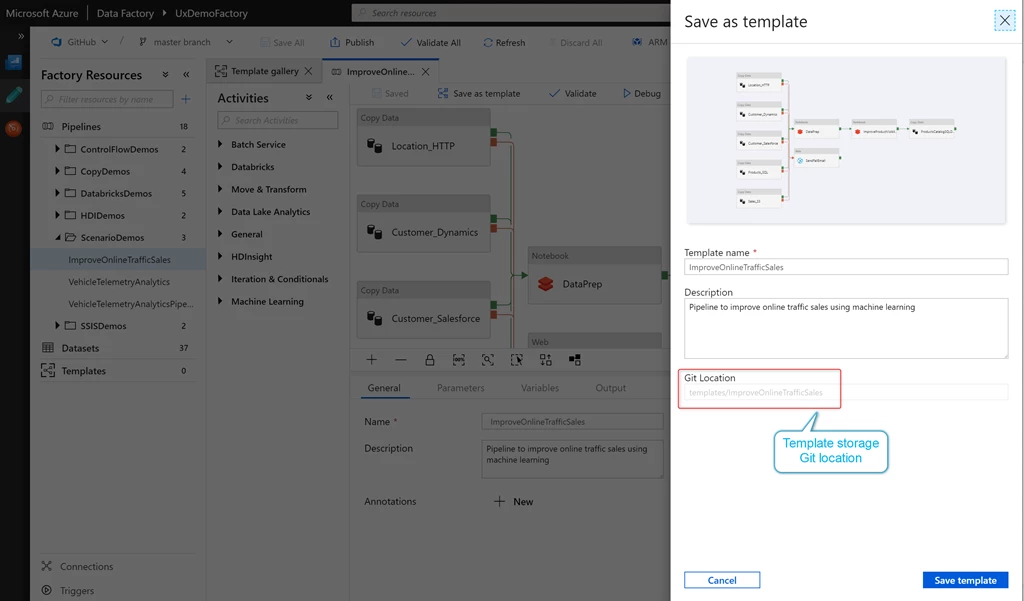

In addition to using out-of-the-box templates from the Template gallery, you might want to save your existing pipelines as templates. For example, different business units within your organization might want to use the same pipeline with different inputs. The templates feature in data factory lets you do this.

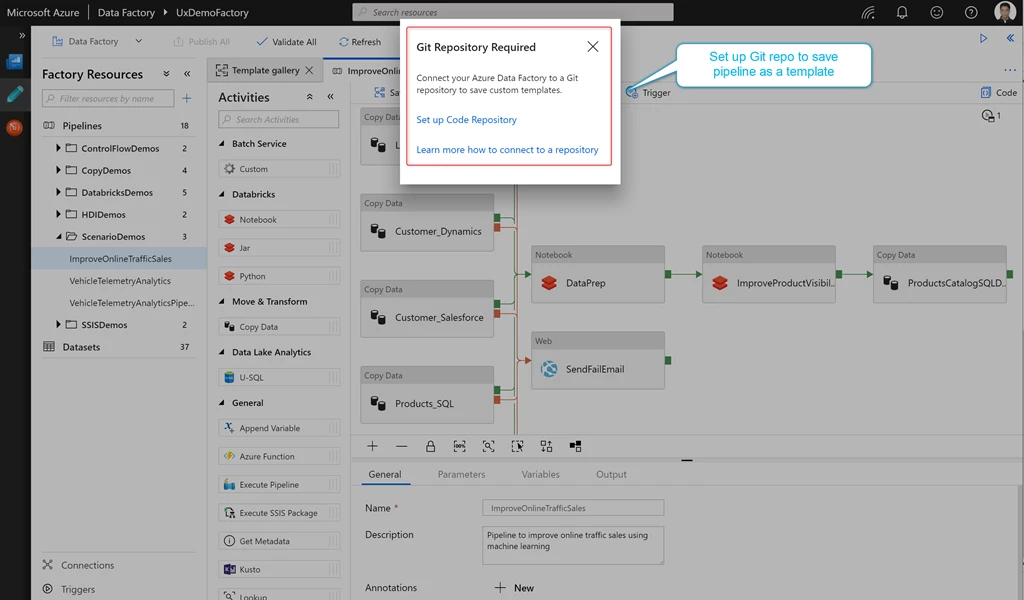

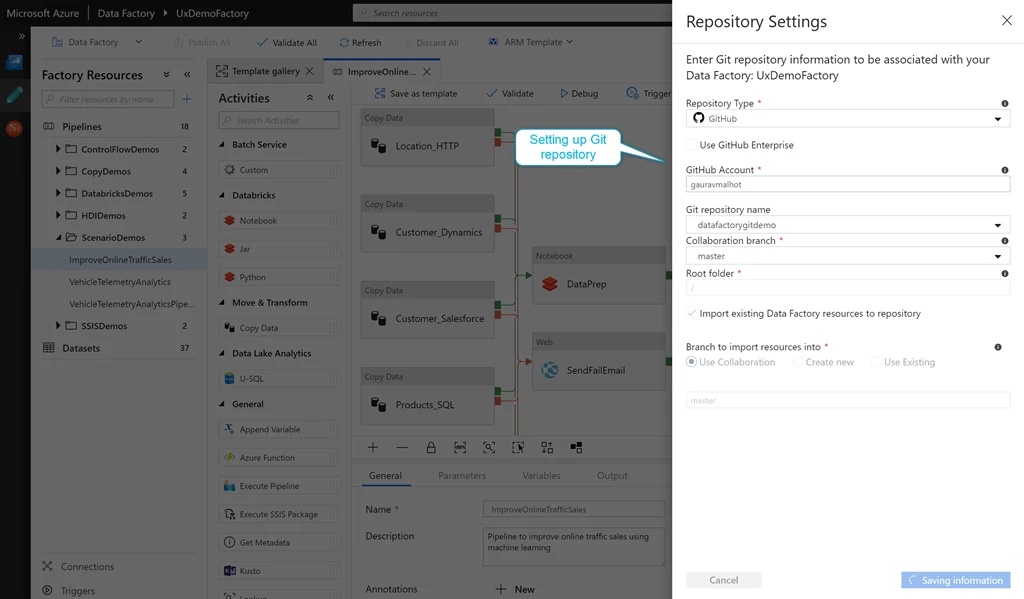

Saving a pipeline as a template requires Git integration (Azure DevOps Git or GitHub) to be enabled in your data factory.

The template is then saved in your Git repo under the templates folder.
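If you keep a local clone of that repo, a short script can show which template files have been saved so far. This is only a minimal sketch: the clone path is hypothetical, and the only detail taken from this post is the `templates` folder name. How files are arranged below that folder is not assumed, so the script simply groups whatever JSON files it finds by their parent folder.

```python
from pathlib import Path


def list_template_files(repo_root):
    """Return JSON file names found under <repo_root>/templates, grouped by folder.

    Assumption: `repo_root` points at a local clone of the Git repo that is
    linked to the data factory. Nothing is assumed about the layout below
    the `templates` folder.
    """
    templates_dir = Path(repo_root) / "templates"
    grouped = {}
    if not templates_dir.is_dir():
        # No templates have been saved yet, or the path is not a clone.
        return grouped
    for path in sorted(templates_dir.rglob("*.json")):
        folder = path.parent.relative_to(templates_dir).as_posix()
        grouped.setdefault(folder, []).append(path.name)
    return grouped


if __name__ == "__main__":
    # Hypothetical clone location; replace it with your own path.
    for folder, files in list_template_files("./my-adf-repo").items():
        print(folder, files)
```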

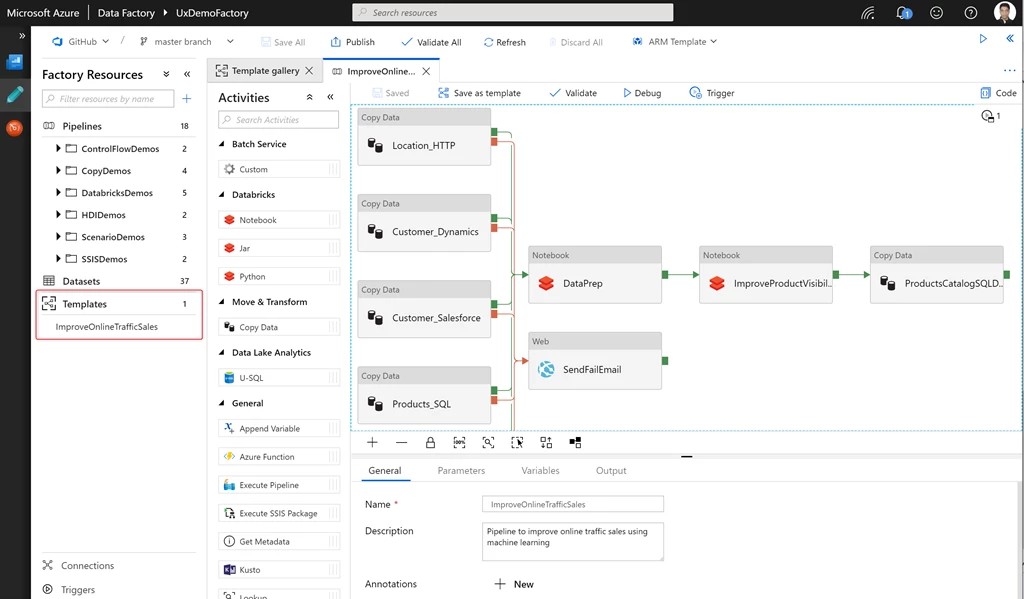

The template is now visible to anyone who has access to your Git repo, and it appears in the Templates section of the resource explorer.

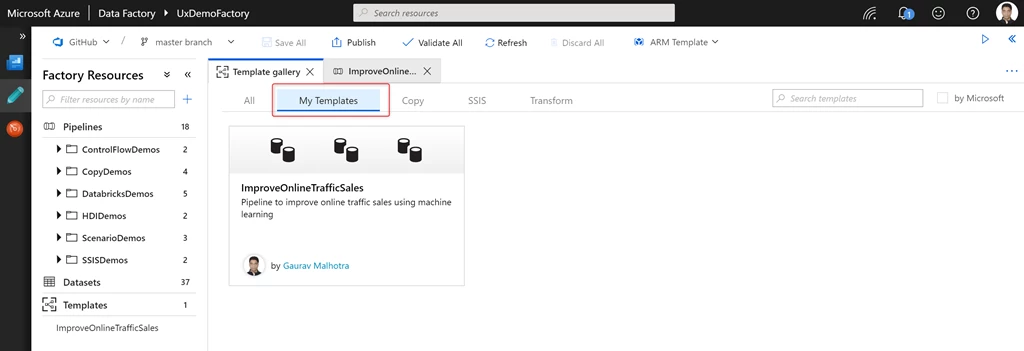

You can also see the template under the My templates section in the template gallery.

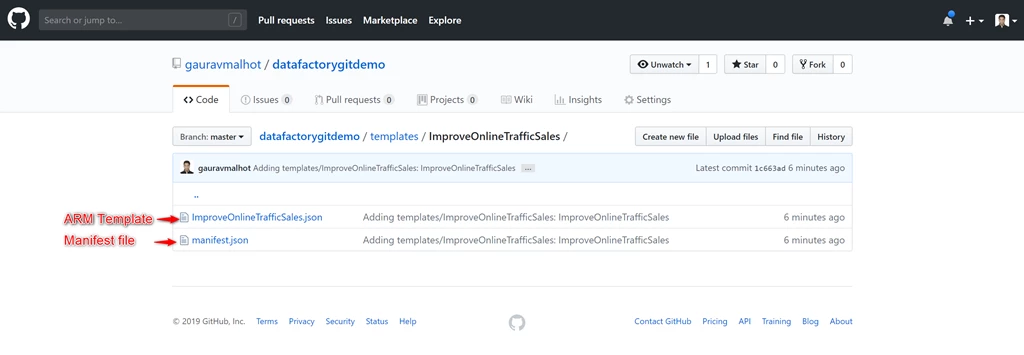

Saving the template to the Git repository generates two files: an ARM template and a manifest file. The ARM template contains all information about your data factory pipeline, including pipeline activities, linked services, and datasets. The manifest file contains the template description, template author, template tile icons, and other metadata about the template.
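To get a feel for what the ARM template file holds, you can load it as JSON and summarize it. The sketch below relies only on the general ARM template structure (a top-level `parameters` object and a `resources` array whose entries carry `type` and `name`). The sample document, its resource names, and its parameter names are hypothetical and heavily trimmed; a real saved template will contain far more detail. The manifest file is not parsed here.

```python
import json
from collections import defaultdict


def summarize_resources(template):
    """Group resource names by the last segment of their ARM resource type."""
    summary = defaultdict(list)
    for resource in template.get("resources", []):
        kind = resource.get("type", "unknown").rsplit("/", 1)[-1]
        summary[kind].append(resource.get("name", "<unnamed>"))
    return dict(summary)


def required_parameters(template):
    """Return the names of template parameters that have no defaultValue."""
    return sorted(
        name
        for name, spec in template.get("parameters", {}).items()
        if "defaultValue" not in spec
    )


# Hypothetical, trimmed template used for illustration only.
SAMPLE_TEMPLATE = json.loads("""
{
  "contentVersion": "1.0.0.0",
  "parameters": {
    "factoryName": {"type": "string"},
    "SourceStore": {"type": "string"},
    "RetryCount": {"type": "int", "defaultValue": 3}
  },
  "resources": [
    {"type": "Microsoft.DataFactory/factories/pipelines", "name": "CopyOrders"},
    {"type": "Microsoft.DataFactory/factories/datasets", "name": "OrdersSource"},
    {"type": "Microsoft.DataFactory/factories/datasets", "name": "OrdersSink"}
  ]
}
""")

if __name__ == "__main__":
    print(summarize_resources(SAMPLE_TEMPLATE))
    print(required_parameters(SAMPLE_TEMPLATE))
```

The list of parameters without a default value gives a rough idea of which inputs a user of the template still has to supply.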

The ARM template and manifest files for all the official out-of-the-box templates in the Template gallery are available in the official data factory GitHub location. In the future, we will work with our partners on a certification process through which anyone can submit a template for inclusion in the Template gallery. The data factory team will certify the pull request for the submitted template and make the template available in the Template gallery.

Find more information about the templates feature in data factory.

Our goal is to continue adding features to improve the usability of Data Factory tools. Get started building pipelines easily and quickly using Azure Data Factory. If you have any feature requests or want to provide feedback, please visit the Azure Data Factory forum.