Today, we are excited to announce General Availability (GA) of Microsoft Azure Premium Storage!

With the introduction of Premium Storage, Microsoft Azure now offers two types of durable storage: Premium Storage and Standard Storage. Premium Storage stores data on the latest technology Solid State Drives (SSDs) whereas Standard Storage stores data on Hard Disk Drives (HDDs). Premium Storage is designed for Azure Virtual Machine workloads that require consistently high IO performance and low latency, such as IO-intensive OLTP, Big Data, and Data Warehousing workloads on platforms like SQL Server, MongoDB, Cassandra, and others. With Premium Storage, more customers will be able to lift-and-shift demanding enterprise applications to the cloud.

Premium Storage is currently available for Page Blobs and Data Disks used by Azure Virtual Machines. You can provision a Premium Storage Data Disk with the right performance characteristics to meet your requirements. You can attach multiple disks to a VM and enable up to 32 TB of storage per VM with more than 64,000 IOPS per VM at low-millisecond latency for read operations.

Since we launched our Premium Storage Preview last December, thousands of Azure customers have used this capability and experienced the tremendous improvement it brings to their Virtual Machines’ performance. Many customers are already running production VMs using our new DS Series VMs which support Premium Storage Data Disks. Preview customers have been running a wide range of scenarios like Windows Server, Linux, SQL Server, Microsoft Dynamics, Exchange, SharePoint, Oracle, SAP, MySQL, MongoDB, Cassandra, Redis and others.

Here are some stats based on feedback from our Preview customers:

- Backup of a 2 TB SQL Server database is 6 times faster on Premium Storage than the same operation performed on Standard Storage

- Restore of a 2 TB SQL database is 30 times faster on Premium Storage than the same operation performed on Standard Storage

- Write throughput to a SQL database running on Premium Storage is 2 times the write throughput of the same database running on an on-premises mid-level SAN

We worked with our Preview customers to validate Premium Storage in real-world scenarios. Below are quotes from our Preview customers:

At JumpStart, we are using Premium Storage for SQL Servers running our educational games like School of Dragons and JumpStart, on Azure. The disk speeds have enabled us to both run the application and take back-ups two times faster than we have before.

Michael Boldezar, VP Platform Technology, JumpStart

Serko®, one of Asia’s leading corporate travel solutions, handles over 300 million SQL transactions per week using 8 SQL Server VMs. With Azure Premium Storage we envisage that the current VMs will scale to over 500 million SQL transactions per SQL server per week, reducing our overall costs is key as we scale globally.

Philip Ball, Chief Technical Officer, Serko

After the implementation of a critical application for Chronodrive on Azure, we quickly noticed an additional need for I/O on our SQL Server. Thanks to Azure Premium Storage, we have finally the capacity to guarantee that our application runs smoothly, and we hope to save 2 million Euros a year with the new optimal performance!

Anthony Lewkowicz, Technical Architect – Chief Information Security Officer, Chronodrive

Premium Storage Characteristics

Premium Storage disk performance is achieved with a combination of durable Azure storage and server SSD caches made available to DS series VMs.

Durability

Durability of data is essential for critical Enterprise workloads. That is why we built Premium Storage on our existing Locally Redundant Storage (LRS) technology which stores three replicas of data within the same region. A write will be confirmed back to your application only when it has been durably replicated by our LRS system. This is a unique capability provided exclusively by Azure today.

New “DS” Series VMs

We launched new “DS” series VMs which include support for attaching Premium Storage Data Disks. These VMs leverage a new sophisticated caching capability which enables extremely low latency for read operations. In order to use Premium Storage, you must use these new “DS” VMs. The pricing and billing for the DS sizes are the same as D-series.

To learn about the characteristics of DS series VMs, please see Virtual Machine and Cloud Service Sizes for Azure.

Linux Support

With the release of Linux Integration Services v4.0 we have enabled support for even more Linux flavors. Please refer to Premium Storage Overview for specifics.

Pricing

Pricing details for Premium Storage service are here. GA price takes effect from June 1, 2015 unless otherwise noted.

Preview users automatically transition to GA users at service GA. No additional action is required by Preview customers. Customer VMs, Data Disks and Premium Storage accounts will continue to function normally.

Available Regions

Currently, Premium Storage is available in the following regions:

- West US

- East US 2

- West Europe

- East China

- Southeast Asia

- West Japan

We will expand the service to more regions in the near future.

Inside Premium Storage and DS Series VMs

Just like standard storage disks, Premium Storage disks are implemented as page blobs in Azure Storage. This means that every uncached write operation replicates to the SSDs of three servers in different racks (fault domains). Each Premium Storage account offers 35 TB of disk and 10 TB of snapshot capacity, with up to 50 Gbps of inbound and outbound data traffic.

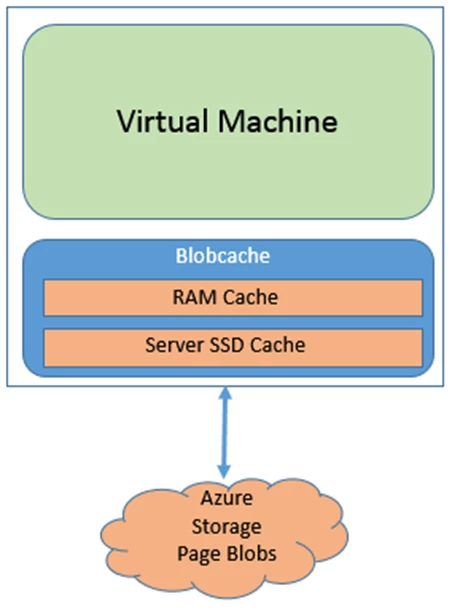

Besides storing data on SSDs instead of HDDs, Premium Storage disks differ from standard storage disks in one other way: a component called Blobcache, which runs on the servers hosting the DS series VMs, uses the RAM and SSDs of those servers to implement a low-latency, high-throughput cache, as shown in the following figure:

Blobcache is enabled for disks, both standard and premium, configured with Read/Write or Read Only caching, as well as for VM local disks. For disks with Read Only caching, Blobcache synchronously writes data to both the cache and Azure storage. For disks with the Read/Write cache setting, Blobcache implements write-back caching: it synchronously writes data to Azure storage only when a VM requests it, either via a disk flush or by specifying the write-through flag on an I/O (also known as FUA, Forced Unit Access), or when it evicts modified data from the cache that hasn’t yet been written. Currently, only OS disks support Read/Write caching.
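The two caching behaviors can be summarized as a small decision function. This is only an illustrative model of the rules described above, not Blobcache code: the function name and its parameters are hypothetical, and it deliberately ignores the eviction path, where modified data is written back when it leaves the cache.

```powershell
# Illustrative model only: the function and parameter names are hypothetical,
# not part of Blobcache or any Azure module.
function Test-SynchronousStorageWrite {
    param(
        [ValidateSet("ReadOnly", "ReadWrite")]
        [string] $HostCaching,
        [switch] $Flush,
        [switch] $ForcedUnitAccess
    )

    # Read Only caching: every write goes to the cache and to Azure storage.
    if ($HostCaching -eq "ReadOnly") {
        return $true
    }

    # Read/Write caching (write-back): only a disk flush or an FUA write
    # forces a synchronous write to Azure storage.
    return ($Flush.IsPresent -or $ForcedUnitAccess.IsPresent)
}

Test-SynchronousStorageWrite -HostCaching ReadOnly                     # True
Test-SynchronousStorageWrite -HostCaching ReadWrite                    # False
Test-SynchronousStorageWrite -HostCaching ReadWrite -ForcedUnitAccess  # True
```

A plain write to a Read/Write disk returns False here because it is acknowledged from the cache and written to Azure storage later.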

Blobcache also implements the storage for VM temporary disks. For those disks, Blobcache doesn’t write data to Azure storage, but keeps it on the server, either in RAM or on the server’s SSDs. That means that the data is lost when the VM is shut down or is “healed” to a different server in the case of a hardware failure. A VM’s temporary disk stores the OS paging file and can be used by applications to store data that does not need to be persistent.

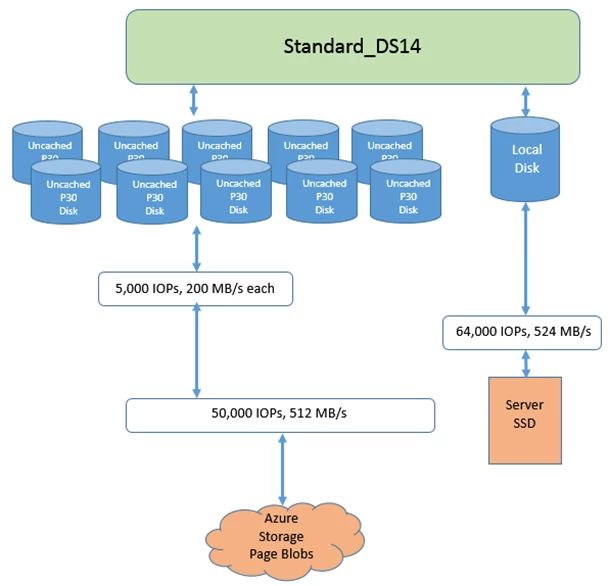

This unique architecture means that DS series VMs that take advantage of Premium Storage disk caching can achieve extremely high levels of performance, in both throughput and latency, that exceed the performance of the underlying Premium Storage disks. The maximum size and performance of an individual Premium Storage disk is defined by the disk type, whether P10, P20 or P30, but the amount of SSD cache Blobcache assigns to a VM depends on the size of the VM, as does the throughput of the SSD that Blobcache makes available to the VM’s local and Premium Storage disks. Blobcache also reserves a specific amount of server network bandwidth, again dependent on the VM size, for data that it reads from and writes to Azure storage on behalf of a VM. For example, the largest DS series VM size, Standard_DS14, has a 224 GB temporary disk and a 576 GB cache, makes available up to 64,000 IOPS and 524 MB/s of SSD performance, and dedicates 50,000 IOPS and 512 MB/s of disk network bandwidth:

| VM Size | CPU Cores | Local Disk Size | Max. Data Disks | SSD Cache Size (GB) | Max. Premium Storage Disk Performance | Max. Local Disk + SSD Cache Performance |
| --- | --- | --- | --- | --- | --- | --- |
| Standard_DS14 | 16 | 224 GB | 32 | 576 | 50,000 IOPS, 512 MB/s | 64,000 IOPS, 524 MB/s |

You can find the limits and resources assigned for all VM sizes on the Azure Virtual Machine Sizing and Pricing page.

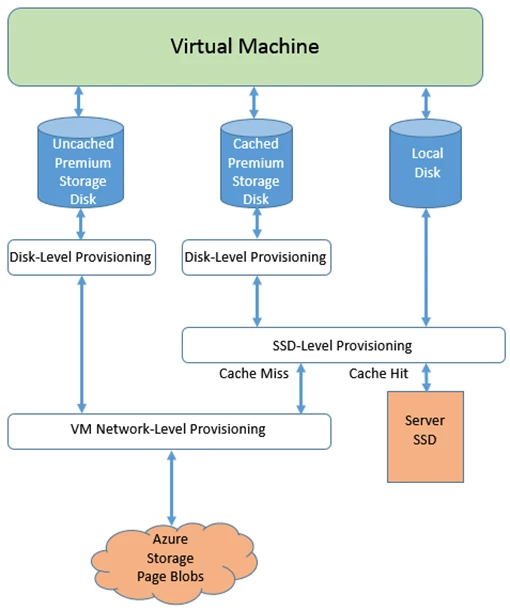

Cache and disk sizes are set at the time you create a VM or disk, but Blobcache provisions bandwidth and IOPS performance in real time by using a three-level system, as shown in the figure below:

First-level provisioning applies only to Premium Storage disks and sets the per-disk IOPS and bandwidth assignment. Blobcache implements SSD provisioning at the second level, applying it only to data that is stored on the server’s SSD, which includes data from Premium Storage disks with caching (Read/Write and Read Only) as well as local disks. Finally, VM network provisioning takes place at the third level for any I/O that Blobcache issues to the Premium Storage backend.

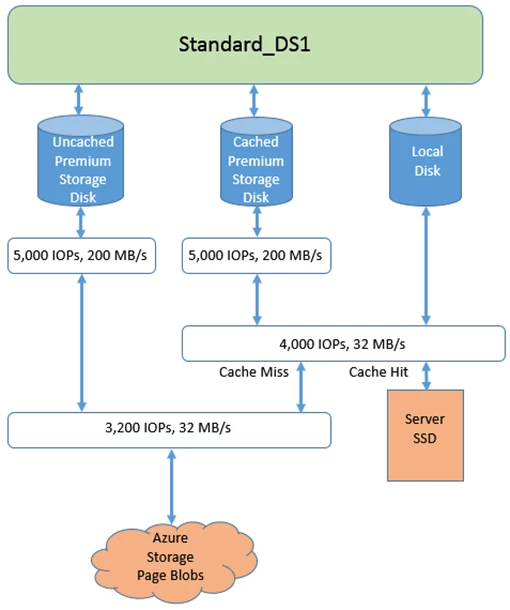

With this scheme, the performance of a VM depends on a variety of factors, from how it uses the local SSD to the number, performance, and caching type of the Premium Storage disks attached to it. For example, a Standard_DS1 VM cannot reach the 5,000 IOPS potential of a P30 disk, whether cached or not, because of limits at the SSD and network levels:

A Standard_DS14 VM configured with 10 P30 Premium Storage disks that are uncached will experience up to 50,000 IOPS or up to 512 MB/s of read or write I/O from those disks, according to the size of the I/Os it issues. A VM similarly configured, but with a cached P30 disk that executes only reads that are satisfied from the cache, will achieve up to 64,000 IOPS or up to 524 MB/s because it reaches the maximum performance offered by the local SSD. In fact, 64,000 read IOPS can be achieved by a single cached Premium Storage disk, including the OS disk, of any size with a pure read I/O pattern, or by the local disk with a read or write pattern.
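For uncached disks, the provisioning levels combine roughly as "the sum of the per-disk limits, capped by the VM's network limit." The sketch below works through that arithmetic using only the figures quoted in this post (5,000 IOPS per P30 disk, 50,000 IOPS of disk network capacity for Standard_DS14). The function name is hypothetical, and the model is a simplification that ignores I/O size and bandwidth limits.

```powershell
# Simplified model; Get-UncachedIopsLimit is a hypothetical helper,
# not part of any Azure module.
function Get-UncachedIopsLimit {
    param(
        [int[]] $DiskIops,       # level 1: per-disk IOPS limits
        [int]   $VmNetworkIops   # level 3: VM limit for I/O sent to the storage backend
    )

    # Add up what the attached disks could deliver on their own.
    $diskTotal = 0
    foreach ($iops in $DiskIops) {
        $diskTotal += $iops
    }

    # The VM-level network limit caps the aggregate.
    return [Math]::Min($diskTotal, $VmNetworkIops)
}

$p30Iops         = 5000    # P30 disk limit quoted in this post
$ds14NetworkIops = 50000   # Standard_DS14 disk network limit quoted in this post

Get-UncachedIopsLimit -DiskIops (@($p30Iops) * 4)  -VmNetworkIops $ds14NetworkIops   # 20000
Get-UncachedIopsLimit -DiskIops (@($p30Iops) * 12) -VmNetworkIops $ds14NetworkIops   # 50000
```

With four P30 disks the disks themselves are the bottleneck. With twelve, the VM network limit is reached first, so adding disks beyond ten adds capacity but not uncached IOPS.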

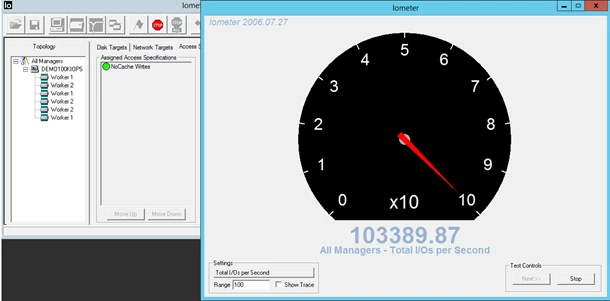

Many configurations are possible and can be tuned by workload. Persistent disk performance of 64,000 IOPS for a single VM is the most delivered by any major public cloud, but Blobcache enables even higher levels of VM disk performance. The most extreme performance possible is with a Standard_DS14 VM that drives uncached Premium Storage disks to the limit of 50,000 IOPS and the SSD cache to its limit of 64,000 IOPS for a total of over 100,000 IOPS. There are a number of combinations that can achieve this, one of which is shown here:

To reach 50,000 IOPS, the VM must have a number of uncached Premium Storage disks whose limits sum to at least 50,000 IOPS at the disk level, which can be as few as 10 P30 disks. The 64,000 IOPS can be driven by any of the following:

- Cached reads from OS disk

- Cached reads from one or more data disks of any size, premium or standard, so long as the data fits in the cache

- Reads or writes to the local disk
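The sizing behind this configuration is simple arithmetic, sketched below with the numbers from this post. The helper name is hypothetical, and the combined figure is a theoretical ceiling under the stated limits rather than a measured result.

```powershell
# Hypothetical helper: smallest number of identical disks whose per-disk
# limits add up to at least the target IOPS.
function Get-MinDiskCount {
    param(
        [int] $TargetIops,
        [int] $PerDiskIops
    )
    return [int][Math]::Ceiling([double]$TargetIops / $PerDiskIops)
}

$uncachedLimit = 50000   # Standard_DS14 uncached Premium Storage limit
$ssdCacheLimit = 64000   # Standard_DS14 local disk + SSD cache limit
$p30Iops       = 5000    # per-disk limit of a P30 disk

Get-MinDiskCount -TargetIops $uncachedLimit -PerDiskIops $p30Iops   # 10

# Uncached disk I/O and cache/local-disk I/O are provisioned separately,
# so their limits add up.
$uncachedLimit + $ssdCacheLimit                                     # 114000
```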

This is a screenshot of the IOMeter benchmarking tool running in a Standard_DS14 VM with one of these configurations and achieving 100,000 IOPS of performance:

With its unique and innovative use of Azure server SSDs, Blobcache enables a wide variety of workload types and system configurations to achieve cloud-leading levels of disk performance.

Getting Started

You can read more about getting started at Premium Storage Overview, but here is a summarized list of steps:

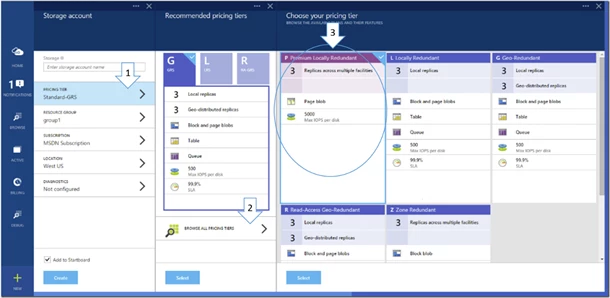

Step 1: Create a new storage account

Go to the new Microsoft Azure Portal and create a Premium Storage account. While creating the storage account, be sure to use one of the regions where the Premium Storage service is available and select “Premium Locally Redundant” as the storage account type.
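If you script your setup, a small guard can catch an unsupported region before anything is created. The sketch below is illustrative: the function name is hypothetical, and the region list is copied from the Available Regions section of this post, so it will go stale as the service expands. The commented-out line assumes the classic Azure PowerShell cmdlet `New-AzureStorageAccount` with a `Premium_LRS` account type; verify it against your installed SDK version before relying on it.

```powershell
# Regions listed in this post at GA; update as more regions are added.
$premiumRegions = @(
    "West US", "East US 2", "West Europe",
    "East China", "Southeast Asia", "West Japan"
)

# Hypothetical helper: returns $true when the location is in the list above.
function Test-PremiumStorageRegion {
    param(
        [string]   $Location,
        [string[]] $Regions = $premiumRegions
    )
    return ($Regions -contains $Location)
}

$storageAccount = "yourpremiumaccount"
$location       = "West US"

if (Test-PremiumStorageRegion -Location $location) {
    # Assumption: classic Azure PowerShell module; check before use.
    # New-AzureStorageAccount -StorageAccountName $storageAccount -Location $location -Type "Premium_LRS"
    "Region '$location' supports Premium Storage."
}
else {
    "Region '$location' is not in the Premium Storage region list."
}
```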

Step 2: Create a “DS” series VM

You can create the VM via the new Microsoft Azure Portal, or using Azure PowerShell SDK version 0.8.10 or later. Make sure that your Premium Storage account is used for the VM.

The following PowerShell example creates a DS-series VM in your Premium Storage account:

$storageAccount = "yourpremiumaccount"

$adminName = "youradmin"

$adminPassword = "yourpassword"

$vmName = "yourVM"

$location = "West US"

$imageName = "a699494373c04fc0bc8f2bb1389d6106__Windows-Server-2012-R2-201409.01-en.us-127GB.vhd"

$vmSize = "Standard_DS2"

$OSDiskPath = "https://" + $storageAccount + ".blob.core.windows.net/vhds/" + $vmName + "_OS_PIO.vhd"

$vm = New-AzureVMConfig -Name $vmName -ImageName $imageName -InstanceSize $vmSize -MediaLocation $OSDiskPath

Add-AzureProvisioningConfig -Windows -VM $vm -AdminUsername $adminName -Password $adminPassword

New-AzureVM -ServiceName $vmName -VMs $vm -Location $location

If you want more disk space for your VM, attach a new data disk to an existing DS-series VM after it is created:

$storageAccount = "yourpremiumaccount"

$vmName = "yourVM"

$vm = Get-AzureVM -ServiceName $vmName -Name $vmName

$LunNo = 1

$path = "https://" + $storageAccount + ".blob.core.windows.net/vhds/" + "myDataDisk_" + $LunNo + "_PIO.vhd"

$label = "Disk " + $LunNo

Add-AzureDataDisk -CreateNew -MediaLocation $path -DiskSizeInGB 128 -DiskLabel $label -LUN $LunNo -HostCaching ReadOnly -VM $vm | Update-AzureVm

If you want to create a VM using your own VM image or disks, you should first upload the image or disks to your Premium Storage account, and then create the VM using that.

Summary and Links

We are very excited to announce the General Availability of Azure Premium Storage which stores data on the latest technology Solid State Drives (SSDs). Premium Storage is designed for IO intensive workloads like OLTP, Big Data, and data warehousing on platforms like SQL Server, MongoDB, Cassandra, and others. With Premium Storage, more customers will be able to lift-and-shift demanding enterprise applications to the cloud.

As always, we would love to hear your feedback via comments on this blog, the Azure Storage MSDN forum, or email to mastoragequestions@microsoft.com.
