A few months ago, I shared best practices for alerting on metrics with Azure Database for PostgreSQL. Though I was able to cover how to monitor certain key metrics on Azure Database for PostgreSQL, I did not cover how to monitor and alert on the performance of queries that your application is heavily relying on. As a PostgreSQL database, from time to time you will need to investigate if there are any queries running indefinitely on a PostgreSQL database. These long running queries may interfere with the overall database performance and likely get stuck on some background process. This blog post covers how you can set up alerting on query performance related metrics using Azure Functions and Azure Key Vault.

What is Query Store?

Query Store was a feature in Azure Database for PostgreSQL announced in early Fall 2018 that seamlessly enables tracking query performance over time. This simplifies performance troubleshooting by helping you quickly find the longest running and most resource-intensive queries. Learn how you can use Query Store on a wide variety of scenarios by visiting our documentation, “Usage scenarios for Query Store.” Query Store, when enabled, automatically captures a history of query runtime and wait statistics. It tracks this data over time so that you can see database usage patterns. Data for all users, databases, and queries is stored in a database named azure_sys in the Azure Database for PostgreSQL instance.

Query Store is not enabled on a server by default. However, it is very straightforward to opt-in on your server by following the simple steps detailed in our documentation, “Monitor performance with the Query Store.” After you have enabled Query Store to monitor your application performance, you can set alerts on various metrics such as long running queries, regressed queries, and more that you want to monitor.

How to set up alerting on Query Store metrics

You can achieve near real-time alerting on Query Store metrics monitoring using Azure Functions and Azure Key Vault. This GitHub repo provides you with an Azure Function and a PowerShell script to deploy a simple monitoring solution, which gives you some flexibility to change what and when to alert.

Alternatively, you can clone the repo to use this as a starting point and make code changes to better fit your scenario. The Visual Studio solution, when built with your changes, will automatically package the zip file you need to complete your deployment in the same fashion that is described here.

In this repo, the script DeployFunction creates an Azure function to serve as a monitor for Azure Database for PostgreSQL Query Store. Understanding the data collected by query performance insights will help you identify the metrics that you can alert on.

If you don’t make any changes to the script or the function code itself and only provide the required parameters to DeployFunction script, here is what you will get:

- A function app.

- A function called PingMyDatabase that is time triggered every one minute.

- An alert condition that looks for any query that has a mean execution time of longer than five seconds since the last time query store data is flushed to the disk.

- An email when an alert condition is met with an attached list of all of the processes that was running on the instance, as well as the list of long running queries.

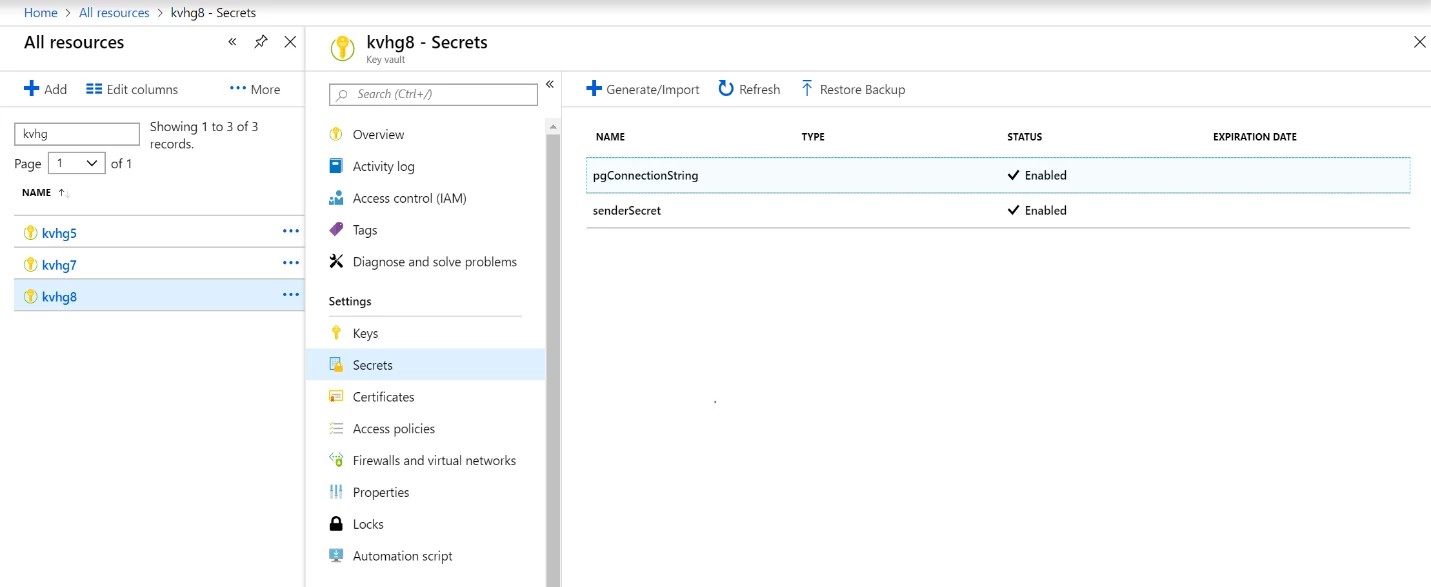

- A key vault that contains two secrets named pgConnectionString and senderSecret that hold the connection string to your database and password to your sender email account respectively.

- An identity for your function app with access to a Get policy on your secrets for this key vault.

You simply need to run DeployFunction on Windows PowerShell command prompt. It is important to run this script from Windows PowerShell. Using Windows PowerShell ISE will likely result in errors as some of the macros may not resolve as expected.

The script then creates the resource group and Key Vault deploys a monitoring function app, updates app configuration settings, and sets up the required Key Vault secrets. At any point during the deployment, you can view the logs available in the .logs folder.

After the deployment is complete, you can validate the secrets by going to the resource group in the Azure portal. As shown in the following diagram, two secrets keys are created, pgConnString and senderSecret. You can select the individual secrets if you want to update the value.

Depending on the condition set in the SENDMAILIF_QUERYRETURNSRESULTS app settings, you will receive an email alert when the condition is met.

How can I customize alert condition or supporting data in email?

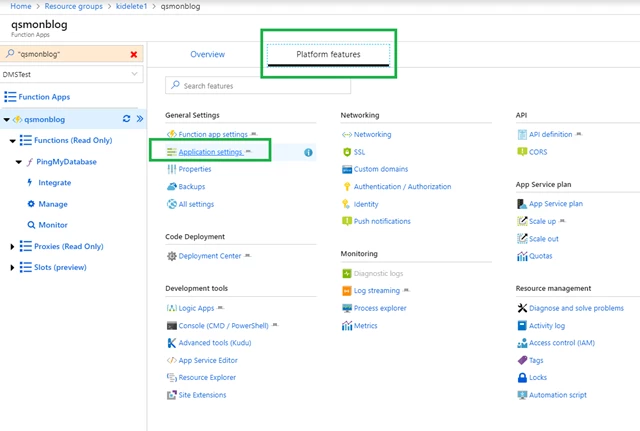

After the default deployment goes through, using Azure portal you can update settings by selecting Platform features and then Application settings.

You can change the run interval, mail to, if condition, or supporting data to be attached by making changes to the below settings and saving them on your exit.

Alternatively, you can simply use az cli to update these settings like the following.

$cronIntervalSetting="CronTimerInterval=0 */1 * * * *" az functionapp config appsettings set --resource-group yourResourceGroupName --name yourFunctionAppName --settings $cronIntervalSetting

Or

az functionapp config appsettings set --resource-group $resourceGroupName --name $functionAppName --settings "SENDMAILIF_QUERYRETURNSRESULTS=select * from query_store.qs_view where mean_time > 5000 and start_time >= now() - interval '15 minutes'"

Below are common cases on conditions that you can monitor and alert by either updating the function app settings after your deployment goes through or updating the corresponding value in DeployFunction.ps1 prior to your deployment:

|

Case |

Function app setting name |

Sample value |

|

Query 3589441560 takes more than x milliseconds on average in the last fifteen minutes |

SENDMAILIF_QUERYRETURNSRESULTS |

select * from query_store.qs_view where query_id = 3589441560 and mean_time > x and start_time >= now() – interval ’15 minutes’ |

|

Queries with cache hit less than 90 percent |

SENDMAILIF_QUERYRETURNSRESULTS |

select * , shared_blks_hit / nullif(shared_blks_hit + shared_blks_read, 0) AS as cache_hit from query_store.qs_view where shared_blks_hit / nullif(shared_blks_hit + shared_blks_read, 0) < 0.90 |

|

Queries with a mean execution time that is more than x milliseconds |

SENDMAILIF_QUERYRETURNSRESULTS |

select * from query_store.qs_view where mean_time > x and start_time >= now() – interval ’15 minutes’ |

|

If an alert condition is met, check if there is an ongoing autovacuum operation, list the processes running and attach the results to email |

LIST_OF_QUERIESWITHSUPPORTINGDATA |

{“count_of_active_autovacuum”:” select count(*) from pg_stat_activity where position(‘autovacuum:’ IN query) = 1 “,”list_of_processes_at_the_time_of_alert”:”select now()-query_start as Running_Since,pid,client_hostname,client_addr, usename, state, left(query,60) as query_text from pg_stat_activity”} |

How secure is this?

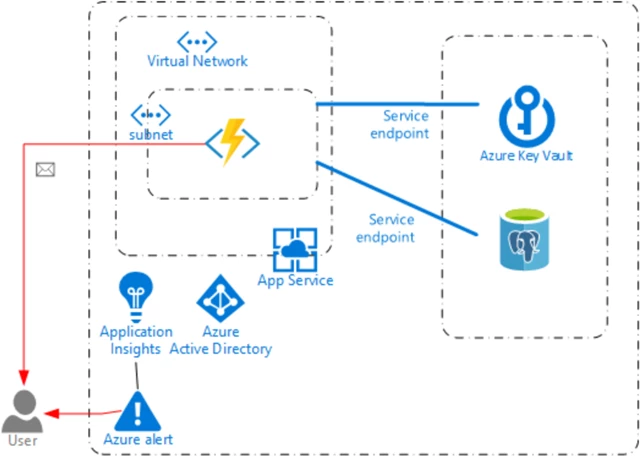

The script provides you with the mechanism to store your secrets in a Key Vault. Your secrets are secured as they are encrypted in-transit and at rest. However, the function app accesses the Key Vault over the network. If you want to avoid this and access your secrets over your virtual network (VNet) through the backbone, you will need to configure a VNet for both your function app and your Key Vault. Note, that VNet support of function apps is in preview and is currently available in selected Azure regions. When the proper deployment scenarios are supported, we may revisit this script to accommodate the changes. Until then, you will need to configure a VNet manually to accomplish the setup below.

We are always looking to hear feedback from you. If you have any feedback for the Query Store on PostgreSQL, or monitoring and alerting on query performance, please don’t hesitate to contact the Azure Database for PostgreSQL team.

Acknowledgments

Special thanks to Korhan Ileri, Senior Data Scientist, for developing the script and contributing to this post. As well as Tosin Adewale, Software Engineer from the Azure CLI team for closely partnering with us.