Performs ETL jobs using Azure services

Overview

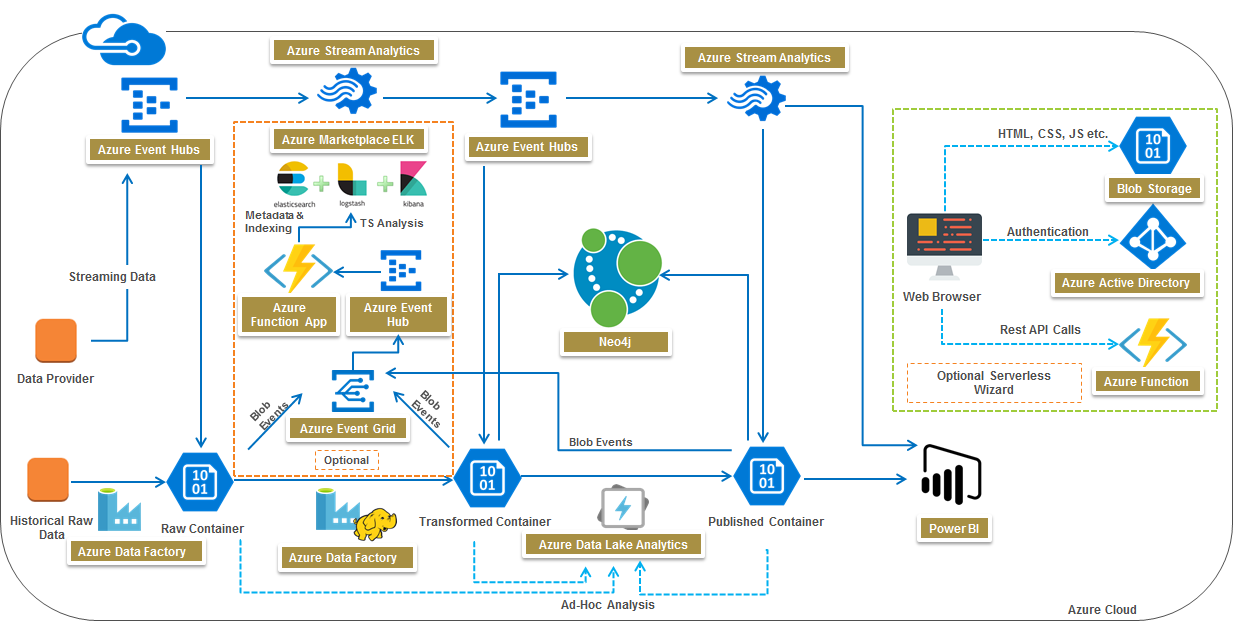

The aim of the Yash Azure quickstart solution is to showcase the capabilities of a serverless data lake in the Azure cloud.

Yash Data Lake in Azure Cloud showcases the following features:

- Data Ingestion
  - Batch
  - Streaming
- Data Processing
  - Batch
  - Real-Time
- Data Governance
  - Metadata management
  - Data Lineage
- Data Security
- Ad hoc analytics for exploring data insights and visualization

The deployment also includes an optional wizard and a sample dataset that demonstrate the data lake capabilities.

Prerequisites

Register an application in Azure Active Directory (AAD) using the following steps, and note the application ID and the application secret key:

- Assign the following permissions:
  - Azure Data Lake
  - Azure Analysis Services

- Set the reply URL to `https://your-function-app-name.azurewebsites.net/.auth/login/aad/callback`, where `your-function-app-name` is the name you give the function app when deploying Yash Data Lake.
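Because the reply URL is derived entirely from the function app name, it can be generated instead of typed by hand. The Python sketch below does that. The `reply_url` helper and its simplified name check are illustrative assumptions, not part of the template; Azure's own App Service naming rules remain the authority.

```python
import re

# Simplified, assumed check for an App Service app name: letters, digits and
# hyphens, 1 to 60 characters, not starting or ending with a hyphen.
_APP_NAME = re.compile(r"^[A-Za-z0-9](?:[A-Za-z0-9-]{0,58}[A-Za-z0-9])?$")


def reply_url(function_app_name: str) -> str:
    """Build the AAD reply URL used by the function app's sign-in callback."""
    if not _APP_NAME.match(function_app_name):
        raise ValueError(f"not a plausible function app name: {function_app_name!r}")
    # App Service host names are lowercase <app-name>.azurewebsites.net.
    host = f"{function_app_name.lower()}.azurewebsites.net"
    return f"https://{host}/.auth/login/aad/callback"


if __name__ == "__main__":
    # "yash-datalake-func" is a hypothetical function app name.
    print(reply_url("yash-datalake-func"))
```

Paste the printed value into the reply URL field of the application registration.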

Add the following permissions to the application registered in AAD:

- Azure Analysis Services

- Azure Data Lake

- Windows Azure Active Directory

Add the application in the subscription's access control (IAM).
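Adding the application in an IAM blade creates a role assignment at a scope, and that scope is written as an Azure Resource Manager (ARM) resource ID. The sketch below builds such scope strings for the subscription and, using hypothetical resource group and resource names, for resources such as the Data Lake Store account (`Microsoft.DataLakeStore/accounts`) and Data Factory (`Microsoft.DataFactory/factories`) listed in the template's tags. The `iam_scope` helper is an assumption for illustration; it only formats strings and does not call Azure.

```python
import uuid
from typing import Optional


def iam_scope(subscription_id: str,
              resource_group: Optional[str] = None,
              provider_type: Optional[str] = None,
              resource_name: Optional[str] = None) -> str:
    """Return the ARM scope string at which a role assignment would be made."""
    # Subscription IDs are GUIDs; uuid.UUID raises ValueError otherwise and
    # normalizes the ID to lowercase hyphenated form.
    scope = f"/subscriptions/{uuid.UUID(subscription_id)}"
    if resource_group is None:
        return scope  # subscription-level scope
    scope += f"/resourceGroups/{resource_group}"
    if provider_type is None and resource_name is None:
        return scope  # resource-group-level scope
    if provider_type is None or resource_name is None:
        raise ValueError("provider_type and resource_name must be given together")
    # Resource-level scope, e.g. .../providers/Microsoft.DataLakeStore/accounts/<name>
    return f"{scope}/providers/{provider_type}/{resource_name}"


if __name__ == "__main__":
    sub = "00000000-0000-0000-0000-000000000000"  # placeholder subscription ID
    print(iam_scope(sub))
    print(iam_scope(sub, "yash-rg", "Microsoft.DataLakeStore/accounts", "yashadls"))
```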

Open the Elasticsearch function app to load data into Elasticsearch for the first time.

The following section provides the steps for running the quickstart.

Run and monitor the deployment

After you deploy the template, complete the following steps to run the quickstart:

- Go to the Outputs section of the ARM template deployment.

- Copy the URL from the Outputs section.

- Open the URL in a new browser tab.

- After the deployment succeeds, complete the following steps:
  1. Add the application and the user in the IAM of the ADL store, and grant them access under Access in the store's Data Explorer.
  2. Add the application and the user in the IAM of Azure Data Factory.

- After completing the steps above, return to the tab and follow the quickstart guide.
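Instead of copying the URL from the portal, it can be read from the deployment outputs, which ARM reports as JSON of the form `{"<name>": {"type": "String", "value": "..."}}`. The name of this template's URL output is not stated here, so the sketch below, built around a hypothetical `find_output_url` helper, either uses a name you pass in or returns the first string output whose value is an http(s) URL.

```python
import json
from typing import Optional
from urllib.parse import urlparse


def find_output_url(outputs_json: str, name: Optional[str] = None) -> str:
    """Return a URL-valued output from an ARM deployment's outputs JSON."""
    outputs = json.loads(outputs_json)
    # Check only the named output if given, else every output in order.
    candidates = [name] if name is not None else list(outputs)
    for key in candidates:
        entry = outputs.get(key)
        if not isinstance(entry, dict):
            continue
        value = entry.get("value")
        # Output types are reported with varying case (e.g. "String").
        if str(entry.get("type", "")).lower() != "string" or not isinstance(value, str):
            continue
        if urlparse(value).scheme in ("http", "https"):
            return value
    raise LookupError("no URL-valued output found")


if __name__ == "__main__":
    # Hypothetical outputs; real names and values depend on the deployment.
    sample = ('{"storageName": {"type": "String", "value": "yashstore"},'
              ' "quickstartUrl": {"type": "String",'
              ' "value": "https://example.azurewebsites.net/"}}')
    print(find_output_url(sample))
```

The outputs JSON can be obtained with, for example, `az deployment group show --resource-group <rg> --name <deployment> --query properties.outputs`.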

Tags: Microsoft.Resources/deployments, Microsoft.Web/serverfarms, Microsoft.Web/sites, siteextensions, extensions, Microsoft.Web/sites/config, Microsoft.EventHub/namespaces, EventHubs, ConsumerGroups, Microsoft.Storage/storageAccounts/providers/eventSubscriptions, Microsoft.DataFactory/factories, linkedservices, HttpServer, AzureStorage, SecureString, HDInsightOnDemand, LinkedServiceReference, datasets, DelimitedText, HttpServerLocation, Expression, AzureBlob, pipelines, ForEach, Copy, DelimitedTextSource, HttpReadSettings, DelimitedTextReadSettings, BlobSink, DatasetReference, HDInsightSpark, Microsoft.DataLakeStore/accounts, Microsoft.DataLakeAnalytics/accounts, Microsoft.Network/publicIPAddresses, Microsoft.Network/virtualNetworks, Microsoft.Network/networkInterfaces, Microsoft.Compute/virtualMachines, Microsoft.Network/networkSecurityGroups, Microsoft.Compute/virtualMachines/extensions, CustomScript, Microsoft.Storage/storageAccounts, Microsoft.StreamAnalytics/StreamingJobs, stream, JSON, Microsoft.ServiceBus/EventHub, Reference, Microsoft.Storage/Blob