Azure Cosmos DB – A polymorphic database for an expanding data universe


A discussion that comes up frequently in the data world today centers on comparisons between traditional databases based on relational theory, such as Oracle or SQL Server, and a more modern wave of platforms commonly referred to as “NoSQL” databases. Proponents of both types of database tend to get into disputes over which database is best. However, this can be a misguided point of contention. To understand why, it helps to trace back through history and reflect on how NoSQL databases first rose to prominence.

In the past 15 years, database technology has radically expanded beyond what could be described, to use a physics analogy, as a singularity in the initial conditions of our data universe: transactional processing using relational databases. This expansion has grown with improved technology and adoption, fueled by demand for the capability to process more data, as well as different kinds of data. There has been a revolution in the exchange of data, precipitated by the social media and mobile age. This has increased the popularity of transient, flexible storage media and interchange formats such as XML and JSON. While these became de facto standards in various forms of web publishing and messaging, methods of building applications have also evolved and matured. Object-oriented design in applications has increased in popularity, which has exposed the object-relational impedance mismatch. This mismatch has further constrained the way in which we can build and maintain applications using relational databases. In addition, we have started to store various kinds of unstructured data: log files, binary images, text, sensor data, and more. This has given rise to distributed computing architectures like Hadoop and Spark, which allow us to run big queries on large data sets without needing to apply structure, such as a schema, to them at design time. In short, the variety of data structures we now need to manage has changed dramatically.

These changing approaches to modelling data, in response to demands for greater flexibility, can be thought of as emerging trade-offs between structural integrity and agility or productivity when building applications that store any kind of data. This need to be more flexible has been characterized as a paradigm known as schema on read: the idea that data structures can be self-describing or semi-structured, and therefore the schema that applies meaning to the data can in some sense live within the consumer or client code, rather than being tightly coupled to the database at design time. This leaves databases free to be more flexible in how they ingest data.
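To make schema on read concrete, here is a minimal Python sketch. The records, field names, and the `read_contact` helper are hypothetical and exist only for illustration. The store accepts any JSON shape, and the consumer applies its own schema when it reads.

```python
import json

# Hypothetical raw records, stored as-is with no schema enforced at write time.
RAW_RECORDS = [
    '{"id": "1", "name": "Ada", "email": "ada@example.com"}',
    '{"id": "2", "name": "Grace", "phones": ["555-0100"]}',
    '{"id": "3", "full_name": "Alan Turing", "email": "alan@example.com"}',
]


def read_contact(raw: str) -> dict:
    """Apply the consumer's schema at read time (schema on read)."""
    doc = json.loads(raw)
    return {
        "id": doc["id"],
        # Tolerate both field names that producers have used over time.
        "name": doc.get("name") or doc.get("full_name", ""),
        # Missing fields get a default here instead of being rejected on write.
        "email": doc.get("email"),
        "phones": doc.get("phones", []),
    }


contacts = [read_contact(raw) for raw in RAW_RECORDS]
```

The trade-off is visible in `read_contact`: ingestion never fails on an unexpected shape, but every consumer now carries the responsibility for interpreting the data.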

In parallel with this shift in consumer demand for more flexible data structures, there has been a phenomenal increase in the volume and velocity of data we are handling in databases. This has given rise to the need to balance transactional integrity with physical availability, latency, and concurrency. Volumes of data being processed have become so large that traditional relational databases can sometimes struggle to offer the levels of overall performance that end users demand. In the case of ACID transactions, this has led to a common practice of relaxing the isolation element of ACID semantics in order to provide greater concurrency. In the case of availability and latency, this has given rise to distributed database architectures, which in turn require us to balance trade-offs between consistency (of replicas) and availability. To learn more, read about the CAP theorem and the PACELC theorem.

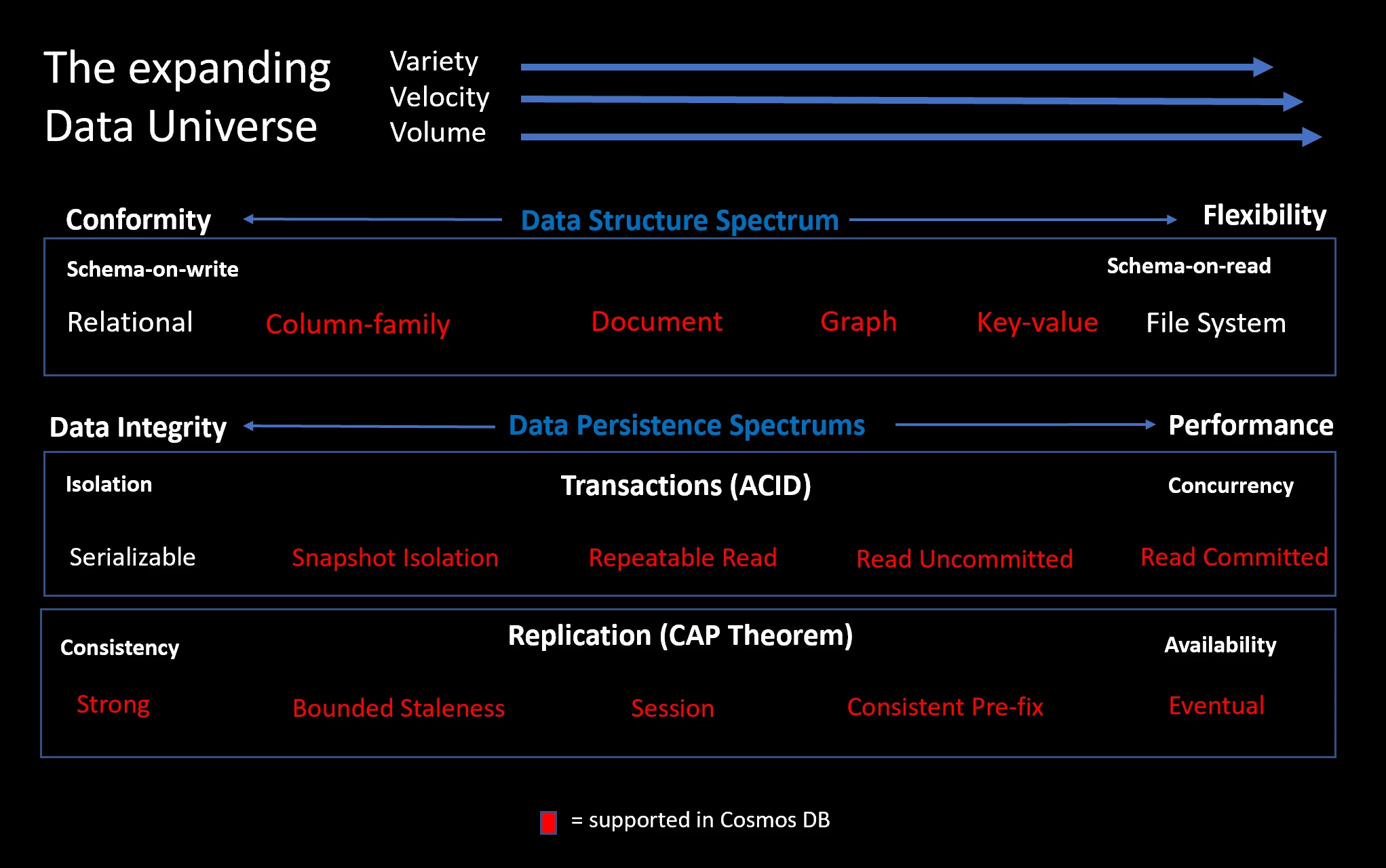

In the context of deciding which type of database engine to use, we now have an emerging set of spectrums for data storage and persistence in an “expanding data universe”. The diagram below illustrates this, and suggests where we might place some of the emergent paradigms that have been solidifying within them.

Ultimately these spectrums have emerged and solidified through the increasing variety, velocity, and volume of data that modern day applications need to handle, and the advances in technology to support them.

The isolation trade-offs in ACID databases have been known for some time, and the CAP and PACELC theorems have recently received a lot of press for exposing some of the trade-offs along the replication consistency spectrum in distributed databases. The emergence of a data structure spectrum is perhaps less discussed, but it is just as important for understanding the shifting paradigms in the database world. This proliferation of data paradigms means that, rather than asking which database is best, we should center our questions on the business use case and on where along these spectrums that use case would be optimally served. We now live in an “expanding data universe”, in which no single paradigm fits best for all data structures or data persistence scenarios.

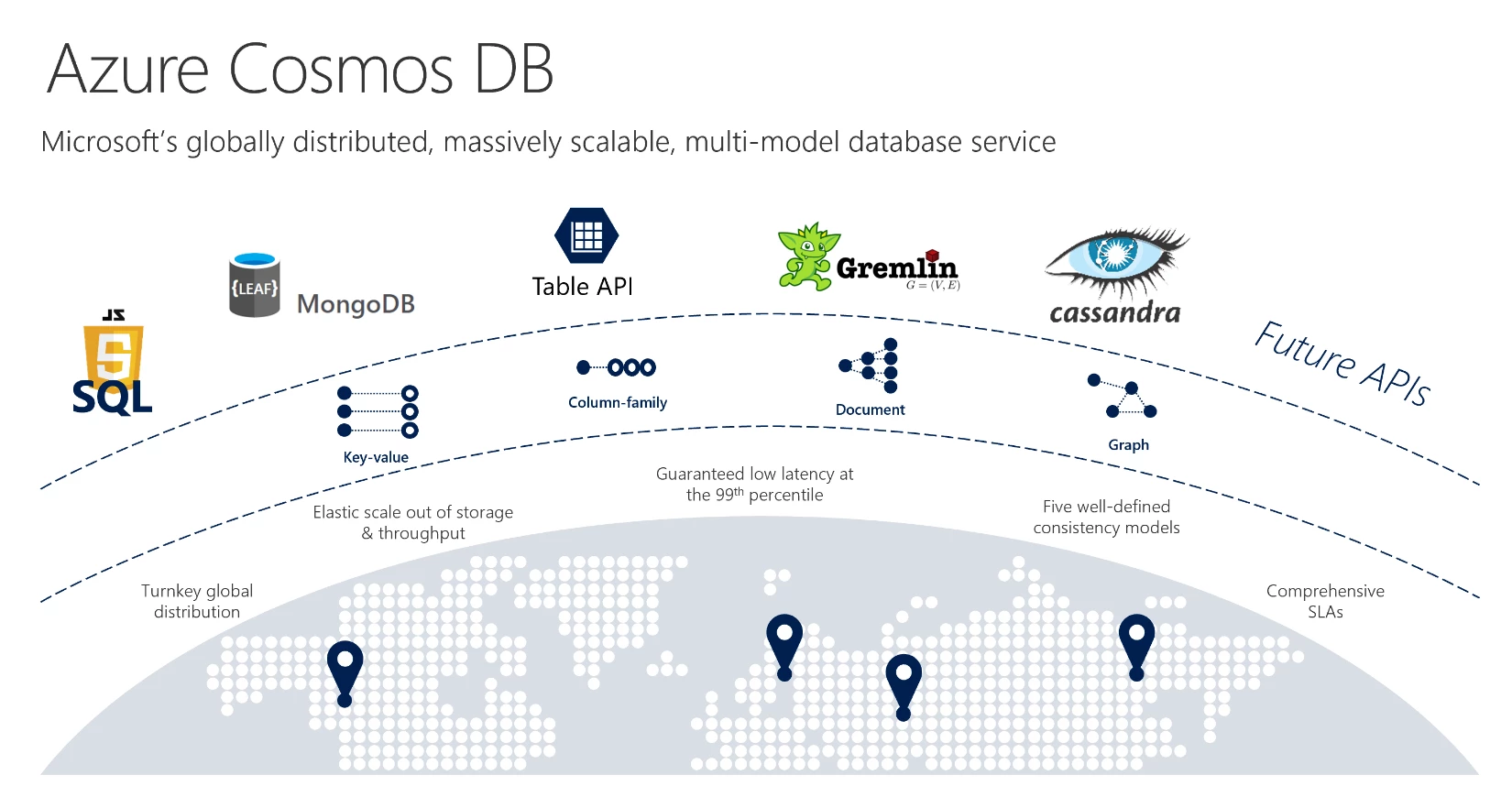

Of course, there is one database that covers more ground than others across these expanding and maturing paradigms: Microsoft’s polymorphic Azure Cosmos DB!

The data structure spectrum

On the data structure spectrum, Azure Cosmos DB provides a revolutionary common type system referred to as atom-record-sequence (ARS). This facilitates multiple data models at an API and wire protocol level, each corresponding to one of the data models shown in the earlier diagram. Although these models may seem unrelated, they conceptually occupy points along a spectrum, as each represents a different level of trade-off between applying structure and meaning to data at design time and at query time, or schema-on-write vs schema-on-read. From left to right in the diagram, the column-family model is provided through the Cassandra API, which is compatible with open source Apache Cassandra. For the document data model, users have a choice between the native SQL API and the Azure Cosmos DB for MongoDB API, which is compatible with open source MongoDB. For graph data, users can adopt the Gremlin API. Finally, for a key-value store, users can opt for the Table API.
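To illustrate how these data models differ in the amount of structure they expose, the following Python sketch shapes one hypothetical customer four ways using plain dictionaries. It is an illustration only: the field names and helper functions are invented, and it does not show how ARS or any Cosmos DB API represents data internally.

```python
import json

# Column-family: a row key mapping to named columns grouped into families.
column_family = {
    "42": {
        "profile": {"name": "Ada", "city": "London"},
        "orders": {"2024-01-03": "order-1001"},
    }
}

# Document: a self-describing, nested structure.
document = {
    "id": "42",
    "name": "Ada",
    "address": {"city": "London"},
    "orders": [{"id": "order-1001", "date": "2024-01-03"}],
}

# Graph: vertices and edges, with relationships stored as first-class data.
graph = {
    "vertices": [
        {"id": "42", "label": "customer", "name": "Ada"},
        {"id": "order-1001", "label": "order"},
    ],
    "edges": [{"from": "42", "to": "order-1001", "label": "placed"}],
}

# Key-value: an opaque value that the store only looks up by key.
key_value = {"customer:42": '{"name": "Ada", "orders": ["order-1001"]}'}


def orders_from_column_family(store: dict, customer_id: str) -> list:
    return list(store[customer_id]["orders"].values())


def orders_from_document(doc: dict) -> list:
    return [order["id"] for order in doc["orders"]]


def orders_from_graph(g: dict, customer_id: str) -> list:
    return [
        edge["to"]
        for edge in g["edges"]
        if edge["from"] == customer_id and edge["label"] == "placed"
    ]


def orders_from_key_value(store: dict, customer_id: str) -> list:
    # The client must parse the value; the store knows nothing about its shape.
    return json.loads(store[f"customer:{customer_id}"])["orders"]
```

All four helpers answer the same question, "which orders did customer 42 place?", but the work moves between the store and the client depending on how much structure the model makes visible.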

The data persistence spectrums

Similarly, on the data persistence spectrum, Azure Cosmos DB is one of the few databases in the world to offer multiple consistency abstractions for replication with turnkey enablement, and the configured level can be overridden per request. Azure Cosmos DB also offers the ability to perform ACID transactions with snapshot isolation.
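The idea of a default consistency level with a per-request override can be sketched in a few lines of Python. This is a conceptual model, not SDK code. The five level names follow the Azure Cosmos DB documentation, while the `effective_consistency` helper is hypothetical, and the rule that a request may only relax (never strengthen) the account default is an assumption to verify against the documentation for the SDK you use.

```python
from enum import IntEnum
from typing import Optional


class Consistency(IntEnum):
    """Consistency levels ordered from strongest (0) to weakest (4)."""

    STRONG = 0
    BOUNDED_STALENESS = 1
    SESSION = 2
    CONSISTENT_PREFIX = 3
    EVENTUAL = 4


def effective_consistency(
    account_default: Consistency,
    request_override: Optional[Consistency] = None,
) -> Consistency:
    """Return the level a single request would run at.

    Assumption: an override may only be equal to or weaker than the default.
    """
    if request_override is None:
        return account_default
    if request_override < account_default:
        raise ValueError(
            f"{request_override.name} is stronger than the account default "
            f"{account_default.name}"
        )
    return request_override
```

For example, `effective_consistency(Consistency.SESSION, Consistency.EVENTUAL)` yields `Consistency.EVENTUAL`, trading freshness for lower read latency on that one request, while asking for `Consistency.STRONG` on the same account raises an error under the stated assumption.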

As shown in the earlier diagram, all points along the data persistence spectrum for replication consistency are supported, and were in fact uniquely pioneered as well-defined abstractions in Cosmos DB. Read my blog post titled “Azure Cosmos DB – Tunable Consistency!” for a discussion of the benefits and use cases for each consistency setting, along with descriptions using real world examples. For a more in-depth exploration of the data consistency models we created for Cosmos DB, please take a look at our e-book.

Global distribution and SLAs

In addition to this wide coverage across all these burgeoning spectrums, Azure Cosmos DB is also one of the few turnkey globally distributed databases in the world. It enables ultra-low latencies for both reads and writes in geo-distributed applications through seamless replication and multi-master (multiple write region) capability, with automatic conflict resolution. Through a tightly controlled resource governance model, made possible by a cloud-native software architecture, it also offers financially backed SLAs across consistency, availability, latency, and throughput.
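When several regions accept writes, two regions can update the same item concurrently, so some rule has to pick a winner. One common rule is last-writer-wins, sketched below in Python. The `Write` type, its field names, and the tie-break on region name are hypothetical choices made for illustration; this is not the service's implementation.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Write:
    item_id: str
    region: str
    timestamp: int  # hypothetical conflict-resolution value; higher wins
    value: str


def resolve_last_writer_wins(conflicting: Sequence[Write]) -> Write:
    """Pick the winning version among concurrent writes to one item."""
    if not conflicting:
        raise ValueError("no writes to resolve")
    if len({w.item_id for w in conflicting}) != 1:
        raise ValueError("all writes must target the same item")
    # Highest timestamp wins; region name breaks ties so that every replica
    # reaches the same answer (the tie-break rule is an assumption).
    return max(conflicting, key=lambda w: (w.timestamp, w.region))


winner = resolve_last_writer_wins(
    [
        Write("cart-7", "West Europe", 1700000005, "2 items"),
        Write("cart-7", "East US", 1700000009, "3 items"),
    ]
)
```

Because the rule is deterministic, every region that sees the same set of conflicting writes converges on the same winner without coordinating with the others.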

Flexible options

Azure Cosmos DB does not remove the need to make informed decisions between different points along each spectrum, nor does it eliminate the need, in some cases, to choose a different database platform entirely, such as a relational database. However, as illustrated above, it does provide very wide support across a growing number of spectrum points in an “expanding data universe”, with turnkey convenience and efficiency. It is thus very strongly placed to give excellent coverage across a large number of real world business use cases.