For many customers, making outbound connections to the internet from their virtual networks is a fundamental requirement of their Azure solution architectures. Factors such as security, resiliency, and scalability are important to consider when designing how outbound connectivity will work for a given architecture. Luckily, Azure has just the solution for ensuring highly available and secure outbound connectivity to the internet: Virtual Network NAT. Virtual Network NAT, also known as NAT gateway, is a fully managed and highly resilient service that is easy to scale and specifically designed to handle large-scale and variable workloads.

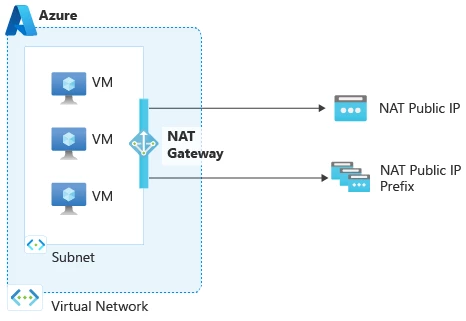

NAT gateway provides outbound connectivity to the internet through its attachment to a subnet and public IP address. NAT stands for network address translation, and as its name implies, when NAT gateway is associated to a subnet, all of the private IPs of a subnet’s resources (such as, virtual machines) are translated to NAT gateway’s public IP address. The NAT gateway public IP address then serves as the source IP address for the subnet’s resources. NAT gateway can be attached to a total of 16 IP addresses from any combination of public IP addresses and prefixes.

Figure 1: NAT gateway configuration with a subnet and a public IP address and prefix.

Customer is halted by connection timeouts while trying to make thousands of connections to the same destination endpoint

Customers in industries like finance, retail, or other scenarios that require leveraging large sets of data from the same source need a reliable and scalable method to connect to this data source.

In this blog, we’re going to walk through one such example that was made possible by leveraging NAT gateway.

Customer background

A customer collects a high volume of data to track, analyze, and ultimately make business decisions for one of their primary workloads. This data is collected over the internet from a service provider’s REST APIs, hosted in a data center they own. Because the data sets the customer is interested in may change daily, a recurring report can’t be relied on—they must request the data sets each day. Because of the volume of data, results are paginated and shared in chunks. This means that the customer must make tens of thousands of API requests for this one workload each day, typically taking from one to two hours. Each request correlates to its own separate HTTP connection, similar to their previous on-premises setup.

The starting architecture

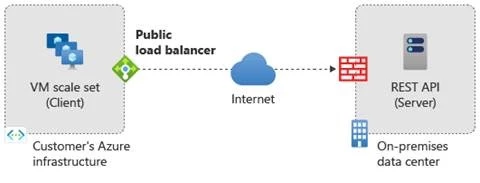

In this scenario, the customer connects to REST APIs in the service provider’s on-premises network from their Azure virtual network. The service provider’s on-premises network sits behind a firewall. The customer started to notice that sometimes one or more virtual machines waited for long periods of time for responses from the REST API endpoint. These connections waiting for a response would eventually time out and result in connection failures.

Figure 2: The customer sends traffic from their virtual machine scale set (VMSS) in their Azure virtual network over the internet to an on-premises service provider’s data center server (REST API) that is fronted by a firewall.

The investigation

Upon deeper inspection with packet captures, it was found that the service provider’s firewall was silently dropping incoming connections from their Azure network. Since the customer’s architecture in Azure was specifically designed and scaled to handle the volume of connections going to the service provider’s REST APIs for collecting the data they required, this seemed puzzling. So, what exactly was causing the issue?

The customer, the service provider, and Microsoft support engineers collectively investigated why connections from the Azure network were being sporadically dropped, and made a key discovery. Only connections coming from a source port and IP address that were recently used (on the order of 20 seconds) were dropped by the service provider’s firewall. This is because the service provider’s firewall enforces a 20-second cooldown period on new connections coming from the same source IP and port. Any connections using a new source port on the same public IP were not impacted by the firewall’s cooldown timer. From these findings, it was concluded that source network address translation (SNAT) ports from the customer’s Azure virtual network were being reused too quickly to make new connections to the service provider’s REST API. When ports were reused before the cooldown timer completed, the connection would timeout and ultimately fail. The customer was then confronted with the question of, how do we prevent ports from being reused too quickly to make connections to the service provider’s REST API? Since the firewall’s cooldown timer could not be changed, the customer had to work within its constraints.

NAT gateway to the rescue

Based on this data, NAT gateway was introduced into the customer’s setup in Azure as a proof of concept. With this one change, connection timeout issues became a thing of the past.

NAT gateway was able to resolve this customer’s outbound connectivity issue to the service provider’s REST APIs for two reasons. One, NAT gateway selects ports at random from a large inventory of ports. The source port selected to make a new connection has a high probability of being new and therefore will pass through the firewall without issue. This large inventory of ports available to NAT gateway is derived from the public IPs attached to it. Each public IP address attached to NAT gateway provides 64,512 SNAT ports to a subnet’s resources and up to 16 public IP addresses can be attached to NAT gateway. That means a customer can have over 1 million SNAT ports available to a subnet for making outbound connections. Secondly, source ports being reused by NAT gateway to connect to the service provider’s REST APIs are not impacted by the firewall’s 20-second cooldown timer. This is because the source ports are set on their own cooldown timer by NAT gateway for at least as long as the firewall’s cooldown timer before they can be reused. See our public article on NAT gateway SNAT port reuse timers to learn more.

Stay tuned for our next blog where we’ll do a deep dive into how NAT gateway solves for SNAT port exhaustion through not only its SNAT port reuse behavior but also through how it dynamically allocates SNAT ports across a subnet’s resources.

Learn more

Through the customer scenario above, we learned how NAT gateway’s selection and reuse of SNAT ports proves why it is Azure’s recommended option for connecting outbound to the internet. Because NAT gateway is not only able to mitigate risk of SNAT port exhaustion but also connection timeouts through its randomized port selection, NAT gateway ultimately serves as the best option when connecting outbound to the internet from your Azure network.

To learn more about NAT gateway, see Design virtual networks with NAT gateway.