When it comes to executing a machine learning project in an organization, data scientists, project managers, and business leads need to work together to deploy the best models to meet specific business objectives. A central early objective is to identify the key business variables that the analysis needs to predict. We refer to these variables as the model targets, and we use the metrics associated with them to determine the success of the project.

In this use case, available to the public on GitHub, we’ll see how a data scientist, project manager, and business lead at a retail grocer can leverage automated machine learning and Azure Machine Learning service to reduce product overstock. Azure Machine Learning service is a cloud service that you use to train, deploy, automate, and manage machine learning models, all at the broad scale that the cloud provides. Automated machine learning within Azure Machine Learning service is the process of taking training data with a defined target feature, and iterating through combinations of algorithms and feature selections to automatically select the best model for your data based on the training scores.

Excess stock quickly becomes a liquidity problem: it is not converted back to cash unless margins are cut through discounts and promotions or, even worse, it accumulates until it is sent to other channels such as outlets, which delays its sale. Identifying in advance which products will not reach the expected level of rotation, and controlling replenishment with stock cover that is aligned with sales forecasts, are key factors in helping retailers achieve a return on their inventory investment. Let’s see how the team goes about solving this problem and how automated machine learning enables the democratization of artificial intelligence across the company.

Identify the right business objective for the company

Strong sales and profits are the result of having the right product mix and level of inventory. Achieving this ideal mix requires having current and accurate inventory information. Manual processes not only take time, causing delays in producing current and accurate inventory information, but also increase the likelihood of errors. These delays and errors are likely to cause lost revenue due to inventory overstocks, understocks, and out-of-stocks.

Overstock inventory can also take valuable warehouse space and tie up cash that ought to be used to purchase new inventory. But selling it in liquidation mode can cause its own set of problems, such as tarnishing your reputation and cannibalizing sales of other current products.

The project manager, being the bridge between data scientists and business operations, reaches out to the business lead to discuss the possibility of using some of their internal historical sales data to solve their overstock inventory problem. The project manager and the business lead define project goals by asking and refining tangible questions that are relevant to the business objective.

There are two main tasks addressed in this stage:

- Define objectives: The project manager and the business lead need to identify the business problems and, most importantly, formulate questions that define the business goals that the data science techniques can target.

- Identify data sources: The project manager and data scientist need to find relevant data that helps answer the questions that define the objectives of the project.

Look for the right data and pipeline

It all starts with data. The project manager and the data scientist need to identify data sources that contain known examples of answers to the business problem. They look for the following types of data:

- Data that is relevant to the question. Do they have measures of the target and features that are related to the target?

- Data that is an accurate measure of their model target and the features of interest.

There are three main tasks that the data scientist needs to address in this stage:

- Ingest the data into the target analytics environment

- Explore the data to determine if the data quality is adequate to answer the question

- Set up a data pipeline to score new or regularly refreshed data

After setting up the process to move the data from the source locations to the target locations where it’s possible to run analytics operations, the data scientist starts working on raw data to produce a clean, high-quality data set whose relationship to the target variables is understood. Before training machine learning models, the data scientist needs to develop a sound understanding of the data. Data summaries and visualizations help audit the quality of the data and provide the information needed to process it before it’s ready for modeling.

Finally, the data scientist is also in charge of developing a solution architecture of the data pipeline that refreshes and scores the data regularly.

Forecast orange juice sales with automated machine learning

The data scientist and project manager decide to use automated machine learning for two reasons. First, it empowers customers, with or without data science expertise, to identify an end-to-end machine learning pipeline for any problem, achieving higher accuracy while spending far less of their time. Second, it enables a significantly larger number of experiments to be run, resulting in faster iteration toward production-ready intelligent experiences.

Let’s look at how their process using automated machine learning for orange juice sales forecasting delivers on these benefits.

After agreeing on the business objective and what type of internal and historical data should be used to meet that objective, the data scientist creates a workspace. This workspace is the top-level resource for the service and provides data scientists with a centralized place to work with all the artifacts they need to create. When a workspace is created in Azure Machine Learning service, the following Azure resources are added automatically (if they are regionally available):

- Azure Container Registry

- Azure Storage

- Azure Application Insights

- Azure Key Vault

To run automated machine learning, the data scientist also needs to create an Experiment. An Experiment is a named object in a workspace that represents a predictive task, the output of which is a trained model and a set of evaluation metrics for the model.

The data scientist is now ready to load the historical orange juice sales data from a CSV file into a plain pandas DataFrame. The time column in the CSV is called WeekStarting, so it is explicitly parsed into the datetime type.
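As a minimal sketch of this loading step, the snippet below parses WeekStarting as a datetime while reading the CSV. The in-memory sample, its values, and the Price and Advert column names are assumptions made for illustration; only WeekStarting, Store, Brand, and Quantity are named in this article.

```python
import io

import pandas as pd

# Hypothetical three-row sample standing in for the real CSV file.
# Price and Advert are assumed column names, and all values are made up.
sample_csv = io.StringIO(
    "WeekStarting,Store,Brand,Quantity,Price,Advert\n"
    "1990-06-14,2,brand_a,8256,1.59,0\n"
    "1990-06-21,2,brand_a,6144,1.69,0\n"
    "1990-06-14,2,brand_b,4480,3.17,1\n"
)

# parse_dates converts the WeekStarting column to a datetime dtype on load.
data = pd.read_csv(sample_csv, parse_dates=["WeekStarting"])

print(data.dtypes)
print(data.head())
```

With a real file, the `io.StringIO` object would simply be replaced by the path to the CSV.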

Each row in the DataFrame holds a quantity of weekly sales for an orange juice brand at a single store. The data also includes the sales price, a flag indicating if the orange juice brand was advertised in the store that week, and some customer demographic information based on the store location. For historical reasons, the data also includes the logarithm of the sales quantity.

The task is now to build a time series model for the Quantity column. It’s important to note that this data set comprises many individual time series, one for each unique combination of Store and Brand. To distinguish the individual time series, we define the grain: the columns whose values determine the boundaries between time series.
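One way to picture the grain is as a pandas group-by key. The sketch below counts the individual series and holds out the last few weeks of each one for testing. The toy data and the `split_last_n_by_grain` helper are hypothetical, and the original project may have split its data differently.

```python
import pandas as pd

# Hypothetical toy data: 2 stores x 2 brands x 6 weeks, with made-up quantities.
weeks = pd.date_range("1990-06-14", periods=6, freq="7D")
rows = [
    {"WeekStarting": week, "Store": store, "Brand": brand, "Quantity": 100 * store + i}
    for store in (1, 2)
    for brand in ("brand_a", "brand_b")
    for i, week in enumerate(weeks)
]
data = pd.DataFrame(rows)

time_column = "WeekStarting"
grain_columns = ["Store", "Brand"]

# Each unique (Store, Brand) combination is one time series.
n_series = data.groupby(grain_columns).ngroups
print(f"Number of individual time series: {n_series}")


def split_last_n_by_grain(df, n):
    """Hold out the last n time points of every series as the test set."""
    df = df.sort_values(grain_columns + [time_column])
    test = df.groupby(grain_columns).tail(n)
    train = df.drop(test.index)
    return train, test


train, test = split_last_n_by_grain(data, n=2)
print(f"Training rows: {len(train)}, testing rows: {len(test)}")
```

Splitting by time within each series, rather than at random, keeps every test point later than the training points of the same series.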

After splitting the data into a training set and a testing set for later forecast evaluation, the data scientist starts the modeling step. For forecasting tasks, automated machine learning uses pre-processing and estimation steps that are specific to time series. It undertakes the following pre-processing steps:

- Detect the time series sample frequency (e.g., hourly, daily, weekly) and create new records for absent time points to make the series regular. A regular time series has a well-defined frequency and has a value at every sample point in a contiguous time span.

- Impute missing values in the target via forward-fill, and in feature columns using median column values.

- Create grain-based features to enable fixed effects across different series.

- Create time-based features to assist in learning seasonal patterns.

- Encode categorical variables to numeric quantities.
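The steps above can be approximated by hand in pandas to see what each one does. This is an illustrative sketch only, not the service's internal implementation. The `preprocess` helper, the toy data, the Price column, and the choice of one-hot encoding for the grain columns are all assumptions.

```python
import numpy as np
import pandas as pd

# Hypothetical toy series with a missing week (1990-06-28) and a missing price.
raw = pd.DataFrame({
    "WeekStarting": pd.to_datetime(
        ["1990-06-14", "1990-06-21", "1990-07-05", "1990-07-12"]
    ),
    "Store": [2, 2, 2, 2],
    "Brand": ["brand_a"] * 4,
    "Quantity": [100.0, 120.0, 90.0, 110.0],
    "Price": [1.5, np.nan, 1.7, 1.6],
})


def preprocess(df, time_col, grain_cols, target_col, freq):
    pieces = []
    for keys, series in df.groupby(grain_cols):
        # Make the series regular: one row per time point at the given frequency.
        series = series.set_index(time_col).sort_index().asfreq(freq)
        # Restore the grain values on rows created for absent time points.
        for col, key in zip(grain_cols, keys):
            series[col] = key
        # Forward-fill the target within the series.
        series[target_col] = series[target_col].ffill()
        pieces.append(series)
    out = pd.concat(pieces).rename_axis(time_col).reset_index()

    # Impute numeric feature columns with the column median.
    skip = set(grain_cols) | {time_col, target_col}
    for col in [c for c in out.columns if c not in skip]:
        if pd.api.types.is_numeric_dtype(out[col]):
            out[col] = out[col].fillna(out[col].median())

    # Time-based features that can help a model learn seasonal patterns.
    out["month"] = out[time_col].dt.month
    out["week_of_year"] = out[time_col].dt.isocalendar().week.astype(int)

    # One-hot encode the grain columns: a simple stand-in for grain-based
    # features and for encoding categorical variables as numbers.
    return pd.get_dummies(out, columns=grain_cols)


clean = preprocess(raw, "WeekStarting", ["Store", "Brand"], "Quantity", freq="7D")
print(clean)
```

In this toy example the frequency is passed in explicitly, whereas the list above describes it as being detected from the data.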

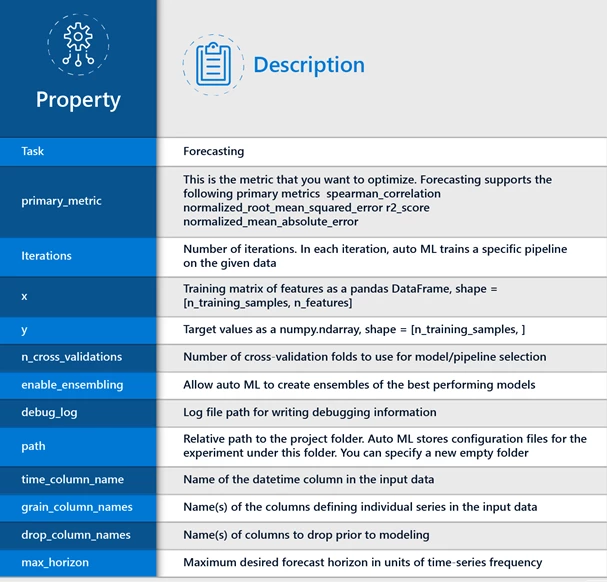

The AutoMLConfig object defines the settings and data for an automated machine learning training job. Below is a summary of automated machine learning configuration parameters that were used for training the orange juice sales forecasting model:
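As a rough illustration of what such a configuration might contain, the settings can be collected in a plain dictionary. Every value below is hypothetical and is not taken from the original summary. The parameter names are assumptions based on older versions of the Azure Machine Learning Python SDK and may differ in the version you use, so check them against the SDK reference.

```python
# Hypothetical settings for a forecasting job. Parameter names are assumed
# from older Azure Machine Learning SDK versions and may have been renamed.
time_series_settings = {
    "time_column_name": "WeekStarting",        # the datetime column
    "grain_column_names": ["Store", "Brand"],  # columns that separate the series
    "max_horizon": 20,                         # assumed number of periods to forecast
}

automl_settings = {
    "task": "forecasting",
    "primary_metric": "normalized_root_mean_squared_error",
    "iterations": 10,          # assumed number of pipelines to try
    "n_cross_validations": 3,  # assumed number of validation folds
    **time_series_settings,
}

# With the SDK installed, settings like these would typically be passed as
# keyword arguments to AutoMLConfig together with the training data.
for name, value in automl_settings.items():
    print(f"{name}: {value}")
```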

Visit GitHub for more information on forecasting. Each iteration runs within the experiment, which stores the serialized pipelines from the automated machine learning iterations. The data scientist can then retrieve the pipeline with the best performance on the validation data set.
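The idea of trying several pipelines and keeping the one that scores best on validation data can be sketched in a few lines. This is a simplified illustration, not how the service searches for pipelines. The three candidate forecasters, the toy numbers, and the `select_best` helper are hypothetical, and the metric is assumed to be RMSE divided by the range of the actual values.

```python
import numpy as np


def normalized_rmse(actual, predicted):
    """RMSE divided by the range of the actual values (assumed definition)."""
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    rmse = np.sqrt(np.mean((actual - predicted) ** 2))
    return rmse / (actual.max() - actual.min())


# Hypothetical candidates: each maps a history and a horizon to a forecast.
def last_value(history, horizon):
    return np.repeat(history[-1], horizon)


def overall_mean(history, horizon):
    return np.repeat(np.mean(history), horizon)


def linear_trend(history, horizon):
    t = np.arange(len(history))
    slope, intercept = np.polyfit(t, history, 1)
    future_t = np.arange(len(history), len(history) + horizon)
    return slope * future_t + intercept


candidates = {
    "last_value": last_value,
    "overall_mean": overall_mean,
    "linear_trend": linear_trend,
}


def select_best(candidates, train, validation):
    """Score every candidate on the validation data and return the best one."""
    scores = {
        name: normalized_rmse(validation, forecast(train, len(validation)))
        for name, forecast in candidates.items()
    }
    best_name = min(scores, key=scores.get)  # lower error is better
    return best_name, scores


# Made-up series with a steady upward trend.
train = np.array([10.0, 12.0, 14.0, 16.0, 18.0, 20.0])
validation = np.array([22.0, 24.0, 26.0])

best_name, scores = select_best(candidates, train, validation)
print(f"Best candidate: {best_name}")
for name, score in sorted(scores.items(), key=lambda item: item[1]):
    print(f"  {name}: {score:.4f}")
```

On this made-up trending series the linear trend candidate wins, because the other two cannot extrapolate beyond values seen in training.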

Once the evaluation has been performed, the data scientist, project manager, and business lead meet again to review the forecasting results. It’s the project manager and business lead’s job to make sense of the outputs and choose practical steps based on those results. The business lead needs to confirm that the best model and pipeline meet the business objective and that the machine learning solution answers the questions accurately enough to justify deploying the system to production for use by their internal sales forecasting application.

Microsoft invests in Automated Machine Learning

Automated machine learning is based on a breakthrough from the Microsoft Research division. The approach combines ideas from collaborative filtering and Bayesian optimization to search an enormous space of possible machine learning pipelines intelligently and efficiently. It’s essentially a recommender system for machine learning pipelines. Similar to how streaming services recommend movies for users, automated machine learning recommends machine learning pipelines for data sets.

It’s now offered as part of the Azure Machine Learning service. As you’ve seen here, automated machine learning empowers customers, with or without data science expertise, to identify an end-to-end machine learning pipeline for any problem and save time while increasing accuracy. It also enables a larger number of experiments to be run and faster iterations. How could automated machine learning benefit your organization? How could your team work more closely on using machine learning to meet your business objectives?

Resources

- Learn more about Azure Machine Learning service

- Learn more about automated machine learning

- Get started with a free trial of the Azure Machine Learning service