You can’t have a discussion on cloud computing lately without talking about containers. Organizations across all business segments, from banks and major financial service firms to e-commerce sites, want to understand what containers are, what they mean for applications in the cloud, and how to best use them for their specific development and IT operations scenarios.

From the basics of what containers are and how they work, to the scenarios they’re being most widely used for today, to emerging trends supporting “containerization”, I thought I’d share my perspectives to better help you understand how to best embrace this important cloud computing development to more seamlessly build, test, deploy and manage your cloud applications.

Containers Overview

In abstract terms, all of computing is based upon running some “function” on a set of “physical” resources, like processor, memory, disk, network, etc., to accomplish a task, whether a simple math calculation, like 1+1, or a complex application spanning multiple machines, like Exchange. Over time, as the physical resources became more and more powerful, often the applications did not utilize even a fraction of the resources provided by the physical machine. Thus “virtual” resources were created to simulate underlying physical hardware, enabling multiple applications to run concurrently – each utilizing fractions of the physical resources of the same physical machine.

We commonly refer to these simulation techniques as virtualization. While many people immediately think virtual machines when they hear virtualization, that is only one implementation of virtualization. Virtual memory, a mechanism implemented by all general purpose operating systems (OSs), gives applications the illusion that a computer’s memory is dedicated to them and can even give an application the experience of having access to much more RAM than the computer has available.

Containers are another type of virtualization, also referred to as OS Virtualization. Today’s containers on Linux create the perception of a fully isolated and independent OS to the application. To the running container, the local disk looks like a pristine copy of the OS files, the memory appears only to hold files and data of a freshly-booted OS, and the only thing running is the OS. To accomplish this, the “host” machine that creates a container does some clever things.

The first technique is namespace isolation. Namespaces include all the resources that an application can interact with, including files, network ports and the list of running processes. Namespace isolation enables the host to give each container a virtualized namespace that includes only the resources that it should see. With this restricted view, a container can’t access files not included in its virtualized namespace regardless of their permissions because it simply can’t see them. Nor can it list or interact with applications that are not part of the container, which fools it into believing that it’s the only application running on the system when there may be dozens or hundreds of others.
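To make the idea of a restricted view concrete, here is a minimal toy model in Python. It is not how Linux namespaces are implemented; the `Host` and `ContainerView` classes and the file and process names are hypothetical and exist only for illustration. The point it shows is that a container's view is a filtered subset of the host, so anything outside that subset is invisible rather than merely forbidden.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Toy host: every file and process that exists on the machine."""
    files: set[str] = field(default_factory=set)
    processes: set[str] = field(default_factory=set)


@dataclass
class ContainerView:
    """A restricted view of the host; only the listed names are visible."""
    host: Host
    visible_files: set[str]
    visible_processes: set[str]

    def list_processes(self) -> list[str]:
        # The container sees only host processes that are in its namespace.
        return sorted(self.host.processes & self.visible_processes)

    def can_open(self, path: str) -> bool:
        # A file outside the namespace does not exist from the inside,
        # so its permissions never come into play.
        return path in self.visible_files and path in self.host.files


# Hypothetical host with two applications running side by side.
host = Host(
    files={"/etc/hosts", "/srv/app-a/data", "/srv/app-b/data"},
    processes={"init", "app-a", "app-b"},
)
container_a = ContainerView(host, {"/etc/hosts", "/srv/app-a/data"}, {"app-a"})

print(container_a.list_processes())             # ['app-a']
print(container_a.can_open("/srv/app-b/data"))  # False
```

From inside `container_a`, the process list contains only `app-a`, even though the host is running three processes.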

For efficiency, many of the OS files, directories and running services are shared between containers and projected into each container’s namespace. Only when an application makes changes to its container, for example by modifying an existing file or creating a new one, does the container get distinct copies from the underlying host OS, and then only of the portions changed, using Docker’s “copy-on-write” optimization. This sharing is part of what makes deploying multiple containers on a single host extremely efficient.
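The sharing described above can be sketched with Python's standard `collections.ChainMap`, which sends writes to its first mapping and falls back to later mappings on reads. This is only an analogy at the level of whole files, not Docker's actual storage driver, and the file names and contents are hypothetical.

```python
from collections import ChainMap

# Read-only base layer shared by every container (hypothetical contents).
base_image = {"/etc/os-release": "base OS", "/bin/sh": "shell binary"}


def new_container(base: dict) -> ChainMap:
    # An empty private layer sits on top of the shared base layer.
    # Reads fall through to the base; writes land in the private layer.
    return ChainMap({}, base)


c1 = new_container(base_image)
c2 = new_container(base_image)

c1["/etc/os-release"] = "patched by c1"  # modifies an existing file
c1["/tmp/scratch"] = "new file"          # creates a new file

print(c1["/etc/os-release"])  # patched by c1
print(c2["/etc/os-release"])  # base OS
print(len(c1.maps[0]))        # 2
print(len(c2.maps[0]))        # 0
```

Only the two entries that `c1` changed occupy private space. `c2` still holds nothing of its own, and the base layer is untouched.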

Second, the host controls how much of the host’s resources can be used by a container. Governing resources like CPU, RAM and network bandwidth ensures that a container gets the resources it expects and that it doesn’t impact the performance of other containers running on the host. For example, a container can be constrained so that it cannot use more than 10% of the CPU. That means that even if the application within it tries, it can’t access the other 90%, which the host can assign to other containers or keep for its own use. Linux implements such governance using a technology called “cgroups.” Resource governance isn’t required in cases where containers placed on the same host are cooperative, allowing for standard OS dynamic resource assignment that adapts to changing demands of application code.
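As a rough illustration of the 10% example, the sketch below computes the value that would express such a cap in the cgroup v2 `cpu.max` file, whose format is a quota and a period in microseconds. I am assuming cgroup v2 here and interpreting the cap as a fraction of a single CPU's time; the helper name `cpu_max_setting` is hypothetical, and the code only formats the string rather than writing it to a real cgroup.

```python
def cpu_max_setting(cpu_fraction: float, period_us: int = 100_000) -> str:
    """Return a cgroup v2 `cpu.max` value capping usage at a fraction of one CPU."""
    if not 0 < cpu_fraction <= 1:
        raise ValueError("cpu_fraction must be in (0, 1]")
    # The quota is how many microseconds of CPU time the group may
    # consume in each period; the kernel throttles it beyond that.
    quota_us = round(cpu_fraction * period_us)
    return f"{quota_us} {period_us}"


print(cpu_max_setting(0.10))  # 10000 100000
```

With that setting the container may run for 10 ms out of every 100 ms, no matter how hard the application inside it tries to consume more.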

The combination of instant startup that comes from OS virtualization and reliable execution that comes from namespace isolation and resource governance makes containers ideal for application development and testing. During the development process, developers can quickly iterate. Because its environment and resource usage are consistent across systems, a containerized application that works on a developer’s system will work the same way on a different production system. The instant start and small footprint also benefit cloud scenarios, since applications can scale out quickly and many more application instances can fit onto a machine than if they were each in a VM, maximizing resource utilization.

Comparing a similar scenario that uses virtual machines with one that uses containers highlights the efficiency gained by the sharing. In the example shown below, the host machine has three VMs. In order to provide the applications in the VMs complete isolation, they each have their own copies of OS files, libraries and application code, along with a full in-memory instance of an OS. Starting a new VM requires booting another instance of the OS, even if the host or existing VMs already have running instances of the same version, and loading the application libraries into memory. Each application VM pays the cost of the OS boot and the in-memory footprint for its own private copies, which also limits the number of application instances (VMs) that can run on the host.

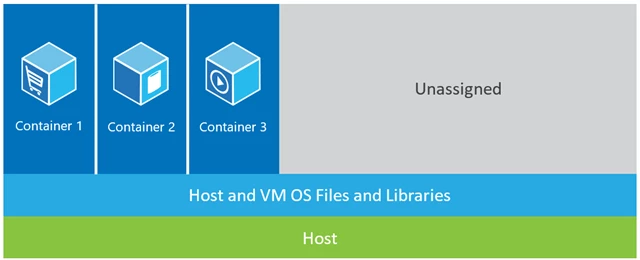

The figure below shows the same scenario with containers. Here, containers simply share the host operating system, including the kernel and libraries, so they don’t need to boot an OS, load libraries or pay a private memory cost for those files. The only incremental space they take is any memory and disk space necessary for the application to run in the container. While the application’s environment feels like a dedicated OS, the application deploys just like it would onto a dedicated host. The containerized application starts in seconds and many more instances of the application can fit onto the machine than in the VM case.
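To put rough numbers on the density difference between the VM scenario and the container scenario, here is a back-of-the-envelope calculation. Every figure in it is a made-up assumption chosen for easy arithmetic, not a measurement, and `max_instances` is a hypothetical helper.

```python
def max_instances(host_mem_mb: int, host_os_mb: int,
                  per_instance_os_mb: int, app_mb: int) -> int:
    """How many application instances fit in memory on one host."""
    usable = host_mem_mb - host_os_mb
    return max(usable // (per_instance_os_mb + app_mb), 0)


# Hypothetical host: 16 GB of RAM, 1 GB used by the host OS itself.
# Each VM carries a private 1 GB in-memory OS; a container carries none.
vms = max_instances(16_384, 1_024, per_instance_os_mb=1_024, app_mb=256)
containers = max_instances(16_384, 1_024, per_instance_os_mb=0, app_mb=256)

print(vms)         # 12
print(containers)  # 60
```

The ratio depends entirely on how large the private OS footprint is relative to the application, which is why small applications see the biggest gains from sharing.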

Docker’s Appeal

The concept of namespace isolation and resource governance related to OSs has been around for a long time, going back to BSD Jails, Solaris Zones and even the basic UNIX chroot (change root) mechanism. However, by creating a common toolset, packaging model and deployment mechanism, Docker greatly simplified the containerization and distribution of applications that can then run anywhere on any Linux host. This ubiquitous technology not only simplifies management by offering the same management commands against any host, it also creates a unique opportunity for seamless DevOps.

From a developer’s desktop to a testing machine to a set of production machines, a Docker image can be created that will deploy identically across any environment in seconds. This story has created a massive and growing ecosystem of applications packaged in Docker containers, with DockerHub, the public containerized-application registry that Docker maintains, currently publishing more than 180,000 applications in the public community repository. Additionally, to guarantee the packaging format remains universal, Docker recently organized the Open Container Initiative (OCI), aiming to ensure container packaging remains an open and foundation-led format, with Microsoft as one of the founding members.

Windows Server and Containers

To bring the power of containers to all developers, last October we announced plans to implement container technology in Windows Server. To give developers who use Linux Docker containers the exact same experience on Windows Server, we also announced our partnership with Docker to extend the Docker API and toolset to support Windows Server Containers. For us, this was an opportunity to benefit all of our customers, both Linux and Windows alike. As I recently demonstrated at DockerCon, we are excited to create a unified and open experience for developers and system administrators to deploy their containerized applications comprising both Windows Server and Linux. We are developing this in the open Docker GitHub repository.

In Windows Server 2016, we will be releasing two flavors of containers, both of which will be deployable using Docker APIs and the Docker client: Windows Server Containers and Hyper-V Containers. Linux containers require Linux APIs from the host kernel and Windows Server Containers require the Windows APIs of a host Windows kernel, so you cannot run Linux containers on a Windows Server host or a Windows Server Container on a Linux host. However, the same Docker client can manage all of these containers, and while you can’t run a packaged Windows container on Linux, a Windows container package works with Windows Server Containers and Hyper-V Containers because they both utilize the Windows kernel.

There’s the question of when you might want to use a Windows Server Container versus a Hyper-V Container. While the sharing of the kernel enables fast start-up and efficient packing, Windows Server Containers share the OS with the host and each other. The amount of shared data and APIs means that there may be ways, whether by design or because of an implementation flaw in the namespace isolation or resource governance, for an application to escape its container or deny service to the host or other containers. Local elevation of privilege vulnerabilities that operating system vendors patch are an example of flaws that an application could leverage.

Thus, Windows Server Containers are great for scenarios where the OS trusts the applications that will be hosted on it, and all the applications also trust each other. In other words, the host OS and applications are within the same trust boundary. That’s true for many multi-container applications, applications that make up a shared service of a larger application, and sometimes applications from the same organization.

There are cases where you may want to run applications from different trust boundaries on the same host, however. One example is if you are implementing a multitenant PaaS or SaaS offering where you allow your customers to supply their own code to extend the functionality of your service. You don’t want one customer’s code to interfere with your service or gain access to the data of your other customers, but you need a container that is more agile than a VM and that takes advantage of the Docker ecosystem. We have several examples of such services in Azure, like Azure Automation and Machine Learning. We call the environment they run in “hostile multi-tenancy,” since we have to assume that there are customers that deliberately seek to subvert the isolation. In these types of environments, the isolation of Windows Server Containers may not provide sufficient assurance, which motivated our development of Hyper-V Containers.

Hyper-V Containers take a slightly different approach to containerization. To create more isolation, Hyper-V Containers each have their own copy of the Windows kernel and have memory assigned directly to them, a key requirement of strong isolation. We use Hyper-V for CPU, memory and IO isolation (like network and storage), delivering the same level of isolation found in VMs. As with VMs, the host only exposes a small, constrained interface to the container for communication and sharing of host resources. This very limited sharing means Hyper-V Containers have a bit less efficiency in startup times and density than Windows Server Containers, but they provide the isolation required to allow untrusted and “hostile multi-tenant” applications to run on the same host.

So aren’t Hyper-V Containers the same as VMs? Besides the optimizations to the OS that result from it being fully aware that it’s in a container and not a physical machine, Hyper-V Containers will be deployed using the magic of Docker and can use the exact same packages that run in Windows Server Containers. Thus, the tradeoff of level of isolation versus efficiency/agility is a deploy-time decision, not a development-time decision – one made by the owner of the host.
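One way to picture the deploy-time decision: the same image name is passed to the Docker client, and only a launch option differs. In the Docker client on Windows this option is the `--isolation` flag of `docker run`, with `process` corresponding to a Windows Server Container and `hyperv` to a Hyper-V Container. Treat the flag as an assumption relative to this post, since the final tooling may differ. The helper `docker_run_command` and the image name are hypothetical, and the sketch only builds the argument list without running anything.

```python
def docker_run_command(image: str, isolation: str) -> list[str]:
    """Build a `docker run` argument list for the chosen isolation level."""
    if isolation not in {"process", "hyperv"}:
        raise ValueError("isolation must be 'process' or 'hyperv'")
    return ["docker", "run", "--detach", f"--isolation={isolation}", image]


image = "example/web-frontend"  # hypothetical image name

shared_kernel = docker_run_command(image, "process")  # Windows Server Container
own_kernel = docker_run_command(image, "hyperv")      # Hyper-V Container

print(" ".join(shared_kernel))
print(" ".join(own_kernel))
```

The developer who built the image does nothing differently in either case; the host owner picks the isolation level when starting the container.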

Orchestration

As they’ve adopted containers, customers have discovered a challenge. When they deploy dozens, hundreds or thousands of containers that make up an application, tracking and managing the deployment requires advances in both management and orchestration. Container orchestration has become an exciting new area of innovation with multiple options and solutions. Container orchestrators are assigned a pool of servers (VMs or bare metal servers), commonly called a “cluster,” and “schedule” deployment of containers onto those servers. Some orchestrators go further and configure networking between containers on different servers, while some include load balancing, container name resolution, rolling updates and more. Some are extensible and enable application frameworks to bring these additional capabilities.
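To give a feel for what “scheduling” means, here is a deliberately simple placement sketch: each container goes to whichever server in the cluster currently has the most free memory. Real orchestrators weigh many more factors, and this is not the algorithm of any product named in this post. The `schedule` function, the server names and the memory sizes are all hypothetical.

```python
def schedule(containers: dict[str, int], servers: dict[str, int]) -> dict[str, str]:
    """Place each container (name -> MB needed) on the server with the most free MB."""
    free = dict(servers)  # copy so the caller's cluster description is untouched
    placement: dict[str, str] = {}
    # Place the largest containers first so they are not squeezed out later.
    for name, need in sorted(containers.items(), key=lambda kv: -kv[1]):
        target = max(free, key=free.get)
        if free[target] < need:
            raise RuntimeError(f"no server can fit {name}")
        free[target] -= need
        placement[name] = target
    return placement


cluster = {"node-1": 1024, "node-2": 1024}
app = {"web": 512, "queue": 256, "db": 768}

print(schedule(app, cluster))
# {'db': 'node-1', 'web': 'node-2', 'queue': 'node-2'}
```

Everything beyond this placement step, such as cross-server networking, load balancing and rolling updates, is where the orchestrators differ most.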

While a deeper discussion of orchestration solutions might require a whole other post, here’s a quick outline of a few of the technologies, all supported on top of Azure:

- Docker Compose enables the definition of simple multi-container applications. Docker Swarm manages and organizes Docker containers across multiple hosts via the same API used by a single Docker host. Swarm and Compose come together to offer a complete orchestration technology built by Docker.

- Mesos is an orchestration and management solution that actually predates Docker, but has recently added support for Docker into its built-in application framework Marathon. It is an open and community-driven solution built by Mesosphere. We recently demonstrated integration with Mesos and DCOS on Azure.

- Kubernetes is an open-source solution built by Google offering container grouping into “Pods” for management across multiple hosts. This is also supported on Azure.

- Deis is an open source PaaS platform to deploy and manage applications integrated with Docker. We have an easy way to deploy a Deis cluster on Azure.

We are excited to have support in Azure for most of the popular orchestration solutions and expect to get more engaged in these communities as we see interest and usage increase over time.

Microservices

The more immediately lucrative usage for containers has been focused on simplifying DevOps with easy developer-to-test-to-production flows for services deployed in the cloud or on-premises. But there is another growing scenario where containers are becoming very compelling. Microservices is an approach to application development where every part of the application is deployed as a fully self-contained component, called a microservice, that can be individually scaled and updated. For example, the subsystem of an application that receives requests from the public Internet might be separate from the subsystem that puts the requests onto a queue for a backend subsystem to read and drop into a database. When the application is constructed using microservices, each subsystem is a microservice. In a dev/test environment on a single box, the microservices might each have one instance, but when run in production each can scale out to different numbers of instances across a cluster of servers depending on their resource demands as customer request levels rise and fall. If different teams produce them, the teams can also independently update them.
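The independent scaling described above can be sketched as a small calculation: each microservice gets as many instances as its own load requires, regardless of what the others need. The service names, request rates and per-instance capacities below are hypothetical numbers chosen for illustration.

```python
import math


def instances_needed(requests_per_sec: float, capacity_per_instance: float,
                     minimum: int = 1) -> int:
    """Instances required to serve the load, never fewer than `minimum`."""
    return max(minimum, math.ceil(requests_per_sec / capacity_per_instance))


# Hypothetical production load and per-instance capacity, in requests/second.
load = {"frontend": 900, "queue-writer": 300, "db-writer": 120}
capacity = {"frontend": 200, "queue-writer": 150, "db-writer": 100}

plan = {name: instances_needed(load[name], capacity[name]) for name in load}
print(plan)  # {'frontend': 5, 'queue-writer': 2, 'db-writer': 2}

# On a dev/test box with no traffic, each service still runs one instance.
dev_plan = {name: instances_needed(0, capacity[name]) for name in load}
print(dev_plan)  # {'frontend': 1, 'queue-writer': 1, 'db-writer': 1}
```

Rerunning the calculation as request levels rise and fall changes each service's instance count without touching the others.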

Microservices is not a new approach to programming, nor is it tied explicitly to containers, but the benefits of Docker containers are magnified when applied to a complex microservice-based application. Agility means that a microservice can quickly scale out to meet increased load, the namespace and resource isolation of containers prevents one microservice instance from interfering with others, and use of the Docker packaging format and APIs unlocks the Docker ecosystem for the microservice developer and application operator. With a good microservice architecture, customers can solve the management, deployment, orchestration and patching needs of a container-based service with reduced risk of availability loss while maintaining high agility.

Today there are several solutions for building application models using microservices and we partner with many of them on Azure. Docker Compose and Mesosphere Marathon are two examples. Shortly before //build, we announced and then released a developer preview of Service Fabric, our own microservices application platform. It includes a rich collection of microservice lifecycle management capabilities, including rolling update with rollback, partitioning, placement constraints and more. Notably, in addition to stateless microservices, it supports stateful microservices, which are differentiated by the fact that the microservice manages data that’s co-resident with it on the same server. In fact, Service Fabric is the only PaaS platform that offers stateful microservices with state management and replication frameworks built directly into its cluster management. We developed this application model for internal services to be able to scale to hyperscale with stateful replication, and services like Cortana, Azure SQL Database and Skype for Business are built on it. We will release a public preview of Service Fabric later this year, but in the meantime you can check out more on Service Fabric here.
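For readers unfamiliar with the term, “rolling update with rollback” can be illustrated with a toy sketch: instances are upgraded one at a time, and if a health check fails partway through, the original versions are restored. This is a generic illustration and not Service Fabric's API or its actual upgrade logic; `rolling_update` and the health-check callbacks are hypothetical.

```python
from typing import Callable


def rolling_update(instances: list[str], new_version: str,
                   healthy: Callable[[list[str]], bool]) -> list[str]:
    """Upgrade one instance at a time; restore the originals if a check fails."""
    original = list(instances)
    current = list(instances)
    for i in range(len(current)):
        current[i] = new_version
        if not healthy(current):
            return original  # rollback: the service keeps its old versions
    return current


fleet = ["v1", "v1", "v1"]

# A health check that always passes lets the update complete.
print(rolling_update(fleet, "v2", lambda state: True))
# ['v2', 'v2', 'v2']

# A health check that fails once two instances run v2 triggers a rollback.
print(rolling_update(fleet, "v2", lambda state: state.count("v2") < 2))
# ['v1', 'v1', 'v1']
```

Because only one instance changes at a time, the rest of the fleet keeps serving requests while the update is in progress.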

I hope the above helps paint a useful picture of Microsoft’s container vision, the most common container use cases, and also some of the emerging industry trends around containers. As always, we’d love your feedback, particularly if there are any areas where you’d like to learn more.