Artificial Intelligence (AI) has emerged as one of the most powerful forces in digital transformation. At Microsoft, we believe developers, data scientists and enterprises should have easy access to the power of AI so they can build systems that augment human ingenuity in unique and differentiated ways. Today, at Microsoft Build 2018, as we engage in conversations about digital transformation with over a million developers, customers and partners, I am pleased to share some of our latest and most exciting innovations in the Azure AI Platform.

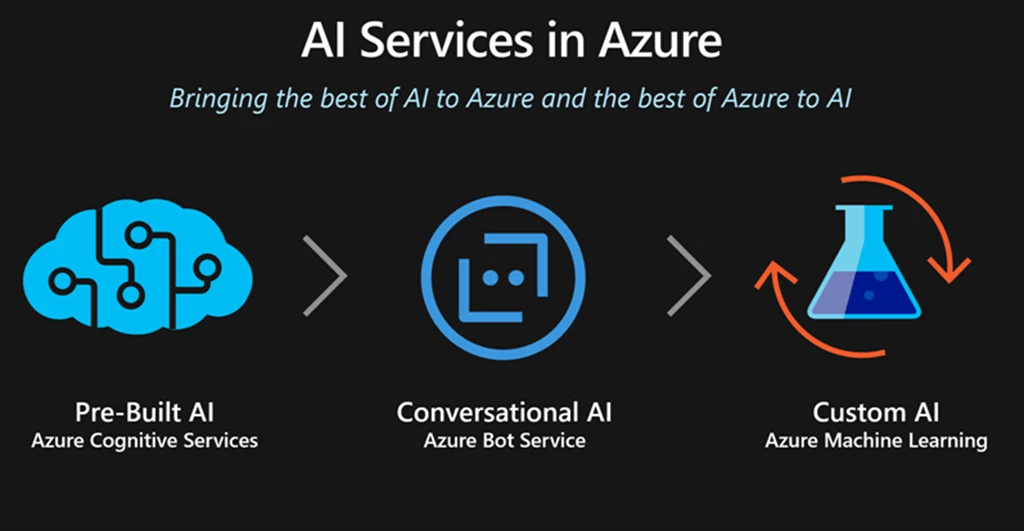

The Azure AI Platform consists of three major sets of capabilities:

1. AI Services (Figure 1): These span pre-built AI capabilities such as Azure Cognitive Services and Cognitive Search (Azure Search + integrated Cognitive Services), Conversational AI with Azure Bot Service, and custom AI development with Azure Machine Learning (AML).

Figure 1: AI Services in Azure

2. AI Tools & Frameworks: These span Visual Studio tools for AI, Azure Notebooks, Data Science VMs, Azure Machine Learning Studio and the AI Toolkit for Azure IoT Edge.

3. AI Infrastructure: These span Azure Data Services, compute services including Azure Kubernetes Service (AKS), and AI silicon support including GPUs and FPGAs.

Cognitive Services are cloud-hosted APIs that let developers easily add AI capabilities such as speech recognition, voice synthesis, vision, language understanding, translation, knowledge, and search. Today, we are unveiling several exciting new innovations in Cognitive Services:

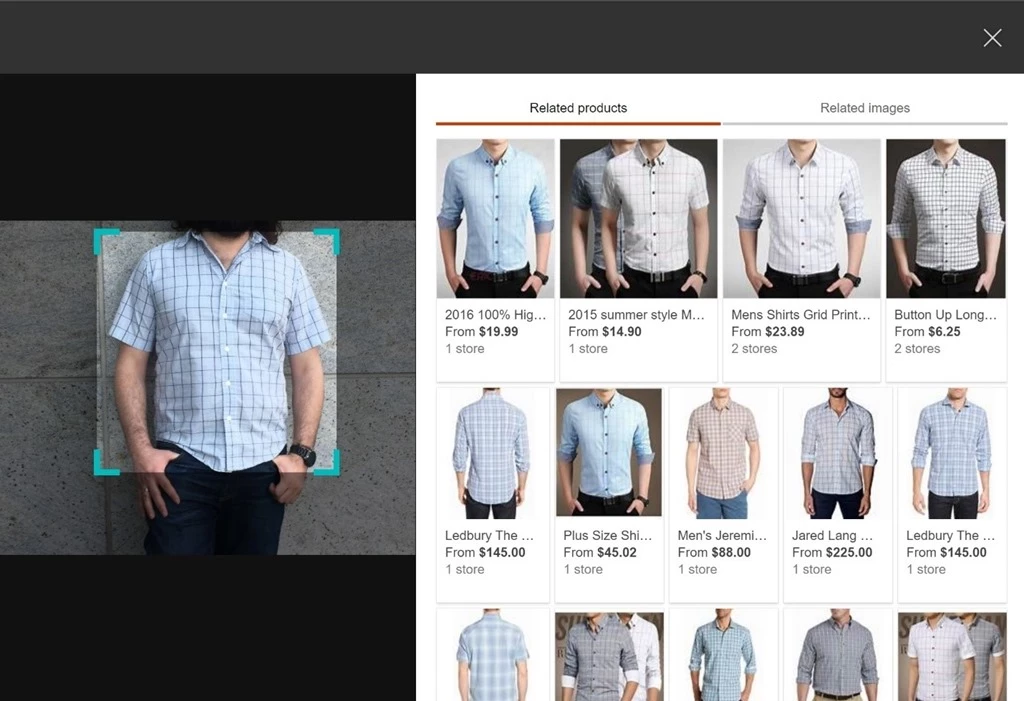

- Vision services are now updated with a vastly expanded object detection capability including the ability to customize object detection with your own data. The OCR capabilities are now significantly improved, and Bing Visual Search now provides an API to search with images as a query (Figure 2). We are also announcing the public preview of Video Indexer, a powerful capability to understand and index video streams.

- We are launching a unified Speech service, with new customizable features such as trainable custom speech recognition, custom voice synthesis, and a custom speech translator.

- We’re also adding edge AI capabilities with the ability to export Custom Vision models to support deployments on edge and mobile devices.

Figure 2: Similar image results with Bing Visual Search, powered by Bing

To date, more than a million developers have already discovered and tried Cognitive Services, including the major auto insurance provider Progressive, British Telecom, Box.com, KPMG, and Big Fish Games. To learn more and start building AI-infused apps right away, please visit the Cognitive Services site in Azure and our documentation pages.

Harnessing Search capabilities for every developer

Another key area of AI investment has been around Search – whether it be searching the world’s knowledge on the internet, or your own corporate data repositories. Now, through a simple set of Bing Search APIs, every developer can add the power to query the internet for web pages, video, images, news and much more. You can embed these APIs into any app with a few lines of code and help users find the right information from the knowledge of our planet in real time. For non-public data that you want to upload, index and search, Azure Search provides a managed, elastic, cloud search service.
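As a rough illustration of the "few lines of code" claim, here is a minimal Python sketch that builds a Bing Web Search request using only the standard library. The endpoint URL, the `Ocp-Apim-Subscription-Key` header and the `q`/`count` parameters are assumptions based on the v7 Web Search API; the function names are hypothetical, and you should confirm the exact endpoint and key for your own Azure resource.

```python
import json
import urllib.parse
import urllib.request

# Assumed v7 Web Search endpoint; check your Azure resource for the exact value.
BING_ENDPOINT = "https://api.cognitive.microsoft.com/bing/v7.0/search"


def build_search_request(query, subscription_key, count=5):
    """Build (but do not send) a GET request for a web search query."""
    params = urllib.parse.urlencode({"q": query, "count": count})
    return urllib.request.Request(
        f"{BING_ENDPOINT}?{params}",
        # Assumed header name used to pass the Cognitive Services key.
        headers={"Ocp-Apim-Subscription-Key": subscription_key},
    )


def search(query, subscription_key, count=5):
    """Send the request and return the parsed JSON response (needs network access)."""
    request = build_search_request(query, subscription_key, count)
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `search("azure ai", "<your-key>")` with a valid key should return a JSON document; in the v7 API the web results are expected under `webPages.value`, but treat that shape as an assumption and inspect the response you actually receive.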

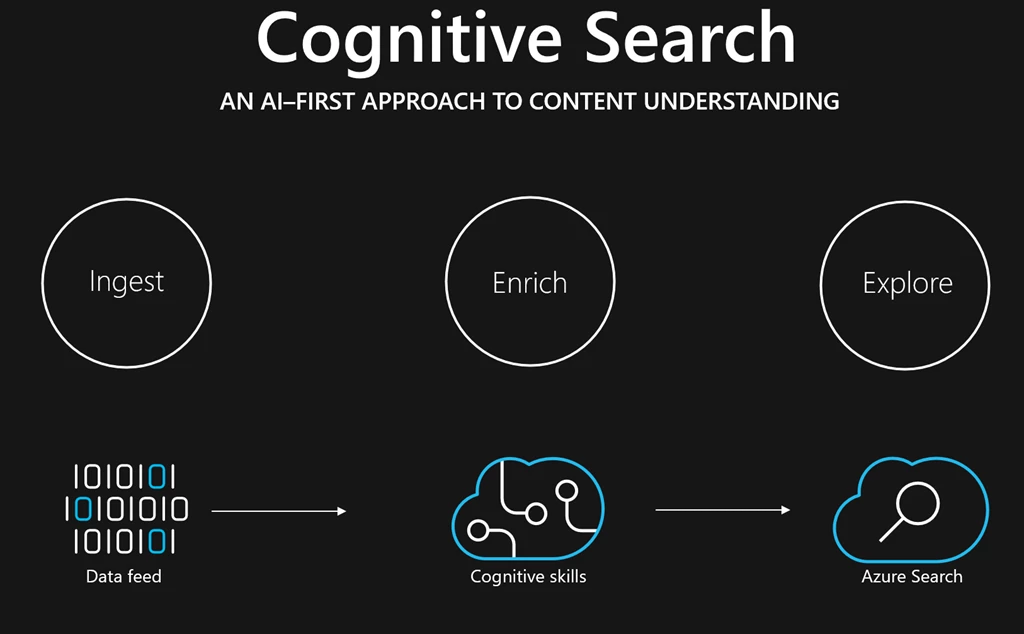

Today we are announcing a new capability called Cognitive Search (Figure 3), an AI-first approach to content understanding, tightly integrated with Azure Search. It is available in the Azure portal, where developers can go from zero to AI-enriched content in a search index in a few minutes. It supports built-in file readers (PDFs, Office formats, etc.) and cognitive skills for OCR (handwritten and printed text), entity recognition, key phrase extraction, language detection, image analysis returning tags, descriptions and faces, and more. It has built-in extensibility based on webhooks, enabling developers to add capabilities while staying within the Cognitive Search framework. It can pull data from a variety of Azure data sources, extract knowledge with cognitive skills, and then organize and store this knowledge in a search index, enabling new experiences for exploring the data.
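The pull, enrich and index flow described above can be pictured with a small, self-contained Python sketch. This is a conceptual toy and not the Cognitive Search API: the skill functions, the `KNOWN_ORGS` entity list and the in-memory index are all hypothetical stand-ins chosen only to show how enrichment output ends up as searchable terms.

```python
import re
from collections import defaultdict

# Toy entity list standing in for a real entity recognition skill (hypothetical).
KNOWN_ORGS = {"contoso hospital"}


def key_phrase_skill(doc):
    """Hypothetical skill: treat long words as key phrases."""
    words = re.findall(r"[a-z]+", doc["content"].lower())
    return {"key_phrases": sorted({w for w in words if len(w) > 6})}


def entity_skill(doc):
    """Hypothetical skill: look up known organization names in the text."""
    text = doc["content"].lower()
    return {"entities": sorted(e for e in KNOWN_ORGS if e in text)}


def enrich(doc, skills):
    """Run each skill in order and merge its output fields into the document."""
    enriched = dict(doc)
    for skill in skills:
        enriched.update(skill(enriched))
    return enriched


def build_index(docs, fields):
    """Build an inverted index mapping each enriched term to document ids."""
    index = defaultdict(set)
    for doc in docs:
        for field in fields:
            for term in doc.get(field, []):
                index[term].add(doc["id"])
    return index


docs = [{"id": "1", "content": "Contoso Hospital prescribes ibuprofen."}]
enriched_docs = [enrich(d, [key_phrase_skill, entity_skill]) for d in docs]
index = build_index(enriched_docs, ["key_phrases", "entities"])
```

In this sketch a custom capability is just another function appended to the skills list, which loosely mirrors the idea of extending the pipeline through webhooks while staying inside the same framework.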

Here are a few examples of how customers are leveraging Cognitive Search:

- Healthcare organizations apply cognitive search to intelligently index large volumes of text that include references to general entities (e.g. hospital names) and domain-specific ones (e.g. drug and disease names) that need to be connected and related.

- Financial Services apply cognitive search to provide their employees and customers with a global view of their information assets including scanned documents.

- Oil & Gas companies apply cognitive search to connect places, people (domain experts), events, and images, and to navigate all this information to make key decisions.

Figure 3: Cognitive Search, an AI-first approach to content understanding

To learn more and start building, head to the Azure portal and create an Azure Search service; you’ll see the “Cognitive Search” step in the Import Data flow. Check out the documentation to learn how to use more cognitive skills and how to extend the enrichment process with your own data. To explore a scenario where we applied Cognitive Search to a public dataset, check out the JFK Files demo in AI.lab. We published a live version of the JFK Files application and posted the code on GitHub in case you want to use it as a starting point for something that you want to build.

Conversational AI is heralding the next user interface wave in computing. We’ve evolved from a world of having to learn and adapt to computers, to one where computers can learn to understand and interact with us conversationally. These interactions start with language, speech, and semantic understanding, and are now extending to rich multi-modal interactions including gestures.

Today, we are announcing major updates to our Conversational AI tools, including updates to the Azure Bot Service, the public preview of Bot Builder SDK v4, general availability of QnAMaker, as well as the release of new experimental projects from Cognitive Services labs such as Project Gesture, Project Personality Chat and Project Conversation Learner. Personality Chat helps make intelligent agents more complete and conversational by handling small talk and reducing less palatable fallback responses. You can have your agent do small talk in the flavor of one of three personalities – Professional, Friendly, Humorous. Try out a demo at Personality Chat Cognitive Labs and learn about the response generation technology. A customizable editorial library of the 100 most common small talk scenarios is available to integrate with the Bot Builder SDK v4. This blog post provides more detail on all Conversational AI announcements and describes some of our newly updated services.

Updates to Azure Machine Learning (Azure ML)

Today we are also announcing a set of powerful new capabilities for Azure ML:

- Azure ML SDK for Python (Preview): This SDK gives data scientists a single control plane API to execute the key AML workflows of provisioning compute, model training, model deployment and scoring entirely in Python. It enables simple, seamless dispatch and management of jobs at scale on a serverless fabric that supports a variety of CPU/GPU configurations, with support for all Python-compatible frameworks, including TensorFlow, CNTK, Scikit-learn, and more. The SDK is fully integrated into the AML Experimentation service, giving you a single pane of glass for seeing all your team’s experiments, rapidly comparing them side by side, and choosing the models you want to deploy.

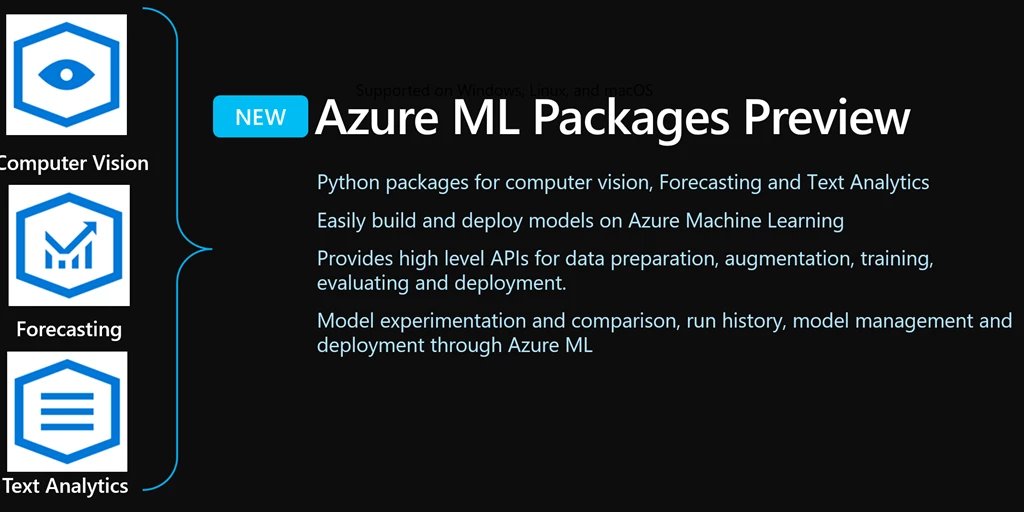

- Azure ML Packages (Figure 4) for Computer Vision, Financial Forecasting and Text Analytics (Preview). At Microsoft, our Data Science teams have been working for years on multiple data and customer scenarios. In the process, we have identified common approaches to solving problems that every organization is facing and developed specific packaged algorithms from these insights. The Azure ML Packages represent a rich set of Python pip-installable extensions to Azure ML that accelerate deep learning scenarios and enable data scientists to easily build, train, fine-tune and deploy highly accurate and efficient models. To learn more and get started visit the AML Packages Overview – Python Packages for Azure Machine Learning and AML Packages Python Reference API.

Figure 4: Azure ML Packages for Computer Vision, Financial Forecasting and Text Analytics

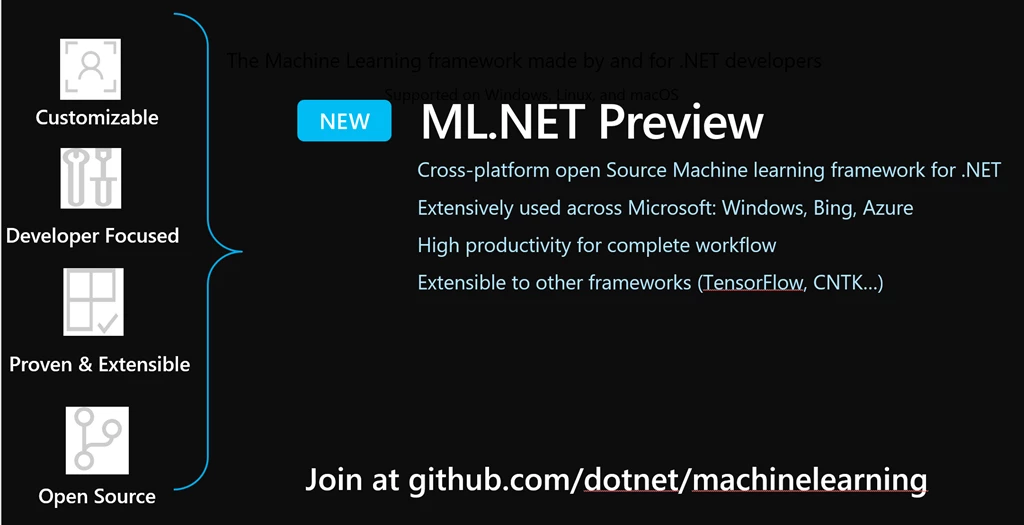

- ML.NET (Figure 5), now in preview, is a cross-platform open source ML framework for .NET developers. It is an enterprise-grade software library of the latest and best innovations in Machine Learning from Microsoft. Our teams across Bing, Office and Windows have been using ML.NET for years on multiple data and customer scenarios that span all stages of the data science pipeline, including data ingest, featurization, transformation, model training, AutoML and pipeline deployment. To learn more and get started, visit https://github.com/dotnet/machinelearning.

Figure 5: ML.NET, a cross-platform open source machine learning framework for .NET

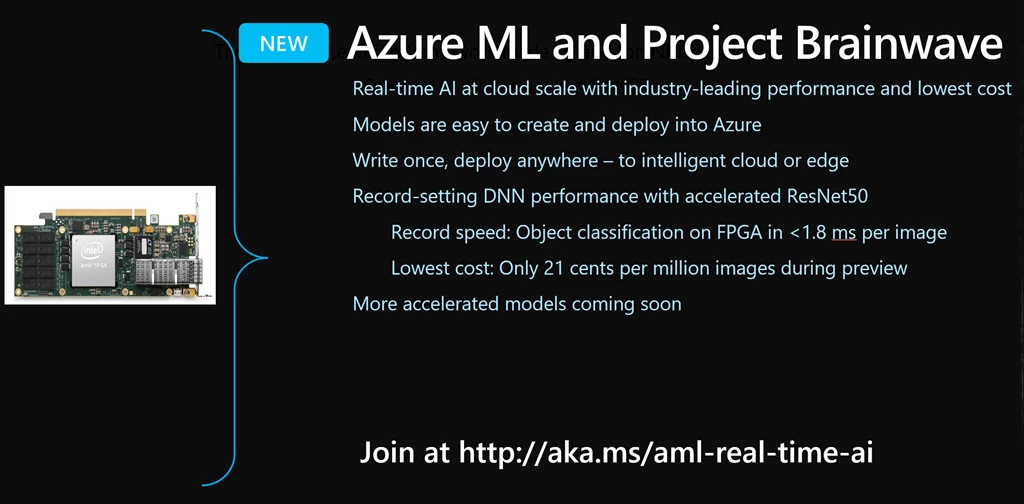

- Azure ML with Project Brainwave: We're announcing a preview of Project Brainwave (Figure 6) integrated with Azure ML. Brainwave is an architecture for deep neural net processing that enables super-fast AI with hardware acceleration. It initially supports ResNet50-based neural networks on Intel FPGA hardware for very low latency inferencing and transfer learning. Based on our internal performance metrics, Project Brainwave’s promise is the lowest latency and lowest cost for real-time AI in the industry, especially for single (batch size 1) inferences.

Figure 6: AML and Project Brainwave for Real-Time AI Inferencing at cloud scale

These announcements are part of our continuing mission to bring AI to every developer and every organization on the planet, and to help businesses augment human ingenuity in unique and differentiated ways. I invite you to visit www.azure.com/ai and the AI School to learn more about how AI can augment and empower your digital transformation efforts.

Joseph

@josephsirosh